1. Introduction

Image recognition (IR) is a computerised technology that allows machines to interpret and process visual data in order to identify objects. Machine learning (ML) is an important part of image recognition systems. It trains machines to use mathematical and statistical models, along with other technologies, to process, analyse and understand visual data in a way similar to a human. IR technology integrates artificial intelligence (AI), pattern recognition theory and image processing to analyse complex data and identify patterns in it. IR is used across industries for many purposes. These range from simple tasks such as finding the meta tags of an image to advanced use cases in self-driving cars, autonomous robots and accident-avoidance systems [1].

In this report, the technical advancement and applications of image recognition are explored in depth, using license plate recognition as an example. The aim is to understand IR-related technological advances and how they are applied in different industries. The in-depth discussion of license plate recognition illustrates a real-life use case of the technology. Image recognition is used extensively in automatic vehicle management, both on the road and elsewhere, and automated license plate recognition (LPR) is one such use case. It is used for tolling, detection of stolen vehicles, smart billing and ticketless parking in the EU, the US and other countries. Accuracy is an important performance parameter for LPR. The system must also perform recognition and data processing quickly and securely. The research therefore examines whether different ML-based techniques can make image recognition and data processing in LPR faster.

2. Literature Review

2.1. Definitions and Key Components

Traditionally, image recognition is a mechanism for detecting an object or a feature within an image and then assigning the image to specific classes, much as a human recognises objects in an image or a collection of images. In technical terms, image recognition is an intelligent, machine-driven process of identifying and detecting a feature or an object in a digital image. IR follows two broad approaches, based respectively on ML and on deep learning. In the ML approach, ML algorithms identify and extract key features from an image. The extracted information is then given as input to a separate ML model. In the deep learning approach, a convolutional neural network (CNN) learns the relevant features from sample images and then attempts to find the learned features in new images [2].
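The following is a minimal sketch of the two-stage ML approach in plain Python. It makes several illustrative assumptions. Images are small greyscale grids stored as nested lists, the hand-crafted feature is a normalised intensity histogram, and the second model is a nearest-centroid classifier. The function names are hypothetical and are not taken from [2]. In the deep learning approach, the hand-written `extract_features` step would instead be learned by the convolutional layers of a CNN.

```python
# Two-stage ML approach: hand-crafted features, then a separate classifier.
# Assumption: images are greyscale grids (lists of lists, values 0-255).

def extract_features(image, bins=4):
    """Stage 1: a normalised intensity histogram as a fixed-length feature vector."""
    pixels = [p for row in image for p in row]
    counts = [0] * bins
    for p in pixels:
        counts[min(p * bins // 256, bins - 1)] += 1
    return [c / len(pixels) for c in counts]


def train_centroids(samples):
    """Stage 2 (training): average the feature vectors of each labelled class."""
    sums, counts = {}, {}
    for image, label in samples:
        feats = extract_features(image)
        acc = sums.setdefault(label, [0.0] * len(feats))
        for i, f in enumerate(feats):
            acc[i] += f
        counts[label] = counts.get(label, 0) + 1
    return {lbl: [v / counts[lbl] for v in vec] for lbl, vec in sums.items()}


def classify(image, centroids):
    """Stage 2 (inference): return the class whose centroid is nearest (squared Euclidean)."""
    feats = extract_features(image)

    def dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(feats, centroid))

    return min(centroids, key=lambda lbl: dist(centroids[lbl]))
```

For example, training on one dark and one bright sample and then classifying a dark query returns `"night"`. The same structure holds however sophisticated the two stages become.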

2.2. Computer Vision and Image Recognition

From a machine's point of view, a digital image is essentially a collection of data: a set of pixel values. A machine does not interpret the image as a whole. Instead, it searches the pixel data for patterns and compares them with other patterns. If two patterns closely match, they are assigned to the same class [2]. The goal of IR is therefore to identify, label and classify the objects detected in images into different categories. Traditional computer vision tasks are used extensively in IR [3]. These tasks are presented in Table 1.

Table 1: Overview of imaging tasks in computer vision

| Task | Description |
|---|---|
| Image Classification | Assigning a label to an image and creating categories for it. |
| Object Localization | Identifying the location of an object in an image and enclosing it in a bounding box [3]. |
| Object Detection | Determining whether an object is present in an image, marking it with a bounding box and assigning it to a suitable class [3]. |
| Object Segmentation | Distinguishing the individual elements of an image by identifying and locating each one. Instead of a bounding box, it outlines the contour of each object [3]. |
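The outputs of localisation and segmentation are related in a simple way. A segmentation mask marks every pixel of the object, whereas a bounding box records only its extreme coordinates. A box can therefore always be derived from a mask, but a mask cannot be recovered from a box. The sketch below assumes a binary mask stored as nested lists (1 = object pixel). `mask_to_bbox` and `fill_ratio` are hypothetical helper names.

```python
def mask_to_bbox(mask):
    """Return (top, left, bottom, right), inclusive, of the foreground in a binary mask, or None."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None  # no object pixels at all
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1], cols[-1]


def fill_ratio(mask):
    """Fraction of the bounding box that is actually covered by object pixels."""
    box = mask_to_bbox(mask)
    if box is None:
        return 0.0
    top, left, bottom, right = box
    area = (bottom - top + 1) * (right - left + 1)
    return sum(map(sum, mask)) / area


mask = [[0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 0]]
print(mask_to_bbox(mask), fill_ratio(mask))  # (1, 1, 2, 2) 0.75
```

A fill ratio below 1 quantifies how much background a bounding box includes that a contour-level segmentation would exclude.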

2.3. Machine Learning and IR

Applying machine learning to IR involves a sequence of steps that together train a model to perform image recognition tasks. The steps are explained in Table 2.

Table 2: Steps to use Machine Learning in Image Recognition

| Step | Description |
|---|---|
| Data Collection | Collecting images from heterogeneous sources to build a well-constructed dataset. The dataset covers a diverse range of patterns, objects and scenes, from which the system learns to recognise those elements in other images. A comprehensive dataset contributes substantially to the IR system's ability to generalise across scenarios. Data accuracy and reliability need particular care at this stage, because irrelevant or inaccurate data reduces the capability of the IR system. |
| Data Preparation | Transforming and refining the raw data into a dataset suitable for training ML models. This involves removing inconsistencies, resizing images to a consistent size and normalising pixel values. Effective data preparation enables the IR system to learn meaningful patterns from the data. |
| Model Selection | Choosing a suitable ML model is a pivotal step. Learning paradigms include supervised, unsupervised and reinforcement learning. The architecture of the selected model determines how data is interpreted and processed. Different architectures suit IR tasks of different complexity, such as convolutional neural networks (CNN), recurrent neural networks (RNN) and transformer models. The choice must match the task requirements and the available computational resources. |
| Model Training | The model is trained on the training dataset according to the selected model and architecture. Training is an iterative optimisation that adjusts the model's parameters to minimise the difference between the actual and predicted outputs. It continues until the model reaches a target level of accuracy and generalisation. At that point, the model is considered able to recognise objects in images on its own. |
| Model Testing | The trained model is evaluated on a new, unseen dataset to measure its ability to generalise. Metrics such as precision, recall, accuracy and F1 score give insight into the model's strengths and limitations. Rigorous testing is needed to ensure the model is tuned to the performance that the requirements demand. |
| Model Deployment | Once testing shows satisfactory performance, the model is deployed. It is integrated with real-world applications to perform the task in real time within the underlying business process. After deployment, the model must be monitored continuously to ensure it keeps working accurately and adapts to new data patterns and objects. |
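The metrics named in the Model Testing step have standard definitions in terms of true positives (TP), false positives (FP) and false negatives (FN). Precision is TP / (TP + FP), recall is TP / (TP + FN), and F1 is the harmonic mean of the two. The sketch below computes them for a single positive class. The labels in the usage example are hypothetical. For multi-class problems, the same computation is usually repeated for each class and then averaged.

```python
def classification_metrics(y_true, y_pred, positive):
    """Precision, recall, accuracy and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    # Guard each ratio against an empty denominator.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = correct / len(pairs) if pairs else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "accuracy": accuracy, "f1": f1}


# Hypothetical test labels: does each image contain a license plate?
truth = ["plate", "plate", "plate", "none", "none"]
preds = ["plate", "plate", "none", "plate", "none"]
print(classification_metrics(truth, preds, positive="plate"))
```

Here TP = 2, FP = 1 and FN = 1. Precision, recall and F1 are therefore each 2/3, while accuracy is 3/5.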

2.4. Deep Learning and IR

Deep learning uses neural networks to solve image recognition (IR) problems. Neural networks improve the accuracy and reliability of IR systems and make it easier to recognise features in images automatically [5]. Convolutional neural networks (CNNs) and recurrent neural networks (RNNs) are widely used in IR to address practical problems.

A CNN is a type of deep learning model that automatically extracts and learns hierarchical features from image data. It uses a stack of layers that perform convolution, pooling and fully connected operations. The convolution layers apply a set of filters to the input image and detect local patterns and edges. The pooling layers then downsample the resulting feature maps, which reduces the computational load while retaining the important information [6]. Finally, the fully connected layers make decisions based on the learned features. CNNs are used for image classification, segmentation and object detection [7].

RNNs, by contrast, are designed for sequential data. Their internal memory lets them analyse sequences and extract temporal relationships from the input. In IR, they are used for image captioning. Conventional RNNs suffer from the vanishing gradient problem, which is mitigated by advanced variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) [8].
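The convolution and pooling stages described above can be illustrated with a hand-chosen filter. In this sketch, which is for illustration only, a 2x2 vertical-edge kernel responds where a dark region meets a bright one. ReLU then discards negative responses, and 2x2 max pooling halves each spatial dimension while keeping the strongest response. In a real CNN, the kernel values are learned during training rather than fixed. Frameworks such as PyTorch compute "convolution" as cross-correlation, and this sketch does the same. Plain Python keeps the example free of dependencies.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation with stride 1 (what CNN convolution layers compute)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(fmap):
    """Element-wise ReLU: keep positive responses, zero out the rest."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]


# A 5x5 image: dark on the left, bright on the right (a vertical edge).
image = [[0, 0, 9, 9, 9] for _ in range(5)]
edge_kernel = [[-1, 1], [-1, 1]]  # hand-picked; a CNN would learn its kernels
feature_map = relu(conv2d(image, edge_kernel))  # 4x4, strongest at the edge
print(max_pool(feature_map))  # [[18, 0], [18, 0]]
```

Pooling reduces the 4x4 feature map to 2x2 but still records that a strong edge exists in the left half of the image. This is the kind of information the later layers use.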

2.5. License Plate Recognition

LPR captures images or video of vehicle license plates, converts the optical data into digital image data and applies image recognition (IR) models to identify the plate. It operates in real time and can also collect data about the vehicle and its environment [4]. Typically, an LPR camera at a control point captures the plate images. Specialised OCR (optical character recognition) routines then read the characters from the digital image. Because license plate designs vary, the IR model needs a suitable training algorithm so that it learns to read the different plate designs in the training data. AI and ML algorithms improve LPR's precision and accuracy, and make it automated and faster [9].

2.6. Applications of LPR

LPR built on AI and IR goes by different names around the world. It is called ALPR (Automated License Plate Recognition) in the US and ANPR (Automated Number Plate Recognition) in the EU and Asian countries. ANPR systems are either fixed or mobile. Fixed systems are permanently installed at specific control points, such as border crossings, toll booths and strategic locations on traffic and vehicle routes [10]. Mobile systems, in contrast, are mounted on vehicles and scan the license plates of other vehicles nearby. Police, for example, use them at different locations to track down lost or stolen vehicles [11].

2.7. Challenges and Limitations of LPR

Accuracy is the main challenge for LPR. In difficult conditions such as fog, poor lighting, dirty or obscured plates and fast-moving vehicles, capturing clear plate images is hard. This increases the risk of errors in recognising patterns in the image data [12]. Installing LPR cameras and components requires a large initial outlay and skilled staff for oversight. There is also a risk that authorities or individuals misuse the technology for harmful purposes through access to its data [9]. An automated LPR process may need additional security to prevent damage or tampering, which adds maintenance and update costs. Because LPR collects and processes sensitive data, it raises data privacy concerns. The data may be used to track individuals without their consent, and stored data may be accessed without authorisation. In large-scale public deployments, these privacy and security issues lead to mistrust [4].

3. Concepts and technologies of license plate recognition

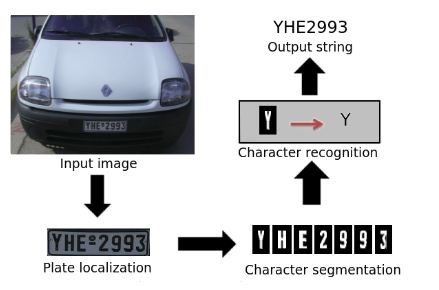

An LPR system has four main components: image acquisition, license plate positioning, character segmentation and character recognition (Figure 1).

Figure 1: LPR Process using Learning Algorithm and ML [13]

3.1. Image Acquisition and Pre-Processing

An LPR system runs in a dynamic environment. The image acquisition and pre-processing components together ensure that the images passed to the system are stable, appropriate and balanced under the working conditions. This stage consists of imaging units and a pre-processing unit. The imaging units comprise an OCR camera and a system that converts the optical image into digital data, and the pre-processing unit prepares that data. The stage may be accompanied by a sensor network that collects further information about the vehicle and the environment, and by additional lighting units. The sensor network detects environmental conditions and vehicle speed, and triggers the imaging and lighting units to capture an image. Once an image is captured, the optical data is converted into digital data and sent for pre-processing [13]. Pre-processing enhances the quality of the image data so that the necessary features, objects and patterns can be extracted for further analysis. There may be several pre-processing units, and the data passes through each of them in turn. Typical pre-processing tasks include converting colour images to greyscale, balancing colour differences and reducing image noise.
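The following is a minimal pre-processing sketch. It assumes RGB pixels stored as (r, g, b) tuples in nested lists. It covers three tasks mentioned in this report: greyscale conversion, noise reduction and normalisation of pixel values to the 0-1 range. Greyscale conversion uses the ITU-R BT.601 luma weights, which are one common convention. Noise reduction uses a 3x3 median filter, which is one of several possible choices. A production system would normally use an image library such as OpenCV or Pillow instead.

```python
def to_greyscale(rgb):
    """RGB -> greyscale using ITU-R BT.601 luma weights (one common convention)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row] for row in rgb]


def median_filter(img):
    """3x3 median filter for salt-and-pepper noise; border pixels are copied unchanged."""
    out = [row[:] for row in img]
    for i in range(1, len(img) - 1):
        for j in range(1, len(img[0]) - 1):
            window = sorted(img[i + a][j + b] for a in (-1, 0, 1) for b in (-1, 0, 1))
            out[i][j] = window[4]  # middle of the 9 sorted values
    return out


def normalise(img):
    """Scale 0-255 pixel values to the 0.0-1.0 range."""
    return [[p / 255 for p in row] for row in img]


# A bright noise speck in a uniform region is removed by the median filter.
noisy = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]
print(median_filter(noisy)[1][1])  # 10
```

A median filter is preferred to a mean filter for isolated specks because a single extreme value cannot shift the median of the window.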

3.2. License Plate Positioning

License plate positioning, also known as license plate localisation, is the second crucial component of an LPR system. Given the pre-processed image data, the system must determine whether the image was taken while the vehicle was moving and then pinpoint the location of the license plate in the image. Multiple images of the same vehicle are typically taken during image acquisition. The plate's location is not fixed from image to image, so it has to be found in each one. The system then keeps the plate-related data and discards the rest of the image. This is a complex process that must cope with vehicle speed, lighting, plate design, shape and size, shadows and so on. Accuracy is therefore a critical requirement for this component [14].

Morphological processing and edge processing are used in license plate positioning to extract the plate location effectively from larger images. Morphological processing probes the image with small templates called structuring elements. Mathematical operations examine the neighbourhood of pixels covered by the structuring element. In some cases, the edges of a plate are hard to detect because of its colour, the illumination or indistinct edges. Edge processing then helps to locate the edge boundaries accurately using histogram data [15].
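Morphological processing can be sketched with binary dilation and erosion. The example below is an illustration rather than the method of [15]. It applies a morphological closing (dilation followed by erosion) with a horizontal 1x3 structuring element. Closing bridges small gaps between character strokes, so the plate region becomes a connected block that is easier to localise. The sketch assumes a binarised image stored in nested lists, and it treats pixels outside the image as background.

```python
def _morph(img, se, combine):
    """Apply `combine` (any -> dilation, all -> erosion) over the structuring element `se`."""
    h, w = len(img), len(img[0])
    sh, sw = len(se), len(se[0])
    ch, cw = sh // 2, sw // 2  # origin at the centre of the structuring element
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            vals = []
            for a in range(sh):
                for b in range(sw):
                    if se[a][b]:
                        y, x = i + a - ch, j + b - cw
                        # Assumption: outside the image counts as background (0).
                        vals.append(img[y][x] if 0 <= y < h and 0 <= x < w else 0)
            out[i][j] = int(combine(vals))
    return out


def dilate(img, se):
    """Binary dilation: a pixel is set if any covered neighbour is set."""
    return _morph(img, se, any)


def erode(img, se):
    """Binary erosion: a pixel stays set only if all covered neighbours are set."""
    return _morph(img, se, all)


def close(img, se):
    """Morphological closing: dilation then erosion, which fills small gaps."""
    return erode(dilate(img, se), se)


# Two strokes separated by a one-pixel gap merge into one region after closing.
img = [[0] * 7, [0, 1, 1, 0, 1, 1, 0], [0] * 7]
print(close(img, [[1, 1, 1]])[1])  # [0, 1, 1, 1, 1, 1, 0]
```

The width of the structuring element controls how large a gap is bridged. A wider element merges more widely spaced characters, but it also risks merging the plate with nearby clutter.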

3.3. Character Segmentation

Once the license plate has been located accurately, the LPR system segments the plate into its individual characters. Dedicated algorithms identify the regions of the plate that contain valid characters [16].
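One simple way to find character zones is a vertical projection profile. The method counts the foreground pixels in each column of the binarised plate and splits the plate wherever a column is empty. The sketch below assumes a clean binary plate (1 = character ink) in which characters do not touch. It is a stand-in for illustration, not one of the algorithms benchmarked in [16], which must also handle touching, broken or slanted characters.

```python
def segment_columns(binary_plate, min_width=1):
    """Split a binarised plate into per-character column ranges.

    Columns whose vertical projection (ink count) is zero are treated as gaps.
    Returns a list of (start, end) column indices, with `end` exclusive.
    Runs narrower than `min_width` are discarded as noise.
    """
    width = len(binary_plate[0])
    profile = [sum(row[c] for row in binary_plate) for c in range(width)]
    segments, start = [], None
    for c, ink in enumerate(profile):
        if ink and start is None:
            start = c  # a character begins
        elif not ink and start is not None:
            if c - start >= min_width:
                segments.append((start, c))
            start = None  # back in a gap
    if start is not None and width - start >= min_width:
        segments.append((start, width))  # character touching the right border
    return segments


plate = [[1, 1, 0, 1, 0, 0, 1],
         [1, 0, 0, 1, 0, 0, 1]]
spans = segment_columns(plate)
print(spans)  # [(0, 2), (3, 4), (6, 7)]
characters = [[row[s:e] for row in plate] for s, e in spans]  # cropped glyphs
```

The cropped glyphs are what the recognition stage receives.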

3.4. Character Recognition

The final component performs character recognition. Once the individual character segments have been extracted, image recognition techniques are applied to recognise each character and so read the vehicle's complete license number. The goal is to identify every character on the plate accurately and unambiguously. Techniques such as Regional Syntactical Correction are used to verify the identified characters and resolve any confusion [4].
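The report does not spell out the rules of Regional Syntactical Correction. The sketch below therefore only illustrates the general idea of syntax-based correction. It also includes a simple template matcher, which stands in for a trained character classifier. The plate format `PLATE_FORMAT` (three letters followed by four digits) and the digit/letter confusion table are hypothetical. Real formats differ by region, and a deployed recogniser would typically be a CNN rather than pixel-wise template matching.

```python
# Hypothetical plate format: "L" = letter slot, "D" = digit slot.
PLATE_FORMAT = "LLLDDDD"

# Hypothetical table of visually confusable digit/letter pairs (not exhaustive).
TO_LETTER = {"0": "O", "1": "I", "2": "Z", "5": "S", "8": "B"}
TO_DIGIT = {letter: digit for digit, letter in TO_LETTER.items()}


def match_template(glyph, templates):
    """Return the label whose binary template differs from `glyph` in the fewest pixels."""
    def mismatches(template):
        return sum(g != t for grow, trow in zip(glyph, template) for g, t in zip(grow, trow))
    return min(templates, key=lambda label: mismatches(templates[label]))


def syntactic_correction(text, fmt=PLATE_FORMAT):
    """Swap characters that violate the expected letter/digit position."""
    if len(text) != len(fmt):
        return text  # rule cannot be applied; leave the reading unchanged
    fixed = []
    for ch, slot in zip(text, fmt):
        if slot == "L" and ch.isdigit():
            ch = TO_LETTER.get(ch, ch)
        elif slot == "D" and ch.isalpha():
            ch = TO_DIGIT.get(ch, ch)
        fixed.append(ch)
    return "".join(fixed)


templates = {"1": [[0, 1, 0], [0, 1, 0], [0, 1, 0]],
             "7": [[1, 1, 1], [0, 0, 1], [0, 0, 1]]}
print(match_template([[0, 1, 0], [0, 1, 0], [0, 1, 1]], templates))  # 1
print(syntactic_correction("A8C1234"))  # ABC1234
```

The correction step only resolves ambiguities that the format makes decidable. An "8" in a letter slot can only be a "B", whereas a "B" read in a letter slot is left alone.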

4. System implementation and cutting-edge experimental data analysis

An LPR system can be implemented in Python with various libraries. PyTorch, a machine learning library for Python, is well suited to computer vision and natural language processing applications [17]. The training dataset should contain images of different vehicles in different environments, with license plates of different shapes and sizes. This variation helps the ML model learn the characters and produce more accurate results. An evaluation dataset with specified evaluation metrics is also needed. The evaluation must be practical and aligned with the system's objectives so that the accuracy of the PyTorch model can be measured reliably. The implemented system should first be tested on license plate detection. In this test, it locates the license plates in the images and segments the characters on each plate. A second test then covers license plate recognition. Here, the segmented characters are recognised by comparing them with the learned characters. Once every character has been identified, the system assembles the plate number. Accuracy testing should take into account noise, tilt, edges, distortion, weather conditions, vehicle speed and illumination when measuring the performance of the implemented system.
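The evaluation described above can be organised by capture condition, so that accuracy under noise, tilt, fog or poor illumination is reported separately. The report does not fix the metrics, so this sketch assumes two common ones. The first is plate-level exact-match accuracy. The second is character error rate, defined as Levenshtein edits divided by the number of ground-truth characters. The sketch is written in plain Python so that it is independent of how the PyTorch model produces its predictions. The condition labels and plate strings in the usage example are hypothetical.

```python
def edit_distance(a, b):
    """Levenshtein distance: minimum insertions, deletions and substitutions from a to b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,               # deletion
                           cur[j - 1] + 1,            # insertion
                           prev[j - 1] + (ca != cb))) # substitution (or match)
        prev = cur
    return prev[-1]


def evaluate_by_condition(records):
    """records: iterable of (condition, ground_truth, prediction) tuples.

    Returns {condition: {"plate_accuracy": ..., "char_error_rate": ...}}.
    """
    stats = {}
    for cond, truth, pred in records:
        s = stats.setdefault(cond, {"n": 0, "exact": 0, "edits": 0, "chars": 0})
        s["n"] += 1
        s["exact"] += truth == pred
        s["edits"] += edit_distance(truth, pred)
        s["chars"] += len(truth)
    return {cond: {"plate_accuracy": s["exact"] / s["n"],
                   "char_error_rate": s["edits"] / s["chars"]}
            for cond, s in stats.items()}


results = evaluate_by_condition([
    ("clear", "ABC1234", "ABC1234"),
    ("clear", "XYZ9876", "XYZ9876"),
    ("fog", "ABC1234", "A8C1234"),  # one substitution
    ("fog", "XYZ9876", "XYZ987"),   # one missed character
])
print(results["fog"])  # plate_accuracy 0.0, char_error_rate 2/14
```

Reporting both metrics matters. In this example, plate-level accuracy in fog is zero, yet the character error rate shows that most characters were still read correctly.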

5. Conclusion

This research has traced how image recognition has advanced through ML, deep learning and supporting technologies such as sensor networks and Python. When used for LPR, image recognition requires data-intensive, real-time processing. LPR is becoming popular in automated traffic management, vehicle identification, law enforcement and smart cities, and image recognition is central to it. However, implementing LPR at a large scale in the public domain raises challenges related to accuracy and data privacy. LPR has been implemented in different ways across the world. Yet it still faces dilemmas due to its costs, the risk of privacy breaches for individuals and the chance of inaccurate results. This research can serve as a basis for future work on an optimal solution that balances security and performance while focusing on accuracy.

Future work will focus on how PyTorch can be used to improve the performance of the proposed LPR solution with deep learning algorithms. It will also examine how such a system can be protected against security and privacy breaches. In particular, it will explore the role of a cascade classifier in strengthening license plate localisation. Building the cascade classifier from different features should help increase accuracy without slowing the system down. Because LPR must work in real time, speed and security are the two challenging aspects. Future work will explore different implementation options to find an optimal balance between them.
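A cascade classifier keeps its speed through early rejection. Cheap stages run first and discard most background windows, so the expensive stages only run on the few candidates that survive. The sketch below shows that control flow with two hypothetical hand-written stages and hand-picked thresholds. The first stage checks contrast. The second counts strong intensity jumps between horizontal neighbours, which character strokes produce. Trained cascades, such as those loaded by OpenCV's `CascadeClassifier`, learn their stages and thresholds from data instead.

```python
def contrast(window):
    """Cheap stage: intensity range of the window."""
    pixels = [p for row in window for p in row]
    return max(pixels) - min(pixels)


def vertical_edges(window, step=50):
    """Costlier stage: count horizontal neighbours whose intensity jumps by >= `step`."""
    return sum(abs(row[j + 1] - row[j]) >= step
               for row in window for j in range(len(row) - 1))


# Hypothetical stages and thresholds, ordered from cheapest to most expensive.
STAGES = [(contrast, 100), (vertical_edges, 4)]


def cascade_detect(window, stages):
    """Run stages in order and reject as soon as a score falls below its threshold.

    Returns (accepted, number_of_stages_evaluated).
    """
    for i, (score_fn, threshold) in enumerate(stages, 1):
        if score_fn(window) < threshold:
            return False, i  # early rejection: later stages never run
    return True, len(stages)


flat = [[120] * 6 for _ in range(3)]                  # plain background
stripes = [[0, 255, 0, 255, 0, 255] for _ in range(3)]  # stroke-like texture
print(cascade_detect(flat, STAGES))     # (False, 1)
print(cascade_detect(stripes, STAGES))  # (True, 2)
```

Because most windows in a street scene resemble `flat`, the average cost per window stays close to the cost of the first stage. Adding more discriminative later stages can therefore raise accuracy without a proportional loss of speed.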

References

[1]. L. Chen, S. Li, Q. Bai, J. Yang, S. Jiang, and Y. Miao, "Review of image classification algorithms based on convolutional neural networks," Remote Sensing, vol. 13, p. 4712, 2021.

[2]. P. Wang, E. Fan, and P. Wang, "Comparative analysis of image classification algorithms based on traditional machine learning and deep learning," Pattern Recognition Letters, vol. 141, pp. 61-67, 2021.

[3]. W. Rawat and Z. Wang, "Deep convolutional neural networks for image classification: A comprehensive review," Neural Computation, vol. 29, pp. 2352-2449, 2017.

[4]. S. M. Silva and C. R. Jung, "License plate detection and recognition in unconstrained scenarios," in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 580-596.

[5]. M. Pak and S. Kim, "A review of deep learning in image recognition," in 2017 4th International Conference on Computer Applications and Information Processing Technology (CAIPT), 2017, pp. 1-3.

[6]. B. B. Traore, B. Kamsu-Foguem, and F. Tangara, "Deep convolution neural network for image recognition," Ecological Informatics, vol. 48, pp. 257-268, 2018.

[7]. R. Chauhan, K. K. Ghanshala, and R. Joshi, "Convolutional neural network (CNN) for image detection and recognition," in 2018 First International Conference on Secure Cyber Computing and Communication (ICSCCC), 2018, pp. 278-282.

[8]. L. Mou, P. Ghamisi, and X. X. Zhu, "Deep recurrent neural networks for hyperspectral image classification," IEEE Transactions on Geoscience and Remote Sensing, vol. 55, pp. 3639-3655, 2017.

[9]. L. Xie, T. Ahmad, L. Jin, Y. Liu, and S. Zhang, "A new CNN-based method for multi-directional car license plate detection," IEEE Transactions on Intelligent Transportation Systems, vol. 19, pp. 507-517, 2018.

[10]. S. Hadavi, H. B. Rai, S. Verlinde, H. Huang, C. Macharis, and T. Guns, "Analyzing passenger and freight vehicle movements from automatic-Number plate recognition camera data," European Transport Research Review, vol. 12, pp. 1-17, 2020.

[11]. G. R. Gonçalves, M. A. Diniz, R. Laroca, D. Menotti, and W. R. Schwartz, "Real-time automatic license plate recognition through deep multi-task networks," in 2018 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), 2018, pp. 110-117.

[12]. R. Laroca, E. Severo, L. A. Zanlorensi, L. S. Oliveira, G. R. Gonçalves, W. R. Schwartz, et al., "A robust real-time automatic license plate recognition based on the YOLO detector," in 2018 International Joint Conference on Neural Networks (IJCNN), 2018, pp. 1-10.

[13]. P. Afeefa and P. P. Thulasidharan, "Automatic License Plate Recognition (ALPR) using HTM cortical learning algorithm," in 2017 International Conference on Intelligent Computing and Control (I2C2), 2017, pp. 1-4.

[14]. J. Shashirangana, H. Padmasiri, D. Meedeniya, and C. Perera, "Automated license plate recognition: a survey on methods and techniques," IEEE Access, vol. 9, pp. 11203-11225, 2020.

[15]. Y. Yuan, W. Zou, Y. Zhao, X. Wang, X. Hu, and N. Komodakis, "A robust and efficient approach to license plate detection," IEEE Transactions on Image Processing, vol. 26, pp. 1102-1114, 2016.

[16]. G. R. Gonçalves, S. P. G. da Silva, D. Menotti, and W. R. Schwartz, "Benchmark for license plate character segmentation," Journal of Electronic Imaging, vol. 25, p. 053034, 2016.

[17]. G. S. More and P. Bartakke, "Real-Time Implementation of Automatic License Plate Recognition System," in International Conference on Advances and Applications of Artificial Intelligence and Machine Learning, 2022, pp. 585-597.

Cite this article

Qin, S. (2025). Technological Development and Application of Image Recognition - License Plate Recognition. Applied and Computational Engineering, 121, 109-115.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 5th International Conference on Signal Processing and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (see Open access policy for details).
