1. Introduction

Over the past decade, computer-based music analysis has matured into a robust field known as Music Information Retrieval (MIR) [1]. MIR bridges audio signal processing, musicology, and machine learning to analyze large digital music collections [1, 2]. Early MIR work in the 2000s focused on tasks like genre classification, similarity search, and melodic query retrieval; more recently, the scope has expanded to include mood detection and music recommendation [1, 2]. Central to MIR is audio feature extraction – computing descriptors of music audio (timbre, rhythm, melody) that enable statistical pattern recognition [2, 3]. Handcrafted features have been widely used in classic approaches [2], while the last decade has seen deep learning models learn feature representations directly from audio, achieving state-of-the-art performance in many MIR tasks [4]. The proliferation of open-source software (often in Python) has further spurred MIR development by making it easier to extract features and analyze large music datasets [5]. This mature MIR toolkit provides a foundation to quantitatively analyze niche genres such as J-Core.

J-Core (Japanese hardcore techno) is an underground electronic genre that fuses the fast, aggressive rhythms of hardcore techno with elements of Japanese pop culture [6, 7]. Hardcore techno first emerged in the late-1980s European rave scene (e.g., Rotterdam’s gabber) and is characterized by extreme tempos and distorted kick drums [8]. Japanese DJs began experimenting with hardcore in the 1990s; for example, the unit DJ Sharpnel pioneered high-speed tracks spliced with anime and video game samples [7]. By the early 2000s, a distinctly Japanese variant had coalesced, shaped by local otaku (fan) culture [7, 9]. The term “J-Core” (short for Japanese hardcore) came into use around the mid-2000s to label this scene [6, 9]. J-Core tracks often feature high-pitched anime voice clips, chiptune-like melodies, and “kawaii” (cute) imagery, which set them apart from Western hardcore styles [7]. A notable aspect of J-Core’s evolution is its close tie to Japan’s dōjin (self-published fan) music scene. Rather than releasing through mainstream labels, J-Core producers typically distribute music via independent compilations sold at fan conventions or shared through online forums [7]. Major fan events such as Comiket and the M3 festival serve as key hubs for circulating J-Core CDs directly to listeners [7]. This dōjin network, part of Japan’s broader DIY music culture, has enabled niche genres like J-Core to flourish outside commercial channels [7, 10]. Despite a devoted following in the fan community, J-Core remains largely absent from academic literature; most information comes from scene reports and fan-curated media [6, 7]. This lack of scholarly attention underscores the need for a data-driven study of J-Core music.

Given the scarcity of formal analyses of J-Core, a systematic feature study of this genre is timely. This work aims to quantitatively characterize J-Core music using MIR techniques and Python-based statistical analysis. By extracting audio features from a random sample of J-Core tracks, the study identifies musical characteristics that define the genre. Such analysis not only illuminates the distinct musical traits of J-Core but also demonstrates how MIR methods can be applied to an understudied, fan-driven genre.

2. Methodology

2.1. Data

The purpose of this study is to systematically quantify the rhythmic, timbral, harmonic and structural characteristics of Japanese hardcore (J-Core) music in order to reveal its stylistic commonalities and differences. The experimental process can be divided into three parts: data collection and description, signal preprocessing and feature extraction, and statistical evaluation and result analysis.

First, this study used Python and the SoundCloud API to search the SoundCloud platform for tracks carrying the tags “Jcore”, “J-core”, and “J core”. The search returned 3000 matching tracks in total, from which 30 tracks were selected at random using Python's random.sample function. The .wav files of these 30 tracks were then downloaded.
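The sampling step can be sketched with Python's standard library. This is a minimal illustration rather than the study's code: it assumes the three tag searches have already returned lists of track IDs (the IDs below are hypothetical, and no SoundCloud API call is shown). Because one track can carry several of the tags, the results are pooled and de-duplicated before sampling, and a fixed seed makes the draw reproducible.

```python
import random


def pool_and_sample(results_by_tag, k=30, seed=0):
    """Merge per-tag search results, drop duplicate IDs, and draw k tracks without replacement."""
    pooled = set()
    for ids in results_by_tag.values():
        pooled.update(ids)
    # Sort so the draw depends only on the seed, not on set iteration order.
    candidates = sorted(pooled)
    rng = random.Random(seed)
    return rng.sample(candidates, k)


# Hypothetical track IDs; tracks 3, 4 and 7 appear under more than one tag.
results = {
    "Jcore": [1, 2, 3, 4, 5],
    "J-core": [3, 4, 6, 7],
    "J core": [7, 8, 9, 10],
}
chosen = pool_and_sample(results, k=5, seed=42)
print(chosen)
```

With real search results, the same call with k=30 would draw the study-sized sample.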

2.2. Processing

First, this study used Python's Librosa library to resample all tracks to 44.1 kHz, which prevents the sample rate from becoming a confounding variable. Since every track was found to have a native sample rate greater than 44.1 kHz, this step only downsampled audio and did not unfairly affect any track. The 44.1 kHz rate is also the standard CD sample rate: its Nyquist frequency of 22.05 kHz lies above the roughly 20 kHz upper limit of human hearing, so it preserves the audible frequency range without introducing noticeable distortion [11].
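The check that every file was above 44.1 kHz (so that resampling only ever downsampled) can be done before processing. The sketch below is an illustration under stated assumptions: it reads WAV headers with Python's built-in wave module, which handles integer PCM files but may reject other WAV variants, and it creates a tiny synthetic 48 kHz file in place of real downloads. The helper names are hypothetical. In librosa itself, passing sr=44100 to librosa.load resamples each file to that rate on load.

```python
import os
import tempfile
import wave

TARGET_SR = 44100  # CD sample rate used in the study


def native_sample_rate(path):
    """Read the sample rate from a WAV header (the stdlib `wave` module reads integer PCM)."""
    with wave.open(path, "rb") as wf:
        return wf.getframerate()


def files_needing_upsampling(paths, target_sr=TARGET_SR):
    """List files whose native rate is below target_sr; resampling these would upsample them."""
    return [p for p in paths if native_sample_rate(p) < target_sr]


# Nyquist limit: the highest frequency representable at the target rate.
nyquist_hz = TARGET_SR / 2  # 22050.0 Hz, just above the ~20 kHz limit of human hearing

# Demo with a tiny synthetic 48 kHz stereo file (10 frames of silence).
demo_path = os.path.join(tempfile.mkdtemp(), "demo.wav")
with wave.open(demo_path, "wb") as wf:
    wf.setnchannels(2)
    wf.setsampwidth(2)  # 16-bit samples
    wf.setframerate(48000)
    wf.writeframes(b"\x00\x00" * 2 * 10)  # 2 bytes x 2 channels x 10 frames

print(native_sample_rate(demo_path), files_needing_upsampling([demo_path]))
```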

Subsequently, stereo tracks were downmixed to mono using Python's Librosa library so that the frequency-domain features computed later are comparable across tracks. This step also prevents differences between the left and right channels (for example, panning or stereo-width effects) from acting as confounding variables.
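Downmixing to mono is usually done by averaging the channels, which is how librosa.to_mono is documented to work. A minimal NumPy equivalent, assuming librosa's (channels, samples) array layout, is sketched below with toy values; the function name is illustrative. Note the last sample: content that is out of phase between the two channels cancels in the mix, one concrete way in which stereo production choices can alter mono features.

```python
import numpy as np


def to_mono(y):
    """Average the channels of a (channels, samples) array; 1-D input is returned unchanged."""
    y = np.asarray(y, dtype=np.float64)
    if y.ndim == 1:
        return y
    return y.mean(axis=0)


stereo = np.array([[0.2, 0.4, -0.6],   # left channel
                   [0.0, 0.4, 0.6]])   # right channel (last sample is out of phase with left)
mono = to_mono(stereo)
print(mono)
```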

2.3. Evaluations

In this study, the average BPM, the most common chord, the average spectral centroid, and MFCCs 1–13 were selected to quantify J-Core in terms of its rhythmic, harmonic, and timbral dimensions [12]. The features computed per track are: average tempo (BPM), a proxy for rhythmic speed; most common chord, representing the prevalent harmony; average spectral centroid, indicating the “brightness” of the sound (higher values mean more high-frequency content); and Mel-Frequency Cepstral Coefficients (MFCCs 1–13), a 13-dimensional vector summarizing the short-term spectral shape (timbre) of the audio. These features were chosen because prior research in Music Information Retrieval (MIR) links them to musical style and perception.
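The average spectral centroid can be sketched in NumPy as follows. It uses the usual definition, a magnitude-weighted mean of the FFT bin frequencies in each frame, then averages over frames to give one value per track. The frame size (2048) and hop (512) are assumptions, since the text does not give the analysis parameters. librosa.feature.spectral_centroid computes the same quantity from a magnitude spectrogram but by default pads the signal so that frames are centred, so its values can differ slightly near the edges.

```python
import numpy as np


def spectral_centroid(y, sr, n_fft=2048, hop_length=512):
    """Per-frame spectral centroid in Hz, from a Hann-windowed magnitude spectrum."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(y) - n_fft) // hop_length
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)  # bin centre frequencies in Hz
    centroids = np.empty(n_frames)
    for i in range(n_frames):
        frame = y[i * hop_length: i * hop_length + n_fft] * window
        mag = np.abs(np.fft.rfft(frame))  # magnitude (not power) spectrum
        total = mag.sum()
        centroids[i] = (freqs * mag).sum() / total if total > 0 else 0.0
    return centroids


sr = 44100
f0 = 100 * sr / 2048                        # a test tone centred exactly on FFT bin 100
times = np.arange(sr) / sr                  # one second of audio
tone = np.sin(2 * np.pi * f0 * times)
track_mean = spectral_centroid(tone, sr).mean()  # the per-track "average spectral centroid"
print(round(f0, 1), round(track_mean, 1))
```

For a pure tone the centroid sits at the tone's frequency; richer, brighter spectra pull it upward.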

Specifically, the average BPM, as the core rhythmic indicator of J-Core music, can distinguish between traditional Happy Hardcore (160–190 BPM) and Emotional Hardcore (120–140 BPM) subgenres [1]. The most common chord reflects the tonal preference of a piece (major for a bright atmosphere, minor for a somber mood), providing a key marker for stylistic categorization [13]. The mean spectral centroid measures the position of the spectral “center of gravity”; high values imply an abundance of high-frequency components, which allows a quantitative comparison between sharp, bright synthesizer sounds and bass-heavy, low-frequency ones [14]. MFCCs 1–13 are computed on the mel scale, which approximates the frequency resolution of human hearing; the first few coefficients (1–3) reflect overall loudness and spectral slope, while the subsequent ones (4–13) capture finer timbral texture useful for style clustering and automatic classification [15]. This combination of features is straightforward to compute and reproduce, and it provides empirical support for characterizing J-Core's distinctive emotional and orchestration traits.
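The text does not specify how the most common chord was detected. One common baseline, described for example in Müller [3], matches each chroma frame against binary major and minor triad templates and keeps the best match; the sketch below assumes that approach and then takes the most frequent frame label as the track's chord. In practice the 12 × T chroma matrix could come from a function such as librosa.feature.chroma_cqt; here a hypothetical toy matrix stands in for it, and the "Root:maj"/"Root:min" label format is an illustrative choice.

```python
from collections import Counter

import numpy as np

PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def triad_templates():
    """Unit-norm 12-bin templates for the 12 major and 12 minor triads."""
    labels, rows = [], []
    for root in range(12):
        for quality, third in (("maj", 4), ("min", 3)):
            t = np.zeros(12)
            t[[root, (root + third) % 12, (root + 7) % 12]] = 1.0
            labels.append(f"{PITCH_CLASSES[root]}:{quality}")
            rows.append(t / np.linalg.norm(t))
    return labels, np.array(rows)  # shape (24, 12)


def most_common_chord(chroma):
    """Label each chroma frame (12 x T) with its best-matching triad; return the modal label."""
    labels, templates = triad_templates()
    norms = np.linalg.norm(chroma, axis=0)
    norms[norms == 0] = 1.0  # avoid dividing silent frames by zero
    scores = templates @ (chroma / norms)  # cosine similarity, shape (24, T)
    frame_labels = [labels[i] for i in scores.argmax(axis=0)]
    return Counter(frame_labels).most_common(1)[0][0]


# Toy chroma: 6 frames of A minor (A, C, E) followed by 4 frames of F major (F, A, C).
chroma = np.zeros((12, 10))
chroma[[9, 0, 4], :6] = 1.0
chroma[[5, 9, 0], 6:] = 1.0
print(most_common_chord(chroma))
```

Reducing a track to its single most frequent frame label is exactly the simplification discussed in the limitations section: it discards the progression itself.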

3. Results and discussion

3.1. Data description

All audio was processed using librosa (Python) to extract quantitative features characterizing tempo, harmony, and timbre. Average tempo is strongly associated with genre conventions [16] and affects perceived energy and arousal [17]. Similarly, chord progressions reflect the harmonic language distinctive to genres or eras [18], and timbral descriptors such as spectral centroid and MFCCs capture the sonic texture that often defines a genre [19]. Such features have been widely used in automatic music classification tasks [20], lending credence to their relevance here. Each track’s audio was normalized in amplitude and analyzed in full to obtain a robust average of each feature over the song’s duration. The aggregate dataset thus provides a quantitative profile of J-Core’s stylistic attributes, forming the basis for the statistical results and interpretations that follow.
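The text says each track was normalized in amplitude but does not state the scheme. Two common options are sketched below as assumptions: peak normalization (what librosa.util.normalize does by default, scaling by the maximum absolute value) and RMS normalization to a hypothetical target level. Either way, a pure gain change leaves the spectral centroid unchanged, because the centroid is a ratio of magnitude-weighted sums, whereas it shifts MFCC1, which tracks overall log energy.

```python
import numpy as np


def peak_normalize(y, target_peak=1.0, eps=1e-12):
    """Scale a signal so its largest absolute sample equals target_peak."""
    y = np.asarray(y, dtype=np.float64)
    peak = np.max(np.abs(y))
    if peak < eps:  # leave (near-)silent input unchanged
        return y
    return y * (target_peak / peak)


def rms_normalize(y, target_rms=0.1, eps=1e-12):
    """Scale a signal so its root-mean-square level equals target_rms (a hypothetical target)."""
    y = np.asarray(y, dtype=np.float64)
    rms = np.sqrt(np.mean(y ** 2))
    if rms < eps:
        return y
    return y * (target_rms / rms)


quiet = np.array([0.05, -0.25, 0.1])
print(peak_normalize(quiet))  # the largest magnitude becomes 1.0
```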

3.2. Statistical results

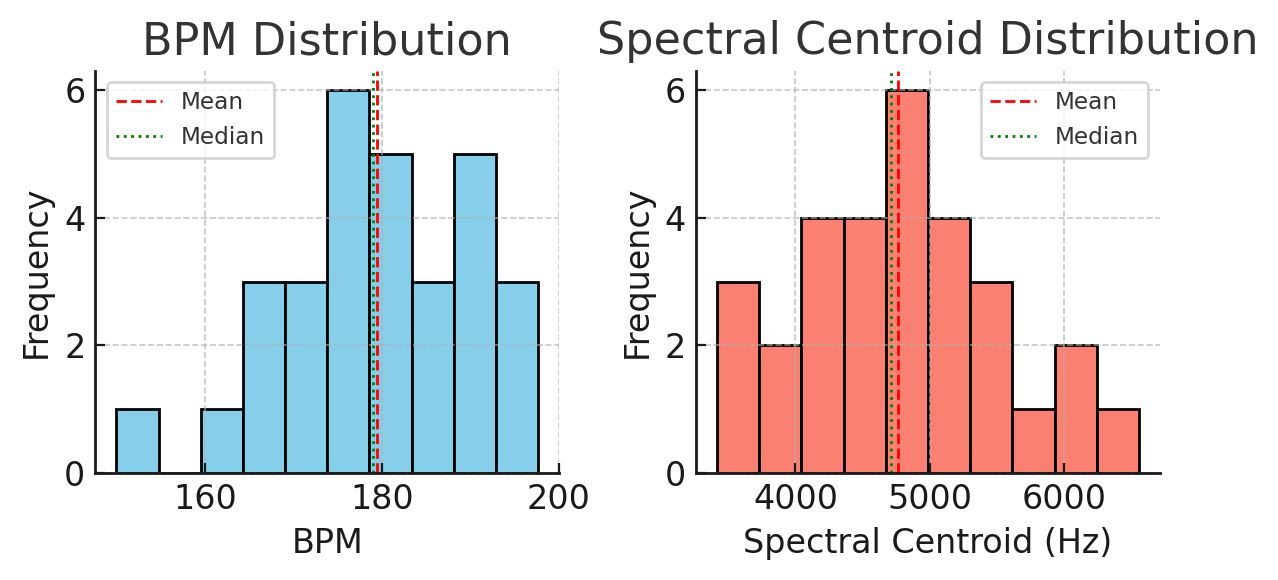

According to Table 1 and Figure 1, the J-Core tracks consistently have very high tempos, averaging 179.4 BPM. This is in line with the known tempo range of hardcore techno and its Japanese variants (often 160–200 BPM or more [16]). The relatively small spread (SD ≈ 10.8 BPM) suggests that most songs hover around a similar fast tempo, with only a few outliers at the slower end (minimum 150 BPM). Such rapid tempos typically evoke high energetic arousal in listeners [17] and are a hallmark of the genre’s intensity.
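A global tempo such as those summarized here is typically estimated from an onset-strength envelope. The sketch below shows the basic idea under simplifying assumptions: it picks the autocorrelation lag with the strongest periodicity within a 60–240 BPM search range and converts that lag to BPM. The envelope and its frame rate of 90 frames per second are hypothetical. This is not librosa's estimator, which additionally weights candidate tempi by a prior centred on start_bpm (120 BPM by default); for music as fast as J-Core, such a prior can favour the half-tempo candidate, so estimates near 90 BPM are worth checking by ear.

```python
import numpy as np


def estimate_tempo(onset_env, fps, bpm_min=60.0, bpm_max=240.0):
    """Global tempo (BPM) from the strongest autocorrelation lag of an onset envelope."""
    env = np.asarray(onset_env, dtype=np.float64)
    env = env - env.mean()
    ac = np.correlate(env, env, mode="full")[len(env) - 1:]  # ac[L] = autocorrelation at lag L
    lag_min = int(np.ceil(60.0 * fps / bpm_max))   # short lags correspond to fast tempi
    lag_max = int(np.floor(60.0 * fps / bpm_min))
    lags = np.arange(lag_min, min(lag_max, len(env) - 1) + 1)
    best_lag = lags[np.argmax(ac[lags])]
    return 60.0 * fps / best_lag


fps = 90                   # hypothetical envelope frame rate (frames per second)
env = np.zeros(10 * fps)   # ten seconds
env[::30] = 1.0            # one onset every 30 frames = 1/3 s, i.e. 180 BPM
print(estimate_tempo(env, fps))
```

In this toy example the lag of 60 frames (90 BPM) scores only slightly below the lag of 30 frames (180 BPM), which illustrates why octave errors are common for very fast tracks.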

The spectral centroid values likewise indicate that these tracks are timbrally bright: a mean of about 4.8 kHz is relatively high for music, meaning that the “center of mass” of the spectrum typically lies in the upper-mid frequency range [18-21]. The spectral centroid ranges from about 3415 Hz to 6561 Hz, indicating some variation in brightness between tracks. This is consistent with the distorted kicks, hi-hats, and high-pitched synth leads for which J-Core is known.

Figure 1: Histograms of tempo (BPM) and spectral centroid (Hz) across the 30 J-Core tracks. The left panel shows the BPM distribution; the right panel shows the spectral centroid distribution. Red dashed lines indicate the mean and green dotted lines the median of each feature. BPMs are tightly clustered at the high end (most songs ~170–190 BPM), and spectral centroids are similarly clustered around a high value (~5 kHz), reflecting the fast and bright character of the dataset. The spectral centroid distribution shows a slight right skew (a few exceptionally bright tracks), while the BPM distribution has a longer tail toward slower tempos (minimum 150 BPM); overall, both confirm that extreme tempo and timbral brightness are defining qualities of these J-Core samples (photo/picture credit: original)

Table 1: Summary of tempo and spectral brightness (N = 30 tracks)

| Feature | Mean | SD | Min | Max |
|---|---|---|---|---|
| Tempo (BPM) | 179.4 | 10.8 | 150 | 198 |
| Spectral centroid (Hz) | 4768 | 720 | 3415 | 6561 |
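Table 1 reports the mean, SD, minimum and maximum of each feature. The text does not say whether the SD is the sample (n - 1) or population (n) version; the sketch below assumes the sample version via Python's statistics.stdev (statistics.pstdev gives the population one; for N = 30 the two differ by a factor of about 1.017). The values in the code are placeholders for illustration, not the study's data.

```python
import statistics


def summarize(values):
    """Mean, sample standard deviation (n - 1 denominator), minimum and maximum."""
    return {
        "mean": statistics.mean(values),
        "sd": statistics.stdev(values),
        "min": min(values),
        "max": max(values),
    }


# Placeholder values for illustration only; these are NOT the study's data.
toy_bpm = [150, 170, 180, 190, 200]
print(summarize(toy_bpm))
```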

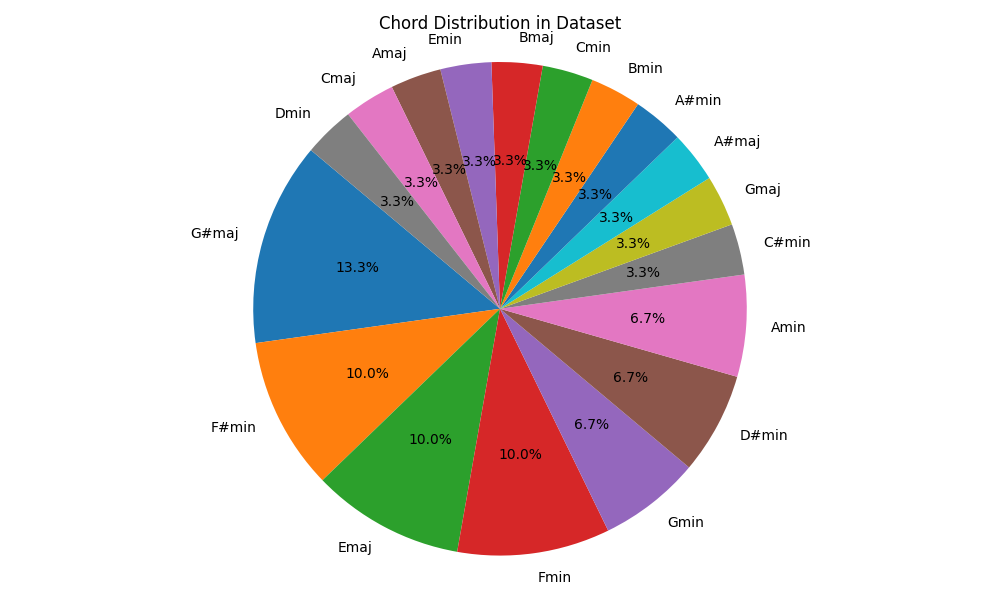

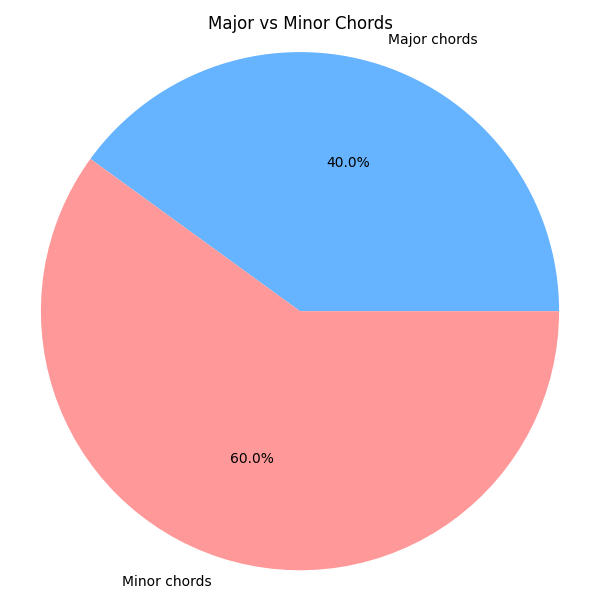

According to Figure 2 and Figure 3, the prevalent chord in each song was also tallied: 18 of the 30 tracks (60%) had a minor chord (e.g., F minor, F# minor) as the most frequently occurring chord, while the remaining 12 tracks (40%) had a major chord as the most common. This skew suggests a tendency for J-Core tracks to center on minor-key harmonies. For example, chords such as A minor or D minor appeared as the central chord of several tracks, whereas major chords were less common overall. This is notable because, as mentioned in the Introduction, J-Core is often presented in a cute (“kawaii”) style, which one might expect to be conveyed through brighter major chords. The prevalence of minor tonalities could relate to the emotional tone of J-Core: minor keys are often associated with more intense or darker emotional expression, which aligns with J-Core’s edgy, high-energy aesthetic. Fig. 2 shows the relative frequency of individual chords within the dataset. The most frequent chord is G# major, which is the central chord of 4 of the 30 tracks (≈13.3%), followed by F# minor and F minor. The diversity of chord classes reflects the stylistic breadth of J-Core, which draws on both traditional tonal harmony and less conventional harmonic choices.

Figure 2: Chord class distribution across 30 J-Core tracks (photo/picture credit: original)

Figure 3: Distribution of major vs. minor chords (photo/picture credit: original)

Fig. 3 compares the proportion of tracks whose most common chord is major (≈40%) with those whose most common chord is minor (≈60%). The slight dominance of minor chords is consistent with the emotional variability of modern J-Core. Minor chords often imply introspection or melancholic undertones, while major chords bring brightness and optimism. The near balance between the two categories highlights the genre’s broad emotional range and its hybrid aesthetic across timbral and harmonic dimensions.
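The tally behind Fig. 3, and a rough check of how much a 60/40 split among 30 tracks can say, can be sketched as follows. This is an illustration only: it assumes chord labels of the hypothetical form "Root:maj"/"Root:min" and treats tracks as independent draws. Under those assumptions, an exact two-sided binomial test of 18 minor versus 12 major against an even split gives p ≈ 0.36, which fits the “slight dominance” and “near balance” wording used for Fig. 3.

```python
from collections import Counter
from math import comb


def quality_counts(track_chords):
    """Count tracks whose most common chord is major vs. minor ("Root:maj" / "Root:min")."""
    return Counter(label.split(":")[1] for label in track_chords)


def binomial_two_sided_p(k, n):
    """Exact two-sided binomial test against p = 0.5 (doubling the smaller tail, capped at 1)."""
    tail = min(k, n - k)
    p_one_side = sum(comb(n, i) for i in range(tail + 1)) / 2 ** n
    return min(1.0, 2 * p_one_side)


# Toy labels (hypothetical) to show the counting step.
print(quality_counts(["F#:min", "G#:maj", "F:min"]))

# Counts reported in the text: 18 minor vs. 12 major among 30 tracks.
p_value = binomial_two_sided_p(18, 30)
print(round(p_value, 3))
```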

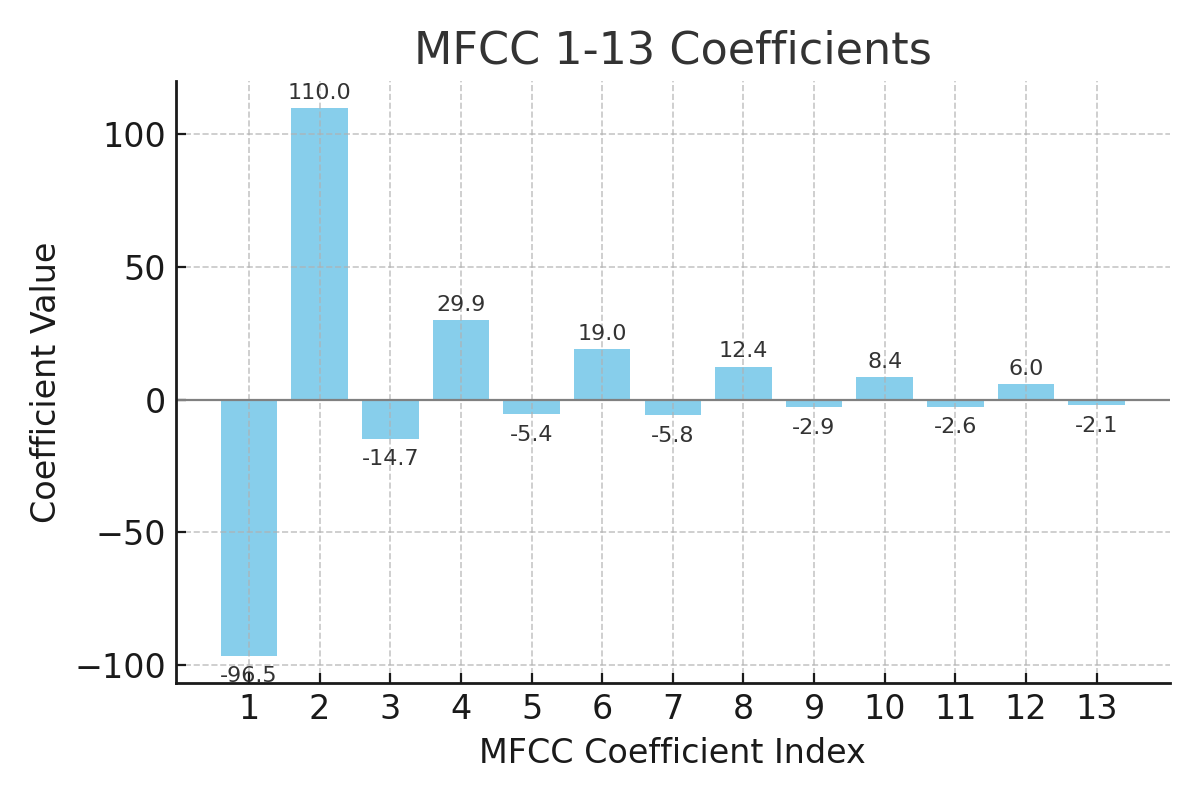

Finally, for the MFCCs, each coefficient was averaged across all frames of a track and then across tracks to yield a mean MFCC vector representing the genre. The averaged MFCCs (Fig. 4) provide a condensed view of the genre’s timbral fingerprint. In general, the first few MFCC coefficients have the largest magnitudes (positive or negative), while higher-order coefficients hover near zero; this is expected, as the lower-order MFCCs capture the broad spectral shape and most of the variance [21, 22]. Excluding the first coefficient (an overall energy term), MFCCs 2–13 show a pattern of alternating positive and negative values that diminish toward zero.
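The two-stage averaging (first over frames within a track, then over tracks) can be sketched as follows. It assumes each track's MFCCs are stored as a 13 × T matrix, the layout returned by librosa.feature.mfcc(y=y, sr=sr, n_mfcc=13); random matrices stand in for real tracks, and the function name is illustrative. Averaging within each track first gives every track equal weight regardless of its length, and keeping the per-track vectors makes it possible to report spread as well as the mean.

```python
import numpy as np


def genre_mean_mfcc(per_track_mfccs):
    """Average each track's (13, T) MFCC matrix over frames, then average across tracks."""
    track_vectors = np.stack([m.mean(axis=1) for m in per_track_mfccs])  # (n_tracks, 13)
    return track_vectors.mean(axis=0), track_vectors


# Two hypothetical tracks with different numbers of frames.
rng = np.random.default_rng(0)
track_a = rng.normal(size=(13, 100))
track_b = rng.normal(size=(13, 250))
genre_vec, per_track = genre_mean_mfcc([track_a, track_b])
print(genre_vec.shape, per_track.shape)
```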

MFCC1 = -96.54 reflects the overall log energy of the tracks: it is proportional to the average log-mel energy across frequency bands, so more negative values indicate a lower overall level. MFCC1 is a scaled sum of log-mel values rather than a decibel level in its own right, and its absolute value depends on the amplitude normalization and the analysis settings. Read with that caveat, the value suggests that these tracks are not mastered for maximal, “blasting” loudness but have a somewhat more conservative, “cleaner” compressed sound. MFCC2 = 109.96 reflects the overall spectral slope; a large positive value indicates that the lower mel bands carry considerably more energy than the upper ones, a steep downward tilt consistent with the heavy kick drums and bass typical of J-Core. MFCC3 = -14.66 captures the curvature of the spectral envelope; this moderately negative value indicates that the middle mel bands are somewhat stronger than the two extremes, without dramatic peaks and valleys in the overall curve.

Figure 4: Mean MFCC coefficients 1–13 across the 30 J-Core tracks. Positive and negative values indicate how different frequency bands contribute to the timbre. The large magnitudes of MFCC1 (≈ -96.5) and MFCC2 (≈ 110.0) reflect the overall energy level and a pronounced spectral tilt, while the remaining coefficients gradually taper toward zero. Such MFCC patterns are commonly used to represent timbre in MIR research and reflect the complex sound design of J-Core (a mix of bass, mid, and high-frequency content) (photo/picture credit: original)
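The interpretation of MFCC1 and MFCC2 follows from the discrete cosine transform that turns log-mel energies into cepstral coefficients. The sketch below assumes the convention behind librosa.feature.mfcc's defaults (dB-scaled mel energies, a type-II DCT with orthonormal scaling, 128 mel bands ordered from low to high frequency); the text does not state which settings were used, and the spectra are hypothetical. Under this convention the first coefficient equals the square root of the number of bands times the mean log-mel level, so it is a scaled average of decibel values rather than a loudness in dB, and the second coefficient is positive when the lower bands are louder than the upper ones.

```python
import numpy as np


def dct2_ortho(x):
    """Orthonormal DCT-II of a 1-D vector (the transform MFCCs apply to log-mel energies)."""
    m = len(x)
    n = np.arange(m)
    k = n[:, None]
    basis = np.cos(np.pi * k * (n + 0.5) / m)  # basis[k, n]
    scale = np.full(m, np.sqrt(2.0 / m))
    scale[0] = np.sqrt(1.0 / m)
    return scale * (basis @ x)


n_mels = 128
flat = np.full(n_mels, -40.0)               # hypothetical flat log-mel spectrum (dB)
tilted = np.linspace(-10.0, -70.0, n_mels)  # louder low bands, quieter high bands

c_flat, c_tilted = dct2_ortho(flat), dct2_ortho(tilted)
print(c_flat[0], np.sqrt(n_mels) * flat.mean())  # coefficient 1 = sqrt(M) * mean level
print(c_flat[1], c_tilted[1])                    # coefficient 2: ~0 when flat, > 0 when tilted down
```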

3.3. Explanation, limitations and prospects

Each feature offers insight into J-Core’s stylistic identity when interpreted in light of music research. The consistently high tempo (≈170–180+ BPM) confirms that J-Core firmly resides in the upper tempo range of electronic music. This rapid tempo is not only a genre-defining characteristic of hardcore styles [16] but also has perceptual implications: fast music tends to induce higher physiological arousal and perceived energy [17]. The harmonic content of J-Core, as evidenced by the majority of tracks centering on minor chords, suggests that the music leans toward a darker or more serious emotional tone (minor modality) even as it remains rhythmically exhilarating. Prior studies have noted that mode (major vs. minor) can influence emotional valence in music [21-23]. Thus, J-Core’s preference for minor keys could give the tracks emotional complexity, combining high-arousal tempo with the relatively negative valence often linked to minor harmonies.

The spectral centroid (brightness) of the tracks is high; a higher spectral centroid means a greater presence of high-frequency components [21]. It can be surmised that J-Core producers often use biting sawtooth-wave synths, rapid-fire cymbals, and bit-crushed samples, all of which raise the spectral centroid. This timbral brightness contributes to the perceived intensity of the music, as studies have linked brightness with perceived intensity and energy [17]. It also helps the music cut through noisy environments, which is useful in club settings. The robust bass (low-frequency content) of J-Core, on the other hand, is evidenced indirectly by the MFCC profile. MFCCs are well known to correlate with timbral qualities [19], so this finding quantitatively supports the claim that J-Core’s timbre is characterized by an aggressive, polished sound (thumping sub-bass plus sizzling highs). Notably, the large MFCC2 suggests a strong overall spectral slope, likely the result of heavy bass emphasis, and the negative MFCC3 indicates curvature in the spectral envelope (under the standard DCT-II convention, a negative third coefficient corresponds to middle mel bands that are relatively stronger than the extremes). In sum, the audio features paint a picture of J-Core as fast, bright, bass-heavy, and minor-key oriented. These objective metrics reinforce genre descriptions from fan communities that emphasize the speed and “hardness” of the sound.

Despite the clear trends observed, this study has limitations that must be acknowledged. First, the sample size is relatively small (30 tracks) and may not cover the full diversity of the J-Core genre. The sampling method is also biased, since it draws only on the SoundCloud platform and ignores works released elsewhere. The tag search is similarly biased, as many uploaders label their works with broader tags (e.g., Electronic) or with narrower substyles (e.g., Hard Renaissance). These factors further limit how representative the sample is. J-Core is an underground genre with many producers, and the dataset might be skewed if, for example, many tracks come from the same album or artist. Second, chord detection (identifying the most common chord per track) is challenging: complex audio mixtures can confuse chord recognition algorithms. The harmonic analysis also assumed that one chord could represent each track, which oversimplifies songs that employ chord progressions or key changes. Conclusions about the prevalence of minor vs. major chords should therefore be taken as indicative, not absolute. Additionally, the MFCC-based timbral analysis, while useful, reduces a complex spectrum to a few numbers; some nuance (e.g., specific instrument sounds or noise textures unique to J-Core) might not be fully captured by the first 13 MFCCs alone. There is also the matter of production quality: differences in mixing and mastering across tracks could affect features such as spectral centroid and MFCCs (although all audio was normalized to minimize loudness differences). Finally, the analysis did not incorporate rhythmic features beyond tempo (such as syncopation or drum patterns), which could further distinguish J-Core from related genres.

Building on these findings, a more extensive study could be conducted with a larger library of J-Core tracks, possibly including comparisons to adjacent genres like UK Hardcore or Happy Hardcore to pinpoint what features are uniquely exaggerated in J-Core. Additional features could be extracted, such as spectral flux (to quantify the rapid timbral changes), chroma features (for a detailed look at tonal content beyond just the top chord), and rhythmic complexity measures.
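Spectral flux, proposed here as a future feature, measures how much the magnitude spectrum changes from one frame to the next. Several variants exist; the sketch below assumes a common one, the L2 norm of the half-wave-rectified difference between consecutive magnitude frames, so only increases in energy (such as new attacks) count. The 4-bin spectra are hypothetical.

```python
import numpy as np


def spectral_flux(mag_frames):
    """Frame-to-frame spectral flux: L2 norm of the positive magnitude change between frames.

    mag_frames has shape (n_bins, n_frames); the result has n_frames - 1 values.
    """
    diff = np.diff(mag_frames, axis=1)
    rectified = np.maximum(diff, 0.0)  # keep only energy increases
    return np.sqrt((rectified ** 2).sum(axis=0))


# Hypothetical 4-bin magnitude spectra: steady, steady, then a burst in the top bin.
S = np.array([[1.0, 1.0, 1.0],
              [0.5, 0.5, 0.5],
              [0.2, 0.2, 0.2],
              [0.0, 0.0, 3.0]])
print(spectral_flux(S))
```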

4. Conclusion

To sum up, this study set out to quantitatively characterize the stylistic identity of J-Core music through core audio features. The analysis of 30 J-Core tracks revealed consistently extreme tempos (around 170–180 BPM), a majority of tracks centered on minor-key harmony, a bright timbral profile with high spectral centroids (~5 kHz), and MFCC patterns indicating boosted bass, which together with the high centroids point to strong low- and high-frequency content. These results paint a coherent picture of J-Core as a fast-paced, energetic genre with intense high-frequency content and a penchant for minor tonalities. Such attributes align with and reinforce descriptive accounts of the genre’s “hard” and ecstatic sound. The study also discussed how these features likely contribute to the emotional impact of J-Core (for example, rapid tempo and brightness correlating with high arousal) and noted that minor chords introduce a darker undertone despite the music’s hyper energy. Future work should expand the dataset and feature set to confirm these trends and explore additional nuances, possibly employing classification models or listener studies to validate the significance of these features. By linking measurable audio features to genre characteristics, this research demonstrates a framework for understanding niche musical styles in quantitative terms. Such an approach not only aids musicological analysis of emerging genres but can also improve music recommendation systems and cross-cultural studies by highlighting which acoustic features carry the essence of a genre like J-Core.

References

[1]. Schedl, M., Gómez, E. and Urbano, J. (2014) Music Information Retrieval: Recent developments and applications. Foundations and Trends in Information Retrieval, 8(2–3), 127–261.

[2]. Fu, Z., Lu, G., Ting, K.M. and Zhang, D. (2011) A survey of audio-based music classification and annotation. IEEE Transactions on Multimedia, 13(2), 303–319.

[3]. Müller, M. (2015) Fundamentals of Music Processing: Audio, Analysis, Algorithms, Applications. Springer.

[4]. Humphrey, E.J., Bello, J.P. and LeCun, Y. (2013) Feature learning and deep architectures for music information retrieval. Journal of New Music Research, 42(4), 299–318.

[5]. McFee, B., Raffel, C., Liang, D., et al. (2015) librosa: Audio and music signal analysis in Python. In Proc. of the 14th Python in Science Conf. (SciPy) (pp. 18–25).

[6]. Jenkins, D. (2018) Beyond J-Core: An Introduction to the Real Sound of Japanese Hardcore. Bandcamp Daily. 4, 26.

[7]. Host, V. (2015) A Kick in the Kawaii: Inside the World of J-Core. Red Bull Music Academy Daily, 1, 19.

[8]. AllMusic. (2012) Hardcore Techno – Genre Overview.

[9]. What is the music genre “J-CORE” born from Japanese animation? (2015) GIGAZINE. 1, 21.

[10]. Ito, M., Okabe, D. and Tsuji, I. (Eds.) (2012) Fandom Unbound: Otaku Culture in a Connected World. Yale University Press.

[11]. Müller, M. (2015) Fundamentals of Music Processing: Audio, Analysis, Algorithms, Applications. Springer.

[12]. Tzanetakis, G. and Cook, P. (2002) Musical genre classification of audio signals. IEEE Transactions on Speech and Audio Processing, 10(5), 293–302.

[13]. Müller, M. (2021) Fundamentals of music processing: Using Python and Jupyter notebooks (Vol. 2). Cham: Springer.

[14]. Cho, T. and Bello, J.P. (2014) On the relative importance of individual components of chord recognition systems. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 22(2), 477–492.

[15]. Davis, S. and Mermelstein, P. (1980) Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences. IEEE Transactions on Acoustics, Speech, and Signal Processing, 28(4), 357–366.

[16]. Foroughmand, H. (2021) Towards global tempo estimation and rhythm-oriented genre classification based on harmonic characteristics of rhythm (Doctoral dissertation) IRCAM – Sorbonne Université, Paris, France.

[17]. Gómez, P. and Danuser, B. (2007) Relationships between musical structure and psychophysiological measures of emotion. Emotion, 7(2), 377–387.

[18]. Perez-Sancho, C., Rizo, D., Kersten, S. and Ramírez, R. (2008) Genre classification of music by tonal harmony. In Proc. International Workshop on Machine Learning and Music (MML 2008) Helsinki, Finland.

[19]. Flexer, A. (2007) A closer look on artist filters for musical genre classification. In Proc. 8th International Conference on Music Information Retrieval (ISMIR 2007) (pp. 447–450) Vienna, Austria.

[20]. Oramas, S., Barbieri, F., Nieto, O. and Serra, X. (2018) Multimodal deep learning for music genre classification. Transactions of the International Society for Music Information Retrieval, 1(1), 4–21.

[21]. Tzanetakis, G. and Cook, P. (2002) Musical genre classification of audio signals. IEEE Transactions on Speech and Audio Processing, 10(5), 293–302.

[22]. Logan, B. (2000) Mel-frequency cepstral coefficients for music modeling. In Proc. International Symposium on Music Information Retrieval (ISMIR 2000) Plymouth, MA.

[23]. Daws, E. (2019) The effects of tempo, texture, and instrument on felt emotions. Durham University Research in Music & Science (DURMS), 2, 32–39.

Cite this article

Yu, A. (2025). Feature Analysis of J-core Music Based on Statistics of Python. Applied and Computational Engineering, 160, 77-85.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of CONF-SEML 2025 Symposium: Machine Learning Theory and Applications

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
