1. Introduction

According to Encyclopedia Britannica, the origins of computer music can be traced back to the 1950s. Lejaren Hiller and Leonard Isaacson composed the Illiac Suite for string quartet (1957) using the ILLIAC I computer. The piece is often described as the first full-length composition generated by a computer, blending randomly generated elements with the rules of traditional composition. Alan Turing's work also included early attempts at computer-generated music, demonstrating that the machine could play simple melodies. In "Artificial intelligence and music: History and the future perceptive," Verma further explains that Max Mathews pioneered digital audio synthesis at Bell Labs, where in the late 1950s he created MUSIC I, a program that allowed the computer to generate sound waves. This work helped lay the foundation for algorithmic composition, enabling composers to generate music by defining mathematical and logical rules [1-3]. Scholars also highlight FM synthesis, developed by John Chowning in the 1970s and popularized in the 1980s [4]. FM synthesis allowed the creation of complex tones that mimic the sound of natural instruments, and the release of the Yamaha DX7 synthesizer made this technology accessible to a wide audience. In the 1990s, artificial intelligence began to play an essential role in music generation: AI models used statistical techniques such as Markov chains to mimic compositional styles, leading to more complex algorithmic compositions. According to "Tools for AI Music Creatives: Mapping the field," modern AI tools for music creation and composition, such as Amper Music and Google's Magenta, can generate more realistic compositions across genres [5].
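The Markov-chain approach to style imitation mentioned above can be illustrated with a minimal first-order sketch in Python. It assumes melodies are plain lists of note names and uses a tiny made-up corpus; the `train_markov` and `generate` helpers are hypothetical, and real style-imitation systems use far larger corpora and richer states (durations, higher-order context).

```python
import random
from collections import defaultdict


def train_markov(melodies):
    """Record, for every note, the notes that followed it in the corpus (first-order chain)."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, nxt in zip(melody, melody[1:]):
            transitions[current].append(nxt)
    return dict(transitions)


def generate(transitions, start, length, seed=None):
    """Random walk: each next note is drawn from the observed successors of the current note.

    Repeated successors appear several times in the list, so rng.choice samples them
    in proportion to how often they occurred (the estimated transition probability).
    """
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # dead end: this note was never followed by anything
            break
        melody.append(rng.choice(options))
    return melody


# Toy corpus: two short melodies written as note names (illustrative only).
corpus = [
    ["C", "D", "E", "C", "E", "G", "E", "D", "C"],
    ["E", "G", "A", "G", "E", "D", "C", "D", "E"],
]
model = train_markov(corpus)
print(generate(model, "C", 8, seed=1))
```

Because the chain only remembers the previous note, its output tends to sound locally plausible but lacks long-range structure, which is one reason later systems moved to neural models.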

Previous studies have examined the growing role of AI in assisting musicians with tasks such as chord analysis, lyric generation, and audio mastering [6]. Another study explores the implications of AI-generated music for the industry and discusses how AI models learn from vast datasets and apply what they learn to generate original pieces. Some scholars highlight that platforms such as Amper Music and AIVA have changed the composition process [7]; this software uses machine learning models to generate high-quality music in different genres. "Music Composition for Dummies" discusses the incorporation of AI features into mainstream digital audio workstations such as Ableton Live, Logic Pro, and FL Studio, whose AI-assisted features lower the technical barriers for composers. With the rise of cloud computing, music composing software has increasingly shifted toward cloud-based platforms. "The impact of artificial intelligence on musicians" analyzes how AI has transformed music mastering, making professional-quality results more accessible; software such as LANDR or Emaster uses machine learning to provide high-quality automated mastering.

The motivation of this paper is to examine the differences among music composing software, focusing on their machine learning approaches, functions, performance, and market feedback. Three music composing tools are introduced: Amper Music, ML Studio, and AIVA. Each is presented individually in terms of its development, basic overview, functions, and cutting-edge uses. Finally, the three are compared with one another, and their strengths, limitations, and future prospects are discussed.

2. Descriptions of music composing software

According to existing research, music composing software can be classified into four types. The first is the traditional digital audio workstation (DAW). Traditional DAWs serve as the backbone of music computing, allowing people to compose, record, mix, and master music. Their main characteristics are multitrack recording and editing, MIDI sequencing, audio effects and plugins, and automation. Examples include Ableton Live and FL Studio. DAWs remain the industry standard and increasingly integrate AI-powered features to assist users [8].
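MIDI sequencing, listed above among the core DAW features, stores music as timed note events rather than recorded audio. The following Python sketch is a simplified illustration, not any DAW's internal format: the `NoteEvent` record and `to_seconds` helper are hypothetical, while the pitch conversion uses the standard equal-temperament formula f = 440 · 2^((n - 69)/12), where n is the MIDI note number and note 69 is A4.

```python
from dataclasses import dataclass


@dataclass
class NoteEvent:
    start_beat: float    # position on the sequencer grid, in beats
    length_beats: float  # how long the note is held, in beats
    pitch: int           # MIDI note number (60 = middle C)
    velocity: int        # MIDI velocity, 0-127


def midi_to_hz(pitch: int) -> float:
    """Equal-tempered frequency with A4 (MIDI 69) tuned to 440 Hz."""
    return 440.0 * 2 ** ((pitch - 69) / 12)


def to_seconds(events, bpm):
    """Convert beat-based events to (onset_s, duration_s, freq_hz, velocity) tuples."""
    sec_per_beat = 60.0 / bpm
    return [
        (e.start_beat * sec_per_beat, e.length_beats * sec_per_beat,
         midi_to_hz(e.pitch), e.velocity)
        for e in sorted(events, key=lambda e: e.start_beat)
    ]


# A one-bar C-major arpeggio, rendered at 120 BPM.
clip = [NoteEvent(0, 1, 60, 100), NoteEvent(1, 1, 64, 90),
        NoteEvent(2, 1, 67, 90), NoteEvent(3, 1, 72, 110)]
for onset, dur, freq, vel in to_seconds(clip, bpm=120):
    print(f"{onset:.2f}s  {dur:.2f}s  {freq:7.2f} Hz  vel={vel}")
```

Keeping events in beats and converting to seconds only at playback is what lets a sequencer change tempo without re-recording anything.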

The second type is AI-assisted music computing software, which generates original compositions or assists composers in arranging melodies, harmonies, and structures. These tools use machine learning models to analyze existing music and create new compositions. Their main characteristics are automated music generation, AI-based harmonization, genre and mood selection, and real-time adaptation. Popular examples include AIVA and Amper Music. Others note that AI-assisted tools are widely adopted for background music production, such as for video content and advertising [9-11].
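To make "harmonization" concrete, here is a deliberately simple rule-based harmonizer in Python. It is not a learning model and not how AIVA or Amper Music work: the C-major key, the restriction to the three primary triads, and the one-chord-per-note rule are all assumptions chosen for illustration. AI-assisted tools instead learn such chord choices from data.

```python
# Primary triads of C major as pitch-class sets (0 = C, 2 = D, ..., 11 = B).
# Together, I, IV and V contain all seven diatonic pitch classes.
PRIMARY_TRIADS = {
    "C": {0, 4, 7},   # I
    "F": {5, 9, 0},   # IV
    "G": {7, 11, 2},  # V
}


def harmonize(melody_midi):
    """Assign one chord per melody note: the first triad (in I, IV, V order) containing it."""
    chords = []
    for pitch in melody_midi:
        pitch_class = pitch % 12
        match = next((name for name, pcs in PRIMARY_TRIADS.items() if pitch_class in pcs), None)
        chords.append(match)  # None for chromatic notes outside C major
    return chords


# Ascending C-major scale from middle C (MIDI 60) to the C above.
print(harmonize([60, 62, 64, 65, 67, 69, 71, 72]))
# → ['C', 'G', 'C', 'F', 'C', 'F', 'G', 'C']
```

A fixed table like this always gives the same answer for the same note, which is exactly the rigidity that data-driven harmonization aims to overcome.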

The third type is cloud-based music computing platforms, which allow composers to compose, edit, and collaborate remotely. Machine learning studios provide cloud-based tools and frameworks for building such software and platforms. Key features include online music collaboration, development of machine learning models from multiple data sources, cloud storage, AI-powered mixing and mastering, and loop and sample libraries. Popular examples are BandLab, Endless, and ML Studio. Scholars show that cloud-based software has become popular among independent musicians [12].

The last type comprises melody generators and AI lyric generators. This type of software helps composers generate lyrics and melodies based on specified themes, emotions, and styles. Its main features are melody composition, voice synthesis, and AI-based lyric generation. Examples include Melobytes and Humtap. Other studies discuss the usefulness of AI lyric generators for songwriters, giving them the opportunity to explore and experiment with different lyrical structures and melodies [13-15].

The main uses of music computing software are music production and recording, film and video soundtracks, advertising and marketing, content creation, and education. Professional composers use DAWs for studio-quality production and recording: the software lets them record multiple tracks, create music without physical instruments through MIDI composition and virtual instruments, mix, refine, and balance sounds with advanced plugins and effects, and finalize tracks with AI assistance. Film composers, game developers, and sound designers use music computing software for soundtracks, where it can help generate orchestral and cinematic arrangements, sound effects, ambiance, and background scores. Brands and marketing agencies use it for advertising: AI-assisted software can produce customized background music in a short time, and brands can avoid license fees by generating their own music. Social media creators, such as podcasters and YouTubers, can avoid copyright concerns and design unique signature sounds for their content, and the tools are easy for non-musicians to handle. For teachers and students, music composing software serves as an education and learning tool: cloud-based platforms enable real-time composition, and beginners such as students can experiment with melodic and harmonic generation.

3. Amper Music

Amper Music was one of the first AI-driven music composing tools and was founded in 2017. It was developed by Drew Silverstein, Sam Estes, and Michael Hobe, whose goal was to make AI-assisted music composition accessible to content creators, filmmakers, and musicians. The system was designed to generate music from minimal user input, letting users specify the genre, mood, and instrumentation. In 2019, Amper Music was acquired by Shutterstock, which made it a leading AI tool for stock music creation. Amper Music also improved its AI's ability to analyze musical structures, create harmonized compositions, and generate royalty-free music on demand [6]. Its capabilities have since been enhanced by machine learning models that create more realistic instrument sounds and dynamic compositions [3], and users can now interact with the AI to refine compositions by tempo, melody, and harmony. The software has also been customized for various industries, supporting podcast production, game development, and film scoring. Researchers have also highlighted the implications of AI-generated music for copyright and human creativity.

The basic overview of Amper Music can be divided into four parts. First is AI-powered generation: Amper Music uses AI to generate music in a short time based on the user's input, and users can select parameters such as style, dynamics, mood, and instruments. Second, Amper Music is a cloud-based platform. It is accessible online to everyone, requires no installation, and integrates with other platforms, e.g., Shutterstock. Third, Amper Music is user-friendly: it was designed for both non-musicians and professionals and provides pre-generated loops and melodies. Last, Amper Music offers royalty-free licensing. It generates music for commercial use with full licensing rights, reducing the need for traditional licensing [7].

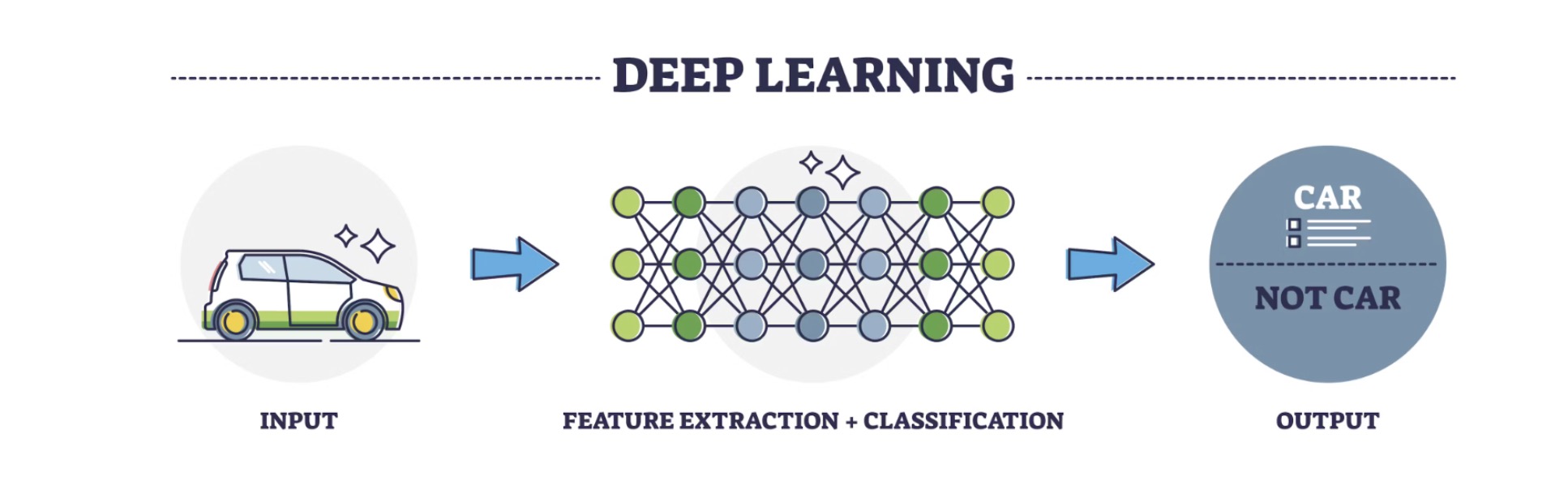

The functionality and cutting-edge uses of Amper Music are designed to simplify and automate the music-making process. The first core function is AI-generated music composition. As shown in Fig. 1 [12], users input genre, mood, tempo, and instrumentation into Amper Music, which then generates tracks that satisfy their demands. The system uses deep learning algorithms and symbolic representations of music, relying on neural networks trained on vast datasets of musical compositions. The model extracts musical features such as rhythm, harmony, and melody patterns, and uses them to classify and predict suitable musical continuations or compositions based on the structures it has learned. The output is a newly generated, coherent piece of music. Another function is royalty-free licensing: platforms such as Amper Music and AIVA allow creators to generate royalty-free music for various commercial contexts, opening up new opportunities for emerging artists to enter the music business without extensive financial investment. In this way, AI democratizes music creation, making it accessible to a global pool of artists [6], and Amper Music helps creators and users remove legal barriers. In addition, Amper Music is easy to use for people with no formal music education. Its developers created the tool to be usable by anyone, regardless of their musical background and expertise and regardless of which physical composing tools they have at their disposal [7]. Furthermore, Amper Music can be used as a music editing tool. Users can edit tempo, key, instrumentation, arrangement, and transitions, and the platform allows real-time preview and export of tracks for further editing.
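The input-to-output flow in Fig. 1 can be approximated by a toy parameter-driven generator in Python. Amper Music's actual models are not described here in enough detail to reproduce, so everything below is hypothetical: the `generate_track` function, the mood-to-scale and genre-to-tempo tables, and the random note choice, which merely stands in for the trained neural network's predictions.

```python
import random

# Hypothetical lookup tables from user-facing choices to musical parameters.
MOOD_SCALES = {
    "happy": [0, 2, 4, 5, 7, 9, 11],  # major scale, semitones above the root
    "sad": [0, 2, 3, 5, 7, 8, 10],    # natural minor scale
}
GENRE_TEMPO = {"ambient": 70, "pop": 110, "electronic": 128}  # default BPM per genre


def generate_track(genre, mood, instrument="piano", bars=2, tempo=None, root=60, seed=None):
    """Return a track description whose notes are (midi_pitch, beats) pairs in 4/4 time.

    Random scale notes stand in for a trained model's predictions.
    """
    rng = random.Random(seed)
    bpm = tempo if tempo is not None else GENRE_TEMPO[genre]
    scale = [root + step for step in MOOD_SCALES[mood]]
    notes, beats_left = [], bars * 4
    while beats_left > 0:
        length = min(rng.choice([0.5, 1, 2]), beats_left)  # never run past the last bar
        notes.append((rng.choice(scale), length))
        beats_left -= length
    return {"genre": genre, "mood": mood, "instrument": instrument, "bpm": bpm, "notes": notes}


track = generate_track("pop", "sad", instrument="strings", seed=7)
print(track["bpm"], track["notes"][:4])
```

The point of the sketch is the interface: a handful of high-level choices (genre, mood, tempo, instrument) fully determine the constraints the generator works within, which is what makes this style of tool approachable for non-musicians.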

4. ML Studio

Microsoft Azure Machine Learning Studio, commonly known as ML Studio, is a cloud-based integrated development environment (IDE) designed to simplify building, training, and deploying machine learning models. Microsoft introduced ML Studio in the mid-2010s as part of its Azure cloud services. Its initial launch in 2015, aimed at both professional and beginner users, offered a visual, drag-and-drop ML service. In 2018, major updates added deeper learning capabilities, more tightly integrated automated ML (AutoML), and support for open-source tools. In the 2020s, cloud-native ML pipelines became the standard, enabling faster model deployment at scale, especially in real-time domains such as health care, music production, and finance. ML Studio was also adopted in creative domains such as music generation, sound classification, and audio enhancement [3]. Today, ML Studio supports the full workflow from data preparation to model training, validation, deployment, and monitoring, integrated with Azure cloud services. Its evolution reflects a broader industry shift toward low-code AI development environments.

The basic features of ML Studio are as follows. First, it is a cloud-based platform: because it operates entirely on Microsoft's cloud, it allows scalable processing and global access. Second, it offers a no-code or low-code environment; the drag-and-drop interface lets users create ML models without writing code, which suits users such as business analysts, data scientists, and researchers who do not have strong programming skills. Third, ML Studio provides custom code support. For users who require more complex or advanced functionality, ML Studio supports integrated Python and R scripts, giving them the flexibility to augment standard workflows and introduce customized steps in a pipeline [11]. Next, ML Studio contains pre-built algorithms and modules. The platform includes a rich set of built-in machine learning algorithms for regression, classification, and clustering, along with data preprocessing, statistical functions, and evaluation metrics; its modules cover processing, feature selection, and validation [11]. Another feature is data source integration. ML Studio integrates easily with a range of sources, including Azure Blob Storage, SQL Azure databases, Web APIs, and local files, allowing users to pull data from diverse environments without complex setup. Automated machine learning (AutoML) is also a core feature. It simplifies model creation by automatically selecting and tuning algorithms to optimize performance, which accelerates development and reduces trial-and-error modeling.
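The core idea behind AutoML, trying several algorithms and settings and keeping whichever validates best, can be shown with a small pure-Python sketch. This is not Azure's AutoML API: the candidate models (k-nearest neighbours with two values of k, and a nearest-centroid classifier), the toy two-feature data with one deliberately mislabeled point, and the `auto_select` helper are all illustrative assumptions.

```python
import math
from collections import Counter


def knn(k):
    """Return a trainer for a k-nearest-neighbour classifier with a fixed k."""
    def fit(X, y):
        def predict(x):
            nearest = sorted(zip(X, y), key=lambda pair: math.dist(pair[0], x))[:k]
            return Counter(label for _, label in nearest).most_common(1)[0][0]
        return predict
    return fit


def nearest_centroid(X, y):
    """Trainer for a nearest-centroid classifier (one mean vector per class)."""
    groups = {}
    for x, label in zip(X, y):
        groups.setdefault(label, []).append(x)
    centroids = {lab: [sum(col) / len(pts) for col in zip(*pts)] for lab, pts in groups.items()}
    return lambda x: min(centroids, key=lambda lab: math.dist(centroids[lab], x))


def auto_select(candidates, X_train, y_train, X_val, y_val):
    """Fit every candidate, score it on held-out data, and keep the best (first wins ties)."""
    scores = {}
    for name, fit in candidates.items():
        predict = fit(X_train, y_train)
        correct = sum(predict(x) == target for x, target in zip(X_val, y_val))
        scores[name] = correct / len(y_val)
    best = max(scores, key=scores.get)
    return best, scores


# Two made-up audio features per clip; (1.2, 1.2) is a deliberately mislabeled point.
X_train = [(1, 1), (1, 2), (2, 1), (1.2, 1.2), (8, 8), (8, 9), (9, 8)]
y_train = ["calm", "calm", "calm", "energetic", "energetic", "energetic", "energetic"]
X_val = [(1.5, 1.5), (2, 2), (8.5, 8.5), (9, 9)]
y_val = ["calm", "calm", "energetic", "energetic"]

candidates = {"knn_k1": knn(1), "knn_k3": knn(3), "centroid": nearest_centroid}
best, scores = auto_select(candidates, X_train, y_train, X_val, y_val)
print(best, scores)
```

Here 1-NN is misled by the mislabeled point and scores 0.75, while 3-NN and the centroid model both reach 1.0; validation on held-out data is what exposes the difference. Production AutoML services search far larger spaces of algorithms and hyperparameters, but the selection principle is the same.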

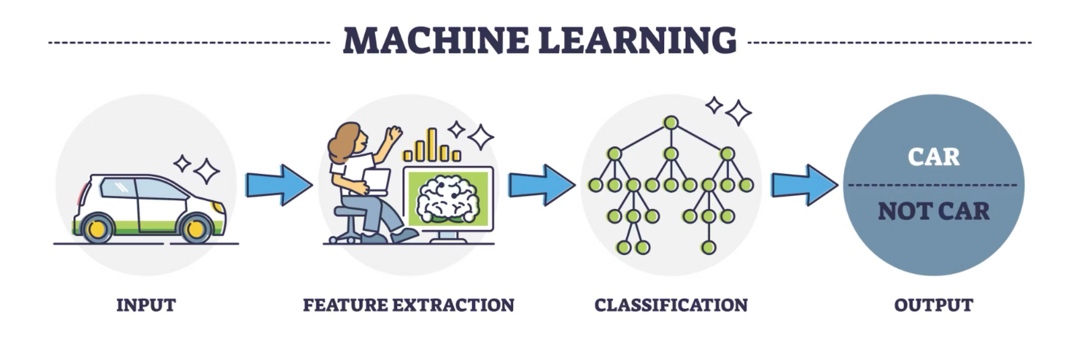

One major function is end-to-end pipeline, or model lifecycle, management. Fig. 2 [12] outlines how machine learning works as a cycle. The workflow starts with ingestion, in which the user brings in raw data from multiple sources; the purpose of this step is to ensure that the user has clean and structured data. Next is the core machine learning phase, training: the user selects an algorithm and trains the model on the dataset, using the built-in modules, Python scripting, and AutoML that ML Studio provides, as mentioned above. The purpose of this step is to teach the model to recognize patterns in the data. The third step is testing, in which the model is evaluated on unseen data to validate its accuracy, avoid overfitting, and select the best model. The last step of the cycle is deployment: once the model performs well, it is deployed for real-world use so that users can apply it. Moreover, ML Studio is a one-stop platform for ML operations and monitoring, which means it does not stop at deployment but also includes tools to manage the full lifecycle, such as version control, pipeline orchestration, and monitoring [11]. The last main function is collaboration. ML Studio promotes collaboration with teams and other users online, with version control to track changes in experiments and models, allowing multiple users to work on the same project while ensuring reproducibility and transparency.
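The four lifecycle stages can be sketched end to end in plain Python. This is a conceptual illustration, not ML Studio code: the two in-memory "sources", the tempo and energy features, the nearest-centroid model, and the dictionary standing in for a versioned model registry are all hypothetical.

```python
import math
import random


def ingest(*sources):
    """Merge raw records from several sources, keeping only complete, numeric rows."""
    clean = []
    for source in sources:
        for row in source:
            try:
                features = (float(row["tempo"]), float(row["energy"]))
                label = row["label"]
            except (KeyError, TypeError, ValueError):
                continue  # drop malformed or incomplete rows
            clean.append((features, label))
    return clean


def split(rows, test_fraction=0.25, seed=0):
    """Shuffle reproducibly, then hold out a fraction of rows for testing."""
    rows = rows[:]
    random.Random(seed).shuffle(rows)
    cut = int(len(rows) * (1 - test_fraction))
    return rows[:cut], rows[cut:]


def train(rows):
    """Nearest-centroid model: the mean feature vector of each label."""
    groups = {}
    for features, label in rows:
        groups.setdefault(label, []).append(features)
    return {lab: tuple(sum(col) / len(fs) for col in zip(*fs)) for lab, fs in groups.items()}


def predict(model, features):
    return min(model, key=lambda lab: math.dist(model[lab], features))


def evaluate(model, rows):
    """Accuracy on rows the model has never seen."""
    return sum(predict(model, f) == label for f, label in rows) / len(rows)


REGISTRY = {}  # hypothetical stand-in for a versioned model registry


def deploy(model, version):
    """Store the model under a version tag and return a callable 'endpoint'."""
    REGISTRY[version] = model
    return lambda features: predict(REGISTRY[version], features)


source_a = [
    {"tempo": "62", "energy": "0.2", "label": "calm"},
    {"tempo": "70", "energy": "0.3", "label": "calm"},
    {"tempo": "128", "energy": "0.9", "label": "energetic"},
    {"tempo": "n/a", "energy": "0.5", "label": "calm"},  # malformed: dropped
]
source_b = [
    {"tempo": 65, "energy": 0.1, "label": "calm"},
    {"tempo": 74, "energy": 0.25, "label": "calm"},
    {"tempo": 135, "energy": 0.85, "label": "energetic"},
    {"tempo": 122, "energy": 0.8, "label": "energetic"},
    {"tempo": 140, "energy": 0.95, "label": "energetic"},
    {"tempo": 118, "label": "energetic"},  # missing field: dropped
]

data = ingest(source_a, source_b)        # 1. ingestion
train_rows, test_rows = split(data)      #    hold out unseen data
model = train(train_rows)                # 2. training
accuracy = evaluate(model, test_rows)    # 3. testing
classify = deploy(model, version="v1")   # 4. deployment
print(len(data), accuracy, classify((68.0, 0.2)))
```

Keeping each stage as a separate function mirrors the modular pipeline idea: any stage (for example, the model in `train`) can be swapped without touching the others, and the version tag in `deploy` is what makes later monitoring and rollback possible.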

5. AIVA

Artificial Intelligence Virtual Artist, abbreviated as AIVA, is a pioneering AI-powered music composition software designed to generate original music. It was founded and first developed in 2016 by a startup based in Luxembourg, with the goal of using deep learning algorithms to mimic the styles of great composers. By demonstrating a leap from early rule-based systems to a neural-network-driven engine, AIVA's development marks a key milestone in the history of AI in music [1]. AIVA was trained on large datasets of classical music scores, using deep learning and reinforcement learning to understand their harmonies, structures, and styles.

AIVA's first basic function is composition in different genres, such as classical, pop, jazz, cinematic, and ambient. Using deep learning algorithms trained on thousands of classical, cinematic, jazz, and pop scores, AIVA exemplifies systems that leverage multi-level representations, such as note sequences, chords, and structures, to produce music that is structurally and harmonically coherent [3]. Another function is style imitation and customization: based on the user's selection of composer style and mood, AIVA can replicate the styles of particular composers or genres. AIVA is capable of style transfer, in which models adapt learned patterns to generate music reflecting specific influences [13]. AIVA also functions as an interactive composition tool, offering user-friendly controls for tempo, mood, key, and instrumentation; editing via piano roll or score view; and adjustment of composition length and complexity. AIVA is suitable for non-musicians as well, since its drag-and-drop interface and familiar music editing tools make it accessible to learners and creators [4]. Furthermore, AIVA supports audio and MIDI export for production. Work generated in AIVA can be exported as MIDI files for use in digital audio workstations (DAWs), as audio files for virtual instrument usage, and as sheet music for performance and educational purposes. Another function is media soundtracking: AIVA is commonly used for film scoring, video game soundtracks, advertisements, and background music for content creators. Tools like AIVA generate adaptive soundtracks, which adjust the music in real time based on visual or emotional input [12]. Finally, AIVA serves as a collaborative and educational application. It supports co-creative workflows in which composers blend AI output with human creativity, and it demonstrates music theory and composition in real time, acting as a teaching tool.
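As an illustration of what "MIDI export" produces, the sketch below builds a minimal single-track Standard MIDI File (format 0) from a list of (MIDI pitch, length in beats) pairs, using only the Python standard library. It is not AIVA's exporter; the `midi_bytes` and `vlq` helpers, the fixed velocity, and the monophonic input are simplifying assumptions.

```python
import struct


def vlq(value):
    """Encode a non-negative integer as a MIDI variable-length quantity (7 bits per byte)."""
    out = [value & 0x7F]
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)  # continuation bit on all but the last byte
        value >>= 7
    return bytes(reversed(out))


def midi_bytes(notes, bpm=120, ticks_per_beat=480, channel=0, velocity=100):
    """Build a format-0 Standard MIDI File from (midi_pitch, beats) pairs played in sequence."""
    track = bytearray()
    usec_per_beat = round(60_000_000 / bpm)
    track += b"\x00\xff\x51\x03" + usec_per_beat.to_bytes(3, "big")  # set-tempo meta event
    for pitch, beats in notes:
        track += vlq(0) + bytes([0x90 | channel, pitch, velocity])  # note on, immediately
        ticks = round(beats * ticks_per_beat)
        track += vlq(ticks) + bytes([0x80 | channel, pitch, 0])     # note off after `beats`
    track += b"\x00\xff\x2f\x00"  # end-of-track meta event
    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, ticks_per_beat)
    return header + b"MTrk" + struct.pack(">I", len(track)) + bytes(track)


melody = [(60, 1), (64, 1), (67, 1), (72, 2)]  # C-E-G-C, last note held for two beats
data = midi_bytes(melody, bpm=100)
print(len(data), data[:4])
```

Writing `data` to a file with a `.mid` extension (opened in binary mode) should give a file that any DAW importing standard MIDI files can read, which is why MIDI is the natural hand-off format between a generator and a production environment.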

Typical cutting-edge uses of AIVA include film and game soundtrack generation. AI tools like AIVA are now being used to generate adaptive soundtracks for video content, dynamically adjusting music to match scenes or player emotions in games [12]. Another is personalized music creation: AIVA is now used to generate and customize soundtracks for YouTube creators, influencers, and small businesses, reducing dependence on stock music and helping creators avoid copyright issues. Human-AI collaboration in composition is a further cutting-edge use: AIVA lets users, whether musicians or non-musicians, start with an idea for a melody, key, or mood and let the AI extend and complete it. Finally, AIVA allows style transfer and cross-genre composition. It enables users to blend classical motifs with modern rhythms or pop structures, facilitating new musical forms by fusing seemingly incompatible styles in a coherent, rule-based way [13].
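The idea of cross-genre blending can be illustrated by separating what is played from how it is timed. The toy recombination below is a simple rule-based illustration, not AIVA's style-transfer method: the `blend` function and both example motifs are hypothetical. It keeps the pitches of a classical-style motif and re-times them with a pop-style rhythm.

```python
def blend(pitch_source, rhythm_source):
    """Keep the pitches of one motif and re-time them with another motif's rhythm.

    Both motifs are lists of (midi_pitch, beats); pitches in rhythm_source are ignored.
    The rhythm pattern is cycled if the pitch motif is longer than it.
    """
    durations = [beats for _, beats in rhythm_source]
    return [(pitch, durations[i % len(durations)])
            for i, (pitch, _) in enumerate(pitch_source)]


# A stepwise "classical" motif in even quarter notes, ending on a half note...
classical = [(67, 1), (65, 1), (64, 1), (62, 1), (60, 2)]
# ...and a syncopated pop-style rhythm: dotted quarter, eighth, quarter, quarter.
pop_groove = [(0, 1.5), (0, 0.5), (0, 1), (0, 1)]
print(blend(classical, pop_groove))
# → [(67, 1.5), (65, 0.5), (64, 1), (62, 1), (60, 1.5)]
```

Even this crude rule shows why blending can go wrong: the final note loses its long, cadential duration, one small example of the "inconsistent or incoherent" results that learned models try to avoid.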

6. Comparison, limitations and prospects

Amper Music, ML Studio (Microsoft Azure Machine Learning Studio), and AIVA (Artificial Intelligence Virtual Artist) are leading tools in AI-driven music creation. Amper Music, founded in 2017, was primarily designed for content creators, filmmakers, and non-musicians, enabling them to generate royalty-free music with minimal input. Built on deep learning and symbolic representations, it lets users select genre, mood, tempo, and instrumentation and then automates the composition process. The platform is cloud-based and user-friendly, designed for users with no prior musical knowledge or formal musical education. Its cutting-edge features and key strengths are fast music generation, royalty-free licensing, and its editing tools. Its target users and use cases include film scoring, podcasting, stock music, game development, content creators, and marketers.

On the other hand, AIVA, established in 2016, aimed to use AI composition to mimic the styles of famous composers or genres and to build on classical composition through deep learning and reinforcement learning. Because it was trained on large amounts of classical and other-genre scores, AIVA can mimic the styles of composers and genres and allows customization of tempo, key, mood, and instrumentation. AIVA is also accessible to non-musicians and provides tools for human-AI collaboration. Its cutting-edge features are style imitation, adaptive soundtracks, and cross-genre composition. Its use cases include film and game soundtracks, education, influencer music, learners, and small businesses.

Microsoft Azure Machine Learning Studio, by contrast, stands apart as a general machine learning platform rather than a music-specific tool. Launched in the mid-2010s with the goal of full ML pipeline management for all industries, ML Studio is a cloud-based, end-to-end machine learning development environment. It supports no-code/low-code model creation through a drag-and-drop interface, along with AutoML and Python and R scripting. Although not tailored to music, ML Studio has been extended to sound classification and music generation through integrations. Its key strengths are scalability, data integration, and collaborative tools that support real-time, team-based development. It targets domains such as health care, finance, audio processing, and ML education, and users such as analysts, researchers, and developers [14, 15].

Each tool has constraints and limitations. Amper Music is limited in genre complexity compared to human composers, which may make its pieces sound less emotional and less able to convey feelings. Although it is advantageous for non-musicians, it lacks the intricate compositional capabilities of more advanced DAWs. Amper Music also lacks a focus on advanced music theory or deep style imitation, which may limit the depth of its compositions. Its repetition and reliance on pre-built templates and loops can lead to music that lacks uniqueness, so the output may feel predictable or formulaic. AIVA also lacks emotional authenticity: despite its impressive technical ability, its compositions may lack the emotional depth and originality associated with human-created music. In addition, when style transfer blends multiple music styles, some results may be inconsistent or musically incoherent, depending on the complexity of the genres involved. Although AIVA is user-friendly and lean in its editing, it remains limited in fine-tuning and advanced editing features.

ML Studio is not specialized for music; it is a general-purpose machine learning platform. Although its drag-and-drop interface supports low-code users, building custom music models requires machine learning expertise, which makes it less accessible to those without a technical background. Furthermore, because of its cloud-based nature, ML Studio can be resource-intensive for low-code users, particularly in pipeline deployment scenarios and complex orchestrations.

For all three tools, the future is promising. Amper Music is likely to evolve with more nuanced AI models that offer better harmonization, more emotional depth, and personalized music generation based on user behavior. One area of future development is the incorporation of AI-generated vocals and lyrics, allowing users to create full compositions without external tools. With advances in reinforcement learning and real-time interactivity, AIVA could become a true co-creative partner, offering dynamic soundtrack adaptation based not only on user input but also on real-time environmental feedback. This blend of human and AI collaboration may also give rise to new musical genres. As for ML Studio, it could integrate generative AI for audio synthesis and expand support for models that generate more realistic music or audio. Developers and users could then use ML Studio to train or deploy generative models that create entire music pieces, voices, or sound textures from text or structured prompts.

7. Conclusion

This study examined three representative music composing tools, namely Amper Music, AIVA, and Microsoft Azure ML Studio, focusing on their development, functionalities, and cutting-edge uses. The analysis revealed that Amper Music is highly accessible and ideal for content creators and non-musicians, offering royalty-free music through a cloud-based platform. AIVA stands out for its deep-learning-driven composition capabilities, style imitation, and adaptability in film scoring and personalized content creation. Meanwhile, ML Studio, though not music-specific, supports powerful end-to-end machine learning pipelines that can be applied to music generation, classification, and enhancement tasks. The comparison also shows that each tool has limitations: Amper Music and AIVA may lack emotional depth and advanced customization, while ML Studio demands technical expertise and is less intuitive for music-specific use cases. Looking ahead, these platforms are expected to evolve with deeper emotional modeling, real-time interactivity, and broader integration of generative audio capabilities, and they may shape the future of both music production and human-AI collaboration.

References

[1]. Maganioti, A.E., Chrissanthi, H.D., Charalabos, P.C., Andreas, R.D., George, P.N. and Christos, C.N. (2010)

[2]. Verma, S. (2021) Artificial intelligence and music: History and the future perceptive. International Journal of Applied Research, 7(2), 272-275.

[3]. Hiller, L. (2025) Electronic music. In Encyclopedia Britannica.

[4]. Ji, S., Luo, J. and Yang, X. (2020) A comprehensive survey on deep music generation: Multi-level representations, algorithms, evaluations, and future directions. arXiv preprint arXiv:2011.06801.

[5]. Jarrett, S. and Day, H. (2024) Music composition for dummies. John Wiley & Sons.

[6]. Efe, M. The History of Artificial Intelligence in Music. MÜZİK ve GÜZEL SANATLAR EĞİTİMİ, 33.

[7]. Fox, M., Vaidyanathan, G. and Breese, J.L. (2024) The impact of artificial intelligence on musicians. Issues in Information Systems, 25(3).

[8]. Martin, E. and Avila Rojas, L. O. (2022) Tools for AI Music Creatives: Mapping the field.

[9]. Gera, S. (2025) The Impact of Artificial Intelligence on Music Production: Creative Potential, Ethical Dilemmas, and the Future of the Industry.

[10]. Geelen, T.V. (2020) Motivations for using Artificial Intelligence in the popular music composition and production process (Master's thesis).

[11]. Milošević, M., Lukić, D., Ostojić, G., Lazarević, M., and Antić, A. (2022) Application of cloud-based machine learning in cutting tool condition monitoring. Journal of Production Engineering, 20-24.

[12]. Borra, P. (2024) Advancing data science and AI with azure machine learning: A comprehensive review. International Journal of Research Publication and Reviews, 5(6), 1825-1831.

[13]. Turgay, E. Y. (2024) The Use of Artificial Intelligence Techniques for the Creation of Soundtracks from Videos.

[14]. Karpov, N. (2020) Artificial Intelligence for Music Composing: Future Scenario Analysis.

[15]. Frid, E., Gomes, C. and Jin, Z. (2020) Music creation by example. In Proceedings of the 2020 CHI conference on human factors in computing systems (pp. 1-13).

Cite this article

Yan, A.S.T. (2025). Comparison of Music Composing Software: Amper Music, MLstudio and AIVA. Applied and Computational Engineering, 166, 169-176.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of CONF-SEML 2025 Symposium: Machine Learning Theory and Applications

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (see the Open Access Policy for details).
