1. Introduction

A bone fracture is a partial or complete break in a bone, and it can seriously harm a person's health. For example, research shows that the changes caused by an isolated long-bone fracture may lead to secondary cardiac injury [1]. The proportion of elderly people is expected to double by 2050, and the incidence of bone fractures is expected to rise, so fracture healing is becoming a growing public health problem [2]. Many researchers are therefore looking for effective ways to improve fracture treatment, and some have begun to integrate Artificial Intelligence (AI) into it. AI offers several advantages in clinical care: it can change the dynamics of health care delivery and has the potential to improve patient safety outcomes [3]. It can also help clinicians make better decisions and has contributed to drug development [3]. In addition, AI systems can operate around the clock, so they can support patients when doctors are off duty.

There are already many successful cases of AI helping doctors treat their patients. For example, deep-learning-based AI is quickly closing the performance gap with human experts [4]; in breast cancer screening, a stand-alone AI system has performed comparably to radiologists [4]. Other researchers have built a heart disease prediction system using data mining tools and techniques [5]. It is therefore unsurprising that many researchers want to use machine learning to support fracture care and help address the public health burden created by the growing number of fracture patients. For example, many models have been built to help doctors classify fracture types. However, training such models requires a large number of X-ray images of fractured bones, and this data is hard to collect because the number of reported cases is limited and data is difficult to share between countries. This data shortage seriously impedes the development of machine-learning-assisted fracture care.

To address this limitation and obtain abundant data for model building, this study built a generative model that produces bone fracture X-ray images using a Deep Convolutional Generative Adversarial Network (DCGAN). A dataset of bone fracture X-ray images was collected from Kaggle, and five-layer networks were built for both the generator and the discriminator. The model was trained for 900 epochs. In each training step, the generator first produces fake images from random noise. The discriminator then classifies the real and fake images, yielding a real loss and a fake loss; their sum is the discriminator loss, which is used to update the discriminator. Next, the generator produces new fake images from fresh random noise, the discriminator classifies them, and the resulting generator loss is used to update the generator. The discriminator is trained to tell real images from generated ones, while the generator is trained to produce images that the discriminator classifies as real. As the generator improves, the discriminator must become better at distinguishing real from fake images, and a stronger discriminator in turn pushes the generator to produce more realistic images. At the end of training, the binary cross-entropy (BCE) loss was 0.8281 for the discriminator and 1.9012 for the generator.
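The training step described above can be sketched in PyTorch as follows. This is a minimal illustration under stated assumptions, not the study's actual code: the noise shape `(N, latent_dim, 1, 1)`, the labels 1 for real and 0 for fake, and the use of one BCE criterion for both networks are assumptions, and the tiny `toy_g`/`toy_d` networks are hypothetical stand-ins used only to make the sketch runnable.

```python
import torch
import torch.nn as nn


def train_step(generator, discriminator, opt_g, opt_d, real, latent_dim=100):
    """One GAN iteration: update D on real + fake images, then update G."""
    criterion = nn.BCELoss()
    n = real.size(0)
    real_labels = torch.ones(n, device=real.device)
    fake_labels = torch.zeros(n, device=real.device)

    # 1) Generate fake images from random noise
    noise = torch.randn(n, latent_dim, 1, 1, device=real.device)
    fake = generator(noise)

    # 2) Discriminator loss = real loss + fake loss; detach so G is not updated here
    loss_real = criterion(discriminator(real).view(-1), real_labels)
    loss_fake = criterion(discriminator(fake.detach()).view(-1), fake_labels)
    d_loss = loss_real + loss_fake
    opt_d.zero_grad()
    d_loss.backward()
    opt_d.step()

    # 3) Fresh noise -> new fakes; G is rewarded when D scores them as real
    noise = torch.randn(n, latent_dim, 1, 1, device=real.device)
    g_loss = criterion(discriminator(generator(noise)).view(-1), real_labels)
    opt_g.zero_grad()
    g_loss.backward()
    opt_g.step()
    return d_loss.item(), g_loss.item()


# Demo with hypothetical toy networks on random 3x4x4 "images"
torch.manual_seed(0)
LATENT = 16
toy_g = nn.Sequential(nn.ConvTranspose2d(LATENT, 3, 4, 1, 0), nn.Tanh())  # noise -> 3x4x4
toy_d = nn.Sequential(nn.Conv2d(3, 1, 4, 1, 0), nn.Sigmoid())             # 3x4x4 -> 1x1x1
opt_g = torch.optim.Adam(toy_g.parameters(), lr=0.002, betas=(0.5, 0.999))
opt_d = torch.optim.Adam(toy_d.parameters(), lr=0.002, betas=(0.5, 0.999))
real_batch = torch.rand(8, 3, 4, 4) * 2 - 1
d_loss, g_loss = train_step(toy_g, toy_d, opt_g, opt_d, real_batch, latent_dim=LATENT)
print(f"D loss {d_loss:.4f}, G loss {g_loss:.4f}")
```

Detaching the fake batch in the discriminator update keeps that update from changing the generator; the generator is only updated through its own loss in the final stage.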

2. Method

2.1. Dataset description and preprocessing

The dataset used in this study comes from the Kaggle dataset "Bone fracture detection using X-rays" [6]. The original dataset was built for training image classifiers that detect fractures in X-ray images, and it contains fractured and non-fractured X-ray images of different joints in the upper extremities. Because the goal of this study is a generator that produces images of fractured bones, only the fractured images were used; the non-fractured images are not needed for this purpose. In total, 4480 images were used. Image sizes in the dataset are not uniform, but most images are 224×224 RGB images. Figure 1 shows some sample images from the collected dataset.

Figure 1. Sample images from the collected dataset.

The original data was also preprocessed. Each image was resized and center-cropped to 64×64 with the Resize and CenterCrop transforms to reduce computational cost, and then normalized with the Normalize transform.

2.2. GAN model

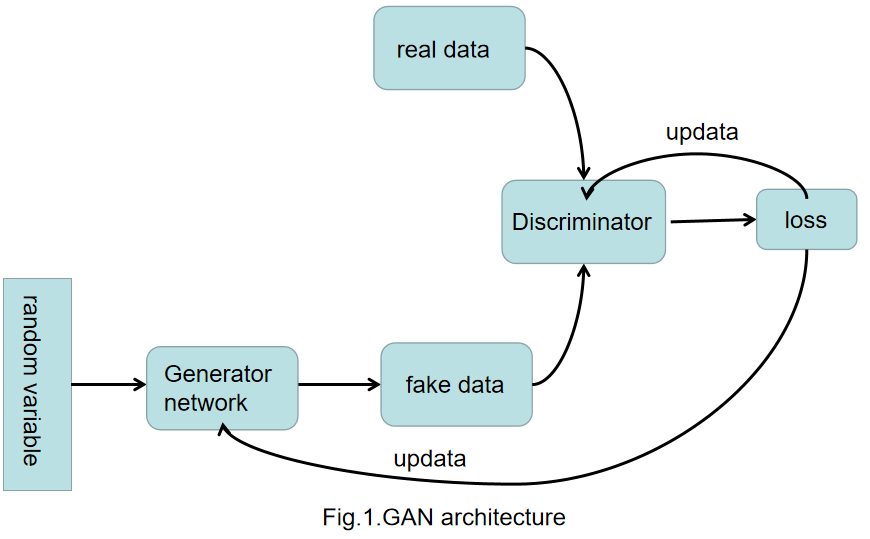

Generative Adversarial Networks (GANs) were introduced by Ian Goodfellow et al. in 2014 [7]. GANs are based on a two-player minimax, zero-sum game [8] played by their two main networks, the Generator and the Discriminator. The Generator learns to produce fake data that can deceive the Discriminator, while the Discriminator learns to distinguish fake data from real data [8].

Figure 2. The architecture of the GAN.
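The minimax game described above is usually written with the value function of Goodfellow et al. [7], where $D(x)$ is the Discriminator's probability that $x$ is real and $G(z)$ is the image generated from noise $z$:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

With BCE loss and labels 1 (real) and 0 (fake), the Discriminator minimizes the sum of a real-loss and a fake-loss term over a batch of $m$ real images $x_i$ and $m$ noise vectors $z_i$. For the Generator, the common non-saturating variant is shown; that this study used exactly this form is an assumption:

$$
\mathcal{L}_D = -\frac{1}{m}\sum_{i=1}^{m}\log D(x_i) - \frac{1}{m}\sum_{i=1}^{m}\log\left(1 - D(G(z_i))\right), \qquad
\mathcal{L}_G = -\frac{1}{m}\sum_{i=1}^{m}\log D(G(z_i))
$$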

Figure 2 shows the GAN architecture. First, random noise is sampled and fed into the Generator to produce fake data. The fake data is then passed to the Discriminator, a binary classifier that distinguishes fake data from real data. The Discriminator's output is used to compute the Generator's loss, which is fed back to the Generator and used to update it.
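As a small worked illustration of how the Discriminator's outputs become losses, the snippet below applies `torch.nn.BCELoss` to made-up Discriminator probabilities (the values 0.9, 0.8, 0.2 and 0.4 are hypothetical). Scoring fake samples against the "real" label when computing the Generator loss is the common DCGAN convention and is assumed here.

```python
import math

import torch
import torch.nn as nn

bce = nn.BCELoss()  # mean binary cross-entropy over the batch

# Hypothetical Discriminator outputs: probability that each image is real
d_on_real = torch.tensor([0.9, 0.8])
d_on_fake = torch.tensor([0.2, 0.4])
ones, zeros = torch.ones(2), torch.zeros(2)

# Discriminator loss = real loss + fake loss
d_loss = bce(d_on_real, ones) + bce(d_on_fake, zeros)
# Generator loss: fake images are scored against the "real" label
g_loss = bce(d_on_fake, ones)

# The same quantities computed by hand from -log terms
manual_d = -(math.log(0.9) + math.log(0.8)) / 2 - (math.log(0.8) + math.log(0.6)) / 2
manual_g = -(math.log(0.2) + math.log(0.4)) / 2
print(round(d_loss.item(), 4), round(manual_d, 4))
print(round(g_loss.item(), 4), round(manual_g, 4))
```

A Discriminator that confidently rejects the fakes (low scores on `d_on_fake`) therefore produces a large Generator loss, which is the signal fed back to the Generator.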

The Deep Convolutional Generative Adversarial Network (DCGAN) is an improved model based on the GAN. DCGAN was proposed by Radford et al., who introduced deep convolutional layers into both the Generator and the Discriminator [8]. The main motivation is that Convolutional Neural Networks (CNNs) perform better on images than the Multilayer Perceptrons (MLPs) used in the original GAN architecture [8]. The strength of CNN models has also been demonstrated in many other computer vision tasks, including medical image segmentation [9, 10].

In this study, the Generator and the Discriminator were each built as five-layer CNNs. The Generator uses ConvTranspose2d() from torch.nn to build fractionally-strided convolution layers, each followed by BatchNorm2d() for normalization and ReLU() as the activation function; the last layer instead uses Tanh() as its activation function. The Discriminator uses Conv2d() from torch.nn to build convolution layers, again with BatchNorm2d() for normalization but with SiLU() instead of ReLU() as the activation function; its last layer uses Sigmoid() as the activation function.
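The five-layer networks described above might be written as follows. This is a sketch, not the author's code: the latent size of 100, the feature widths of 64, the 4×4 kernels with stride 2 and padding 1, and omitting BatchNorm2d() in the first Discriminator layer (as in the original DCGAN design) are all assumptions, chosen so that a 1×1 noise map grows to a 64×64 RGB image and shrinks back to a single probability.

```python
import torch
import torch.nn as nn

LATENT_DIM, G_FEAT, D_FEAT, CHANNELS = 100, 64, 64, 3  # assumed sizes


def g_block(in_c, out_c, stride, padding):
    # Fractionally-strided convolution -> BatchNorm -> ReLU
    return [nn.ConvTranspose2d(in_c, out_c, 4, stride, padding, bias=False),
            nn.BatchNorm2d(out_c), nn.ReLU(inplace=True)]


def d_block(in_c, out_c, batch_norm=True):
    # Strided convolution -> (optional) BatchNorm -> SiLU
    layers = [nn.Conv2d(in_c, out_c, 4, 2, 1, bias=False)]
    if batch_norm:
        layers.append(nn.BatchNorm2d(out_c))
    layers.append(nn.SiLU())
    return layers


generator = nn.Sequential(
    *g_block(LATENT_DIM, G_FEAT * 8, 1, 0),                     # 1x1 -> 4x4
    *g_block(G_FEAT * 8, G_FEAT * 4, 2, 1),                     # 4 -> 8
    *g_block(G_FEAT * 4, G_FEAT * 2, 2, 1),                     # 8 -> 16
    *g_block(G_FEAT * 2, G_FEAT, 2, 1),                         # 16 -> 32
    nn.ConvTranspose2d(G_FEAT, CHANNELS, 4, 2, 1, bias=False),  # 32 -> 64
    nn.Tanh(),
)

discriminator = nn.Sequential(
    *d_block(CHANNELS, D_FEAT, batch_norm=False),   # 64 -> 32
    *d_block(D_FEAT, D_FEAT * 2),                   # 32 -> 16
    *d_block(D_FEAT * 2, D_FEAT * 4),               # 16 -> 8
    *d_block(D_FEAT * 4, D_FEAT * 8),               # 8 -> 4
    nn.Conv2d(D_FEAT * 8, 1, 4, 1, 0, bias=False),  # 4 -> 1
    nn.Sigmoid(),
)

z = torch.randn(2, LATENT_DIM, 1, 1)
fake = generator(z)                      # (2, 3, 64, 64), values in [-1, 1]
scores = discriminator(fake).view(-1)    # (2,), probabilities of "real"
print(tuple(fake.shape), tuple(scores.shape))
```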

2.3. Implementation details

This study used PyTorch to build the model. The code was run on Kaggle using an NVIDIA P100 GPU. The loss function is binary cross-entropy (BCE) loss. The learning rate is 0.002 and the batch size is 32. Adaptive Moment Estimation (Adam) was used as the optimizer via optim.Adam(), with betas of 0.5 and 0.999. The model was trained for 900 epochs in total.
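The reported training settings can be collected into a configuration sketch like the one below. Only the learning rate, betas, batch size, epoch count and BCE loss come from this study; the stand-in networks, the placeholder tensor dataset, and `drop_last=True` are hypothetical choices made so the snippet runs on its own.

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset

# Settings reported in this study
LEARNING_RATE = 0.002
BETAS = (0.5, 0.999)
BATCH_SIZE = 32
EPOCHS = 900

# Hypothetical stand-ins for the five-layer Generator and Discriminator
generator = nn.Sequential(nn.ConvTranspose2d(100, 3, 4, 1, 0), nn.Tanh())
discriminator = nn.Sequential(nn.Conv2d(3, 1, 4, 1, 0), nn.Sigmoid())

criterion = nn.BCELoss()  # binary cross-entropy on D's sigmoid outputs
opt_g = optim.Adam(generator.parameters(), lr=LEARNING_RATE, betas=BETAS)
opt_d = optim.Adam(discriminator.parameters(), lr=LEARNING_RATE, betas=BETAS)

# Placeholder data: 100 random tensors instead of the preprocessed X-rays
placeholder = TensorDataset(torch.rand(100, 3, 4, 4) * 2 - 1)
loader = DataLoader(placeholder, batch_size=BATCH_SIZE, shuffle=True, drop_last=True)

first_batch = next(iter(loader))[0]
print(len(loader), tuple(first_batch.shape))
```

With the real 4480-image dataset, each epoch would contain 4480 / 32 = 140 batches, so 900 epochs correspond to 126,000 optimizer steps per network.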

3. Results and discussion

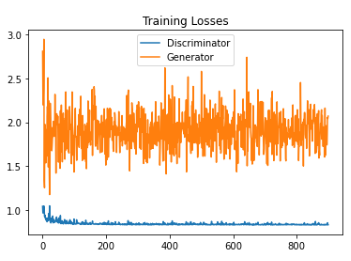

This study used the DCGAN model to generate X-ray images of fractured bones. Figure 3 shows the losses of the Generator and the Discriminator over the whole training process.

Figure 3. The training losses of the Discriminator and the Generator.

The orange line shows the training loss of the Generator. At the start of training, the Generator loss was 3.1071, and it then fluctuated between 1.2 and 2.9. After 100 epochs, it fluctuated between 1.5 and 2.2, and the range of the Generator loss kept narrowing as training progressed. At the end of training, the Generator loss was 1.9012. The green line shows the training loss of the Discriminator. In the first epoch, the Discriminator loss was 1.6084. Overall, the Discriminator loss decreased until about epoch 210 and afterwards fluctuated around 0.84. At the end of training, the Discriminator loss was 0.8281.

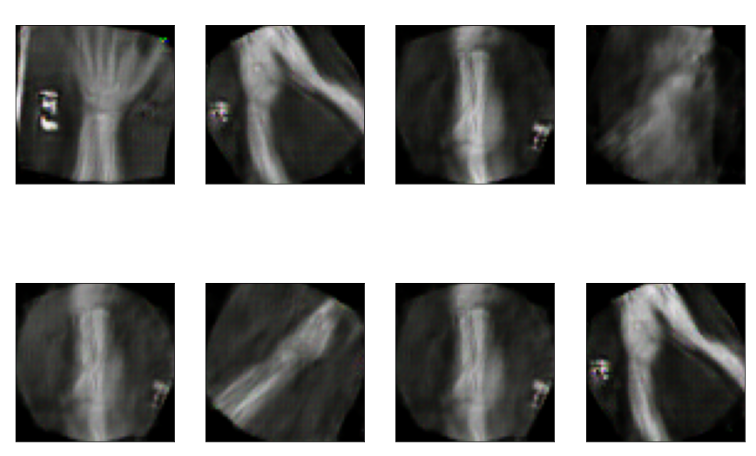

Figure 4. The final generated pictures.

Figure 5. The original data.

Figure 4 shows the images generated at epoch 900, and Figure 5 shows the original data. First, the generated images have very low resolution, and the Generator tends to produce simple images. Training the model on datasets separated by fracture category may improve its generative ability, because images of different fractured bone parts differ greatly from one another, which makes training harder. Partitioning the data by fracture type may also address another issue observed in this study: the model generated some fracture types more frequently than others. In addition, this study only used the DCGAN model; trying other GAN models may further improve generation quality. Finally, the study used a relatively small dataset, and some of its images were created by rotating other images in the same dataset. A larger dataset with higher-quality images could also improve performance.

4. Conclusion

To help researchers who use AI to address the public health problem of bone fractures, this study tackles a common obstacle they face: the lack of a large number of easily obtainable X-ray images of fractured bones. A DCGAN model was built to generate such images and trained for 900 epochs in total; at the end of training, the Discriminator loss was 0.8281 and the Generator loss was 1.9012. A limitation of this study is that it used only one kind of generative model and trained it for only 900 epochs, so the model has likely not reached its best performance and leaves substantial room for improvement. In future work, other GANs such as BEGAN and SRGAN will be considered for building the generative model, and other optimizers and loss functions will be tried to improve the results. The model will also be used to generate other kinds of medical image data, helping researchers who lack case images when training AI models that support doctors in treating their patients.

References

[1]. Weber B Lackner I Knecht D et al. 2020 Systemic and cardiac alterations after long bone fracture Shock (Augusta, Ga.) 54(6) 761

[2]. Cheung W H et al. 2016 Fracture healing in osteoporotic bone Injury: International Journal of the Care of the Injured 47 S21-S26

[3]. Choudhury A Asan O 2020 Role of Artificial Intelligence in Patient Safety Outcomes: Systematic Literature Review JMIR Medical Informatics 8(7) e18599

[4]. Rodriguez-Ruiz A Lång K Gubern-Merida A et al. 2019 Stand-alone artificial intelligence for breast cancer detection in mammography: comparison with 101 radiologists JNCI: Journal of the National Cancer Institute 111(9) 916-922

[5]. Kiranjeet K Lalit M S 2016 Heart Disease Prediction System Using PCA and SVM Classification International Journal of Advance Research Ideas and Innovations in Technology 2(3)

[6]. Kaggle 2023 Bone fracture detection using xrays. https://www.kaggle.com/datasets/vuppalaadithyasairam/bone-fracture-detection-using-xrays

[7]. Goodfellow I et al. 2014 Generative Adversarial Nets Neural Information Processing Systems MIT Press

[8]. Dash A Ye J Wang G 2021 A review of Generative Adversarial Networks (GANs) and its applications in a wide variety of disciplines--From Medical to Remote Sensing arXiv preprint arXiv:2110.01442

[9]. Qiu Y Chang C S Yan J L et al. 2019 Semantic segmentation of intracranial hemorrhages in head CT scans 2019 IEEE 10th International Conference on Software Engineering and Service Science (ICSESS) IEEE 112-115

[10]. Xie L Wisse L E M Wang J et al. 2023 Deep label fusion: A generalizable hybrid multi-atlas and deep convolutional neural network for medical image segmentation Medical Image Analysis 83: 102683

Cite this article

Bai, Y. (2023). Bone Fractured X-ray Data Generation Based on DCGAN. Applied and Computational Engineering, 8, 162-167.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 2023 International Conference on Software Engineering and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
