Volume 151

Published in May 2025

Volume title: Proceedings of the 3rd International Conference on Software Engineering and Machine Learning

With the deepening exploration of marine resources and the global emphasis on sustainable development, intelligent fishery has emerged as a critical domain for advancing ecological conservation and operational efficiency. Underwater vision technology, a cornerstone of intelligent fishery systems, encounters substantial challenges due to complex underwater environments—such as light attenuation, turbidity, biofouling, and dynamic currents—which degrade image quality and impede real-time decision-making. To address these limitations, this paper systematically reviews the integration of OpenCV-based image processing techniques with edge computing frameworks, which collectively enhance the robustness and adaptability of underwater visual systems. OpenCV’s advanced algorithms, including Contrast-Limited Adaptive Histogram Equalization for low-light enhancement, geometric transformations for distortion correction, and YOLO-based object detection, have been shown to significantly improve image clarity and target recognition accuracy. Simultaneously, edge computing alleviates latency and bandwidth constraints by enabling real-time data processing on embedded devices, achieving sub-200 ms response times for critical tasks such as dissolved oxygen monitoring and fish behavior analysis. Field validations underscore performance improvements, such as 92% recognition accuracy in coral reef monitoring and 85% mean Average Precision for aquatic species detection using MobileNet-SSD models. Despite these advancements, challenges remain in extreme conditions, computational resource optimization for edge devices, and the need for interdisciplinary collaboration to integrate marine biology insights into algorithmic design. Future research directions highlight hybrid architectures combining physics-based restoration with quantized deep learning, bio-inspired optical sensors, and socio-technical frameworks to ensure equitable technology adoption.
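
The contrast-limiting step behind Contrast-Limited Adaptive Histogram Equalization can be sketched on a single tile as follows. This is an illustrative pure-Python sketch, not the paper's pipeline: the function name `clipped_equalize`, the toy tile, and the clip limits are assumptions, and full CLAHE additionally interpolates between neighbouring tiles (OpenCV exposes the complete method through `cv2.createCLAHE`).

```python
def clipped_equalize(tile, clip_limit, levels=256):
    """Equalize one tile of integer gray levels with a clipped histogram.

    Hypothetical helper: it covers only the clip-and-redistribute step,
    not the tiling and bilinear blending of full CLAHE.
    """
    hist = [0] * levels
    for v in tile:
        hist[v] += 1

    # Clip every bin at clip_limit and collect the excess counts.
    excess = sum(max(0, h - clip_limit) for h in hist)
    hist = [min(h, clip_limit) for h in hist]

    # Spread the excess evenly over all bins so the total count is kept.
    share, remainder = divmod(excess, levels)
    hist = [h + share + (1 if i < remainder else 0) for i, h in enumerate(hist)]

    # Build the cumulative distribution and use it as the gray-level mapping.
    cdf, running = [], 0
    for h in hist:
        running += h
        cdf.append(running)
    n = len(tile)
    return [round((levels - 1) * cdf[v] / n) for v in tile]


# Toy low-contrast tile: 64 pixels confined to gray levels 100..115.
tile = [100 + (i % 16) for i in range(64)]
limited = clipped_equalize(tile, clip_limit=3)
unlimited = clipped_equalize(tile, clip_limit=64)  # limit too high to clip
print(max(tile) - min(tile), max(limited) - min(limited), max(unlimited) - min(unlimited))
```

A lower clip limit stretches contrast less aggressively than plain equalization, which is why the method is less prone to amplifying noise in murky regions.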

This paper presents a novel data augmentation strategy that combines GAN-generated samples with optimized sampling to address class imbalance in image classification. Our approach significantly enhances classification accuracy on the CIFAR-10 dataset, achieving a 99.79% accuracy rate—an improvement of 43.57 percentage points over the baseline. Compared to traditional augmentation methods, our strategy better mitigates class imbalance and improves dataset diversity. Further validation on MNIST and STL-10 confirms the generalizability of our method.

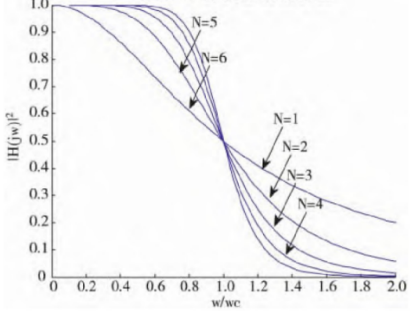

With the widespread adoption of wearable devices, pulse oximeters face challenges such as motion artifacts and environmental noise in dynamic scenarios, limiting their accuracy and reliability. This study focuses on optimizing a hybrid filtering framework combining low-pass filtering and LMS adaptive filtering to enhance the performance of photoplethysmography (PPG)-based oximetry. Utilizing a publicly available dataset from Biagetti et al., which includes PPG and triaxial accelerometer data from seven subjects under rest and exercise conditions, we implemented a dynamic low-pass filter with adjustable cutoff frequencies and an LMS algorithm with motion-dependent step size adaptation. Comparative experiments with wavelet denoising demonstrated that the proposed framework achieved a signal-to-noise ratio (SNR) of 61.37 dB, a mean squared error (MSE) of 0.0029, and a correlation coefficient (R) of 0.9849, significantly outperforming conventional methods. Results validate the hybrid framework’s effectiveness in suppressing noise while preserving physiological signal integrity, offering a robust solution for wearable health monitoring in dynamic environments.
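
The LMS stage mentioned above can be illustrated with a minimal noise-cancellation sketch. It is not the authors' implementation: the synthetic signals, the tap count, the two step sizes, and the threshold rule that switches between them are assumptions made for illustration, since the abstract only states that the step size depends on motion.

```python
import math


def make_synthetic(n, fs=50.0):
    """Hypothetical test signals: a 1.2 Hz pulse wave plus a motion artifact
    that is a filtered copy of a 2.5 Hz accelerometer-like reference."""
    clean = [math.sin(2 * math.pi * 1.2 * i / fs) for i in range(n)]
    ref = [0.8 * math.sin(2 * math.pi * 2.5 * i / fs) for i in range(n)]
    noisy = []
    for i in range(n):
        prev = ref[i - 1] if i > 0 else 0.0
        noisy.append(clean[i] + 0.6 * ref[i] + 0.3 * prev)
    return clean, ref, noisy


def lms_cancel(primary, reference, taps=4,
               mu_rest=0.005, mu_motion=0.02, motion_threshold=0.5):
    """LMS adaptive noise canceller; the error signal is the cleaned output."""
    w = [0.0] * taps
    cleaned = []
    for n in range(len(primary)):
        # Most recent `taps` reference samples, zero-padded at the start.
        x = [reference[n - k] if n - k >= 0 else 0.0 for k in range(taps)]
        artifact_estimate = sum(wi * xi for wi, xi in zip(w, x))
        e = primary[n] - artifact_estimate
        # Assumed rule: take a larger step while the reference shows strong motion.
        mu = mu_motion if abs(reference[n]) > motion_threshold else mu_rest
        w = [wi + 2 * mu * e * xi for wi, xi in zip(w, x)]
        cleaned.append(e)
    return cleaned


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


clean, ref, noisy = make_synthetic(1500)
cleaned = lms_cancel(noisy, ref)
half = len(clean) // 2  # skip the convergence transient
print(mse(noisy[half:], clean[half:]), mse(cleaned[half:], clean[half:]))
```

On real recordings the reference would come from the accelerometer channels, and the step sizes would need tuning against the signal power to keep the filter stable.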

Telomeres, specialized DNA-protein structures located at the ends of chromosomes, play critical roles in maintaining genomic stability and regulating cellular lifespan. With each cellular division, telomeres gradually shorten, eventually triggering cellular senescence or apoptosis. However, cancer cells often bypass this replicative limit through telomerase activation or alternative lengthening mechanisms, enabling indefinite proliferation and tumor progression. This review systematically discusses the complex relationships between telomeres, cellular aging, and cancer, emphasizing telomere length dynamics, regulatory enzymes such as telomerase, and influencing factors including genetic predispositions, lifestyle, and environmental stressors. It also highlights innovative technological advancements in telomere analysis and potential clinical applications in anti-aging therapies, cancer treatments, and regenerative medicine. Despite promising advances, significant challenges remain, such as ethical considerations and balancing therapeutic telomere extension against cancer risks. Future interdisciplinary research integrating molecular biology, genetics, advanced imaging, and bioinformatics is crucial for translating telomere biology into effective clinical strategies aimed at extending healthy human lifespan and combating age-related diseases.

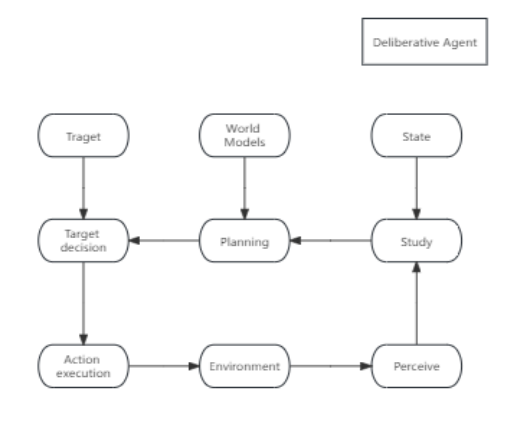

With the explosive growth of data and the increasing complexity of computing tasks, traditional computing models can no longer meet the demands. Parallel and distributed processing technologies have become key forces driving innovation in information technology. This paper reviews the research progress of intelligent computing platform technologies, focusing on parallel processing and distributed processing technologies. It primarily examines the basic principles and applications of multi-core processor technology, large-scale parallel algorithms, parallel programming, industrial computer networks, multi-agent systems, and cloud-edge-end architectures. Looking ahead, research on optimizing multi-core processors, innovating parallel algorithms, enhancing the intelligence and security of distributed systems, the deep integration of cloud-edge-end architectures with specific computing tasks, and the collaboration and planning of multi-agent systems will further improve the computational capacity, flexibility, and scalability of intelligent computing platforms.

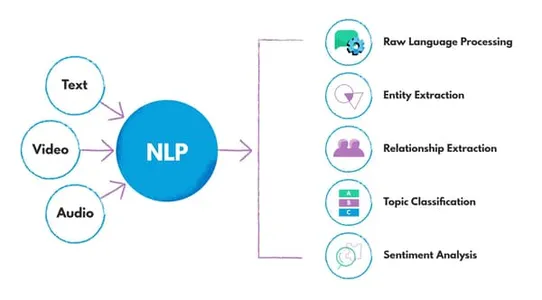

With the rapid development of social media platforms, public opinion analysis and trend forecasting have become key decision-making capabilities for governments and enterprises. In this study, a real-time public opinion monitoring system is built by integrating multimodal AI technology, in which a convolutional neural network (CNN) handles emotion classification, a topic model mines trending events, and a graph neural network (GNN) tracks propagation paths. The experiment collected data from Twitter and other platforms, applied an efficient preprocessing and feature extraction pipeline, and found that the CNN model reached 92.5% accuracy on the emotion classification task, a significant improvement over traditional methods. In the case database, typical events such as the fluctuation of public opinion during the US election and the release of new energy vehicle products were successfully identified, and the GNN model effectively predicted the diffusion trajectory of related topics. This integrated system provides accurate data for analyzing business competition and formulating public policies.

Under the impact of the digital wave, small and medium-sized theaters and live performance venues face the dual challenges of declining attendance and intensifying competition from commercial entertainment platforms. This study explores how artificial intelligence can both optimize audience expansion strategies and strengthen cultural identity, and builds a multidimensional analysis model using K-means clustering, affective semantic analysis, and random forest algorithms. Based on operational data from 37 venues in Beijing, Kuala Lumpur, and Melbourne, the study identified five types of audience groups and their behavioral characteristics, and established a model relating performance planning to attendance forecasts. Empirical data shows that personalized recommendation strategies can increase the attendance rate of target groups by 14%, and optimized programming combined with audience feedback can increase the satisfaction index by 23 percentage points. The research indicates that artificial intelligence can not only achieve precision marketing, but also strengthen recognition of the value of cultural spaces through emotional resonance analysis, and provide decision support for regional cultural venues to maintain their uniqueness in digital competition.
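
The clustering step can be sketched with a small K-means example. Everything concrete here is hypothetical: the two features (visits per year, average ticket spend), the nine toy records, the choice of three clusters instead of the study's five, and the deterministic farthest-point initialization, which is used only to keep the sketch reproducible.

```python
def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_point_init(points, k):
    """Deterministic seeding: start from the first point, then repeatedly add
    the point farthest from all centroids chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        )
    return centroids


def kmeans(points, k, max_iter=100):
    """Plain Lloyd iterations: assign to the nearest centroid, then re-average."""
    centroids = farthest_point_init(points, k)
    labels = None
    for _ in range(max_iter):
        new_labels = [
            min(range(k), key=lambda j: sq_dist(p, centroids[j])) for p in points
        ]
        if new_labels == labels:
            break  # assignments are stable, so the centroids are too
        labels = new_labels
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return labels, centroids


# Hypothetical audience records: (visits per year, average ticket spend).
audience = [
    (2, 20), (3, 25), (2, 22),     # occasional, low spend
    (12, 30), (14, 28), (13, 33),  # frequent, low spend
    (6, 90), (5, 85), (7, 95),     # moderate, premium spend
]
labels, centroids = kmeans(audience, k=3)
print(labels)
print(centroids)
```

With real venue data the features would normally be standardized first, since K-means is sensitive to differences in scale between columns.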

As the world becomes increasingly dependent on marine resources, applying automation technology in ocean engineering is becoming increasingly important. This paper discusses the latest progress and open problems of automation technology in ocean engineering. Research shows that remotely operated vehicle (ROV) technology and Digital Twin technology are gradually changing the way ocean exploration, construction, and maintenance are carried out. Accurate data acquisition and real-time monitoring significantly improve operational efficiency and safety. In sustainable ocean development, automation technology not only optimizes resource utilization, but also supports environmental monitoring, for example through intelligent monitoring systems built on remote sensing technology and sensor networks, which can help address problems of marine ecological protection. The study identifies bottlenecks including technical complexities, environmental and safety risks, and regulatory hurdles. Addressing these challenges requires technological innovation and policy development to advance together. Future research should enhance system intelligence and autonomy while fostering technical collaboration to effectively address the complexities of the marine environment.

As cybercrime continues to pose significant threats to individuals, businesses, and national security, digital forensics has become an important tool for investigating it. This article explores the application of digital forensics technology, with a focus on disk forensics, memory forensics, and network forensics. It provides an overview of common forensic techniques, including key forensic methods, their advantages, limitations, and real-world applications. The study emphasizes the latest advancements in forensic tools and technologies, including AI-driven automation and machine learning-based anomaly detection, highlighting their role in recovering digital evidence, identifying cybercrime activities, and supporting legal proceedings. Despite these advancements, challenges such as encrypted data, anti-forensic techniques, and increasingly complex network threats are also discussed. These findings emphasize the necessity of standardized forensic protocols, the integration of AI-driven automation, and improved forensic methods to enhance investigation efficiency and ensure the integrity and reliability of digital evidence in the legal environment.

The optimization and dispatching of microgrids is the main issue in achieving efficient operation of smart grids. In recent years, various optimization algorithms have been widely used in microgrid dispatching. This paper systematically reviews the research progress that has been made in this field and compares the advantages and disadvantages of different optimization methods. First, the classic intelligent optimization algorithms, such as particle swarm optimization, genetic algorithm, and differential evolution, are introduced, and their applications and improvement strategies in microgrid dispatching are discussed. Second, the optimization methods based on reinforcement learning, including deep reinforcement learning (DRL), deep deterministic policy gradient (DDPG), and proximal policy optimization (PPO), are analyzed, focusing on their advantages in dealing with high-dimensional, nonlinear, and real-time dispatching problems. Third, the ‘prediction plus optimization’ combination strategy, such as Bayesian optimization, metaheuristic optimization, and multi-scenario optimization methods based on machine learning, is discussed to deal with the uncertainty and robustness problems of microgrids. These three aspects represent the three most active lines of research on microgrid optimization. Finally, this paper summarizes the applicability of different optimization methods and looks forward to future development trends. Comprehensive analysis shows that hybrid optimization strategies (such as PSO + GOA) are also worthy of further study for improving robustness.
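
As a rough illustration of how particle swarm optimization applies to a dispatch problem, the sketch below runs a basic global-best PSO on a toy economic dispatch. The three-unit system, its quadratic cost coefficients, the demand, the output limits, the penalty weight, and the PSO hyperparameters are all hypothetical and are not taken from the reviewed literature.

```python
import random

# Hypothetical three-unit system with cost_i(p) = A[i] * p**2 + B[i] * p.
A = [0.010, 0.020, 0.015]
B = [2.0, 1.5, 1.8]
DEMAND = 150.0  # total load to be served
P_MAX = 100.0   # upper output limit of every unit (lower limit is 0)


def dispatch_cost(p1, p2):
    """Total cost when unit 3 covers the remaining demand.

    Power balance holds by construction; a penalty discourages solutions
    that push unit 3 outside its limits.
    """
    p3 = DEMAND - p1 - p2
    cost = sum(a * p * p + b * p for a, b, p in zip(A, B, (p1, p2, p3)))
    violation = max(0.0, -p3) + max(0.0, p3 - P_MAX)
    return cost + 1000.0 * violation


def pso(objective, dims=2, particles=30, iterations=200,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Basic global-best PSO with positions clamped to [0, P_MAX]."""
    rng = random.Random(seed)
    pos = [[rng.uniform(0.0, P_MAX) for _ in range(dims)] for _ in range(particles)]
    vel = [[0.0] * dims for _ in range(particles)]
    best_pos = [p[:] for p in pos]
    best_val = [objective(*p) for p in pos]
    g = min(range(particles), key=best_val.__getitem__)
    g_pos, g_val = best_pos[g][:], best_val[g]
    for _ in range(iterations):
        for i in range(particles):
            for d in range(dims):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (best_pos[i][d] - pos[i][d])
                             + c2 * r2 * (g_pos[d] - pos[i][d]))
                pos[i][d] = min(P_MAX, max(0.0, pos[i][d] + vel[i][d]))
            val = objective(*pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val


(p1, p2), cost = pso(dispatch_cost)
print(p1, p2, DEMAND - p1 - p2, cost)
```

For this toy system the equal-incremental-cost condition gives an analytic optimum of roughly 60.4, 42.7, and 46.9 units of output, which makes a convenient check on the swarm's result. Real microgrid dispatch adds storage dynamics, renewable uncertainty, and multi-period coupling that this sketch omits.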
