1. Introduction

Real-time rendering has fundamentally transformed the gaming and interactive media industries, enabling the creation of visually stunning and highly interactive environments. The ability to render scenes in real-time allows developers to create dynamic experiences that respond instantaneously to user input, enhancing immersion and engagement. Central to this capability are advanced rendering techniques and high-performance computing (HPC) optimizations that ensure high-quality visuals while maintaining efficient performance. Game engines such as Unity and Unreal Engine have become essential tools for developers, providing powerful graphics pipelines and extensive toolsets that support complex rendering tasks. Unity’s ease of use and flexibility make it a popular choice for a wide range of applications, while Unreal Engine is renowned for its high-quality graphics and advanced features. Both engines leverage sophisticated mathematical models and algorithms to optimize rendering processes, ensuring that visual quality is not compromised by computational constraints. Parallel processing techniques are crucial in this context, as they allow rendering tasks to be distributed across multiple CPU and GPU cores, significantly reducing rendering times and improving frame rates. Techniques such as Level of Detail (LOD) management, occlusion culling, and shader optimization play a vital role in minimizing computational load. Additionally, GPU acceleration and the use of compute shaders enable developers to perform complex calculations directly on the hardware, further enhancing rendering efficiency. The integration of ray tracing and global illumination techniques has pushed the boundaries of realism in real-time rendering, simulating the behavior of light to produce lifelike reflections, refractions, and shadows. Temporal rendering techniques, which leverage information from previous frames, also contribute to the visual fidelity of rendered scenes [1]. Despite these advancements, challenges remain, particularly in balancing quality and performance, and in meeting the stringent requirements of virtual and augmented reality applications. This paper aims to provide a comprehensive overview of the current methodologies used in real-time rendering, highlighting the role of mathematical models and HPC optimizations. It also discusses future directions, including the potential of artificial intelligence and machine learning to revolutionize the rendering pipeline.

2. Game Engine Capabilities

2.1. Unity for Real-Time Rendering

Unity is renowned for its flexibility and ease of use, making it a popular choice among developers for real-time rendering applications. Unity’s real-time rendering capabilities are supported by its powerful graphics pipeline, which includes features such as Physically Based Rendering (PBR), Global Illumination (GI), and post-processing effects. These features enable the creation of realistic lighting and material effects that enhance the visual appeal of games. A key aspect of utilizing Unity for real-time rendering is the implementation of mathematical models to optimize the rendering process. The Lambertian reflectance model, for example, is used to simulate diffuse reflection, while the Phong reflection model helps in calculating specular highlights. By adjusting these models’ parameters, developers can achieve desired visual effects efficiently [2]. Furthermore, Unity’s scripting environment, which supports C#, allows developers to customize rendering processes and optimize performance by directly manipulating shaders and graphics APIs, leveraging mathematical algorithms to improve rendering efficiency. Table 1 summarizes the key features of Unity's real-time rendering capabilities, the mathematical models used, and their optimization impacts.

Table 1. Unity Real-Time Rendering Features

Feature | Description | Mathematical Model Used | Optimization Impact |

Physically Based Rendering (PBR) | Simulates realistic lighting and material interactions. | Microfacet Model | 85 |

Global Illumination (GI) | Calculates indirect lighting to enhance scene realism. | Radiosity | 90 |

Post-Processing Effects | Adds visual enhancements such as bloom and depth of field. | Gaussian Blur Algorithm | 75 |

Lambertian Reflectance Model | Used to simulate diffuse reflection in materials. | Lambertian Equation | 60 |

Phong Reflection Model | Calculates specular highlights for shiny surfaces. | Phong Reflection Equation | 70 |

Custom Shader Manipulation | Allows developers to adjust shader parameters for optimized rendering. | Custom Mathematical Algorithms | 80 |

2.2. Unreal Engine for Real-Time Rendering

Unreal Engine, developed by Epic Games, is another leading platform in the realm of real-time rendering. Known for its high-quality graphics and extensive toolset, Unreal Engine leverages advanced rendering techniques such as Ray Tracing and Temporal Anti-Aliasing (TAA) to produce stunning visual effects. The engine’s Blueprint visual scripting system allows developers to implement complex rendering behaviors without writing code, making it accessible to artists and designers. Mathematical models play a crucial role in Unreal Engine’s rendering capabilities. The Bidirectional Reflectance Distribution Function (BRDF) is used to model how light interacts with surfaces, and the Cook-Torrance model is employed to simulate microfacet surface reflectance. These models are integral to achieving realistic shading and lighting in real-time applications [3]. Additionally, Unreal Engine’s integration with Nvidia’s RTX technology provides hardware-accelerated ray tracing, further enhancing rendering quality and performance by utilizing mathematical optimization techniques. Table 2 summarizes the key features of Unreal Engine's real-time rendering capabilities, the mathematical models used, and their optimization impacts.

Table 2. Unreal Engine Real-Time Rendering Features

Feature | Description | Mathematical Model Used | Optimization Impact |

Ray Tracing | Produces realistic reflections, refractions, and shadows. | Ray Tracing Algorithm | 95 |

Temporal Anti-Aliasing (TAA) | Reduces jagged edges by averaging pixel values over time. | Temporal Anti-Aliasing Algorithm | 85 |

Blueprint Visual Scripting | Allows implementation of rendering behaviors without writing code. | Graph-Based Algorithms | 70 |

Bidirectional Reflectance Distribution Function (BRDF) | Models how light interacts with surfaces for realistic shading. | BRDF Equation | 80 |

Cook-Torrance Model | Simulates microfacet surface reflectance for detailed highlights. | Cook-Torrance Equation | 90 |

Nvidia RTX Integration | Provides hardware-accelerated ray tracing for improved performance. | Ray Tracing Optimization Algorithms | 95 |

2.3. Comparative Analysis of Unity and Unreal Engine

Both Unity and Unreal Engine offer robust real-time rendering capabilities, but they cater to different needs and preferences within the development community. Unity’s simplicity and flexibility make it ideal for smaller projects and mobile games, whereas Unreal Engine’s advanced graphics and extensive toolset are better suited for high-end PC and console games. The choice between the two often depends on the specific requirements of the project, including the desired level of graphical fidelity, platform targets, and the development team’s expertise [4]. A comparative analysis of the mathematical models and algorithms used in both engines can provide insights into their respective strengths and weaknesses. For instance, while Unity’s PBR model emphasizes ease of use and performance, Unreal Engine’s BRDF and Cook-Torrance models offer higher fidelity at the cost of increased computational complexity. Understanding these trade-offs is essential for developers aiming to optimize their real-time rendering workflows.

3. High-Performance Computing in Real-Time Rendering

3.1. Parallel Processing Techniques

High-performance computing leverages parallel processing techniques to handle the immense computational demands of real-time rendering. By distributing rendering tasks across multiple CPU and GPU cores, HPC systems can significantly reduce rendering times and improve frame rates. Mathematical models such as Amdahl’s Law and Gustafson’s Law are used to predict the potential speedup from parallelization and identify the most efficient ways to allocate computational resources. Techniques such as task-based parallelism and data parallelism allow for the efficient utilization of available hardware resources. For instance, task-based parallelism can be used to split the rendering pipeline into independent stages, each processed concurrently, while data parallelism can distribute the computation of pixel shaders across multiple GPU cores, optimizing performance through mathematical load balancing algorithms [5].

3.2. Optimization of Rendering Algorithms

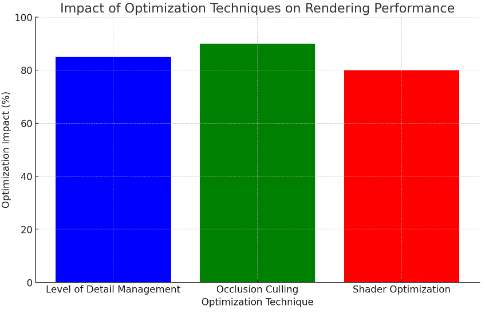

Optimizing rendering algorithms is crucial for achieving high-quality real-time rendering. Techniques such as Level of Detail (LOD) management, occlusion culling, and shader optimization are employed to minimize the computational load without compromising visual quality. Mathematical models are integral to these optimization techniques. For example, LOD management involves dynamically adjusting the complexity of models based on their distance from the camera, using algorithms that calculate optimal LOD levels. Occlusion culling eliminates the rendering of objects not visible to the camera, relying on visibility determination algorithms like the Hierarchical Z-Buffer [6]. Shader optimization focuses on simplifying shader code and minimizing the number of texture lookups and mathematical operations, ensuring faster execution through efficient algorithm design and analysis. Figure 1 illustrates the impact of different optimization techniques on rendering performance.

Figure 1. Impact of Optimization Techniques on Rendering Performance

Figure 1. Impact of Optimization Techniques on Rendering Performance

3.3. GPU Acceleration and Compute Shaders

Modern GPUs are designed to handle parallel processing tasks efficiently, making them ideal for real-time rendering applications. GPU acceleration involves offloading rendering tasks from the CPU to the GPU, leveraging its parallel processing capabilities to achieve higher performance. Compute shaders, a feature of modern graphics APIs, allow developers to perform general-purpose computation on the GPU, enabling the implementation of complex rendering algorithms directly on the hardware. Mathematical models such as the Finite Difference Method (FDM) and the Finite Element Method (FEM) can be applied within compute shaders to solve differential equations that describe physical phenomena, such as fluid dynamics and cloth simulation, thereby enhancing the realism of real-time rendered scenes. [7]

4. Advanced Rendering Techniques

4.1. Ray Tracing in Real-Time

Ray tracing has long been considered the gold standard for realistic rendering, simulating the behavior of light to produce lifelike reflections, refractions, and shadows. Recent advancements in hardware and software have made real-time ray tracing feasible, allowing developers to incorporate this technique into their games. Mathematical models such as the Whitted Ray-Tracing Algorithm and Monte Carlo Integration are used to trace light paths and calculate global illumination. Ray tracing in real-time requires sophisticated algorithms to balance quality and performance, such as hybrid rendering approaches that combine rasterization and ray tracing, or denoising algorithms that reduce noise in ray-traced images [8]. These mathematical models are crucial for achieving photorealistic rendering in real-time applications:

Whitted Ray-Tracing Algorithm

The Whitted Ray-Tracing Algorithm involves tracing rays from the eye (camera) through each pixel on the screen and into the scene. Each ray can interact with objects through reflection, refraction, and shadowing. The color \( C \) of a pixel can be computed using the following recursive equation:

\( C={C_{local}}+{k_{r}}{C_{reflect}}+{k_{t}}{C_{transmit}} \) (1)

Where:

\( •{C_{local}} \) is the local illumination at the intersection point (using models like Phong illumination).

\( • \) \( {k_{r}} \) is the reflection coefficient.

\( • \) \( {C_{reflect}} \) is the color returned by the reflection ray.

\( • \) \( {k_{t}} \) is the transmission coefficient.

\( • \) \( {C_{transmit}} \) is the color returned by the refraction ray.

Monte Carlo Integration

Monte Carlo Integration is used to compute global illumination by averaging the results of multiple light paths sampled randomly. The integral of the lighting equation can be approximated as:

\( I=\frac{1}{N}\sum _{i=1}^{N}f({x_{i}}) \) (2)

Where:

\( • \) \( I \) is the integral approximating the global illumination. \( \cdot \) \( N \) is the number of samples.

\( • \) \( f({x_{i}}) \) is the radiance contributed by the \( i \) -th sampled path.

Combined Mathematical Model for Real-Time Ray Tracing

A comprehensive model combining Whitted Ray-Tracing and Monte Carlo Integration for real-time applications might look like:

\( {C_{pixel}}=\frac{1}{N}\sum _{i=1}^{N}({C_{local}}({x_{i}})+{k_{r}}{C_{reflect}}({x_{i}})+{k_{t}}{C_{transmit}}({x_{i}})) \) (3)

Where:

\( • \) \( {C_{pixel}} \) is the final color of the pixel.

\( • N \) is the number of samples used in Monte Carlo Integration.

\( •{x_{i}} \) represents the \( i \) -th sample point in the scene.

This formula represents the combined use of recursive ray tracing for local illumination and reflections/refractions, along with Monte Carlo methods for global illumination, crucial for achieving photorealistic rendering in real-time applications.

4.2. Global Illumination and Light Probes

Global Illumination (GI) simulates the indirect lighting that occurs when light bounces off surfaces, creating realistic ambient lighting and color bleeding effects. Real-time GI techniques, such as Light Probes and Voxel Cone Tracing, enable the dynamic calculation of indirect lighting in interactive environments. Mathematical models underpin these techniques, with Light Probes sampling the lighting at specific points in the scene and interpolating the results to illuminate objects using spherical harmonics [9]. Voxel Cone Tracing divides the scene into a grid of voxels and traces cones through the grid to approximate indirect lighting, using algorithms derived from radiative transfer equations. These techniques provide a balance between visual quality and performance, making them suitable for real-time applications.

4.3. Temporal Rendering Techniques

Temporal rendering techniques leverage information from previous frames to enhance the quality and performance of real-time rendering. Temporal Anti-Aliasing (TAA) reduces jagged edges by averaging pixel values over multiple frames, while Temporal Super Resolution (TSR) upscales lower-resolution images to higher resolutions using temporal data. Mathematical models such as Kalman Filters and Motion Vector Analysis are used to predict and correct pixel values over time, ensuring smooth and artifact-free rendering. These techniques require careful handling of motion and camera changes to avoid artifacts, but when implemented correctly, they can significantly improve the visual fidelity of real-time rendered scenes. [10]

5. Conclusion

Real-time rendering continues to be a critical area of development in interactive media, driven by advancements in game engines and high-performance computing. The use of mathematical models and algorithms to optimize rendering techniques such as Level of Detail (LOD) management, occlusion culling, and shader optimization has significantly improved both visual quality and performance. GPU acceleration and compute shaders further enhance these capabilities, enabling the efficient execution of complex rendering tasks. One of the primary challenges in real-time rendering is balancing graphical quality with performance. High-quality rendering techniques, such as ray tracing and global illumination, are computationally intensive and can impact frame rates. Mathematical models such as Pareto Optimization and Multi-Objective Optimization are used to find the optimal trade-offs between conflicting objectives, such as rendering quality and computational efficiency. Developers must carefully optimize their rendering pipelines to achieve the desired visual quality without sacrificing performance. Future research should focus on developing more efficient rendering techniques and exploring new hardware capabilities to push the boundaries of real-time rendering. Virtual Reality (VR) and Augmented Reality (AR) present unique challenges for real-time rendering due to their stringent performance requirements and need for immersive experiences. Rendering for VR requires maintaining high frame rates to avoid motion sickness, while AR involves integrating virtual objects seamlessly into the real world.

References

[1]. Hernandez-Ibáñez, Luis, and Viviana Barneche-Naya. "Real-Time Lighting Analysis for Design and Education Using a Game Engine." International Conference on Human-Computer Interaction. Cham: Springer Nature Switzerland, 2023.

[2]. Masood, Zafar, et al. "High‐performance virtual globe GPU terrain rendering using game engine." Computer Animation and Virtual Worlds 34.2 (2023): e2108.

[3]. Petrenko, Oleksandr, Oleksandr Puchka, and Alex Klimenko. "Revolutionizing VFX Production with Real-Time Volumetric Effects." ACM SIGGRAPH 2024 Real-Time Live!. 2024. 1-2.

[4]. Borkowski, Andrzej, and Piotr Nowakowski. "Use of applications and rendering engines in architectural design–state-of-the-art." Budownictwo i Architektura 22.1 (2023).

[5]. Mohammadi, Iman Soltani, Mohammad Ghanbari, and Mahmoud-Reza Hashemi. "An API-level frame workload model for real-time rendering applications." Authorea Preprints (2023).

[6]. Vries, Tomas de. Implementing real-time ray tracing in Unity to increase the render quality of a ray tracing visualization tool. Diss. 2023.

[7]. Mosler, Pascal, and Nicholas-Andre Edgar Steitz. Towards a bidirectional real time link between BIM software and the game engine unity. Ruhr-Universität Bochum, 2023.

[8]. Kishor, Kaushal, et al. "3D Application Development Using Unity Real Time Platform." Doctoral Symposium on Computational Intelligence. Singapore: Springer Nature Singapore, 2023.

[9]. Usón, Javier, et al. "Real-Time Free Viewpoint Video for Immersive Videoconferencing." 2024 16th International Conference on Quality of Multimedia Experience (QoMEX). IEEE, 2024.

[10]. Henriques, Horácio, et al. "A mixed path tracing and NeRF approach for optimizing rendering in XR Displays." Proceedings of the 25th Symposium on Virtual and Augmented Reality. 2023.

Cite this article

Zhang,Q. (2024). Advanced techniques and high-performance computing optimization for real-time rendering. Applied and Computational Engineering,90,14-19.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 6th International Conference on Computing and Data Science

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and

conditions of the Creative Commons Attribution (CC BY) license. Authors who

publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons

Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this

series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published

version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial

publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and

during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See

Open access policy for details).

References

[1]. Hernandez-Ibáñez, Luis, and Viviana Barneche-Naya. "Real-Time Lighting Analysis for Design and Education Using a Game Engine." International Conference on Human-Computer Interaction. Cham: Springer Nature Switzerland, 2023.

[2]. Masood, Zafar, et al. "High‐performance virtual globe GPU terrain rendering using game engine." Computer Animation and Virtual Worlds 34.2 (2023): e2108.

[3]. Petrenko, Oleksandr, Oleksandr Puchka, and Alex Klimenko. "Revolutionizing VFX Production with Real-Time Volumetric Effects." ACM SIGGRAPH 2024 Real-Time Live!. 2024. 1-2.

[4]. Borkowski, Andrzej, and Piotr Nowakowski. "Use of applications and rendering engines in architectural design–state-of-the-art." Budownictwo i Architektura 22.1 (2023).

[5]. Mohammadi, Iman Soltani, Mohammad Ghanbari, and Mahmoud-Reza Hashemi. "An API-level frame workload model for real-time rendering applications." Authorea Preprints (2023).

[6]. Vries, Tomas de. Implementing real-time ray tracing in Unity to increase the render quality of a ray tracing visualization tool. Diss. 2023.

[7]. Mosler, Pascal, and Nicholas-Andre Edgar Steitz. Towards a bidirectional real time link between BIM software and the game engine unity. Ruhr-Universität Bochum, 2023.

[8]. Kishor, Kaushal, et al. "3D Application Development Using Unity Real Time Platform." Doctoral Symposium on Computational Intelligence. Singapore: Springer Nature Singapore, 2023.

[9]. Usón, Javier, et al. "Real-Time Free Viewpoint Video for Immersive Videoconferencing." 2024 16th International Conference on Quality of Multimedia Experience (QoMEX). IEEE, 2024.

[10]. Henriques, Horácio, et al. "A mixed path tracing and NeRF approach for optimizing rendering in XR Displays." Proceedings of the 25th Symposium on Virtual and Augmented Reality. 2023.