Volume 196

Published in October 2025

Volume title: Proceedings of CONF-MLA 2025 Symposium: Intelligent Systems and Automation: AI Models, IoT, and Robotic Algorithms

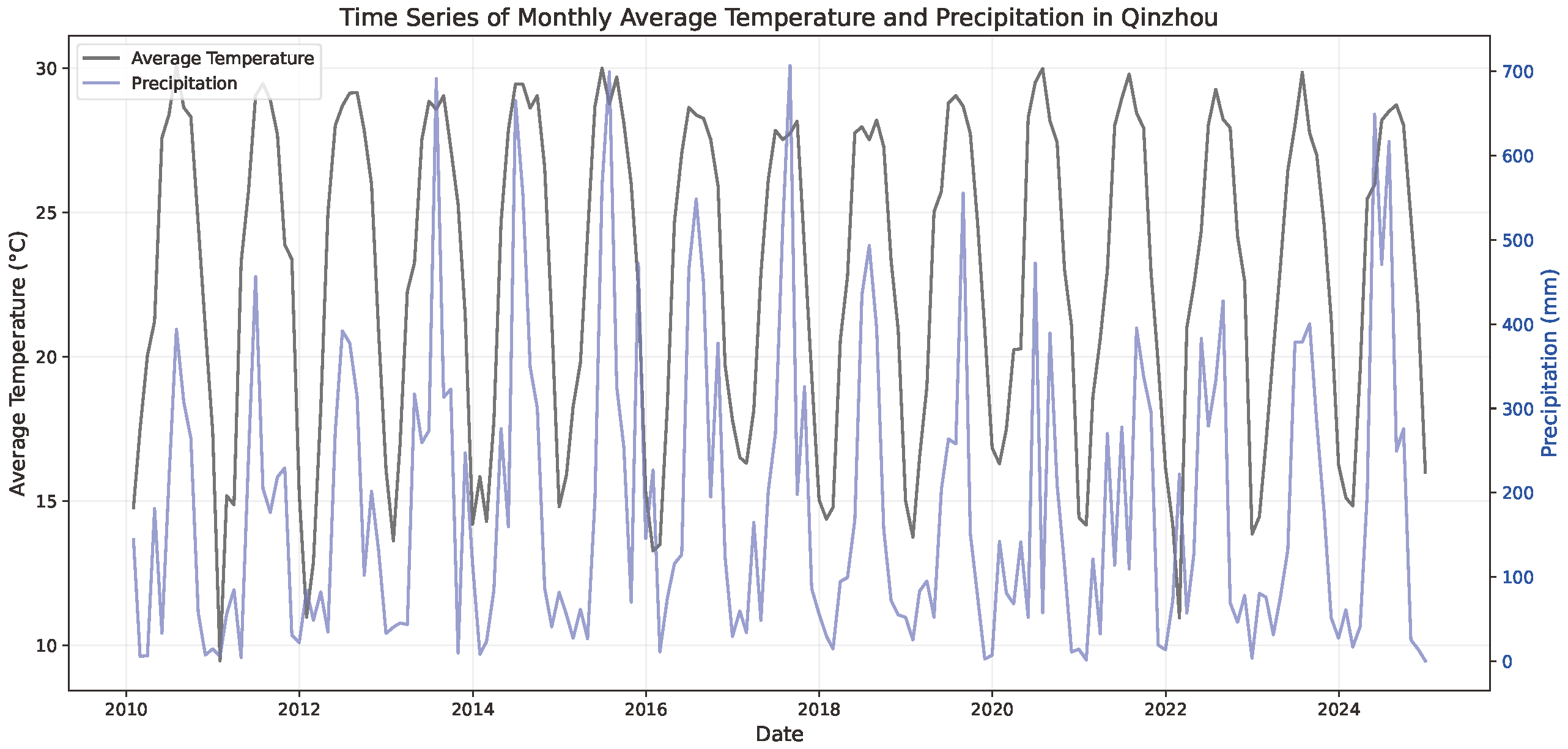

Qinzhou, in the Guangxi Zhuang Autonomous Region of China, experiences intense seasonal precipitation and relatively high temperatures, which often lead to droughts or floods. Forecasting precipitation and temperature is therefore an essential step in taking precautions against weather-related damage. The Seasonal Autoregressive Integrated Moving Average (SARIMA) model is effective for forecasting time series with regular seasonal patterns. This paper uses a SARIMA model to forecast the monthly precipitation and monthly average temperature of Qinzhou. The training set comprises data provided by the National Oceanic and Atmospheric Administration (NOAA) from 2010 to 2022 inclusive, while data from 2023 to 2024 are used as the test set. Suitable model parameters are selected by analyzing augmented Dickey-Fuller (ADF) test results and comparing Akaike information criterion (AIC) values and model accuracy. The coefficients of determination (R²) suggest that the SARIMA model can effectively forecast monthly average temperature and precipitation, although it struggles to capture unexpected extreme values.
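
The forecasting workflow above can be made concrete with a small pure-Python sketch of two of its ingredients. The first is the lag-12 seasonal difference `x[t] - x[t-12]`, which the seasonal part of a monthly SARIMA model applies. The second is the coefficient of determination `R² = 1 - SS_res / SS_tot`, used to score test-set forecasts. A seasonal-naive forecast, which repeats the last observed year, stands in for the fitted model. It is an illustrative baseline, not the paper's SARIMA fit. In Python that fit would more typically use a library such as statsmodels: `SARIMAX` with `order=(p, d, q)` and `seasonal_order=(P, D, Q, 12)`, plus `adfuller` for the ADF test. The temperature values below are made up.

```python
from typing import List, Sequence


def seasonal_difference(series: Sequence[float], period: int = 12) -> List[float]:
    """Seasonal difference y[t] = x[t] - x[t - period] (the D = 1 step of SARIMA)."""
    if len(series) <= period:
        raise ValueError("series must be longer than the seasonal period")
    return [series[t] - series[t - period] for t in range(period, len(series))]


def seasonal_naive_forecast(history: Sequence[float], horizon: int, period: int = 12) -> List[float]:
    """Baseline forecast: each future step repeats the value observed one season earlier."""
    if len(history) < period:
        raise ValueError("need at least one full season of history")
    last_season = list(history[-period:])
    return [last_season[h % period] for h in range(horizon)]


def r_squared(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    mean = sum(actual) / len(actual)
    ss_tot = sum((a - mean) ** 2 for a in actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    if ss_tot == 0:
        raise ValueError("R^2 is undefined for a constant actual series")
    return 1.0 - ss_res / ss_tot


# Hypothetical monthly temperatures with a perfectly repeating annual cycle (not NOAA data).
cycle = [14.0, 15.5, 19.0, 23.0, 26.5, 28.0, 29.0, 28.5, 27.0, 24.0, 19.5, 15.5]
train, test = cycle * 2, cycle
print(seasonal_difference(train)[:3])                              # [0.0, 0.0, 0.0]
print(r_squared(test, seasonal_naive_forecast(train, len(test))))  # 1.0
```

Real monthly precipitation is far less regular than this toy cycle. That irregularity is why a fitted SARIMA model, rather than this naive baseline, is needed, and it is also why extreme values are hard to capture.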


Volatility is a crucial indicator for quantifying risk levels, guiding asset allocation, and informing macroeconomic policy, so predicting it accurately is of considerable importance. Researchers are increasingly applying machine learning techniques, such as support vector machines, LSTM networks, and deep convolutional networks, to improve the accuracy of volatility predictions in complex environments. Although numerous studies have proposed diverse methods that incorporate machine learning into volatility forecasting, systematic reviews that organize these methods remain scarce. To fill this gap, this paper systematically summarizes novel models developed for forecasting stock market volatility, aiming to provide a useful reference for researchers developing new forecasting techniques. Drawing on 19 empirical studies and 6 review articles, along with authoritative texts, this paper organizes the foundational methods of stock market volatility prediction and presents machine-learning-based forecasting techniques. The review finds that machine learning methods such as support vector machines (SVM) and random forests (RF) improve prediction accuracy by capturing the nonlinear characteristics of financial data through kernel functions and ensemble learning, respectively. Deep learning models such as LSTM and GRU excel at forecasting stock market volatility because they capture long-term dependencies in complex sequential data, significantly improving prediction accuracy over traditional models like GARCH. In addition, hybrid models (such as GARCH-LSTM and GARCH-MIDAS) combine the advantages of econometrics and machine learning and have demonstrated superiority in multiple empirical studies.
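
As background for the GARCH baseline and the GARCH-based hybrids mentioned above, here is a minimal GARCH(1,1) variance filter. The parameters `omega`, `alpha` and `beta` are assumed to be given. In practice they are estimated by maximum likelihood, for example with the Python `arch` package. The code only runs the recursion `sigma2[t] = omega + alpha * r[t-1]**2 + beta * sigma2[t-1]`. One common hybrid pattern feeds the resulting conditional-volatility series to an LSTM or GRU as an extra input feature. That pattern is offered as an illustration, not as the exact design of the reviewed studies.

```python
import math
from typing import List, Sequence


def garch11_variance(returns: Sequence[float], omega: float, alpha: float, beta: float) -> List[float]:
    """Conditional variances sigma^2_0 .. sigma^2_n of a GARCH(1,1) model.

    sigma^2_0 is initialized to the unconditional variance omega / (1 - alpha - beta);
    the last element is the one-step-ahead variance forecast after the final return.
    """
    if omega <= 0 or alpha < 0 or beta < 0 or alpha + beta >= 1:
        raise ValueError("need omega > 0, alpha >= 0, beta >= 0 and alpha + beta < 1")
    sigma2 = [omega / (1.0 - alpha - beta)]
    for r in returns:
        # yesterday's squared shock plus persistence of yesterday's variance
        sigma2.append(omega + alpha * r * r + beta * sigma2[-1])
    return sigma2


def annualized_volatility(sigma2: float, periods_per_year: int = 252) -> float:
    """Convert a per-period variance into an annualized volatility (square-root-of-time rule)."""
    return math.sqrt(sigma2 * periods_per_year)


# Hypothetical daily returns (in percent) and illustrative, not estimated, parameters.
sigma2 = garch11_variance([1.0, -2.0, 0.5], omega=0.1, alpha=0.1, beta=0.8)
print([round(s, 3) for s in sigma2])  # [1.0, 1.0, 1.3, 1.165]
```

The large -2% shock raises the next variance to 1.3. The following smaller shock then lets it decay toward the unconditional level of 1.0. This mean-reverting behavior is what machine learning models are compared against.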


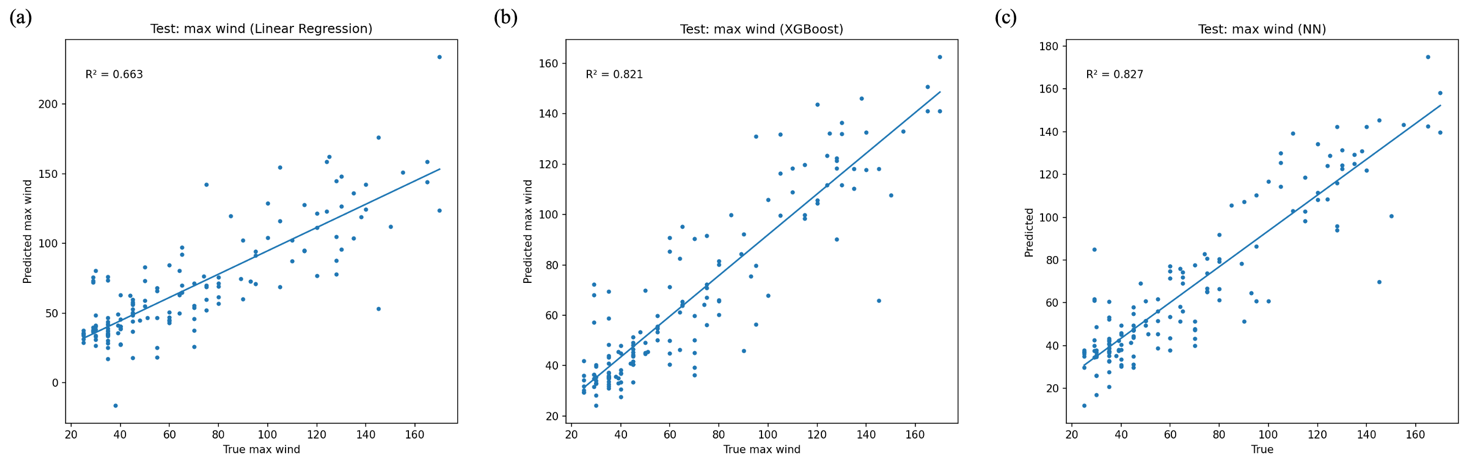

This study forecasts tropical-cyclone peak intensity 24 hours in advance using a parsimonious, track-centric feature set. From 400 Western North Pacific storms (2012–2025), standardized pre-peak histories are constructed from 37 three-hourly coordinates and augmented with month, initial wind and pressure, and 24-hour prior wind and pressure. Best-track records provide targets for peak maximum sustained wind and minimum central pressure, and models are assessed on a temporally held-out cohort of 151 post-2020 storms. Compared with a linear baseline, nonlinear learners substantially improve accuracy for peak wind (R² ≈ 0.82–0.83; RMSE ≈ 16.3–16.6 kt versus 22.8 kt) and for minimum pressure, where gradient-boosted trees perform best (R² ≈ 0.81; RMSE ≈ 13.2 hPa). Stratified analyses show consistent gains across months, latitude bands, and intensity classes, though errors increase for major and super typhoons. Interpretation of model behavior indicates that 24-hour prior wind and pressure dominate predictive skill, while latitude and longitude primarily modulate outcomes rather than acting as strong main effects. The approach is fast, portable, and interpretable, offering a low-latency prior when richer environmental or satellite inputs are unavailable.
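
The track-centric feature set and the temporal hold-out can be sketched as follows. Several details are assumptions, since the abstract does not specify them. The record layout and field names are hypothetical. "Standardized" history is read here as a fixed length of 37 fixes, with shorter tracks left-padded by repeating the earliest fix, and feature scaling is omitted. "Post-2020" is taken to mean years after 2020. Under these assumptions each storm yields 37 × 2 coordinates plus 5 scalar features.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

HISTORY_LEN = 37  # three-hourly fixes in the pre-peak history (from the abstract)


@dataclass
class StormSample:
    """Hypothetical record layout; field names are illustrative, not the paper's."""
    year: int
    month: int
    track: List[Tuple[float, float]]  # (lat, lon) fixes, oldest first, up to forecast time
    initial_wind: float               # kt
    initial_pressure: float           # hPa
    prior_wind_24h: float             # kt, 24 h prior (exact reference time assumed)
    prior_pressure_24h: float         # hPa, 24 h prior (exact reference time assumed)
    peak_wind: float                  # target, kt
    min_pressure: float               # target, hPa


def feature_vector(s: StormSample) -> List[float]:
    if not s.track:
        raise ValueError("track must contain at least one fix")
    track = s.track[-HISTORY_LEN:]
    # Assumption: shorter histories are left-padded by repeating the earliest fix.
    track = [track[0]] * (HISTORY_LEN - len(track)) + track
    coords = [c for fix in track for c in fix]  # lat0, lon0, lat1, lon1, ...
    return coords + [s.month, s.initial_wind, s.initial_pressure,
                     s.prior_wind_24h, s.prior_pressure_24h]


def temporal_split(samples: Sequence[StormSample], cutoff_year: int = 2020):
    """Train on storms up to cutoff_year and test on later storms, with no shuffling."""
    train = [s for s in samples if s.year <= cutoff_year]
    test = [s for s in samples if s.year > cutoff_year]
    return train, test


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


# Hypothetical storm with only 10 fixes, so its history is padded to 37.
demo = StormSample(year=2021, month=8,
                   track=[(15.0 + 0.1 * i, 130.0 - 0.2 * i) for i in range(10)],
                   initial_wind=35, initial_pressure=1000, prior_wind_24h=80,
                   prior_pressure_24h=960, peak_wind=110, min_pressure=935)
print(len(feature_vector(demo)))  # 79
```

Splitting by year, rather than at random, keeps storms from the test seasons out of training. This mirrors the temporally held-out cohort described above.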


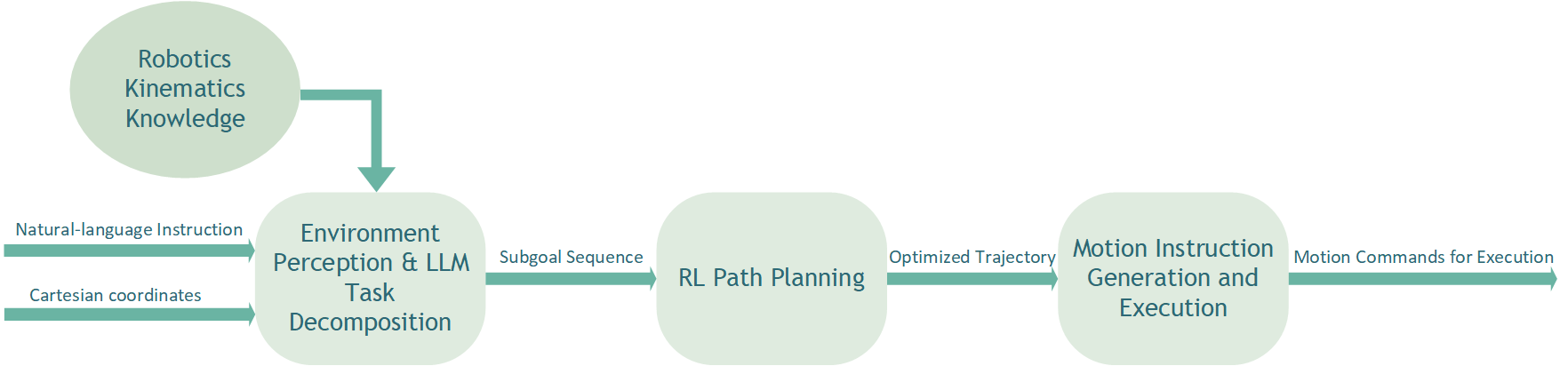

Robot control is an active research area, and robotic arm trajectory planning is one of its key directions. However, traditional methods lack adaptability and flexibility, making it difficult to meet the demands of complex tasks and dynamic environments. This paper proposes a path planning system based on the collaboration of reinforcement learning (RL) and large language models (LLMs). The system consists of three modules: (1) environment perception with LLM-based task decomposition and scheduling, (2) RL-based trajectory planning, and (3) motion command generation. By integrating the cognitive capabilities of LLMs with the optimization capabilities of RL, the system enables task-driven robotic arm trajectory planning. In this hierarchical design, the upper layer uses an LLM for task analysis and high-level command generation, while the lower layer uses RL for trajectory optimization. To verify its effectiveness, experiments were conducted on both simulated and real COBOT platforms for a static block-grasping task, comparing three schemes: pure RL, pure LLM, and the proposed LLM-RL fusion. Results show that the LLM-RL approach outperforms the baselines in average path length and execution time, while also significantly improving RL training efficiency.
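
The lower, RL layer can be illustrated with a deliberately tiny stand-in: tabular Q-learning on a hypothetical 5×5 grid whose cells represent discretized end-effector positions. This is an assumption made for illustration only. The abstract does not specify the paper's state space, action space, reward, or RL algorithm. The LLM layer appears here only as the `(start, goal)` pair it would hand down. `path_length` mirrors the average-path-length metric by summing Euclidean distances between consecutive waypoints.

```python
import math
import random
from typing import Dict, FrozenSet, List, Tuple

Cell = Tuple[int, int]
ACTIONS: List[Cell] = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def move(state: Cell, action: Cell, size: int, obstacles: FrozenSet[Cell]) -> Cell:
    r, c = state[0] + action[0], state[1] + action[1]
    if not (0 <= r < size and 0 <= c < size) or (r, c) in obstacles:
        return state  # blocked moves leave the end effector in place
    return (r, c)


def train_q(size: int, start: Cell, goal: Cell, obstacles: FrozenSet[Cell] = frozenset(),
            episodes: int = 1000, alpha: float = 0.5, gamma: float = 0.95,
            epsilon: float = 0.2, max_steps: int = 200, seed: int = 0) -> Dict[Tuple[Cell, int], float]:
    rng = random.Random(seed)
    q: Dict[Tuple[Cell, int], float] = {}  # missing entries read as 0.0 (optimistic: rewards are < 0)

    def best(s: Cell) -> int:
        return max(range(len(ACTIONS)), key=lambda a: q.get((s, a), 0.0))

    for _ in range(episodes):
        state = start
        for _ in range(max_steps):
            # epsilon-greedy exploration
            a = rng.randrange(len(ACTIONS)) if rng.random() < epsilon else best(state)
            nxt = move(state, ACTIONS[a], size, obstacles)
            reward = -1.0  # every move costs 1, so shorter paths earn higher returns
            target = reward if nxt == goal else reward + gamma * q.get((nxt, best(nxt)), 0.0)
            old = q.get((state, a), 0.0)
            q[(state, a)] = old + alpha * (target - old)
            state = nxt
            if state == goal:
                break
    return q


def greedy_path(q: Dict[Tuple[Cell, int], float], size: int, start: Cell, goal: Cell,
                obstacles: FrozenSet[Cell] = frozenset(), max_steps: int = 100) -> List[Cell]:
    """Follow the learned greedy policy until the goal or max_steps moves."""
    path = [start]
    while path[-1] != goal and len(path) <= max_steps:
        s = path[-1]
        a = max(range(len(ACTIONS)), key=lambda i: q.get((s, i), 0.0))
        path.append(move(s, ACTIONS[a], size, obstacles))
    return path


def path_length(path: List[Cell]) -> float:
    """Sum of Euclidean distances between consecutive waypoints."""
    return sum(math.dist(p1, p2) for p1, p2 in zip(path, path[1:]))


q = train_q(size=5, start=(0, 0), goal=(4, 4))
path = greedy_path(q, 5, (0, 0), (4, 4))
print(path[-1], path_length(path))
```

In a hierarchical scheme like the one described, a higher layer would supply a sequence of such goals (for example, approach, then grasp). A continuous-control RL algorithm would replace this grid.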


With the increasing complexity of network security threats, web crawler technology has become an important tool in network security because of its ability to collect information automatically. This study synthesizes 10 relevant publications to explore the application of crawler technology in network security. It first outlines the main types of crawlers and their technical principles, focusing on Python-based frameworks. It then examines specific security applications, such as cross-site scripting (XSS) vulnerability detection, automated SQL injection detection, and malicious crawler identification. Drawing on prior research, it also discusses the challenges facing crawler technology, including anti-crawler mechanisms, regulatory compliance, and privacy protection. Finally, it looks ahead to integrating machine learning into crawler strategy optimization and building intelligent security detection frameworks, with the aim of providing references and future research directions for researchers and practitioners in network security.
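
The crawler-based detection pipeline can be sketched offline using only the Python standard library (`html.parser`, `urllib.parse`, `html`). The sketch does two things. First, it extracts same-site links and form fields from an already-fetched page; these form the crawl frontier and the attack surface. Second, it classifies whether a marker string sent in a parameter is reflected back unescaped, which is the basic signal behind reflected-XSS scanners. Fetching, scheduling, and the probe string are left out or invented for illustration. Real scanners, and frameworks such as Scrapy, add context-aware payloads, rate limiting, and much more.

```python
import html
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class PageScanner(HTMLParser):
    """Collect absolute links and form fields from one HTML page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: list = []
        self.forms: list = []
        self._form = None  # form currently being parsed, if any

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "a" and a.get("href"):
            self.links.append(urljoin(self.base_url, a["href"]))
        elif tag == "form":
            self._form = {
                "action": urljoin(self.base_url, a.get("action") or ""),
                "method": (a.get("method") or "get").lower(),
                "fields": [],
            }
            self.forms.append(self._form)
        elif tag in ("input", "textarea", "select") and self._form is not None and a.get("name"):
            self._form["fields"].append(a["name"])  # candidate injection points

    def handle_endtag(self, tag):
        if tag == "form":
            self._form = None


def same_site_links(scanner: PageScanner) -> list:
    """Keep only links on the scanned host (the crawl frontier)."""
    host = urlparse(scanner.base_url).netloc
    return [u for u in scanner.links if urlparse(u).netloc == host]


def reflection_status(response_body: str, probe: str) -> str:
    """Heuristic reflected-XSS signal for a probe sent in some request parameter."""
    if probe in response_body:
        return "reflected-unescaped"  # candidate finding; still needs manual confirmation
    if html.escape(probe) in response_body:
        return "reflected-escaped"    # the application encoded the markup
    return "not-reflected"


scanner = PageScanner("http://app.example/shop/")
scanner.feed('<a href="/login">Log in</a><form action="search"><input name="q"></form>')
print(same_site_links(scanner))                                   # ['http://app.example/login']
print(reflection_status("<p>Results for &lt;zq7&gt;</p>", "<zq7>"))  # reflected-escaped
```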


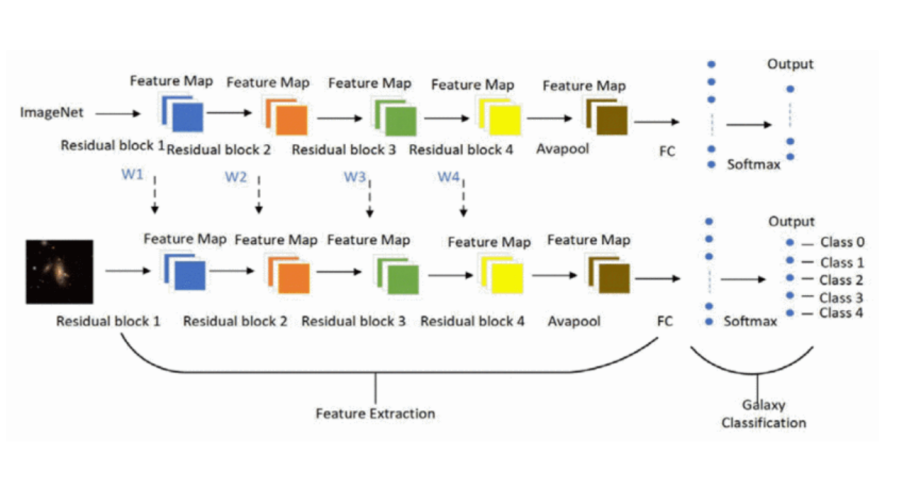

This article systematically reviews the research status, key technical advances, and application prospects of the ResNet18 model and its improved variants in medical imaging and industrial visual inspection. Through its residual structure, ResNet18 effectively alleviates the degradation problem of deep networks, providing an efficient baseline model for tasks such as image classification, object detection, and defect recognition. The article focuses on strategies for adapting ResNet18 to different application scenarios, such as introducing attention mechanisms (SE, SimAM), transfer learning, data augmentation, and lightweight model pruning. These strategies significantly improve performance in tasks including coronary artery stenosis classification, road pothole detection, metal defect evaluation, flame stability recognition, epileptic EEG classification, and coal gangue sorting, with reported accuracies generally ranging from 94% to 99.5%. The article also discusses challenges such as lightweight model deployment, few-shot learning, cross-domain generalization, and the construction of standardized datasets. It outlines future research directions, including deeper structural optimization, multimodal fusion, and embedded applications in broader industrial scenarios, providing comprehensive technical references for researchers in related fields.
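
The two building blocks named above can be summarized in standard notation. This is offered as background, not as any surveyed paper's exact design. The first line is the residual mapping of a ResNet basic block. Here $\mathcal{F}$ stands for the block's stacked convolution and batch-normalization layers (two 3×3 convolutions in ResNet18). $W_s$ is a projection used only when the shapes of $\mathbf{x}$ and $\mathcal{F}$ differ; otherwise it is the identity. The remaining lines give the squeeze-and-excitation (SE) channel reweighting for a feature map $\mathbf{u}$ with $C$ channels of spatial size $H \times W$. In these lines $\delta$ is the ReLU, $\sigma$ is the sigmoid, and $r$ is a reduction ratio.

$$
\begin{aligned}
\mathbf{y} &= \operatorname{ReLU}\!\big(\mathcal{F}(\mathbf{x}, \{W_i\}) + W_s\,\mathbf{x}\big) \\
z_c &= \frac{1}{H W} \sum_{i=1}^{H} \sum_{j=1}^{W} u_c(i, j), \qquad c = 1, \dots, C \\
\mathbf{s} &= \sigma\big(\mathbf{W}_2\, \delta(\mathbf{W}_1 \mathbf{z})\big), \qquad \mathbf{W}_1 \in \mathbb{R}^{\frac{C}{r} \times C},\ \mathbf{W}_2 \in \mathbb{R}^{C \times \frac{C}{r}} \\
\tilde{\mathbf{x}}_c &= s_c\, \mathbf{u}_c
\end{aligned}
$$

If $\mathcal{F}$ learns to output zero on an identity-shortcut block, the block simply passes its input through the ReLU. This easy fallback to identity is the usual intuition for why residual connections ease the optimization of deeper networks.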


This study tackles short-term wind power forecasting using hourly data (2017–2021) from four utility-scale sites. An end-to-end machine learning pipeline is constructed with strict quality control and physics-informed features, including u/v wind vector decomposition, nonlinear wind-speed terms, and compact calendar encodings (hour and month). Model evaluation combines rolling-origin time splits with leave-one-site-out (LOSO) cross-site testing. A feature-importance stability analysis yields a top-27 feature set, ensuring comparability across sites and deployment readiness. In pooled training, LightGBM performs best (RMSE ≈ 0.161, R² ≈ 0.45), while the results reveal strong heterogeneity across sites. LOSO testing improves generalization at lower-skill sites, and adding time encodings further tightens per-site fits (R² ≈ 0.98). The contributions are a reproducible forecasting workflow that balances physical interpretability with predictive accuracy, a lean and transferable feature set, and a rigorous evaluation protocol that separates temporal from spatial generalization. The findings inform operational forecasting for wind assets and offer a practical blueprint for scaling predictive maintenance and dispatch decisions across diverse wind regimes.
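
The feature engineering and the two evaluation splits described above can be sketched in plain Python. The following assumptions are not confirmed by the abstract:

- Wind direction follows the meteorological convention: degrees clockwise from north, giving the direction the wind blows *from*.
- The nonlinear speed terms are `ws**2` and `ws**3`.
- The calendar encodings are sine/cosine pairs.
- The site names are placeholders.

```python
import math
from typing import Dict, Iterator, List, Sequence, Tuple


def wind_features(speed: float, direction_deg: float, hour: int, month: int) -> Dict[str, float]:
    theta = math.radians(direction_deg)
    return {
        # u/v components: wind FROM the north (0 deg) blows toward the south, so v < 0.
        "u": -speed * math.sin(theta),
        "v": -speed * math.cos(theta),
        # nonlinear speed terms (power in the wind scales with the cube of speed)
        "ws2": speed ** 2,
        "ws3": speed ** 3,
        # cyclic encodings place 23:00 next to 00:00 and December next to January
        "hour_sin": math.sin(2 * math.pi * hour / 24),
        "hour_cos": math.cos(2 * math.pi * hour / 24),
        "month_sin": math.sin(2 * math.pi * (month - 1) / 12),
        "month_cos": math.cos(2 * math.pi * (month - 1) / 12),
    }


def rolling_origin_splits(n: int, initial: int, horizon: int,
                          step: int = 0) -> Iterator[Tuple[range, range]]:
    """Expanding-window splits: train on [0, origin), test on [origin, origin + horizon)."""
    step = step or horizon
    origin = initial
    while origin + horizon <= n:
        yield range(0, origin), range(origin, origin + horizon)
        origin += step


def leave_one_site_out(sites: Sequence[str]) -> Iterator[Tuple[List[str], str]]:
    """Each fold trains on every site but one and tests on the held-out site."""
    for held_out in sites:
        yield [s for s in sites if s != held_out], held_out


print(list(leave_one_site_out(["site_A", "site_B", "site_C"]))[0])  # (['site_B', 'site_C'], 'site_A')
```

Rolling-origin splits measure how well a model extrapolates forward in time at a known site. LOSO folds measure transfer to an unseen site. Keeping the two protocols separate is what allows temporal and spatial generalization to be reported independently.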


Artificial intelligence (AI) has recently shown its advantages in many fields, including 3D modeling for game design, where AI can tailor content to the needs of individual players. Meanwhile, over the decades, 3D games have proven to be a powerful way to draw players into interacting with the game environment for a more immersive experience. Previous researchers have explored the potential of machine learning (ML) and reinforcement learning (RL) for generating game content and 3D models. To provide high-quality assets, researchers aim to improve game release efficiency by integrating ML and RL into Procedural Content Generation (PCG). This enables the automatic creation of textual and non-textual assets such as levels, models, and 3D environments. However, challenges remain in algorithm development and in creating high-quality 3D models. This article reviews the current state of AI-driven content generation for 3D game assets, covering techniques including natural language processing, RL, and ML algorithms, along with their applications, limitations, and challenges. It also highlights future opportunities, such as developing more complex models and exploring new AI applications in game design.


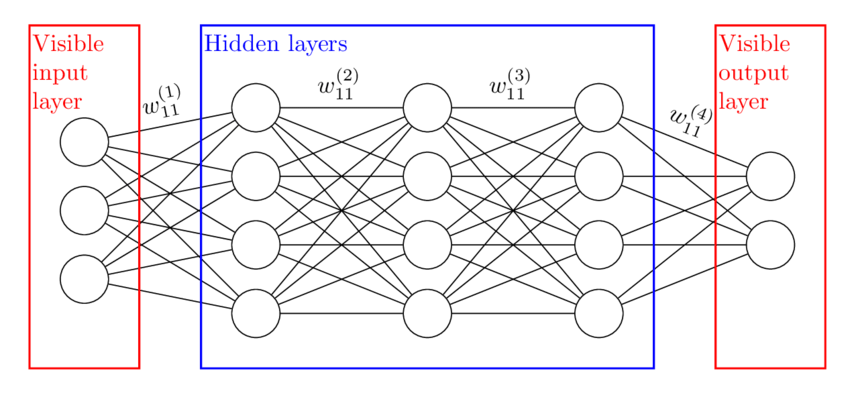

Deep learning techniques have gained significant traction in various domains, particularly network security. This article discusses the fundamental principles of deep learning, including neural networks and important models such as the Feed-Forward Neural Network (FNN), the Convolutional Neural Network (CNN), the Recurrent Neural Network (RNN), and the autoencoder (AE). The architecture and functionality of each model are discussed, with a focus on their applications in intrusion detection and network traffic optimization. The article also examines the challenges deep learning faces in network security, such as increasing model complexity and resource demands. Finally, it identifies a trend toward more lightweight models to enhance security in an increasingly interconnected digital landscape.
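
A common deep-learning approach to anomaly-based intrusion detection is the autoencoder. It is trained to reconstruct normal traffic, and records with a large reconstruction error are flagged. The minimal sketch below rests on simplifying assumptions:

- The input has two hypothetical numeric flow features.
- The autoencoder is *linear* with a one-unit bottleneck. The optimum of such a model spans the top principal component, so that direction is computed directly by power iteration instead of by gradient training.
- The threshold rule (a multiple of the largest training error) is an illustrative choice.

Deep, nonlinear autoencoders replace the projection with learned encoder and decoder networks, but the detection logic stays the same.

```python
import math
from typing import Callable, Sequence, Tuple

Vec = Tuple[float, float]


def fit_linear_autoencoder(data: Sequence[Vec], iters: int = 200) -> Tuple[Vec, Vec]:
    """Return (mean, unit bottleneck direction) via power iteration on the 2x2 covariance."""
    n = len(data)
    mean = (sum(x for x, _ in data) / n, sum(y for _, y in data) / n)
    centered = [(x - mean[0], y - mean[1]) for x, y in data]
    sxx = sum(a * a for a, _ in centered) / n
    sxy = sum(a * b for a, b in centered) / n
    syy = sum(b * b for _, b in centered) / n
    w = (1.0, 0.0)
    for _ in range(iters):
        nx, ny = sxx * w[0] + sxy * w[1], sxy * w[0] + syy * w[1]
        norm = math.hypot(nx, ny)
        if norm == 0.0:
            break  # degenerate data: no dominant direction
        w = (nx / norm, ny / norm)
    return mean, w


def reconstruction_error(point: Vec, mean: Vec, w: Vec) -> float:
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    z = dx * w[0] + dy * w[1]    # encode: project onto the bottleneck direction
    rx, ry = z * w[0], z * w[1]  # decode: map the code back into feature space
    return (dx - rx) ** 2 + (dy - ry) ** 2


def make_detector(train: Sequence[Vec], factor: float = 3.0) -> Callable[[Vec], bool]:
    mean, w = fit_linear_autoencoder(train)
    threshold = factor * max(reconstruction_error(p, mean, w) for p in train)
    return lambda p: reconstruction_error(p, mean, w) > threshold


# Hypothetical "normal" flows whose two features rise together.
normal = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.05), (4.0, 4.0), (-1.0, -0.9), (-2.0, -2.1)]
is_anomalous = make_detector(normal)
print(is_anomalous((5.0, 5.05)), is_anomalous((3.0, -3.0)))  # False True
```

The point `(5.0, 5.05)` lies outside the training range but follows the normal correlation, so it reconstructs well. The point `(3.0, -3.0)` breaks the correlation and is flagged. This behavior is what lets reconstruction-based detectors catch attacks with no labeled examples.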


This paper explores the application of large language models (LLMs) in the gaming field. It begins by describing the research background and significance and reviewing the current state of LLM application and research in the gaming domain. It then introduces the characteristics, working principles, and development history of LLMs. Next, it analyzes how LLMs are applied in games, using LLM-controlled non-player characters (NPCs) and dynamic plot generation as examples to examine their implementation methods and advantages. Finally, it discusses the challenges LLMs face in gaming applications, such as high resource consumption and unstable interactions with players. It also looks ahead to their future impact, arguing that as the technology continues to advance, LLMs will drive the intelligent transformation of the gaming industry and play an important role in its future.
