1. Introduction

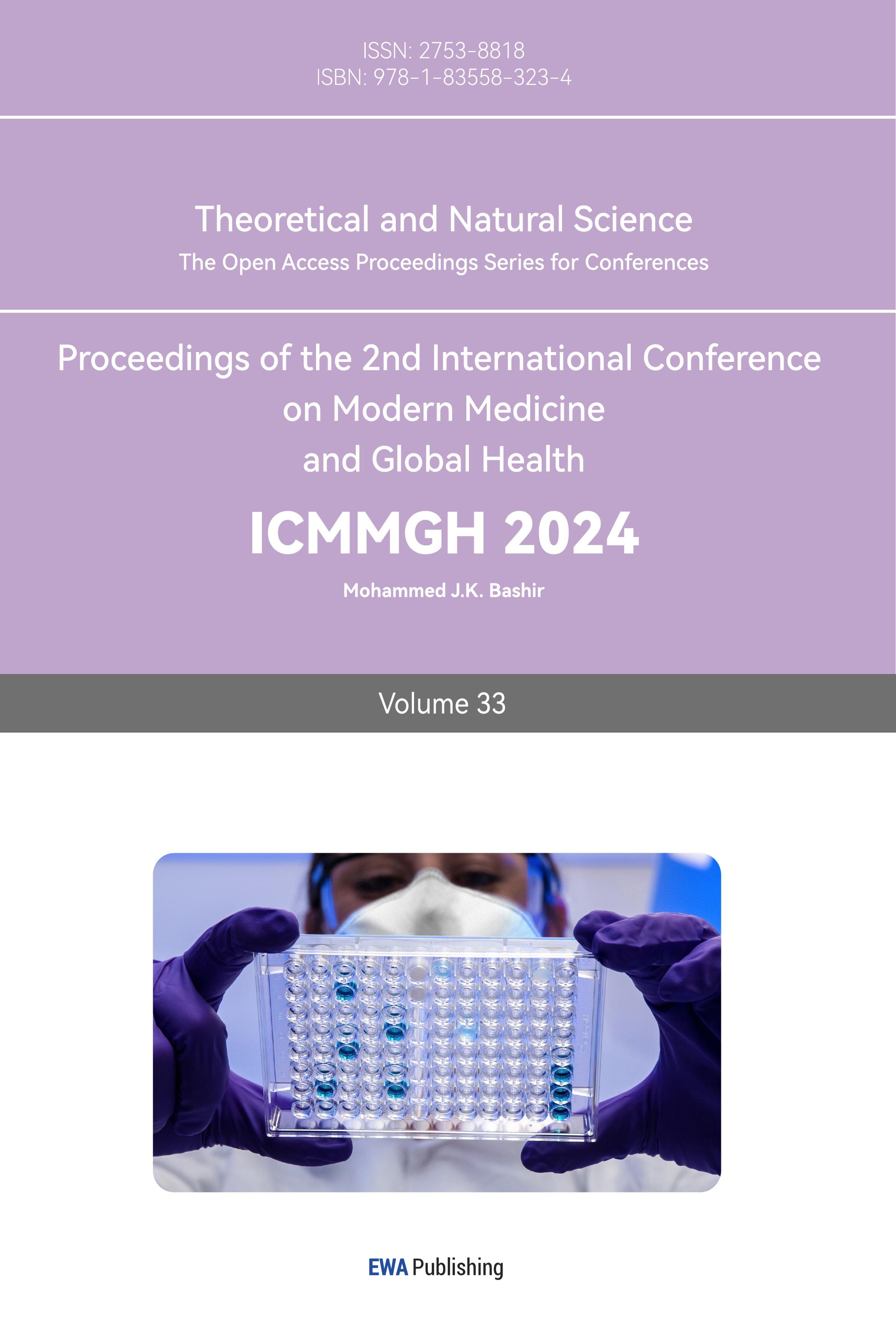

Breast cancer is a common malignant tumor that occurs mainly in women but can also occur in men [1]. It is dangerous because it can spread quickly to other parts of the body, such as the lungs, liver, and bones, leading to serious health problems and life-threatening conditions [2]. Its incidence is increasing every year, and it has become one of the major threats to women's health [3]. Symptoms mainly include breast lumps, skin dimpling, nipple inversion, redness and swelling of the skin, and nipple discharge [4]. Treatment options mainly include surgery, radiotherapy, chemotherapy, and endocrine therapy; surgery is the most commonly used and removes cancerous breast tissue to control the progression of the disease [5]. The current percentage of breast cancer among all cancers is shown in Figure 1.

Figure 1. The current percentage of breast cancer. (Photo credit: Original)

However, traditional breast cancer detection usually relies on doctors' experience and manual analysis, which introduces subjectivity and a risk of misjudgment [6]. In contrast, machine learning algorithms can be trained to identify breast cancer lesions automatically, which reduces reliance on subjective judgment and improves the accuracy and efficiency of detection [7]. Machine learning therefore plays a substantial role in breast cancer detection [8]. By learning from large numbers of cancer and non-cancer cases, these algorithms discover patterns and features that indicate whether a patient has breast cancer. This can help doctors detect lesions earlier and improve treatment success and prognosis [9]. Machine learning can also help doctors better understand how breast cancer develops and responds to treatment, and thus better guide patients' treatment plans [10].

Currently, the application of machine learning in breast cancer detection has made great progress. For example, a machine learning-based detection system can automatically identify breast cancer lesions by analyzing medical imaging data such as mammograms, ultrasound images, and magnetic resonance images. Such a system can improve the accuracy and efficiency of detection and reduce the risk of missed diagnosis and misdiagnosis, thereby supporting better treatment decisions for patients.

In addition to imaging data, machine learning can be applied to clinical data, genetic data, and other data sources to help determine whether a patient has breast cancer. For example, a machine learning-based risk prediction system can estimate a patient's risk of developing breast cancer from clinical data such as age, family history, and lifestyle habits. Such systems can help doctors identify high-risk patients earlier so that more effective preventive measures can be taken to reduce the incidence of breast cancer.

Machine learning is therefore important in breast cancer detection: it can help doctors diagnose breast cancer more accurately and provide better treatment options for patients. As machine learning technology continues to develop, it is expected to play an increasingly important role in the prevention, diagnosis, and treatment of breast cancer. In this paper, a new convolutional neural network model is developed on an existing breast cancer image database to provide a foundation for subsequent research.

2. Data set introduction

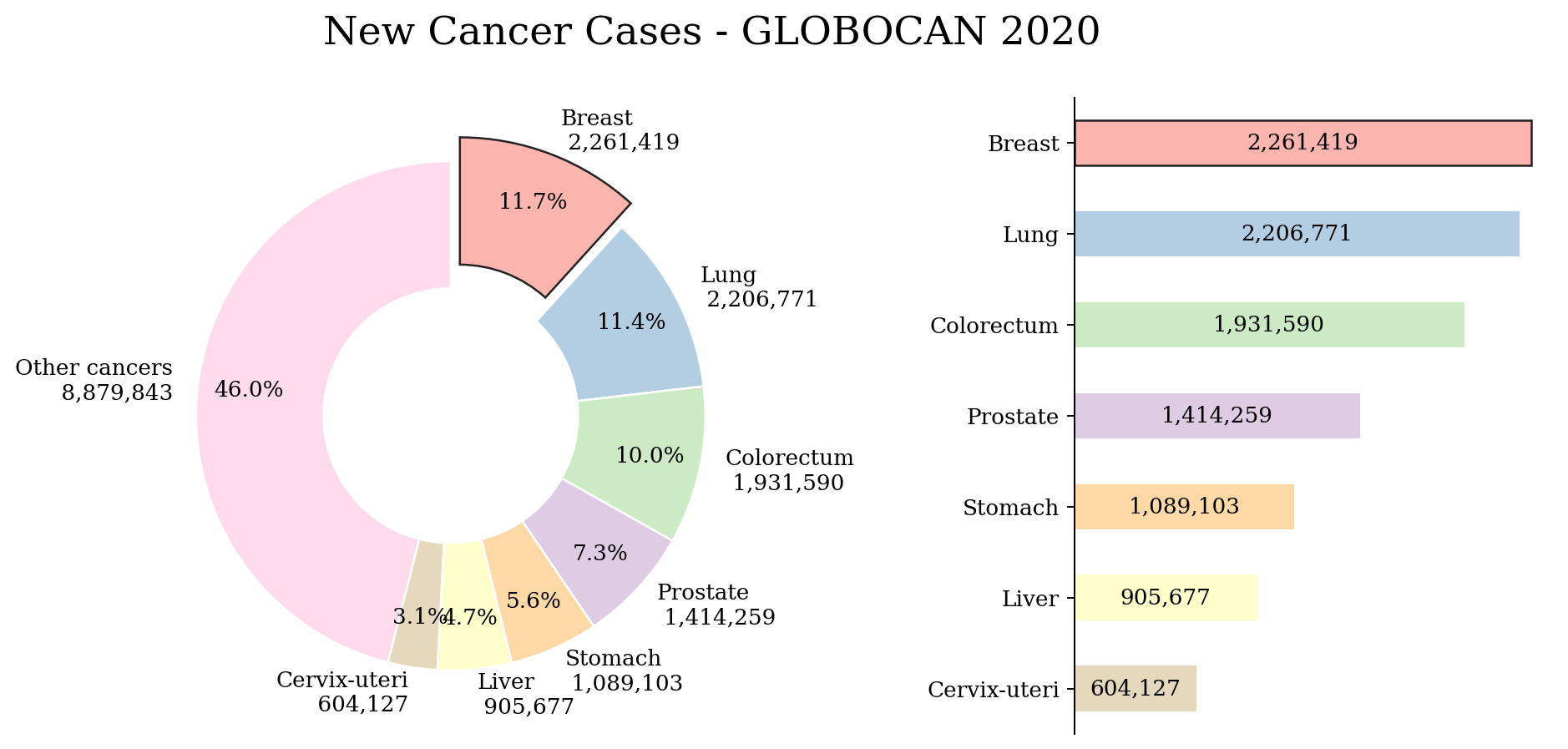

The dataset consists of 7909 microscopic images of breast tumor tissue collected from 82 patients at different magnifications. Benign tumors are classified as adenosis (A), fibroadenoma (F), phyllodes tumor (PT), and tubular adenoma (TA), while malignant tumors are classified as ductal carcinoma (DC), lobular carcinoma (LC), mucinous carcinoma (MC), and papillary carcinoma (PC). The dataset includes images at four magnifications (40x, 100x, 200x, and 400x); the number of images in each category and sample images are shown in Table 1 and Figure 2.

Table 1. Data set introduction.

| Magnification | Benign | Malignant | Total |
|---|---|---|---|
| 40X | 625 | 1370 | 1995 |
| 100X | 644 | 1437 | 2081 |
| 200X | 623 | 1390 | 2013 |
| 400X | 588 | 1232 | 1820 |
| Total | 2480 | 5429 | 7909 |

Figure 2. Data set introduction. (Photo credit: Original)
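The counts in Table 1 can be cross-checked with a few lines of Python. This is only a sanity-check sketch: it assumes the 40X benign count is 625, which is the value consistent with both the 40X row total (1995) and the benign column total (2480). The names `counts` and `check_totals` are illustrative and do not come from the original work.

```python
# Image counts per magnification: (benign, malignant, reported total).
# Assumption: the 40X benign count is 625, inferred from the reported totals.
counts = {
    "40X": (625, 1370, 1995),
    "100X": (644, 1437, 2081),
    "200X": (623, 1390, 2013),
    "400X": (588, 1232, 1820),
}


def check_totals(table):
    """Return True if benign + malignant equals the reported total in every row."""
    return all(b + m == t for b, m, t in table.values())


benign_total = sum(b for b, _, _ in counts.values())
malignant_total = sum(m for _, m, _ in counts.values())
grand_total = benign_total + malignant_total
malignant_share = malignant_total / grand_total

print(check_totals(counts), benign_total, malignant_total, grand_total)
print(f"malignant share: {malignant_share:.3f}")
```

The malignant share of roughly 0.686 is a useful reference point when reading accuracy figures, since a classifier that always predicted "malignant" would already reach about that accuracy on the full dataset.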

3. Convolutional neural network

A convolutional neural network (CNN) is a deep learning model widely used in image recognition, speech recognition, natural language processing, and other fields. The CNN model extracts image features layer by layer through convolution, pooling, and fully connected layers, and classifies the image with a softmax classifier. The components of the CNN model and their roles are described below.

The basic component of the CNN model is the convolutional layer, which extracts features from the image through convolution operations. A convolution operation applies a set of filters to the input image to generate a set of feature maps. Each filter is a small weight matrix that is slid across the input and responds to a particular feature; for example, one filter can extract edge features and another can extract texture features. Because the same filter weights are shared across all image positions, a convolutional layer needs far fewer parameters than a fully connected layer on the same input, which helps the model generalize.
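To make the filtering step concrete, the following minimal sketch applies a single filter to a toy image in pure Python. The image, the vertical-edge filter, and the function name `conv2d_valid` are illustrative assumptions, not taken from the model in this paper. As in most deep learning frameworks, the operation is implemented as a sliding dot product without flipping the kernel.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Toy 4x5 image: dark on the left (0), bright on the right (1).
image = [[0, 0, 0, 1, 1] for _ in range(4)]
# Hypothetical vertical-edge filter: responds to a left-to-right intensity increase.
kernel = [[-1, 0, 1] for _ in range(3)]

feature_map = conv2d_valid(image, kernel)
print(feature_map)  # [[0, 3, 3], [0, 3, 3]]
```

The filter responds with 0 over the uniform dark region and with 3 wherever its window covers the dark-to-bright transition. The same nine weights are reused at every position, which is the parameter sharing described above.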

The CNN model also includes pooling layers. A pooling layer downsamples the feature map, reducing its size and improving the computational efficiency of the model. Common pooling operations include max pooling and average pooling. Max pooling divides each feature map into sub-regions and takes the maximum value in each sub-region as the output of that region, while average pooling takes the average value of each sub-region. In addition to reducing the feature map size, pooling makes the model less sensitive to the exact location of features in the image, which improves its generalization ability.
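The two pooling variants can be compared on a small feature map. This is a minimal sketch that assumes non-overlapping 2x2 windows; the function name `pool2d` and the sample values are illustrative only.

```python
def pool2d(feature_map, size=2, mode="max"):
    """Non-overlapping pooling with a size x size window (stride equals size)."""
    reduce = max if mode == "max" else (lambda v: sum(v) / len(v))
    rows = len(feature_map) // size   # any leftover row is dropped
    cols = len(feature_map[0]) // size  # any leftover column is dropped
    return [
        [
            reduce([feature_map[r * size + i][c * size + j]
                    for i in range(size) for j in range(size)])
            for c in range(cols)
        ]
        for r in range(rows)
    ]


fmap = [
    [1, 3, 2, 0],
    [5, 7, 4, 6],
    [0, 2, 9, 1],
    [4, 2, 3, 3],
]
max_pooled = pool2d(fmap, mode="max")
avg_pooled = pool2d(fmap, mode="average")
print(max_pooled)  # [[7, 6], [4, 9]]
print(avg_pooled)  # [[4.0, 3.0], [2.0, 4.0]]
```

Each 2x2 window collapses to a single value, so the 4x4 map becomes 2x2. Because of the integer division, an odd-sized input loses its last row and column, which is why a 109x109 map would become 54x54 under this scheme.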

The CNN model also includes fully connected layers and a softmax classifier. The fully connected layer flattens the feature map output by the pooling layer into a one-dimensional vector and maps this vector to the classification result. This mapping can be understood as projecting the feature vector into a space in which different categories are farther apart. The softmax classifier maps the output of the fully connected layer to a probability distribution over the categories and selects the category with the largest probability as the classification result. The CNN model is an efficient and accurate image recognition model that is widely used in computer vision, natural language processing, and other fields. By continuously optimizing the model structure and algorithm, the performance and generalization ability of the CNN model can be further improved.
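The softmax step can be illustrated with a short, self-contained sketch. The two logits and their class order are hypothetical values chosen for illustration; the max-subtraction is a standard numerical-stability trick and does not change the result.

```python
import math


def softmax(logits):
    """Map raw scores to probabilities that sum to 1 (numerically stable form)."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 0.5]  # hypothetical scores for (malignant, benign)
probs = softmax(logits)
# Pick the index of the most probable class.
predicted = max(range(len(probs)), key=probs.__getitem__)
print(probs, predicted)
```

For two classes, softmax is equivalent to a sigmoid applied to the difference of the two logits, so a binary classifier may also be built with a single output unit. Whether the model in this paper uses that form is not stated in the text.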

A residual connection is a cross-layer (skip) connection that can alleviate the vanishing-gradient and exploding-gradient problems in deep neural networks while improving the generalization ability and training speed of the model. Residual connections can be realized by adding cross-layer connections between convolutional layers. Suppose a CNN model contains multiple convolutional layers, where the input of layer \( i \) is \( x_{i} \) and its output is \( y_{i} \), and the input of layer \( i+1 \) is \( y_{i} \) and its output is \( z_{i} \). Then the residual connection can be defined as:

\( f_{i}(x) = x_{i} + g_{i}(x_{i}) \quad (1) \)

where \( f_{i}(x) \) denotes the output of layer \( i \), \( x_{i} \) denotes the input of layer \( i \), and \( g_{i}(x_{i}) \) denotes the residual function, which can be understood as a correction to the input of layer \( i \). Adding \( x_{i} \) and \( g_{i}(x_{i}) \) gives the output \( f_{i}(x) \) of layer \( i \), which is then fed into layer \( i+1 \) for processing.
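Equation (1) can be sketched in a few lines of Python. To keep the example short, a 1-D convolution with zero padding stands in for a 2-D convolutional layer, and the residual function is assumed to be convolution, ReLU, convolution. The helper names, the ReLU activation, and the weights are illustrative assumptions rather than the configuration used in this paper.

```python
def conv1d_same(x, w):
    """1-D convolution with zero padding so the output has the same length as x."""
    pad = len(w) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(padded[k + j] * w[j] for j in range(len(w))) for k in range(len(x))]


def relu(x):
    """Element-wise max(0, v)."""
    return [max(0.0, v) for v in x]


def residual_block(x, w1, w2):
    """Compute f(x) = x + g(x), where g is conv -> ReLU -> conv."""
    g = conv1d_same(relu(conv1d_same(x, w1)), w2)
    return [xi + gi for xi, gi in zip(x, g)]


x = [1.0, 2.0, 3.0]
zero = [0.0, 0.0, 0.0]      # g(x) = 0, so the block reduces to the identity
ident = [0.0, 1.0, 0.0]     # each conv passes its input through unchanged

print(residual_block(x, zero, zero))    # [1.0, 2.0, 3.0]
print(residual_block(x, ident, ident))  # [2.0, 4.0, 6.0]
```

The element-wise addition requires the input and the output of the residual function to have the same shape, which is why the sketch pads each convolution. With all-zero weights the block returns its input unchanged, showing that the residual function only needs to learn a correction to the input.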

In the implementation, we define the residual function \( g_{i}(x_{i}) \) as a sub-network containing two convolutional layers, which extracts high-level features from the input \( x_{i} \) and uses them to correct it. Adding residual connections alleviates the vanishing-gradient and exploding-gradient problems in deep neural networks and improves the generalization ability and training speed of the model. At the same time, residual connections make it possible to design deeper and more complex CNN models, improving the expressive ability and classification performance of the model.
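One common way to see why the skip connection helps gradient flow is to differentiate equation (1). The short derivation below is a sketch that assumes \( g_{i} \) is differentiable; \( L \) denotes the training loss and \( I \) the identity matrix, both symbols introduced here for illustration.

\( \frac{\partial f_{i}}{\partial x_{i}} = I + \frac{\partial g_{i}}{\partial x_{i}} \)

\( \frac{\partial L}{\partial x_{i}} = \frac{\partial L}{\partial f_{i}} \left( I + \frac{\partial g_{i}}{\partial x_{i}} \right) = \frac{\partial L}{\partial f_{i}} + \frac{\partial L}{\partial f_{i}} \frac{\partial g_{i}}{\partial x_{i}} \)

The first term carries the gradient from layer \( i+1 \) back to \( x_{i} \) unchanged, so the gradient reaching earlier layers does not shrink to zero even when \( \partial g_{i} / \partial x_{i} \) is small.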

The model parameters are shown in Table 2:

Table 2. The model parameters.

| Layer | Output Shape | Param # |
|---|---|---|
| random_brightness_1 | (None, 224, 224, 3) | 0 |
| random_flip_1 | (None, 224, 224, 3) | 0 |
| random_rotation_1 | (None, 224, 224, 3) | 0 |
| rescaling | (None, 224, 224, 3) | 0 |
| batch_normalization | (None, 224, 224, 3) | 12 |
| conv2d | (None, 222, 222, 32) | 896 |
| max_pooling2d | (None, 111, 111, 32) | 0 |
| conv2d_1 | (None, 109, 109, 64) | 18496 |
| max_pooling2d_1 | (None, 54, 54, 64) | 0 |
| conv2d_2 | (None, 52, 52, 128) | 73856 |
| max_pooling2d_2 | (None, 26, 26, 128) | 0 |
| global_average_pooling2d | (None, 128) | 0 |
| dropout | (None, 128) | 0 |
| dense | (None, 256) | 33024 |
| dropout_1 | (None, 256) | 0 |
| dense_1 | (None, 32) | 8224 |
| dropout_2 | (None, 32) | 0 |
| dense_2 | (None, 1) | 33 |
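The output shapes and parameter counts in Table 2 can be reproduced with simple arithmetic. The sketch below assumes 3x3 convolution kernels with stride 1 and no padding, and 2x2 non-overlapping max pooling. These hyperparameters are not stated in the text and are inferred here from the listed shapes; the helper names are illustrative.

```python
def conv_out(size, kernel=3):
    """Spatial size after an unpadded convolution with stride 1."""
    return size - kernel + 1


def pool_out(size, window=2):
    """Spatial size after non-overlapping pooling (remainder dropped)."""
    return size // window


def conv_params(in_ch, out_ch, kernel=3):
    """Kernel weights plus one bias per output channel."""
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(in_units, out_units):
    """Weight matrix plus one bias per output unit."""
    return in_units * out_units + out_units


size, channels = 224, 3
shapes, params = [], []
for filters in (32, 64, 128):
    size = conv_out(size)
    shapes.append((size, size, filters))
    params.append(conv_params(channels, filters))
    size = pool_out(size)
    shapes.append((size, size, filters))
    channels = filters

# Global average pooling leaves one value per channel (128 here).
units = channels
for out_units in (256, 32, 1):
    params.append(dense_params(units, out_units))
    units = out_units

# Batch normalization keeps gamma, beta, moving mean, and moving variance per channel.
batch_norm_params = 4 * 3
total_params = batch_norm_params + sum(params)

print(shapes)
print(params, total_params)
```

Under these assumptions the computed shapes and per-layer counts match every row of Table 2, and the total comes to 134,541 parameters.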

4. Results

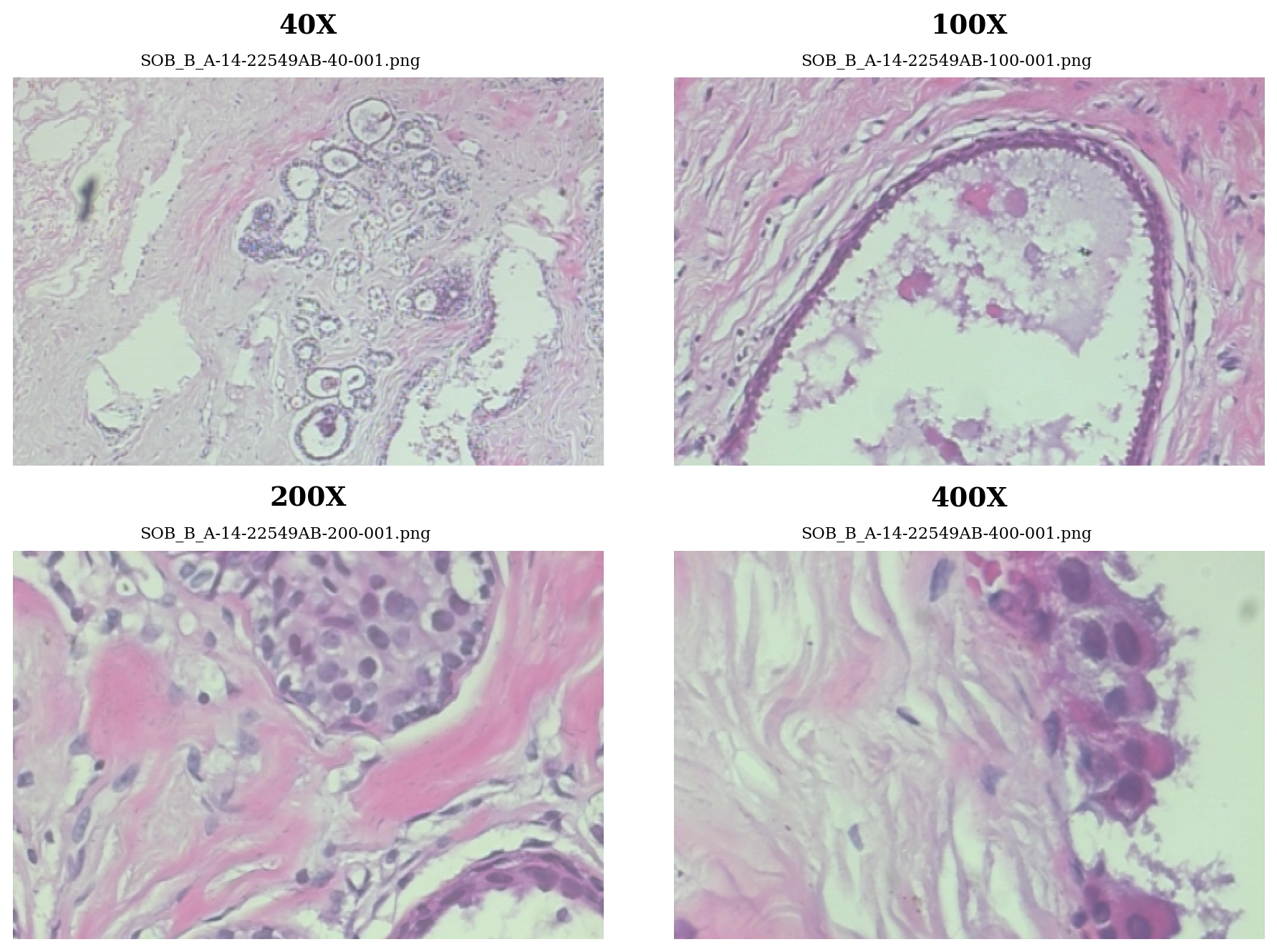

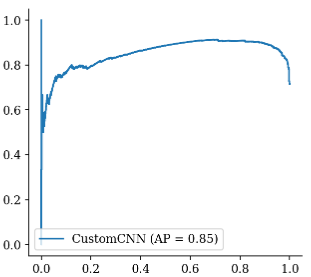

The data are divided into training, validation, and test sets in a 6:2:2 ratio. The training set is used for model training, the validation set for validation during training, and the test set for testing after training is finished. The AUC and AP curves during training are plotted in Figures 3 and 4.

Figure 3. AUC. (Photo credit: Original)

Figure 4. Accuracy. (Photo credit: Original)
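A 6:2:2 split like the one described above can be sketched as follows. This is an illustrative image-level random split with a fixed seed; the function name `split_indices` and the seed are assumptions, and the text does not say whether the original split was made per image or per patient.

```python
import random


def split_indices(n, ratios=(6, 2, 2), seed=0):
    """Shuffle range(n) and cut it into train/validation/test index lists."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    total = sum(ratios)
    n_train = n * ratios[0] // total
    n_val = n * ratios[1] // total
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # takes whatever remains
    return train, val, test


train, val, test = split_indices(7909)
print(len(train), len(val), len(test))  # 4745 1581 1583
```

With images from only 82 patients, an image-level split can place images from the same patient in both the training and test sets. Splitting by patient instead is a common way to avoid that overlap.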

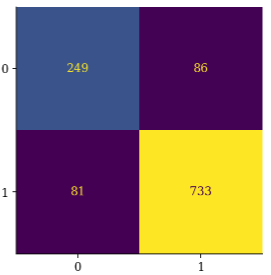

As the AUC and AP curves show, the model's predictions keep improving as training proceeds. The model was then evaluated on the test set by calculating metrics such as loss, AUC, and accuracy and by plotting the confusion matrix; the results are shown in Figure 5 and Table 3.

Figure 5. Confusion matrix. (Photo credit: Original)

Table 3. Model evaluation.

| Metric | CNN |
|---|---|
| Loss | 0.535100 |
| ROC-AUC | 0.831802 |
| Accuracy | 0.854656 |

The results show that the model's loss converges to about 0.53, the ROC-AUC is 0.832, and the prediction accuracy reaches 85.5%, indicating good performance in breast cancer image detection and classification.
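For readers unfamiliar with the reported metrics, the sketch below computes accuracy and ROC-AUC from labels and predicted probabilities in pure Python. The labels, scores, and 0.5 threshold are hypothetical toy values and are unrelated to the results in Table 3.

```python
def accuracy(y_true, y_score, threshold=0.5):
    """Fraction of samples whose thresholded score matches the label."""
    hits = sum((s >= threshold) == bool(t) for t, s in zip(y_true, y_score))
    return hits / len(y_true)


def roc_auc(y_true, y_score):
    """Probability that a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical labels (1 = malignant) and predicted probabilities.
y_true = [1, 1, 1, 0, 0, 1]
y_score = [0.9, 0.8, 0.4, 0.3, 0.6, 0.7]

acc = accuracy(y_true, y_score)
auc = roc_auc(y_true, y_score)
print(round(acc, 4), auc)  # 0.6667 0.875
```

Accuracy depends on the chosen threshold, whereas ROC-AUC summarizes how well the scores rank positives above negatives across all thresholds, so the two metrics can differ noticeably on imbalanced data.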

5. Conclusion

In this paper, a convolutional neural network model based on residual connections was designed to detect and classify breast cancer images. Trained on 7909 microscopic images of breast tumor tissue from 82 patients at different magnifications, the model reaches an accuracy of 85.5%, which demonstrates the good performance of convolutional neural networks for breast cancer image detection and classification.

The main contribution of this paper is the use of a convolutional neural network model based on residual connections, which alleviate the vanishing-gradient and exploding-gradient problems in deep neural networks while improving the model's generalization ability and training speed. Adding cross-layer connections between the convolutional layers sums the inputs and outputs directly, which lets the model learn the difference between them and further improves its classification performance.

The experimental results show that the model performs well in breast cancer image detection and classification. After training with images at four magnifications (40x, 100x, 200x, and 400x), the model's loss converges to about 0.53, with an AUC of 0.832 and a prediction accuracy of 85.5%. This indicates that the model can distinguish benign from malignant breast tumors effectively and has good application prospects.

Overall, the model can support clinicians with more accurate and faster breast cancer diagnosis and treatment suggestions. In the future, we will further optimize the model structure and algorithms to improve its performance and generalization ability, so as to make a greater contribution to the early diagnosis and treatment of breast cancer.

References

[1]. Arul A J J, Karishma D. DIRXNet: A Hybrid Deep Network for Classification of Breast Histopathology Images[J]. SN Computer Science, 2023, 5(1).

[2]. Alshehri M. Breast Cancer Detection and Classification Using Hybrid Feature Selection and DenseXtNet Approach[J]. Mathematics, 2023, 11(23).

[3]. GE HealthCare launches MyBreastAI Suite for enhanced breast cancer detection with iCAD AI applications[J]. Worldwide Computer Products News, 2023.

[4]. Said P, David O, Érika S, et al. Saliency of breast lesions in breast cancer detection using artificial intelligence[J]. Scientific Reports, 2023, 13(1): 20545-20545.

[5]. Lunit AI breast cancer detection matches radiologists in large Danish study[J]. Worldwide Computer Products News, 2023.

[6]. Linda M D, H A E, M D J O, et al. Method of primary breast cancer detection and the disease-free interval, adjusting for lead time[J]. Journal of the National Cancer Institute, 2023.

[7]. M. A F Y A. Comparative Evaluation of Data Mining Algorithms in Breast Cancer[J]. Department of Computer Science, King Khalid University, Muhayel Aseer, Saudi Arabia, 2023, 77(1): 633-645.

[8]. Jungtaek L. Effects of private health insurance on healthcare services during the MERS Pandemic: Evidence from Korea[J]. Heliyon, 2023, 9(12): e22241-e22241.

[9]. Okensama L, E B A, A K H, et al. United States insurance coverage of immediate lymphatic reconstruction[J]. Journal of Surgical Oncology, 2023.

[10]. HealthPartners Debuts 2024 Medicare Advantage Plans[J]. Manufacturing Close - Up, 2023.

Cite this article

Dai,C. (2024). Study on breast cancer image detection and classification based on residual connected convolutional neural network (CNN). Theoretical and Natural Science,33,224-230.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

About volume

Volume title: Proceedings of the 2nd International Conference on Modern Medicine and Global Health

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
