1. Introduction

For people with visual impairments, personal safety while travelling is of paramount importance. In an unfamiliar environment, it is a significant challenge for visually impaired individuals to stay safe and to reach their intended destination in a timely manner. These challenges include recognising and avoiding obstacles, maintaining spatial orientation, and navigating safely and consistently. Conventional assistive aids, such as white canes and guide dogs, have been shown to be effective for some individuals in certain contexts. However, they are often less reliable in complex, unfamiliar and dynamic environments that are influenced by many factors. Because neither aid can provide comprehensive, real-time feedback about the surroundings, it is difficult to guarantee the safety of visually impaired travellers or to provide accurate, real-time navigation.

The advent of vision-based simultaneous localisation and mapping (SLAM) technology offers a promising new way to help visually impaired individuals navigate unfamiliar environments and avoid obstacles. SLAM enables a device to build a map of an environment in real time while simultaneously estimating its own position within that map. This directly addresses the need of visually impaired individuals to travel safely, supporting both obstacle avoidance and navigation. Integrating SLAM with wearable technology therefore opens new avenues for the development of assistive devices for visually impaired people [1].

2. Traditional scenarios

2.1. Traditional aid methods for the visually impaired

Traditional aids for the visually impaired, such as canes and guide dogs, have been shown to be beneficial for certain groups of people in certain situations. However, their reliability is often limited in complex, unfamiliar and dynamic environments influenced by multiple factors. Their primary limitation is that they cannot provide comprehensive, real-time feedback on the surrounding environment, which makes it difficult to ensure the safety of visually impaired individuals on the road and to provide accurate, real-time navigation. Furthermore, there is a lack of comprehensive legislation, regulations and associated safeguards to ensure that visually impaired individuals and their guide dogs are not obstructed by other people or vehicles in daily life. These shortcomings not only affect the safety of visually impaired individuals while travelling but also restrict their overall quality of life and social integration [2].

2.2. The role of SLAM in industry

Service robots, exemplified by cleaning robots, have become a ubiquitous feature of modern life, and their ability to move autonomously and plan routes is a crucial performance indicator. Integrating visual SLAM into cleaning robots allows them to make full use of visual feedback: the robots obtain higher-quality environmental information and better perception, which improves their decision-making, while combining vision with odometry helps to address problems such as missing light points and weak ambient light [3].

Ground robots, automated guided vehicles (AGVs) and aerial robots have gradually become widespread in manufacturing centres over recent decades. The interior of a factory is a dynamic environment with a high density of facilities, workers and robots. Various successful techniques have been proposed for visual-inertial odometry and visual SLAM, and combining visual SLAM with these robots allows them to adapt to the varied environments within a factory, improving efficiency and reducing personnel costs [4].

3. A system combining wearable devices and visual SLAM

3.1. Visual SLAM on wearable devices

A range of wearable assistive devices for the visually impaired is now available on the market, and these devices are receiving increasing attention. They are of great practical significance, helping visually impaired people to recognise text and traffic signals and to avoid obstacles. Conventional wearable aids rely on ultrasound, GPS, inertial odometry and other positioning methods, which have inherent limitations and struggle to meet the real-time, high-precision requirements of visually impaired individuals for navigation and obstacle avoidance in unfamiliar environments. Given the difficulty visually impaired individuals face in recognising unfamiliar and complex environments, these devices must be able to determine the user's position, gait and trajectory in real time, so that they can help the user travel safely and independently. Furthermore, a real-time map of the surrounding environment is essential for navigation and assisted obstacle avoidance. Early attempts to integrate SLAM into such aids relied on the simplest position sensors, but the resulting devices were too bulky to be practical for everyday wear.
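To make "determining position and trajectory in real time" concrete, the sketch below shows how per-frame motion estimates are chained into a trajectory. It assumes a hypothetical front end that reports, for each frame, the user's planar displacement and heading change relative to the previous frame; `Pose2D` and `integrate` are illustrative names, and real visual SLAM systems track full 6-DoF poses and correct accumulated drift against the map.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Pose2D:
    """Planar pose: position (x, y) in metres and heading theta in radians."""
    x: float
    y: float
    theta: float

    def compose(self, delta: "Pose2D") -> "Pose2D":
        """Apply a motion `delta` expressed in this pose's own (body) frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        heading = self.theta + delta.theta
        return Pose2D(
            x=self.x + c * delta.x - s * delta.y,
            y=self.y + s * delta.x + c * delta.y,
            # Wrap the heading back into (-pi, pi].
            theta=math.atan2(math.sin(heading), math.cos(heading)),
        )


def integrate(start: Pose2D, deltas: Iterable[Pose2D]) -> List[Pose2D]:
    """Chain per-frame relative motions into a trajectory of absolute poses."""
    trajectory = [start]
    for d in deltas:
        trajectory.append(trajectory[-1].compose(d))
    return trajectory


# Walk 1 m forward, turn 90 degrees left, walk 1 m forward.
path = integrate(Pose2D(0.0, 0.0, 0.0),
                 [Pose2D(1.0, 0.0, 0.0), Pose2D(0.0, 0.0, math.pi / 2), Pose2D(1.0, 0.0, 0.0)])
print(path[-1])  # approximately x=1.0, y=1.0, theta=pi/2
```

Because every step builds on the previous pose, small per-frame errors accumulate; this is one reason SLAM maintains a map rather than relying on odometry alone.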

Visual SLAM uses vision sensors, including monocular, stereo (binocular) and depth (RGB-D) cameras, to acquire environmental data. It has proven robust and has made significant advances in automated vehicle navigation and autonomous mobile robotics. Several visual SLAM and visual odometry schemes have reached maturity, including ORB-SLAM (based on Oriented FAST and Rotated BRIEF features), Large-Scale Direct Monocular SLAM (LSD-SLAM) and Semi-Direct Visual Odometry (SVO). Integrating visual SLAM with wearable technology therefore offers enhanced adaptability and notable advantages [5].
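Feature-based schemes such as ORB-SLAM describe each keypoint with a binary descriptor and find correspondences between frames by comparing descriptors with the Hamming distance. The sketch below illustrates only that basic matching idea, assuming descriptors are stored as Python integers; it is not ORB-SLAM's own matcher, which adds orientation checks and projection-guided search. In practice, OpenCV's `cv2.BFMatcher` with `cv2.NORM_HAMMING` performs brute-force Hamming matching on real ORB descriptors.

```python
from typing import List, Sequence, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(query: Sequence[int], train: Sequence[int],
                      ratio: float = 0.8) -> List[Tuple[int, int, int]]:
    """Brute-force nearest-neighbour matching with a ratio test.

    Returns (query_index, train_index, hamming_distance) for accepted matches.
    """
    matches: List[Tuple[int, int, int]] = []
    if len(train) < 2:
        return matches  # the ratio test needs a second-best candidate
    for qi, q in enumerate(query):
        # Rank every train descriptor by Hamming distance to this query descriptor.
        ranked = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        (best_d, best_i), (second_d, _) = ranked[0], ranked[1]
        # Keep the match only if it is clearly better than the runner-up.
        if best_d < ratio * second_d:
            matches.append((qi, best_i, best_d))
    return matches


# Toy 8-bit "descriptors" (real ORB descriptors are 256 bits).
print(match_descriptors([0b11110000, 0b10101010],
                        [0b11110001, 0b00001111, 0b10101011]))
# [(0, 0, 1), (1, 2, 1)]
```

The ratio test rejects ambiguous matches, such as repetitive textures where two candidates look equally similar, which would otherwise corrupt pose estimation.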

3.2. System workflow

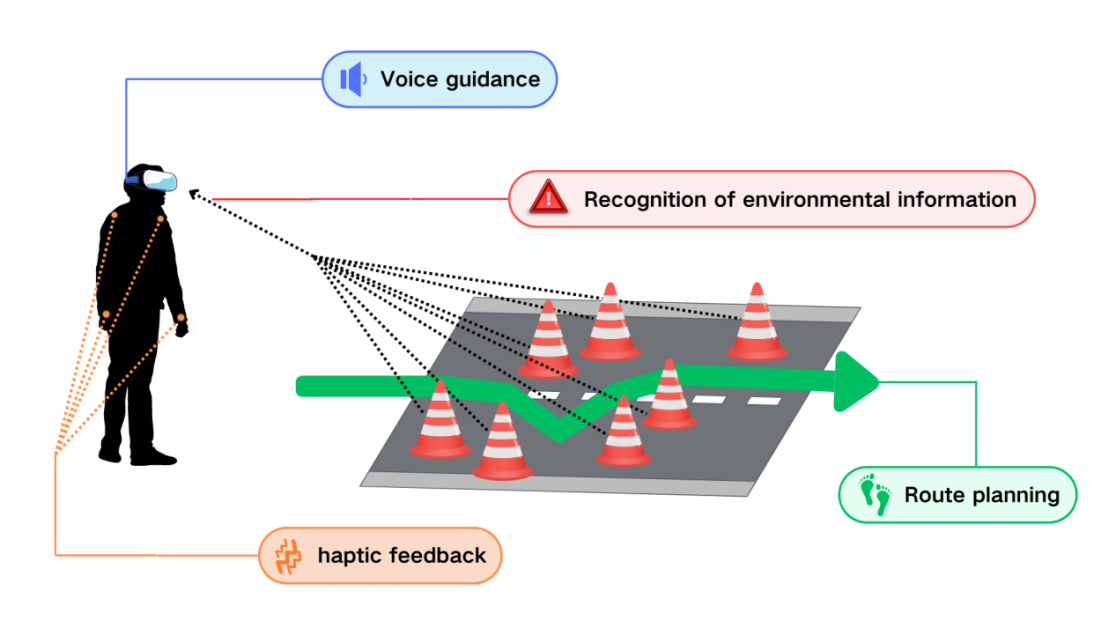

The system that combines wearable devices and visual SLAM to help visually impaired people avoid obstacles consists of three parts: the visual SLAM system (the core), the wearable device (the medium and carrier) and a user-friendly feedback system, as shown in Figure 1.

Figure 1. Workflow of the system combining wearable devices and visual SLAM to assist visually impaired people.

The obstacle avoidance system works as follows. First, the visual SLAM module uses a camera (typically monocular or stereo) to capture images of the environment. These images are processed to identify key features and landmarks, which are then used to build a map in real time. The SLAM algorithm simultaneously tracks the device's position on this map, continuously updating the user's location [6].

Second, the wearable device embeds the SLAM module in a form factor that is comfortable and convenient to wear, such as smart glasses, a helmet or another wearable that does not impede the user's everyday activities. The design must balance the need for sophisticated sensing and processing with the paramount importance of comfort and ease of use.

Third, the obstacle detection and avoidance component uses algorithms that process data from the visual SLAM module to detect potential hazards in the surrounding environment and predict their movement. The system then generates feedback to alert the user and help them navigate safely around each obstacle.

Finally, user feedback mechanisms are central to the effectiveness of any assistive device. Haptic feedback such as vibration gives immediate, direct alerts about hazards such as nearby obstacles; auditory feedback can convey more detailed information, such as the location and distance of an obstacle; and visual displays, such as augmented reality overlays on smart glasses for users with residual vision, can provide real-time cues without obstructing the user's view of the surroundings [7].
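The haptic and auditory channels described above can be illustrated with a small mapping from one detected obstacle to a vibration level and a spoken cue. This is a minimal sketch under stated assumptions: the `Obstacle` record, the 5 m haptic range, the linear intensity curve and the ±15° "ahead" sector are all hypothetical choices, not parameters taken from the cited systems.

```python
from dataclasses import dataclass


@dataclass
class Obstacle:
    label: str          # class name reported by the (hypothetical) detector
    distance_m: float   # range from the user in metres
    bearing_deg: float  # 0 = straight ahead, negative = left, positive = right


def haptic_intensity(distance_m: float, max_range_m: float = 5.0) -> float:
    """Vibration strength in [0, 1] that rises linearly as the obstacle gets closer."""
    if distance_m >= max_range_m:
        return 0.0
    return 1.0 - max(distance_m, 0.0) / max_range_m


def direction_word(bearing_deg: float, ahead_half_width_deg: float = 15.0) -> str:
    """Coarse direction for speech output."""
    if bearing_deg < -ahead_half_width_deg:
        return "left"
    if bearing_deg > ahead_half_width_deg:
        return "right"
    return "ahead"


def audio_message(obs: Obstacle) -> str:
    """Short spoken cue giving obstacle type, direction and distance."""
    return f"{obs.label} {direction_word(obs.bearing_deg)}, {obs.distance_m:.1f} metres"


chair = Obstacle("chair", 2.0, -30.0)
print(haptic_intensity(chair.distance_m), audio_message(chair))
```

Vibration is kept to a single scalar so it can be felt instantly, while the richer detail (type, side, range) goes to the audio channel, mirroring the division of roles in the text.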

3.3. System advantages

Wearable technology has advanced significantly in recent years, driven by the miniaturisation, optimisation and improved energy efficiency of sensors and processing units. Wearable devices, including smart glasses, wristbands and even smart clothing, can now integrate advanced computing and sensing capabilities. Because they can collect and process data about the user's environment and activities in real time, they are an ideal medium for SLAM-based navigation aids. Integrating SLAM with wearable technology makes it possible to build assistive devices that give continuous, real-time feedback about the user's surroundings, enabling safe navigation and localisation.

The primary advantage of combining visual SLAM with wearable devices is the ability to detect and avoid obstacles in real time. The system can recognise multiple obstacles in the surrounding environment and prioritise those that are closest. For example, a lightweight YOLOv5 (You Only Look Once, version 5) object detection model can detect obstacles within a range of 20 metres and prioritise them according to their proximity to the user and the danger they pose. When an obstacle is detected, an audio feedback mechanism alerts the user in good time, helping them avoid potential collisions. Such a system could markedly improve the autonomy and safety of visually impaired individuals in unfamiliar and complex environments, giving them greater confidence and peace of mind [7].
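Prioritising obstacles "according to their proximity to the user and the danger they pose" can be expressed as a simple ranking. The sketch below assumes the detector returns `(label, distance)` pairs; the `DANGER_WEIGHTS` table and the weight-divided-by-distance urgency score are hypothetical illustrations, not the scoring used in [7]. Only the 20-metre range comes from the text.

```python
from typing import Dict, List, Tuple

Detection = Tuple[str, float]  # (label, distance in metres)

# Hypothetical danger weights; a real system would need to calibrate these.
DANGER_WEIGHTS: Dict[str, float] = {"car": 3.0, "bicycle": 2.0, "person": 1.5, "pole": 1.0}


def prioritise(detections: List[Detection], max_range_m: float = 20.0,
               default_weight: float = 1.0) -> List[Detection]:
    """Return in-range detections ordered from most to least urgent.

    Urgency = danger weight / distance, so a nearby pole can outrank a distant car.
    """
    in_range = [d for d in detections if 0.0 < d[1] <= max_range_m]

    def urgency(det: Detection) -> float:
        label, distance = det
        return DANGER_WEIGHTS.get(label, default_weight) / distance

    return sorted(in_range, key=urgency, reverse=True)


print(prioritise([("pole", 2.0), ("car", 15.0), ("person", 1.0), ("car", 25.0)]))
# [('person', 1.0), ('pole', 2.0), ('car', 15.0)]
```

Only the first one or two entries would normally be announced, so the user is not overwhelmed by alerts about distant, low-risk objects.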

The second advantage is a notable improvement in the mobility and independence of visually impaired individuals. Combining visual SLAM with wearable devices allows unfamiliar, complex environments to be perceived and mapped in detail in real time. This not only gives visually impaired individuals accurate, immediate navigation data but also helps them identify potential obstacles and hazards, so they can move with greater confidence in a range of environments. In both familiar and unfamiliar settings, the technology provides navigational assistance along the routes the user has planned, steering them around obstacles and making their movement safer and more efficient. It thereby enhances the autonomy and dignity of visually impaired individuals and enables them to take part in social activities and daily life more independently [8].
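Once SLAM has produced a map, route guidance around obstacles becomes a path-planning problem. As an illustration only, the sketch below runs A* search on a small occupancy grid; the grid encoding (`'#'` occupied, `'.'` free), 4-connected unit-cost moves and the name `plan_path` are assumptions for this example, and the cited systems may use different map representations and planners.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col)


def plan_path(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """A* search on a 4-connected occupancy grid ('#' = occupied, '.' = free).

    Returns the list of cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance: admissible for 4-connected moves of cost 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]          # (f = g + h, g, cell)
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    g_cost: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:             # walk back to the start
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if g > g_cost[cell]:
            continue                            # stale heap entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None


grid = ["....",
        ".##.",
        "...."]
print(plan_path(grid, (0, 0), (2, 3)))
```

Because the map is rebuilt as the user moves, a practical system would re-run or incrementally repair the plan whenever newly mapped obstacles block the current route.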

The third advantage is that fusing SLAM with multiple sensors gives the system a more comprehensive and accurate understanding of unfamiliar and complex environments. For example, combining a camera with an ultrasonic sensor allows obstacles to be detected and recognised more accurately: the camera captures detailed image information about each obstacle, while the ultrasonic sensor provides accurate distance measurements. Exploiting the complementary strengths of different sensors lets the system adapt to, and perform well in, a broader range of environments. Multi-sensor fusion also improves reliability and accuracy by adding data redundancy, which reduces uncertainty and risk in detection. Even if a single sensor fails, the system can continue obstacle detection and environmental sensing using data from the remaining sensors, so the user is not left with a completely non-functional device. By combining these sources of information, the system can provide more reliable navigation guidance, helping visually impaired users avoid obstacles more safely and move more autonomously in a variety of complex environments [9].
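The camera-plus-ultrasonic example, including the redundancy argument, can be sketched as an inverse-variance weighted average of two distance readings that skips any sensor reporting no valid value. The noise levels (`camera_sigma`, `ultrasonic_sigma`) and the function name are hypothetical, and the weighting assumes independent, roughly Gaussian measurement errors; practical systems often use a Kalman filter for the same purpose.

```python
import math
from typing import Optional


def fuse_distance(camera_m: Optional[float], camera_sigma: float,
                  ultrasonic_m: Optional[float], ultrasonic_sigma: float) -> Optional[float]:
    """Inverse-variance weighted average of the available distance readings.

    A reading of None (or a non-finite value) is treated as a failed sensor and
    skipped, so one working sensor still yields an estimate.
    """
    readings = [(camera_m, camera_sigma), (ultrasonic_m, ultrasonic_sigma)]
    num = den = 0.0
    for value, sigma in readings:
        if value is None or not math.isfinite(value):
            continue                      # sensor failed or returned garbage
        weight = 1.0 / (sigma ** 2)       # more precise sensors count more
        num += weight * value
        den += weight
    return num / den if den > 0 else None


# Camera says 2.0 m (sigma 0.2 m), ultrasonic says 1.8 m (sigma 0.1 m).
print(fuse_distance(2.0, 0.2, 1.8, 0.1))    # about 1.84, pulled towards the sharper sensor
print(fuse_distance(None, 0.2, 1.8, 0.1))   # camera failed: falls back to ultrasonic
```

The fallback branch is the "data redundancy" point in miniature: losing one input degrades precision but does not leave the user without any distance estimate.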

3.4. System limitations and possible improvements

Although SLAM can provide real-time environmental awareness, the computation needed to process complex environmental data can introduce delays that degrade the system's real-time performance. In a complex or dynamic environment, the system must process a large volume of sensor data, including images, depth information and inputs from additional sensors, and fusing and processing this information requires substantial computational resources. If processing cannot keep pace with changes in the environment, the system's response time increases, harming both real-time performance and the user experience [7].
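A back-of-the-envelope calculation shows why latency matters for a walking user: during processing plus reaction time the user keeps moving, so the warning must arrive while enough distance remains. The sketch assumes constant walking speed and an instantaneous stop after the reaction time; the speed, reaction time and obstacle distance in the example are illustrative values, not measurements from the cited work.

```python
def safety_margin_m(obstacle_m: float, speed_mps: float,
                    latency_s: float, reaction_s: float) -> float:
    """Distance left to the obstacle once the warning is issued and the user reacts.

    Simplification: constant walking speed, and the user stops instantly after
    reacting. A negative result means the warning arrives too late.
    """
    return obstacle_m - speed_mps * (latency_s + reaction_s)


def max_tolerable_latency_s(obstacle_m: float, speed_mps: float, reaction_s: float) -> float:
    """Largest processing latency that still leaves a non-negative margin."""
    return obstacle_m / speed_mps - reaction_s


# Assumed values: obstacle 2.0 m away, walking at 1.2 m/s, 1.0 s reaction time.
print(safety_margin_m(2.0, 1.2, latency_s=0.5, reaction_s=1.0))   # about 0.2 m left
print(max_tolerable_latency_s(2.0, 1.2, reaction_s=1.0))          # about 0.67 s
```

Under these assumptions, every additional 100 ms of processing delay costs 12 cm of margin, which is why the latency budget shrinks quickly for nearby obstacles.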

In particular, some systems may need longer to detect and prioritise obstacles, because they must not only detect all potential obstacles but also determine which pose the greatest threat to the user, based on factors such as distance, size and direction of movement, and then provide feedback accordingly [2]. This computation is prone to latency on heavily loaded processors, and the real-time requirements of such systems often force a trade-off between computational speed and battery life: high-performance computing hardware significantly increases power consumption, which shortens the device's operating time between charges and reduces its portability and practicality [7].
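The speed-versus-battery trade-off can be made concrete with a simple energy model: total power is a baseline draw plus a fixed energy cost per processed frame, and runtime is battery capacity divided by power. The model and all numbers below (20 Wh battery, 1 W baseline, 0.2 J per frame) are hypothetical, chosen only to show how a designer might pick the highest processing rate that still meets a runtime target.

```python
from typing import Iterable, Optional


def runtime_hours(battery_wh: float, idle_w: float,
                  joules_per_frame: float, fps: float) -> float:
    """Battery runtime when every processed frame costs joules_per_frame."""
    power_w = idle_w + joules_per_frame * fps  # (J/frame) * (frames/s) = W
    return battery_wh / power_w


def best_frame_rate(battery_wh: float, idle_w: float, joules_per_frame: float,
                    target_hours: float, candidates: Iterable[float]) -> Optional[float]:
    """Highest candidate processing rate that still meets the runtime target."""
    feasible = [f for f in candidates
                if runtime_hours(battery_wh, idle_w, joules_per_frame, f) >= target_hours]
    return max(feasible) if feasible else None


# Hypothetical device: 20 Wh battery, 1 W baseline draw, 0.2 J per processed frame.
for fps in (10, 15, 30):
    print(fps, round(runtime_hours(20.0, 1.0, 0.2, fps), 2))
print(best_frame_rate(20.0, 1.0, 0.2, target_hours=4.0, candidates=(10, 15, 30)))
```

In this toy model, doubling the frame rate from 15 to 30 frames per second cuts runtime from 5 hours to under 3, illustrating why more efficient algorithms and low-power processors are needed rather than simply faster hardware.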

Visual SLAM may perform poorly in complex or rapidly changing environments, such as crowded spaces or scenes with drastic lighting changes, where the accuracy and reliability of sensor data can degrade and fluctuate. In these environments, sensors may receive large amounts of noisy or incomplete data, increasing errors in map construction and localisation. In crowded places, for example, fast-moving people and other dynamic objects interfere with data collection, making it difficult for the visual SLAM system to localise itself and map the environment accurately. Drastic lighting changes, such as moving from a bright outdoor area into a dimly lit indoor one, can also affect cameras and other optical sensors, leading to inaccurate, missing or lost data [6].
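A common mitigation for moving people is to discard image features that lie on dynamic objects before they are used for tracking, so that only the static scene constrains the pose estimate. The sketch below uses axis-aligned bounding boxes from a hypothetical person detector as a simplification; the dynamic SLAM approach in [6] uses panoptic segmentation, which gives pixel-accurate masks rather than boxes.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]                # (x, y) pixel coordinates
Box = Tuple[float, float, float, float]    # (x_min, y_min, x_max, y_max)


def inside(pt: Point, box: Box) -> bool:
    """True if the point lies within the box (edges included)."""
    x, y = pt
    x0, y0, x1, y1 = box
    return x0 <= x <= x1 and y0 <= y <= y1


def filter_static_keypoints(keypoints: Sequence[Point],
                            dynamic_boxes: Sequence[Box]) -> List[Point]:
    """Drop keypoints lying on detected dynamic objects (e.g. people) before tracking."""
    return [p for p in keypoints if not any(inside(p, b) for b in dynamic_boxes)]


person_boxes = [(40.0, 40.0, 100.0, 100.0)]  # hypothetical detector output
print(filter_static_keypoints([(10.0, 10.0), (50.0, 50.0), (200.0, 120.0)], person_boxes))
# [(10.0, 10.0), (200.0, 120.0)]
```

The trade-off is that in very crowded scenes this can remove most features, leaving too few static points for reliable tracking.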

While the integration of SLAM technology with wearable devices offers significant advantages, there are potential challenges associated with user adaptation. For visually impaired individuals, specialized training may be necessary to fully leverage the capabilities of these technologies. As the systems are complex to operate, users must invest time and receive instruction to master their use. Furthermore, these devices necessitate periodic updating and maintenance to ensure optimal performance. Consequently, these learning and adaptation processes may impede the extensive adoption of these systems by visually impaired users [9].

4. System optimisation and development trends

Future developments include more efficient algorithms and more advanced hardware, such as dedicated processing units (e.g. GPUs) and low-power processors. These improvements can increase the system's computational speed and reduce latency, improving its performance and reliability in real-time use [2,7].

Multi-sensor fusion, such as combining cameras, Light Detection and Ranging (LiDAR) and ultrasonic sensors, should be used to improve the accuracy and reliability of the data, compensating for the shortcomings of any single sensor under different environmental conditions. For example, LiDAR provides highly accurate distance measurements and works well in low light, while ultrasonic sensors are good at detecting nearby obstacles. It is also important to develop smarter algorithms that can dynamically adapt to complex environmental changes and correct sensor data accordingly. For example, processing sensor data with deep learning techniques can significantly improve performance in complex environments, enabling the system to detect and filter out noisy data more effectively and thus improving the reliability and accuracy of environmental sensing and obstacle detection [7,10].
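A learned noise filter is beyond the scope of a short example, but the goal of "filtering noisy data" can be illustrated with a much simpler classical stand-in: a sliding-window median filter applied to a stream of ultrasonic range readings. This is explicitly not a deep-learning method; the class name and window size are hypothetical, and the example only shows how isolated spurious readings (such as echoes) can be suppressed before they trigger false alarms.

```python
from collections import deque
from statistics import median


class MedianFilter:
    """Sliding-window median that suppresses isolated spikes in a range stream."""

    def __init__(self, window: int = 5) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self._buf = deque(maxlen=window)  # oldest reading drops out automatically

    def update(self, reading: float) -> float:
        """Add a new reading and return the median of the current window."""
        self._buf.append(reading)
        return median(self._buf)


f = MedianFilter(window=3)
print([f.update(r) for r in [1.0, 1.1, 9.0, 1.2, 1.1]])  # the 9.0 spike is never output
```

A larger window rejects longer bursts of noise but also delays the response to a genuinely approaching obstacle, the same latency trade-off discussed in Section 3.4.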

5. Conclusion

This paper examines the problems involved in combining a visual SLAM system with wearable devices to help visually impaired people avoid obstacles in unfamiliar and complex environments, and to help them identify and avoid potential hazards by combining their own path planning with analysis of the data detected by the system. To contribute to the design of a travel and obstacle avoidance navigation system for visually impaired people based on visual SLAM and wearable devices, the paper examines the feasibility of the combined system, describes its workflow and examines its benefits in helping visually impaired people travel and avoid obstacles. It also presents the system's algorithmic and sensor limitations in complex and changing environments, and proposes future development trends and corresponding areas for optimisation.

References

[1]. Bai J, Liu Z, Lin Y, Li Y, Lian S, Liu D. Wearable Travel Aid for Environment Perception and Navigation of Visually Impaired People. Electronics. 2019; 8(6):697. https://doi.org/10.3390/electronics8060697

[2]. Chen Z, Liu X, Kojima M, Huang Q, Arai T. A Wearable Navigation Device for Visually Impaired People Based on the Real-Time Semantic Visual SLAM System. Sensors. 2021; 21(4):1536. https://doi.org/10.3390/s21041536

[3]. Z. Wang, H. Liao, Z. Jia and J. Wu, "Semantic Mapping Based on Visual SLAM with Object Model Replacement Visualization for Cleaning Robot," 2022 IEEE International Conference on Robotics and Biomimetics (ROBIO), Jinghong, China, 2022, pp. 569-575, doi: 10.1109/ROBIO55434.2022.10011717.

[4]. Francisco J. Perez-Grau, J. Ramiro Martinez-de Dios, Julio L. Paneque, J. Joaquin Acevedo, Arturo Torres-González, Antidio Viguria, Juan R. Astorga, Anibal Ollero, Introducing autonomous aerial robots in industrial manufacturing, Journal of Manufacturing Systems, Volume 60, 2021, Pages 312-324, ISSN 0278-6125, https://doi.org/10.1016/j.jmsy.2021.06.008.

[5]. Xu, P., Van Schyndel, R., & Song, A. (2023, June). Smart Head-Mount Obstacle Avoidance Wearable for the Vision Impaired. In International Conference on Computational Science (pp. 417-432). Cham: Springer Nature Switzerland.

[6]. Ou, W., Zhang, J., Peng, K., Yang, K., Jaworek, G., Müller, K., & Stiefelhagen, R. (2022, July). Indoor navigation assistance for visually impaired people via dynamic SLAM and panoptic segmentation with an RGB-D sensor. In International Conference on Computers Helping People with Special Needs (pp. 160-168). Cham: Springer International Publishing.

[7]. Asiedu Asante, B. K., & Imamura, H. (2023). Towards robust obstacle avoidance for the visually impaired person using stereo cameras. Technologies, 11(6), 168.

[8]. Rahman M, Khadem M, Siddiquee MM, et al. SLAM for Visually Impaired People: a Survey. arXiv. Published online December 9, 2022. Available at: https://arxiv.org/abs/2212.04745. Accessed July 27, 2024.

[9]. Joseph AM, Kian A, Begg R. State-of-the-Art Review on Wearable Obstacle Detection Systems Developed for Assistive Technologies and Footwear. Sensors. 2023; 23(5):2802. https://doi.org/10.3390/s23052802

[10]. Zhang Z, Lin F, Wu T. Multi-Sensor Fusion for SLAM in Dynamic Environments: A Survey. Sensors. 2022;22(4):1356. doi:10.3390/s22041356.

Cite this article

Zhang, Y. (2024). Intelligent assistive obstacle avoidance device based on SLAM and wearable technology. Theoretical and Natural Science, 41, 98-103.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 2nd International Conference on Mathematical Physics and Computational Simulation

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
