1. Introduction

Brain-computer interfaces (BCIs) broadly refer to technologies that enable direct interaction between machines and brain neural signals [1]. The pursuit of innovative human-machine interaction and assistive technologies has driven the exploration and advancement of many interdisciplinary fields. Among these burgeoning areas, wearable BCI technology has emerged as a critical junction of neuroscience, materials engineering, and electronic information engineering. The path toward wearable BCIs can be traced back to a fundamental desire for more intimate and efficient human-machine communication channels, a goal pursued since brain activity signals were first recorded [2]. This aspiration is reinforced by societal demand for better assistive devices for people with disabilities and by the rapid growth of industrial automation and artificial intelligence applications [3-5]. Global trends toward digitization and ubiquitous computing have intensified the need for wearable technologies that integrate seamlessly into daily life. Consequently, the combination of neural interfacing and wearable devices has become an attractive research area, significantly advancing fields such as rehabilitation, communication, and entertainment [6].

BCIs can process various brain signals to achieve interactive functions. They enable direct communication between the human brain and external devices by capturing brain signals through electrodes or sensors and translating them into executable commands. The signals used by BCIs can be divided into two categories: passive and active. Passive signals, such as steady-state visual evoked potentials (SSVEP) and P300 signals, are induced by external stimuli [3]; a passive BCI monitors brain activity for these stimulus-triggered responses without requiring deliberate control from the user. Active signals, such as motor imagery and brain rhythm signals, are generated by the user's intentional mental actions [7]. Independently of this distinction, both kinds of BCI can be invasive or non-invasive, each with its own benefits and limitations. Invasive methods implant electrodes into the brain and provide precise readings but pose medical risks; non-invasive methods capture signals from outside the skull, which is less risky but usually less accurate. The choice between active and passive, and between invasive and non-invasive, depends on factors such as the intended application, required precision, ethics, and feasibility. As these technologies evolve, they continue to broaden the possibilities of human-computer interaction, but they also pose challenges that must be addressed.

This article reviews research on the use of active and passive signals in BCI systems. It provides an overview of recent innovative work on BCIs, summarizing the methodologies, reporting on their applications, and discussing the advantages and drawbacks of each study. Finally, it points out future directions that require further in-depth exploration.

2. Technological Breakthrough, Innovation, and Application

2.1. Passive BCIs

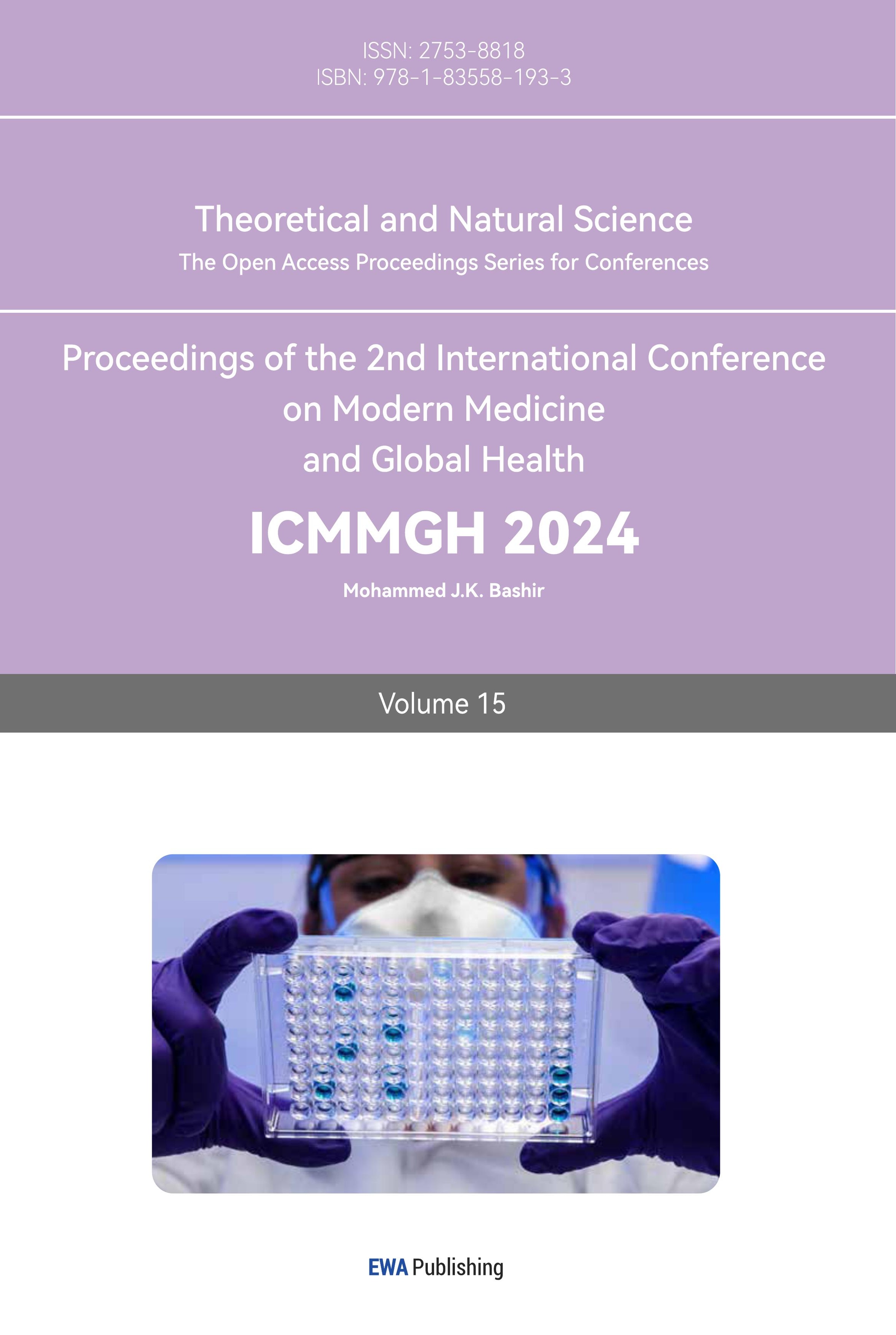

M. Wang et al. [8] explored a passive, non-invasive BCI that uses a wearable system based on steady-state visual evoked potentials (SSVEP) to control a quadcopter, as shown in Figure 1a. The system uses a head-mounted device (HMD) to provide a 3D display space, aiming to overcome the limitations of traditional monitors in SSVEP BCIs.

This approach offers a potential direction for the practical application of passive BCI systems, enabling more immersive and portable control through virtual reality (VR). In the study, subjects successfully controlled the quadcopter's flight with the developed BCI system, suggesting its potential applicability in the real world. To implement asynchronous control, the researchers set a threshold to distinguish transitional states and converted those states into hovering, so that they did not affect the flight. In addition, the researchers introduced a modified information transfer rate (ITR) metric for evaluating asynchronous navigation systems.
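The threshold-based asynchronous rule described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation of [8]: it scores each stimulus frequency with canonical correlation analysis (CCA), a common SSVEP detector that we assume here, and falls back to a "hover" command when the best score is below a threshold. The stimulus frequencies, command names, and threshold value are all hypothetical.

```python
import numpy as np

def reference_signals(freq, fs, n_samples, n_harmonics=2):
    """Sine/cosine references at the stimulus frequency and its harmonics."""
    t = np.arange(n_samples) / fs
    refs = []
    for h in range(1, n_harmonics + 1):
        refs.append(np.sin(2 * np.pi * h * freq * t))
        refs.append(np.cos(2 * np.pi * h * freq * t))
    return np.column_stack(refs)

def max_canonical_corr(x, y):
    """Largest canonical correlation between x (samples x channels) and y (samples x refs)."""
    qx, _ = np.linalg.qr(x - x.mean(axis=0))
    qy, _ = np.linalg.qr(y - y.mean(axis=0))
    # The singular values of Qx^T Qy are the canonical correlations.
    return float(np.linalg.svd(qx.T @ qy, compute_uv=False)[0])

def decide_command(eeg, fs, freqs, commands, threshold):
    """Return the command of the best-matching frequency, or "hover" when no
    frequency is matched confidently (treated as a transitional state)."""
    scores = [max_canonical_corr(eeg, reference_signals(f, fs, eeg.shape[0]))
              for f in freqs]
    best = int(np.argmax(scores))
    if scores[best] < threshold:
        return "hover", scores
    return commands[best], scores

# Hypothetical setup: 4 stimulus frequencies, 3 channels, 2 s of data at 250 Hz.
fs = 250
t = np.arange(2 * fs) / fs
freqs = [8.0, 10.0, 12.0, 15.0]
commands = ["up", "forward", "left", "right"]
rng = np.random.default_rng(0)
attending_10hz = (np.column_stack([np.sin(2 * np.pi * 10 * t + p) for p in (0.0, 0.3, 0.6)])
                  + 0.5 * rng.standard_normal((t.size, 3)))
resting = rng.standard_normal((t.size, 3))  # no stimulus is attended
cmd_active, _ = decide_command(attending_10hz, fs, freqs, commands, threshold=0.5)
cmd_rest, _ = decide_command(resting, fs, freqs, commands, threshold=0.5)
```

Mapping low-confidence windows to hovering keeps the vehicle stable while the user shifts gaze between stimuli; the threshold sets the trade-off between missed commands and false ones.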

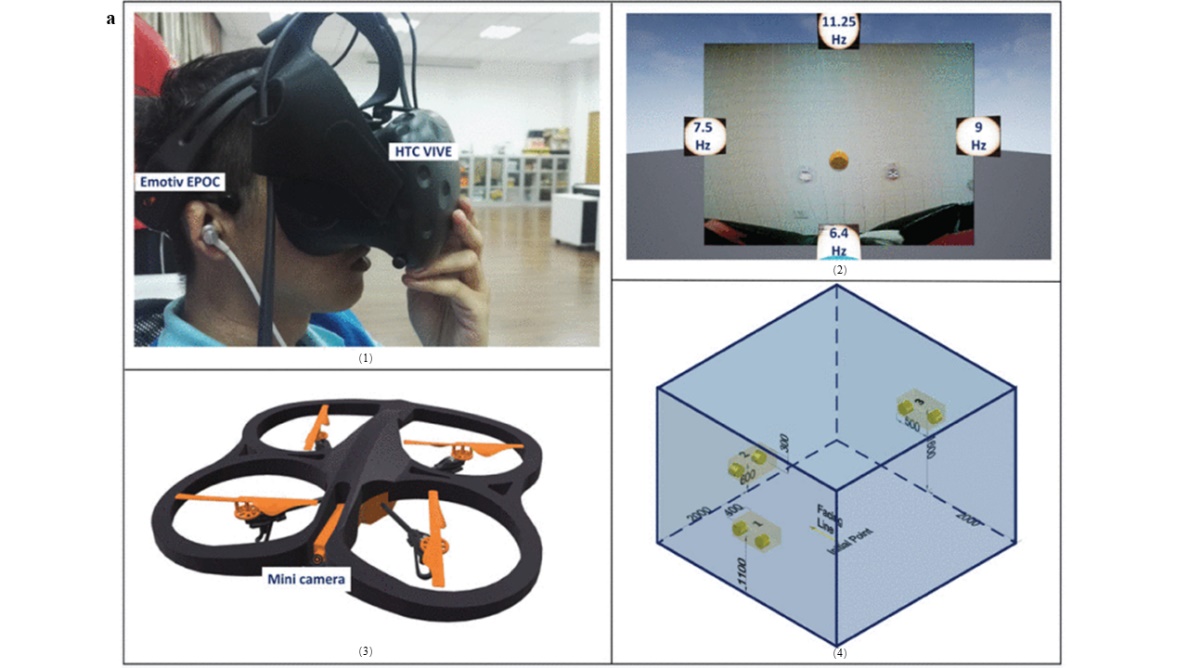

Mahmood et al. further introduced an asynchronous stimulation mode embedded within the VR experience that presents different stimuli to each eye [9], as shown in Figure 1b. The authors investigated the integration of VR and BCIs using wireless soft bioelectronics for text spelling, aiming to overcome the limitations of traditional portable BCIs, including device rigidity, bulkiness, and sensitivity to noise and motion artifacts.

To overcome these limitations, the authors combined wireless soft bioelectronics with VR. The split-eye asynchronous stimulus (SEAS) achieved high information throughput and produced unique asynchronous stimulation patterns that could be measured with only four brain electrodes. By combining dry needle electrodes with flexible materials on a wireless, wearable soft platform, the system recorded high-quality SSVEP signals and performed well in decoding them.
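To make the split-eye idea concrete, the sketch below assumes that the left and right eyes are driven at frequencies drawn from two disjoint, hypothetical sets, so that each target corresponds to a (left, right) frequency pair and the EEG contains power at both frequencies. It picks the strongest frequency from each set using FFT power on a single channel. This is an illustrative simplification, not the SEAS decoder of [9], which recorded four electrodes and classified the signals with a spatial convolutional neural network.

```python
import numpy as np

def power_at(signal, fs, freq):
    """Spectral power of `signal` at the FFT bin nearest to `freq`."""
    spectrum = np.abs(np.fft.rfft(signal)) ** 2
    bins = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    return spectrum[np.argmin(np.abs(bins - freq))]

def decode_split_eye(signal, fs, left_freqs, right_freqs):
    """Pick the strongest frequency in each (disjoint, hypothetical) set and
    return the pair, which identifies one target."""
    left = max(left_freqs, key=lambda f: power_at(signal, fs, f))
    right = max(right_freqs, key=lambda f: power_at(signal, fs, f))
    return left, right

fs = 250
t = np.arange(2 * fs) / fs                       # 2 s window -> 0.5 Hz resolution
left_freqs, right_freqs = [7.0, 8.0, 9.0], [11.0, 12.0, 13.0]
n_targets = len(left_freqs) * len(right_freqs)   # 9 pairs from 6 frequencies
rng = np.random.default_rng(1)
eeg = (np.sin(2 * np.pi * 8 * t) + 0.8 * np.sin(2 * np.pi * 13 * t)
       + 0.5 * rng.standard_normal(t.size))      # synthetic response to the pair (8, 13)
target = decode_split_eye(eeg, fs, left_freqs, right_freqs)
```

In this simplified model, pairing frequencies lets k frequencies per eye address k squared targets, which hints at why presenting different stimuli to each eye can raise throughput.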

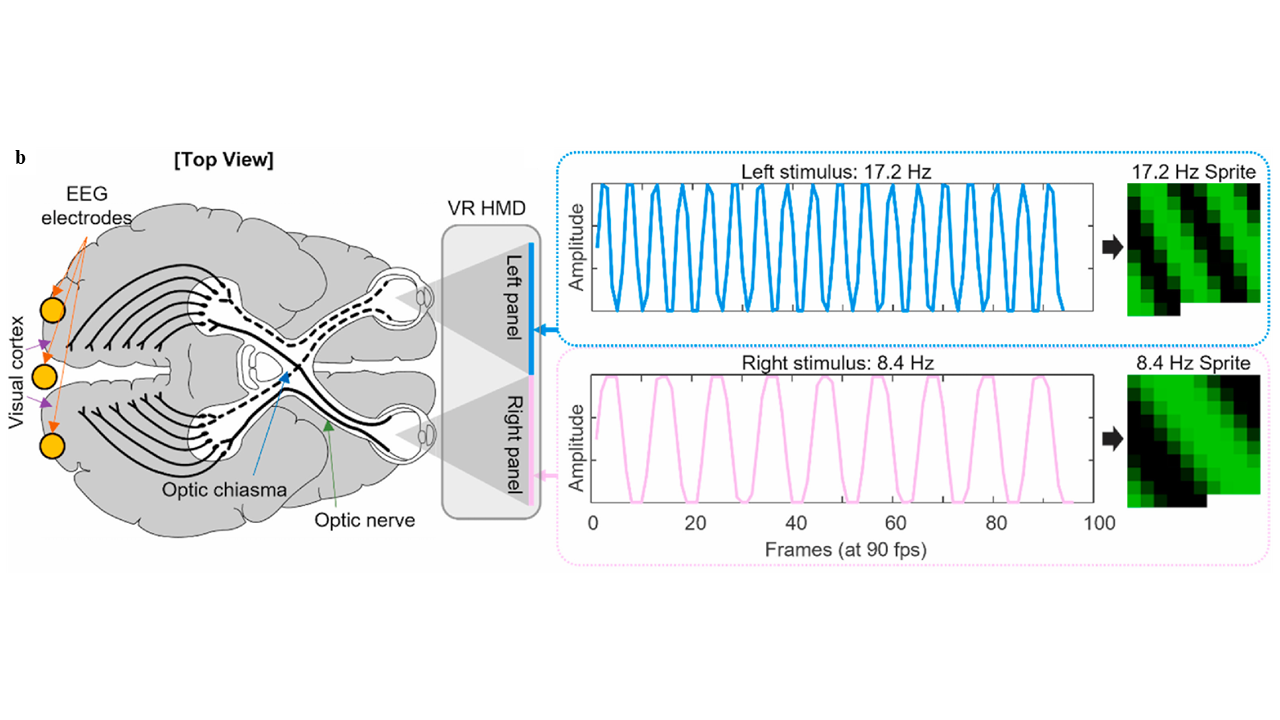

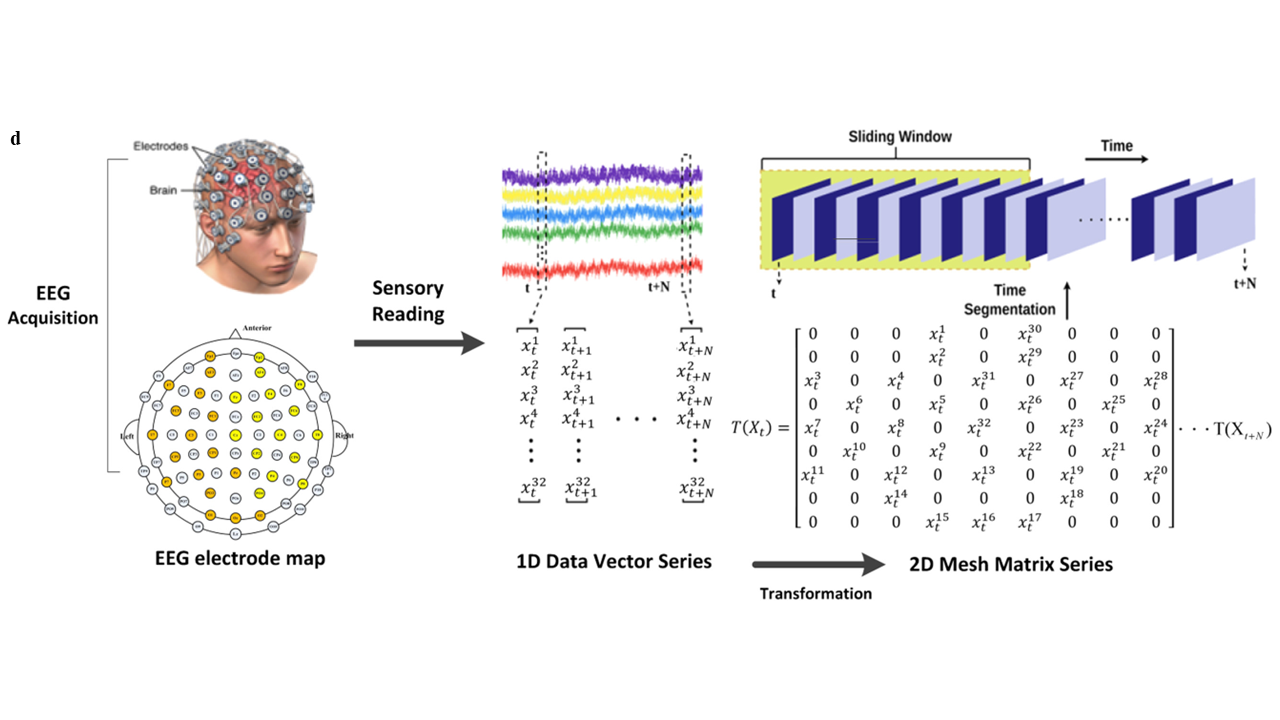

Figure 1. a. (1) Wearing examples, including head-mounted displays and EEG headsets. (2) Head-mounted display content, including four stimuli of different frequencies at the border and the first-person video returned by the aircraft. (3) Four-axis UAV. (4) Schematic diagram of the experimental space; the yellow area is the flight target, guided by a pair of LEDs. b. Cross-sectional top view of the brain and the head-mounted display; different frequencies are presented on the two sides of the head-mounted display to stimulate each eye separately. c. Example of the BCI system worn during actual work, and its working interface. d. EEG signal collection and processing flow [8-11].

The experimental results demonstrated successful real-time classification and decoding of SSVEP signals, enabling tasks such as VR text spelling and navigation in real environments. The authors employed real-time data processing and classification techniques, combined with a spatial convolutional neural network algorithm to achieve high classification accuracy.
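In a spatial convolutional network, the first layer's kernel spans all electrodes, so the network learns weighted combinations of channels before any temporal filtering. The NumPy forward pass below illustrates that idea with random, untrained weights; the layer sizes, kernel length, and pooling width are hypothetical and are not the architecture reported in [9].

```python
import numpy as np

def spatial_cnn_forward(eeg, spatial_w, temporal_k, dense_w, dense_b, pool=25):
    """Forward pass of a toy spatial CNN on one EEG epoch (channels x samples)."""
    # 1) Spatial convolution: each filter's kernel spans all channels, i.e. a
    #    learned weighted sum across electrodes -> (n_filters, samples)
    z = spatial_w @ eeg
    # 2) Temporal convolution with a shared 1D kernel -> (n_filters, samples - k + 1)
    z = np.stack([np.convolve(row, temporal_k, mode="valid") for row in z])
    # 3) ReLU and non-overlapping average pooling over time
    z = np.maximum(z, 0.0)
    n_pools = z.shape[1] // pool
    z = z[:, : n_pools * pool].reshape(z.shape[0], n_pools, pool).mean(axis=2)
    # 4) Fully connected layer and softmax over the stimulus classes
    logits = dense_w @ z.ravel() + dense_b
    exp = np.exp(logits - logits.max())
    return exp / exp.sum()

# Hypothetical sizes: 4 electrodes, 500 samples, 3 spatial filters, 4 classes.
rng = np.random.default_rng(0)
n_channels, n_samples, n_filters, n_classes, k = 4, 500, 3, 4, 11
eeg = rng.standard_normal((n_channels, n_samples))
spatial_w = rng.standard_normal((n_filters, n_channels))
temporal_k = rng.standard_normal(k)
flat_size = n_filters * ((n_samples - k + 1) // 25)   # 3 * 19 = 57
dense_w = 0.01 * rng.standard_normal((n_classes, flat_size))
dense_b = np.zeros(n_classes)
probs = spatial_cnn_forward(eeg, spatial_w, temporal_k, dense_w, dense_b)
```

In practice the weights would be learned by backpropagation in a deep learning framework; the point here is only the order of operations, spatial mixing first and temporal filtering second.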

The study by L. Angrisani et al. introduces a wearable BCI instrument for inspection tasks in the context of Industry 4.0, as shown in Figure 1c [10]. Using augmented reality (AR), it moves the user from a closed virtual world to the junction of the virtual and the real. Traditional inspection workflows rely on manual input interfaces, which are complex and inconvenient. To address these challenges, the researchers combine a BCI with AR glasses to eliminate the need for manual input.

The proposed method utilizes a single-channel EEG to analyze brain signals and measure steady-state visually evoked potentials (SSVEP) as a response to visual stimuli. By integrating AR glasses, the instrument provides real-time monitoring and analysis of brain signals, enabling seamless interaction with the inspection information. Experimental measurements evaluated the system's performance in different stimulation and acquisition time windows. The results showed accuracies ranging from 81.1% to 98.9%, indicating the feasibility and effectiveness of the proposed method for inspection tasks in the Industry 4.0 environment.
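Because shorter acquisition windows trade accuracy for speed, results like these are often summarized with the information transfer rate. The sketch below implements the standard Wolpaw definition, B = log2 N + P log2 P + (1 - P) log2((1 - P)/(N - 1)) bits per selection for N targets and accuracy P, scaled by 60/T for T seconds per selection. It is not the modified ITR proposed in [8], and the example inputs are hypothetical rather than values reported in [10].

```python
import math

def wolpaw_itr(n_targets, accuracy, seconds_per_selection):
    """Standard (Wolpaw) information transfer rate in bits per minute."""
    if n_targets < 2:
        raise ValueError("need at least two targets")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be in [0, 1]")
    if accuracy <= 1.0 / n_targets:
        return 0.0  # at or below chance level, no information is transferred
    bits = math.log2(n_targets)
    if accuracy < 1.0:  # the P log2 P terms vanish when P = 1
        bits += accuracy * math.log2(accuracy)
        bits += (1 - accuracy) * math.log2((1 - accuracy) / (n_targets - 1))
    return bits * 60.0 / seconds_per_selection

# Hypothetical example: two targets, 90 % accuracy, one selection every 2 s.
itr = wolpaw_itr(2, 0.9, 2.0)  # -> about 15.93 bits/min
```

A longer window that raises accuracy only slightly can therefore lower the ITR, which is why accuracy is usually reported together with the time window.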

However, the study has some limitations, as the system was tested only on healthy subjects. Future research will examine its practicality and limitations in individuals with brain disorders or abnormalities, and will optimize visual stimulation parameters to reduce eye fatigue and improve user attention.

In all of the passive BCIs discussed above, responses are evoked by designed, standardized stimuli, and the corresponding signals are collected and analyzed. In some passive BCI applications, however, such standardized stimuli cannot be defined, as in emotion induction, and the resulting EEG patterns are more complex. The study by J. Chen et al. [11] highlights the importance of emotion recognition in affective brain-computer interaction and affective computing, and explores the advantages of electroencephalography (EEG) as a non-invasive brain imaging technique. Because previous EEG-based emotion recognition methods have limitations, the authors propose a novel data representation that, combined with convolutional and recurrent neural networks, learns spatiotemporal features from EEG signals and improves emotion classification performance. Their contribution thus rests on improvements in machine learning methods.

The authors improved both the processing of EEG signals and the construction of classification models. They convert the 1D chain-like EEG vector sequences into 2D mesh-like matrix sequences and segment them with a sliding window, as shown in Figure 1d. A convolutional neural network extracts spatial features, a recurrent neural network captures temporal dependencies, and fully connected and softmax layers perform the emotion prediction. The researchers propose two hybrid architectures for EEG emotion classification: a cascaded convolutional recurrent neural network and a parallel convolutional recurrent neural network. Experimental results on the DEAP dataset show that the method achieves high emotion classification accuracy and outperforms several baseline models, including 1D CNN, 2D CNN, 3D CNN, and LSTM models.
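The 1D-to-2D conversion and sliding-window segmentation can be sketched as below. Each time sample, a vector of channel values, is placed into a grid according to electrode position, and the resulting frame sequence is cut into overlapping segments. The seven-electrode 3x3 layout, window length, and step are hypothetical; [11] used its own electrode grid and parameters.

```python
import numpy as np

# Hypothetical 3x3 layout for seven electrodes; grid cells without an
# electrode stay zero. A real headset montage would need a larger grid.
LAYOUT = {"F3": (0, 0), "Fz": (0, 1), "F4": (0, 2),
          "C3": (1, 0), "Cz": (1, 1), "C4": (1, 2),
          "Oz": (2, 1)}
CHANNELS = list(LAYOUT)  # channel order of the 1D vectors

def to_mesh(sample, channels=CHANNELS, layout=LAYOUT, shape=(3, 3)):
    """Map one 1D vector of channel values onto a 2D electrode grid."""
    mesh = np.zeros(shape)
    for value, name in zip(sample, channels):
        row, col = layout[name]
        mesh[row, col] = value
    return mesh

def to_mesh_sequence(eeg, channels=CHANNELS, layout=LAYOUT, shape=(3, 3)):
    """eeg: (samples, channels) -> (samples, rows, cols)."""
    return np.stack([to_mesh(s, channels, layout, shape) for s in eeg])

def sliding_windows(frames, window, step):
    """Cut a frame sequence into (possibly overlapping) segments."""
    starts = range(0, frames.shape[0] - window + 1, step)
    return np.stack([frames[s:s + window] for s in starts])

eeg = np.arange(100 * 7, dtype=float).reshape(100, 7)   # 100 samples, 7 channels
frames = to_mesh_sequence(eeg)                           # (100, 3, 3)
segments = sliding_windows(frames, window=20, step=10)   # (9, 20, 3, 3)
```

Each segment is then a short "video" of scalp maps: a CNN can read the spatial neighbourhood of each frame and an RNN the order of frames.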

In conclusion, the work above showcases the progress of passive BCIs and points to directions for future research. L. Angrisani et al. introduce a wearable BCI instrument integrated with AR glasses for inspection tasks in Industry 4.0 [10]. Combining SSVEP with AR provides a novel and efficient approach to hands-free information acquisition. The results demonstrate the potential of the method for industrial inspection while highlighting areas that need further improvement and research. VR also pairs well with BCI systems. Mahmood et al. presented a novel approach for VR-enabled portable BCIs that uses wireless soft bioelectronics for word spelling [9]. Their system improved on traditional BCIs and showed potential for increasing information throughput and enabling applications in VR and neurorehabilitation. The wireless soft electronics offered significant advantages, including wireless, real-time monitoring of brain signals, which makes the system suitable for portable BCIs, neurorehabilitation, and disease diagnosis. The authors plan to extend their research through large-scale human experiments, specifically with individuals with motor disabilities, so that they can control machines, communicate, and use internet applications within VR environments. M. Wang et al. investigated passive BCI technology for quadcopter control [8]. By integrating SSVEP with an HMD, the study opens new possibilities for practical applications and shows the potential value of BCIs in specific scenarios. It also highlights the need to further explore depth perception and the fixed relationship with the coordinate system, and acknowledges substantial room for improvement in the proposed system. With such improvements, this approach may offer a good way to guide spatial coordinates for VR and AR in BCI systems. Finally, using innovative machine learning, Chen et al. contribute to emotion recognition by integrating spatiotemporal EEG representations with hybrid convolutional recurrent neural networks. Their method shows promising emotion classification accuracy, providing valuable insights for affective brain-computer interaction and affective computing applications.

2.2. Active BCIs

Willett et al. [12] proposed an innovative approach to active BCI technology, as shown in Figure 2. The researchers developed a two-layer gated recurrent unit (GRU) recurrent neural network (RNN) that decodes neural activity from the motor cortex into attempted handwriting movements, which are then converted into text in real time. The team overcame the challenges of unknown letter timing and limited dataset size by adapting neural network methods from automatic speech recognition.
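For readers unfamiliar with GRUs, the sketch below shows a two-layer GRU forward pass that maps a sequence of binned neural features to per-time-step character probabilities. It uses the standard GRU update, reset, and candidate equations with random, untrained weights; the electrode count, hidden size, and character-set size are hypothetical, and the data-labeling and training procedure of [12] is not reproduced.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_gru(rng, n_in, n_hidden, scale=0.1):
    """Random (untrained) weights: one (W, U, b) triple per gate."""
    return {gate: (scale * rng.standard_normal((n_hidden, n_in)),
                   scale * rng.standard_normal((n_hidden, n_hidden)),
                   np.zeros(n_hidden))
            for gate in ("update", "reset", "candidate")}

def gru_layer(params, inputs):
    """Run one GRU layer over a (time, features) sequence."""
    Wz, Uz, bz = params["update"]
    Wr, Ur, br = params["reset"]
    Wn, Un, bn = params["candidate"]
    h = np.zeros(bz.size)
    outputs = []
    for x in inputs:
        z = sigmoid(Wz @ x + Uz @ h + bz)          # update gate
        r = sigmoid(Wr @ x + Ur @ h + br)          # reset gate
        n = np.tanh(Wn @ x + Un @ (r * h) + bn)    # candidate state
        h = (1.0 - z) * n + z * h                  # blend old and new state
        outputs.append(h)
    return np.stack(outputs)

def two_layer_gru_decoder(features, layer1, layer2, W_out, b_out):
    """Stack two GRU layers and apply a softmax readout at every time step."""
    h = gru_layer(layer2, gru_layer(layer1, features))
    logits = h @ W_out.T + b_out
    logits -= logits.max(axis=1, keepdims=True)
    probs = np.exp(logits)
    return probs / probs.sum(axis=1, keepdims=True)

# Hypothetical sizes: 50 time bins, 16 electrodes, 32 hidden units, 27 symbols.
rng = np.random.default_rng(0)
n_bins, n_electrodes, n_hidden, n_chars = 50, 16, 32, 27
features = rng.standard_normal((n_bins, n_electrodes))
layer1 = init_gru(rng, n_electrodes, n_hidden)
layer2 = init_gru(rng, n_hidden, n_hidden)
W_out = 0.1 * rng.standard_normal((n_chars, n_hidden))
b_out = np.zeros(n_chars)
char_probs = two_layer_gru_decoder(features, layer1, layer2, W_out, b_out)
```

The gates let the network keep or overwrite its state at each bin, which suits sequences whose timing per letter varies.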

This breakthrough has profound implications for people who have lost mobility or speech. The BCI system achieved a typing rate of 90 characters per minute with an online raw accuracy of 94.1%, surpassing previous BCIs. Adding autocorrection with a large-vocabulary language model raised offline accuracy above 99%. The researchers argue that time-varying movement patterns such as handwriting are easier to distinguish from one another in neural activity than straight point-to-point movements, which is one reason the handwriting BCI is faster than point-and-click typing. These results mark a significant advance in BCI technology and a potential leap in real-world applications, particularly for improving the quality of life of people with paralysis.

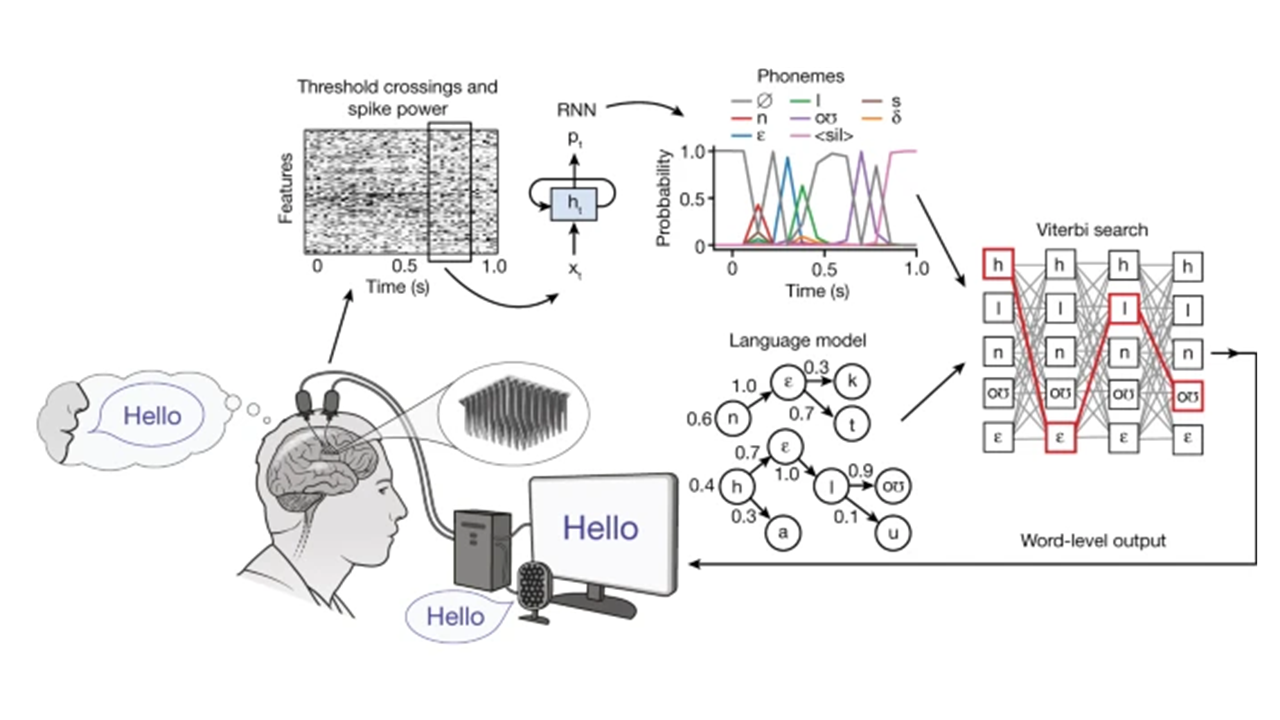

Figure 2. Speech decoding process. First, neural activity signals recorded on the electrodes are collected, binned, and smoothed. Second, an RNN analyzes the time series of neural signals to derive the probability of each phoneme being spoken. Finally, a language model combines the phoneme probabilities to decode the sentence the user intended [12].
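The pipeline in Figure 2 can be sketched in a few functions: spike times are counted in fixed-width bins, the counts are smoothed with a Gaussian kernel, and per-frame phoneme probabilities are collapsed into a phoneme sequence. For the last step we assume a greedy connectionist temporal classification (CTC) collapse, a common technique for turning frame-wise outputs into symbol sequences; the bin width, kernel width, and symbols are hypothetical, and the language-model step is omitted.

```python
import numpy as np

def bin_spikes(spike_times, duration, bin_size):
    """Count spikes per channel in fixed-width bins -> (n_bins, n_channels)."""
    n_bins = int(round(duration / bin_size))
    counts = np.zeros((n_bins, len(spike_times)))
    for ch, times in enumerate(spike_times):
        idx = np.floor(np.asarray(times) / bin_size).astype(int)
        idx = idx[(idx >= 0) & (idx < n_bins)]
        counts[:, ch] = np.bincount(idx, minlength=n_bins)
    return counts

def gaussian_smooth(counts, sigma_bins):
    """Smooth each channel with a normalized Gaussian kernel (same length out)."""
    half = int(np.ceil(3 * sigma_bins))
    offsets = np.arange(-half, half + 1)
    kernel = np.exp(-0.5 * (offsets / sigma_bins) ** 2)
    kernel /= kernel.sum()
    if counts.shape[0] < kernel.size:
        raise ValueError("need at least as many bins as kernel taps")
    return np.column_stack([np.convolve(counts[:, ch], kernel, mode="same")
                            for ch in range(counts.shape[1])])

def greedy_ctc_decode(frame_probs, symbols, blank=0):
    """Take the best symbol per frame, merge repeats, then drop blanks."""
    best = np.argmax(frame_probs, axis=1)
    decoded, previous = [], None
    for k in best:
        if k != previous and k != blank:
            decoded.append(symbols[k])
        previous = k
    return decoded

counts = bin_spikes([np.array([0.001, 0.005, 0.025]), np.array([0.045])],
                    duration=0.06, bin_size=0.02)
smoothed = gaussian_smooth(np.ones((20, 2)), sigma_bins=1.0)
frame_probs = np.eye(3)[[1, 1, 0, 2, 2, 0, 2]]   # one-hot stand-in for RNN output
phonemes = greedy_ctc_decode(frame_probs, ["_", "HH", "AY"])
```

The blank symbol is what allows the same phoneme to appear twice in a row: frames "AY AY _ AY" decode to two "AY" tokens rather than one.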

Willett et al. [13] extended this work to speech-to-text decoding, as shown in Figure 2. Speech matches natural human communication habits and expresses intentions more naturally and directly. The study presents a high-performance speech neuroprosthesis aimed at restoring rapid communication for people with amyotrophic lateral sclerosis (ALS) by decoding the neural activity evoked by attempted speech into text. Previous speech BCIs had not achieved sufficient accuracy for unconstrained sentence communication. Here, the researchers used intracortical microelectrode arrays to record spiking activity and decode it into text. The participant, who has ALS, achieved a 9.1% word error rate with a 50-word vocabulary, showing that neural signals still represent detailed phoneme articulation even years after the loss of intelligible speech. With a 125,000-word vocabulary, the word error rate was 23.8%, the first successful demonstration of large-vocabulary decoding. The decoding speed reached 62 words per minute, a substantial improvement over previous records that approaches the speed of natural conversation (about 160 words per minute).
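The error rates above are word error rates: the word-level edit distance between the decoded and intended sentences, counting substitutions S, deletions D, and insertions I, divided by the number of words N in the intended sentence, that is (S + D + I)/N. A minimal implementation is sketched below; the example sentences are hypothetical.

```python
def word_error_rate(reference, hypothesis):
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # d[j] holds the edit distance between the current reference prefix and hyp[:j]
    d = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        prev_diag, d[0] = d[0], i
        for j, h in enumerate(hyp, start=1):
            cost = min(d[j] + 1,               # deletion of r
                       d[j - 1] + 1,           # insertion of h
                       prev_diag + (r != h))   # substitution (or match)
            prev_diag, d[j] = d[j], cost
    return d[len(hyp)] / len(ref)

wer_insert = word_error_rate("i want water", "i want some water")  # one insertion
wer_subst = word_error_rate("the cat sat", "the bat sat")          # one substitution
```

Note that WER weights every word equally, so a decoded sentence with one wrong key word can score well yet convey the wrong meaning.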

The findings revealed that area 6v (ventral premotor cortex) contributed substantially to the classification of orofacial movements, phonemes, and words, and that even small regions of it contain rich representations of the speech articulators, whereas area 44 contributed little or no information about most movements, phonemes, and words. Within area 6v, electrode location affected the results: the ventral 6v array contributed more to decoding than the dorsal array. Nevertheless, combining the signals from both arrays contributed substantially to the low error rate, presumably because the activity recorded by the two arrays is related, so that combining them makes phonemes easier for the RNN to decode. In addition, the study examined how RNN parameters and architecture choices affect classification performance through offline parameter sweeps.

The potential of BCI systems to assist able-bodied people should not be underestimated. Zhu et al. propose a novel approach that uses wearable electroencephalographic (EEG) signals and convolutional neural networks (CNNs) to detect vehicle driver drowsiness [14], making good use of machine learning in an active wearable BCI study. Drowsiness detection is crucial for driving safety and for preventing accidents caused by fatigued drivers. Traditional methods often use sensors to measure physiological signals, which can be costly, inconvenient, and intrusive for drivers.

A wearable BCI system based on the OpenBCI framework was developed to collect EEG data. Preprocessing techniques, including linear filtering, FastICA decomposition, and wavelet-threshold denoising, were applied to improve the quality of the collected EEG signals. The processed signals were then classified by CNNs with two different architectures: one with Inception modules and one with a modified AlexNet module.
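Of the preprocessing steps listed above, wavelet-threshold denoising is the least self-explanatory. The sketch below assumes a Haar wavelet, a three-level decomposition, a noise estimate from the median absolute deviation of the finest detail coefficients, and the universal soft threshold; these are common textbook choices, not necessarily those of [14]. Linear filtering and FastICA are omitted.

```python
import numpy as np

def haar_dwt(x):
    """One level of the orthonormal Haar transform -> (approximation, detail)."""
    approx = (x[0::2] + x[1::2]) / np.sqrt(2)
    detail = (x[0::2] - x[1::2]) / np.sqrt(2)
    return approx, detail

def haar_idwt(approx, detail):
    """Invert one Haar level."""
    x = np.empty(approx.size * 2)
    x[0::2] = (approx + detail) / np.sqrt(2)
    x[1::2] = (approx - detail) / np.sqrt(2)
    return x

def soft_threshold(c, thr):
    """Shrink coefficients toward zero by thr; small ones become exactly zero."""
    return np.sign(c) * np.maximum(np.abs(c) - thr, 0.0)

def wavelet_denoise(x, levels=3):
    x = np.asarray(x, dtype=float)
    if x.size % (2 ** levels):
        raise ValueError("signal length must be divisible by 2**levels")
    approx, details = x, []
    for _ in range(levels):
        approx, d = haar_dwt(approx)
        details.append(d)
    # Noise level from the finest details (median absolute deviation / 0.6745)
    sigma = np.median(np.abs(details[0])) / 0.6745
    thr = sigma * np.sqrt(2 * np.log(x.size))   # universal threshold
    details = [soft_threshold(d, thr) for d in details]
    for d in reversed(details):
        approx = haar_idwt(approx, d)
    return approx

rng = np.random.default_rng(0)
t = np.linspace(0, 1, 1024, endpoint=False)
clean = np.sin(2 * np.pi * 5 * t)
noisy = clean + 0.3 * rng.standard_normal(t.size)
denoised = wavelet_denoise(noisy)
step_signal = np.repeat([0.0, 1.0, 0.0, 2.0], 16)   # noise-free piecewise constant
```

Only the detail coefficients are thresholded, so slow trends carried by the coarse approximation pass through unchanged.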

Challenges were encountered in optimizing the network architectures and training the CNN models. The researchers conducted iterative experimentation and parameter tuning to achieve satisfactory results in terms of classification accuracy. The CNN with Inception modules achieved a classification accuracy of 95.59% on one-second time window samples, while the CNN with the modified AlexNet module achieved an accuracy of 94.68%. Based on the classification results, an early warning strategy module was implemented to sound an alarm if a driver is identified as drowsy. The system demonstrated effective performance in detecting driver drowsiness using EEG signals.
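The review does not detail the early-warning strategy, so the sketch below shows one hypothetical rule: raise the alarm only when at least `min_drowsy` of the last `window` one-second classifications are "drowsy", so that a single misclassified window does not trigger it. The class name and parameter values are assumptions.

```python
from collections import deque

class DrowsinessAlarm:
    """Hypothetical k-of-n alarm over recent one-second classifier outputs."""

    def __init__(self, window=5, min_drowsy=4):
        self.history = deque(maxlen=window)  # oldest result drops out automatically
        self.min_drowsy = min_drowsy

    def update(self, is_drowsy):
        """Add the latest classification and report whether to sound the alarm."""
        self.history.append(bool(is_drowsy))
        return sum(self.history) >= self.min_drowsy

alarm = DrowsinessAlarm(window=5, min_drowsy=4)
decisions = [alarm.update(x) for x in [1, 1, 0, 1, 1, 0]]
```

Raising `min_drowsy` reduces false alarms at the cost of a slower warning.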

Zhang et al. developed a novel multimodal human-machine interface (mHMI) system for real-time control of a soft robot hand [15]. The researchers recognized the potential of BCI technology in stroke rehabilitation to improve the quality of life of stroke survivors, but found that existing BCI systems could not provide the multiple control commands required for real-time control of a soft robot hand.

To address this issue, the researchers integrated multiple modalities, including electroencephalogram (EEG), electromyogram (EMG), and electrooculogram (EOG), to develop the mHMI system. By combining these signals, they aimed to increase the number and accuracy of control commands. Their approach was motivated by the limitations of previous methods, which heavily relied on single-modal signals and did not fully leverage the advantages of multimodal signals. The research methodology involved integrating EEG, EMG, and EOG signals into a fully integrated system and applying appropriate signal processing and pattern recognition algorithms. The experimental setup required participants to imagine different hand gestures, perform eye movements, and use various gestures to control the soft robot hand in different modes. The researchers evaluated the performance of the mHMI system based on factors such as control speed, accuracy, and information transfer rate.
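One way several modalities can multiply the available commands is mode switching, where one signal selects the mode and another selects the command within it. The sketch below is a hypothetical controller in that spirit: an EOG event (assumed here to be a double blink) toggles between an EEG-driven and an EMG-driven mode, and the active modality's classifier label becomes the hand command. The event names, labels, and mode logic are assumptions, not the design of [15].

```python
from dataclasses import dataclass

@dataclass
class MultimodalController:
    """Hypothetical mode-switching fusion of EOG, EEG, and EMG classifier outputs."""
    modes: tuple = ("eeg", "emg")
    mode_index: int = 0

    def step(self, eog_event, eeg_label, emg_label):
        # An EOG event switches the active mode instead of moving the hand.
        if eog_event == "double_blink":
            self.mode_index = (self.mode_index + 1) % len(self.modes)
            return ("switch", self.modes[self.mode_index])
        mode = self.modes[self.mode_index]
        label = eeg_label if mode == "eeg" else emg_label
        if label is None:  # the active classifier produced no confident output
            return ("hold", mode)
        return ("gesture", label)

controller = MultimodalController()
outputs = [controller.step(None, "grasp", "open"),
           controller.step("double_blink", None, None),
           controller.step(None, "grasp", "open"),
           controller.step(None, None, None)]
```

Because each modality only has to separate a few classes, the combined system can offer more commands than any single classifier while keeping each decision simple.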

The results demonstrated the effectiveness of the mHMI system in recognizing and controlling different hand gestures. The system exhibited good control speed, accuracy, and a high information transfer rate. This performance indicates its potential for supporting rehabilitation therapies and improving the interaction between users and assistive devices.

In conclusion, active BCIs continue to innovate in both signal modalities and signal processing. They are increasingly combined with machine learning, because the signals may lack fixed feature patterns or have patterns that are too complex to design by hand, and the integration of multimodal signals may become a trend. The study by Willett et al. represents a major advance in active BCI technology, offering a promising path for decoding high-speed sequential behaviors such as handwriting from neural activity. The BCI performed well when the user wrote self-generated sentences rather than copying on-screen prompts, indicating potential for real-world use, although further research is needed on the adaptability and robustness of the technology in different contexts. The researchers went on to decode speech into text. For speech decoding, semantic accuracy arguably matters more than word accuracy: even when word accuracy is high, errors in key words can break down communication and cause decoding to fail entirely. Introducing an attention mechanism, a deep learning technique, may be a viable way to address this. The role of emotion in speech assistance should also not be underestimated, since the same statement can carry entirely different meanings when expressed with different emotions. Shifting the evaluation criterion for speech decoding from word accuracy to semantic accuracy could guide the design of loss functions for deep learning models and thereby shape the decoding process. Research on speech decoding should therefore also encompass emotion recognition and the relationship between emotion and speech semantics; emotion recognition could help distinguish similar-sounding words during decoding.

Building further on machine learning, Zhu et al. propose a novel approach to vehicle driver drowsiness detection using wearable EEG signals and CNNs, and their experimental results confirm its high classification accuracy. Future work could focus on real-time implementation and integration into driver assistance technologies to improve road safety. Multimodal signals aim to overcome the shortcomings and dependencies of individual modalities while increasing the number of available commands. The mHMI system developed by Zhang et al. represents a significant advance in multimodal human-machine interfaces for real-time control of soft robot hands: integrating EEG, EMG, and EOG signals enhances control capabilities and shows promise for stroke rehabilitation and other applications that require precise, intuitive control of assistive devices.
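As a reference point for the attention mechanism suggested above, the sketch below implements standard scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, in NumPy. How it would be wired into a speech decoder (for example, letting each output step weight neural feature frames) is a hypothetical design choice, not something evaluated in the reviewed studies.

```python
import numpy as np

def scaled_dot_product_attention(queries, keys, values):
    """queries: (n_q, d_k), keys: (n_k, d_k), values: (n_k, d_v)."""
    d_k = queries.shape[-1]
    scores = queries @ keys.T / np.sqrt(d_k)        # similarity of each query to each key
    scores -= scores.max(axis=1, keepdims=True)     # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)   # softmax over keys
    return weights @ values, weights

# A query that matches the first key almost exclusively attends to its value.
queries = np.array([[10.0, 0.0]])
keys = np.array([[10.0, 0.0], [0.0, 10.0]])
values = np.array([[1.0, 0.0], [0.0, 1.0]])
output, weights = scaled_dot_product_attention(queries, keys, values)

rng = np.random.default_rng(0)
out_rand, w_rand = scaled_dot_product_attention(rng.standard_normal((4, 8)),
                                                rng.standard_normal((6, 8)),
                                                rng.standard_normal((6, 3)))
```

Because the weights are learned from data, such a layer could in principle emphasize the neural frames most relevant to key words, which is the motivation given in the text.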

3. Conclusion

This review of wearable brain-computer interfaces (BCIs) underscores a transformative juncture in human-machine communication. Dividing BCIs into passive and active types, it explores diverse applications:

Through integration with virtual reality (VR) and augmented reality (AR), passive BCIs are breaking new ground in tasks such as quadcopter control and industrial inspection. Novel methods such as the split-eye asynchronous stimulus (SEAS) and wireless soft platforms are overcoming earlier limitations, improving real-time decoding, and enabling immersive, hands-free control systems. Active BCIs have driven innovations in handwriting decoding, speech decoding, and driver drowsiness detection. Machine learning, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), has greatly increased the efficiency and accuracy of BCI signal feature recognition. The result is a new generation of BCIs with broad applications, from assisting individuals with paralysis to enhancing driver safety.

Despite these advances, challenges remain in algorithm optimization, adaptability, the complexity of multimodal signals, and ethics. Deep learning models such as residual and recurrent neural networks for feature pattern recognition and classification may be a promising research direction, and using pre-trained large models to help present the output of BCI systems is also worth exploring. Deep learning methods that combine signals from multiple modalities are another possible future direction. Future research requires a multidisciplinary approach that blends scientific inquiry and engineering innovation. Wearable BCI technology has evolved from a theoretical concept into practical applications, and its confluence with emerging technologies such as VR, AR, and machine learning heralds a new era of interaction. The potential societal benefits, especially in rehabilitation, communication, and entertainment, are profound. This review provides a roadmap for future development and emphasizes the need for continued collaboration and innovation.

In summary, wearable BCIs present an exciting frontier of opportunities and challenges. The interplay of neuroscience, engineering, and information technology is shaping a future in which machines and human brains interface seamlessly. The ongoing research and development of wearable BCIs is not only a technological endeavor but also a pathway toward enhancing human capabilities and quality of life.

References

[1]. Gao S, Wang Y, Gao X and Hong B 2014 IEEE Trans. Biomed. Eng. 61 1436

[2]. Jamil N, Belkacem AN, Ouhbi S and Lakas A 2021 Sensors 21 4754

[3]. Rashid M, Sulaiman N, P. P. Abdul Majeed A, Musa RM, Ab. Nasir AF, Bari BS and Khatun S 2020 Front. Neurorobot. 14 25

[4]. Craik A, He Y and Contreras-Vidal JL 2019 J. Neural Eng. 16 031001

[5]. Colucci A, Vermehren M, Cavallo A, Angerhöfer C, Peekhaus N, Zollo L, Kim WS, Paik NJ and Soekadar SR 2022 Neurorehabil. Neural Repair 36 747

[6]. Saibene A, Caglioni M, Corchs S and Gasparini F 2023 Sensors 23 2798

[7]. Abiri R, Borhani S, Sellers EW, Jiang Y and Zhao X 2019 J. Neural Eng. 16 011001

[8]. Wang M, Li R, Zhang R, Li G and Zhang D 2018 IEEE Access 6 26789

[9]. Mahmood M, Kim N, Mahmood M, Kim H, Kim H, Rodeheaver N, Sang M, Yu KJ and Yeo WH 2022 Biosens. Bioelectron. 210 114333

[10]. Angrisani L, Arpaia P, Esposito A and Moccaldi N 2019 IEEE Trans. Instrum. Meas. 69 1530

[11]. Chen J, Jiang D, Zhang Y and Zhang P 2020 Comput. Commun. 154 58

[12]. Willett FR, Avansino DT, Hochberg LR, Henderson JM and Shenoy KV 2021 Nature 593 249

[13]. Willett FR et al. 2023 Nature 620 1031

[14]. Zhu M, Chen J, Li H, Liang F, Han L and Zhang Z 2021 Neural Comput. Appl. 33 13965

[15]. Zhang J, Wang B, Zhang C, Xiao Y and Wang MY 2019 Front. Neurorobot. 13 7

Cite this article

Zhao, Y. (2023). Wearable brain-computer interface technology and its application. Theoretical and Natural Science, 15, 137-145.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 2nd International Conference on Modern Medicine and Global Health

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (see Open access policy for details).
