1. Introduction

Virtual reality (VR) is a computer technology that creates virtual worlds. It uses computers to generate realistic scenarios that act on the user's vision, hearing, smell, and touch, immersing users in a virtual yet convincing experience. First, VR provides deep immersion: visual immersion through display devices and multi-sensory immersion through auxiliary devices. Second, VR enriches content by expanding the traditional 2D screen into a virtual world with three spatial dimensions plus time. Third, VR enables enhanced interaction and overcomes geographical restrictions, so users can achieve zero-distance contact in the virtual world [1-3]. As a computer simulation technology, VR is becoming increasingly sophisticated, and the industry as a whole is growing rapidly. With domestic and foreign game manufacturers investing in VR, VR games have enriched the content of the game market. At present, most VR game revenue still comes from offline experience halls, but this is only the beginning: VR games have great room for development both online and offline. The key breakthroughs lie in making content more realistic, strengthening interaction with users, and using online social platforms to attract traffic. Some believe that the future game market will be dominated by VR games.
Beyond games, VR technology is already widely used in fields such as urban planning, interior design, industrial simulation, monument restoration, bridge and road design, real estate sales, tourism and teaching, water conservancy and electric power, geological disasters, education and training, and virtual concerts. It makes activities that are hard to practice under real conditions achievable, opening up new possibilities for many industries [4].

This work proposes VR-DRUM, a VR-based music learning application for drum practice. Compared with traditional non-VR applications, it offers three advantages:

It is easy to use for anyone who wants to practice drums.

It provides more realistic audio and animation, giving a deeper learning experience.

It makes playing the instrument extremely simple: no physical instruments are needed, only one VR headset and two Touch controllers.

Specifically, it is a VR-based self-learning game built with the Unity game engine and running on Meta's Oculus Quest 2 headset.

2. Technical foundation

2.1. VR & Oculus Quest

The term virtual reality (VR) was introduced by Jaron Lanier, often called the father of virtual reality, in the 1980s. Its principle is to combine computer technology with sensor technology to generate a three-dimensional virtual world and create a new way of interaction, immersing people in a virtual environment where they can experience the world and perceive changes in imagery and space. Typical output devices on the market today include the Oculus Rift, Oculus Quest, HTC Vive, and Pico. Fig. 1 shows the Oculus Quest 2 device.

Figure 1. Oculus Quest 2 product.

2.2. Unity3D vs. Unreal Engine

Unity 3D: Since Unity Technologies released Unity in 2005, video game development has become accessible to a much wider range of developers. One reason is that Unity has a large community of users and game development companies; another is that its scripting language, C#, is simple to learn and intuitive. In addition, because Unity 3D offers a wide range of resources, many indie game developers prefer it over other game engines.

Unreal Engine: Known for its graphics and photorealistic quality, Unreal Engine is considered an AAA game engine and is used by studios that create successful games all around the world. Epic Games released Unreal Engine in 1998, and it quickly became popular among many companies because it gives developers the freedom to realize almost any vision they have for a video game.

3. Proposed system

The VR-DRUM application aims to provide a music practice tool for novice music lovers and for music students who already have some foundation.

3.1. System requirements

User Research

According to a survey by iiMedia Research (iiMedia.cn), most preschool children learn music because their parents want to develop their interests and character (more than 80%); about 67.5% learn to become more confident and 31.2% to relieve stress from school. Students majoring in art mostly learn music out of genuine love for it (76.3%) or for further education (60.0%). Among adults, 82.5% learn because they love music and 37.1% to relieve work stress. iiMedia Research analysts believe that, as people's attitudes toward music learning change and they increasingly pursue a rich spiritual life, the music education industry will undergo major adjustment, and the learning needs of various consumers will continue to drive growth in the music education market.

3.1.1. Competitive analysis. Popular music games currently available on the Oculus Store include Beat Saber and Smash Drums.

Beat Saber, developed by Beat Games, is a VR rhythm game in which users, surrounded by a futuristic world, slash the beats of adrenaline-pumping music as the beats fly toward them. Users can select and follow different songs, and each round's difficulty ranges from easy to hard. Beat Saber is also available on other platforms such as Steam and the PlayStation Store. Fig. 2 is a snapshot of a user playing Beat Saber.

Figure 2. Beat Saber user interface.

Smash Drums is a VR drum-practice application. More specifically, it is a VR drumming game featuring rock music and destructible environments, built around the energy of rock and roll. Fig. 3 is a snapshot of a user playing Smash Drums.

Figure 3. Smash Drums user interface.

Comparing the two applications, Smash Drums is more helpful for learning music, but only for a single instrument, the drums, whereas Beat Saber is easier to play. Beat Saber launched on Steam in May 2018 and became the highest-rated game on Steam less than a week after its release. In May 2019, it was released as one of the launch titles for the Oculus Quest, and its developer, Beat Games, was acquired by Facebook later that year. As of February of this year, Beat Saber has sold 4 million copies across all platforms, making it one of the most successful VR games in the world.

3.1.2. Functional requirements. The application should support drummers practicing the snare drum along with a metronome.

The game should be played from a first-person perspective.
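The metronome requirement reduces to simple timing arithmetic: at a tempo of B beats per minute, consecutive beats are 60/B seconds apart. The following Python sketch (the project itself uses C#; Python is used here only as a compact, testable illustration) precomputes click times for a fixed tempo and accents the first beat of each bar. The names `beat_interval_s`, `metronome_schedule`, and `Click`, and the 4/4 default, are hypothetical and not taken from VR-Drum.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Click:
    """One metronome click: when it sounds and whether it is accented."""
    time_s: float
    accented: bool


def beat_interval_s(bpm: float) -> float:
    """Seconds between consecutive beats at the given tempo."""
    if bpm <= 0:
        raise ValueError("tempo must be positive")
    return 60.0 / bpm


def metronome_schedule(bpm: float, bars: int, beats_per_bar: int = 4,
                       start_s: float = 0.0) -> list[Click]:
    """Precompute click times measured from a fixed start time.

    Each click is computed as start + n * interval instead of repeatedly
    adding the interval, so floating-point error does not accumulate.
    """
    interval = beat_interval_s(bpm)
    return [
        Click(time_s=start_s + n * interval, accented=(n % beats_per_bar == 0))
        for n in range(bars * beats_per_bar)
    ]


if __name__ == "__main__":
    for click in metronome_schedule(bpm=120, bars=1):
        print(f"{click.time_s:.2f}s {'ACCENT' if click.accented else 'tick'}")
```

At 120 BPM, one bar of 4/4 yields clicks at 0.0, 0.5, 1.0, and 1.5 s, with only the first accented. In Unity, such times would typically be scheduled against the audio clock (for example with `AudioSource.PlayScheduled` and `AudioSettings.dspTime`) rather than per-frame updates, so that clicks do not drift with the frame rate.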

3.1.3. Performance requirements. The application should start in less than 10 s. During play, sound, image, and motion must stay synchronized closely enough that any offset is imperceptible to the user's hearing, vision, and sense of movement.
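The two performance targets can be expressed as simple checks. This Python sketch assumes that, during testing, each drum hit is logged with the time its sound starts and the time its visual response appears; the function names, the logging approach, and the tolerance value are illustrative assumptions, since the paper does not state a numeric synchronization threshold. The frame time at the display refresh rate is a useful reference scale: the Quest 2 can run at refresh rates such as 72 Hz and 90 Hz, where one frame lasts about 13.9 ms and 11.1 ms respectively.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Duration of one display frame in milliseconds."""
    return 1000.0 / refresh_hz


def max_av_offset_ms(events: list[tuple[float, float]]) -> float:
    """Largest gap (ms) between the audio and visual response to the same hit.

    Each event is a hypothetical (audio_time_ms, visual_time_ms) pair.
    """
    return max(abs(audio - visual) for audio, visual in events)


def meets_sync_target(events: list[tuple[float, float]], tolerance_ms: float) -> bool:
    """True if every logged hit stays within the chosen tolerance."""
    return max_av_offset_ms(events) <= tolerance_ms


def meets_startup_target(startup_s: float, limit_s: float = 10.0) -> bool:
    """Startup must take less than the 10 s limit stated in the requirements."""
    return startup_s < limit_s


if __name__ == "__main__":
    # Made-up sample timings for illustration only, not measured data.
    hits = [(1000.0, 1008.0), (1500.0, 1497.0), (2000.0, 2012.0)]
    tolerance = frame_time_ms(72)  # assumption: allow at most one 72 Hz frame
    print(round(tolerance, 1), max_av_offset_ms(hits), meets_sync_target(hits, tolerance))
```

Using one frame as the tolerance is only one possible choice; a real threshold would have to come from user testing.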

3.1.4. Usability. The user interface should be clear. It must conform to the basic specifications of VR development, with simple operation and good interactivity. Ordinary users should be able to get started within a few minutes, and once playing, they should be able to use the application for more than 1 hour without dizziness.

3.2. System design

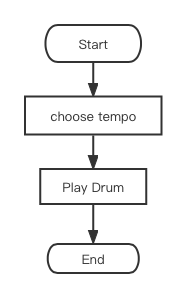

When users enter the XR-Drums game, they see a pair of drumsticks and a snare drum and can start playing; setting the tempo is optional. Fig. 4 shows the user process.

Figure 4. Flowchart of user play.

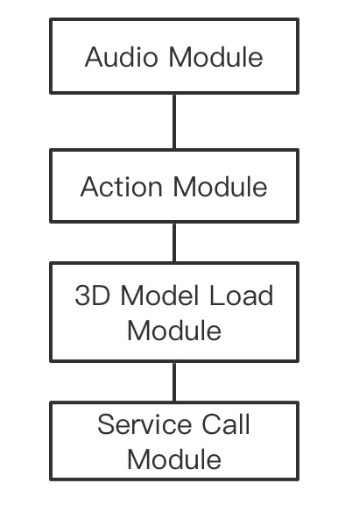

The application is divided into four modules: the first is in charge of loading 3D models, the second and third are responsible for audio management and action management respectively, and the last provides the basic-level services that the other modules call. Fig. 5 shows how the four modules relate to one another.
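The paper does not describe how the four modules communicate. As one possible arrangement, this Python sketch (an illustration only; the project is written in C#) lets the basic service module act as a small publish/subscribe event bus, so the action module can report a drum hit and the audio module can react without either one referencing the other. All class, method, and event names are hypothetical.

```python
from typing import Any, Callable


class ServiceLayer:
    """Module 4 (hypothetical): shared basic services.

    Modeled here as a minimal publish/subscribe event bus, so modules can
    talk to each other without holding direct references.
    """

    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[..., None]]] = {}

    def subscribe(self, event: str, handler: Callable[..., None]) -> None:
        self._handlers.setdefault(event, []).append(handler)

    def publish(self, event: str, **payload: Any) -> None:
        for handler in self._handlers.get(event, []):
            handler(**payload)


class ModelLoader:
    """Module 1: loads 3D models such as the stage, snare drum, and sticks."""

    def __init__(self, services: ServiceLayer) -> None:
        self._services = services
        self.loaded: list[str] = []

    def load(self, name: str) -> None:
        self.loaded.append(name)  # placeholder for real asset loading
        self._services.publish("model_loaded", name=name)


class AudioManager:
    """Module 2: reacts to hits by playing the matching drum sound."""

    def __init__(self, services: ServiceLayer) -> None:
        self.played: list[tuple[str, float]] = []
        services.subscribe("drum_hit", self.on_hit)

    def on_hit(self, drum: str, volume: float) -> None:
        self.played.append((drum, volume))  # placeholder for playing a clip


class ActionManager:
    """Module 3: turns controller input into game events."""

    def __init__(self, services: ServiceLayer) -> None:
        self._services = services

    def report_hit(self, drum: str, volume: float) -> None:
        self._services.publish("drum_hit", drum=drum, volume=volume)


if __name__ == "__main__":
    services = ServiceLayer()
    loader = ModelLoader(services)
    audio = AudioManager(services)
    actions = ActionManager(services)
    loader.load("snare")
    actions.report_hit("snare", 0.8)
    print(loader.loaded, audio.played)  # ['snare'] [('snare', 0.8)]
```

The event bus keeps the audio and action modules decoupled, so either could be replaced (for example, to support a full drum set later) without editing the other.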

After some research and hands-on practice, the following language, engine, device, and tools were chosen for the application:

Development language: C#

Engine: Unity

VR development device: Oculus Quest

Prototyping tools: Maya / Blender

Debugging tool: ADB (Android Debug Bridge)

Assets including the stage, the drum set, and the drumsticks are imported into Unity, and C# scripts add event listeners to them. The two Oculus Touch controllers act as virtual hands holding the drumsticks.

Figure 5. Application module design.
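The paper states that C# scripts attach event listeners to the imported drum and drumsticks but does not show them. This Python sketch illustrates one plausible hit rule: ignore contacts that are too slow to be strokes, ignore repeated contacts from the same stroke, and scale impact speed to playback volume. The class name and all thresholds are illustrative assumptions, not values from VR-Drum. In Unity, equivalent logic would typically run inside a collision callback such as `OnCollisionEnter`, where `Collision.relativeVelocity` gives the impact velocity.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SnareHitDetector:
    """Hypothetical hit rule for one drumstick tip touching the snare head.

    All thresholds are illustrative assumptions, not values from VR-Drum.
    """

    min_speed: float = 0.3     # m/s; slower contacts (resting the stick) are ignored
    max_speed: float = 4.0     # m/s; impacts at or above this play at full volume
    retrigger_s: float = 0.05  # s; contacts sooner than this after a hit are ignored
    _last_hit_s: float = field(default=float("-inf"), init=False, repr=False)

    def volume_for(self, speed: float) -> float:
        """Scale impact speed linearly to a volume in [0, 1]."""
        return min(1.0, max(0.0, speed / self.max_speed))

    def on_contact(self, time_s: float, downward_speed: float) -> float | None:
        """Return a playback volume if this contact counts as a hit, else None."""
        if downward_speed < self.min_speed:
            return None  # too gentle to be a stroke
        if time_s - self._last_hit_s < self.retrigger_s:
            return None  # same stroke still touching the head
        self._last_hit_s = time_s
        return self.volume_for(downward_speed)


if __name__ == "__main__":
    detector = SnareHitDetector()
    for t, v in [(0.00, 0.1), (0.10, 2.0), (0.12, 2.5), (0.40, 6.0)]:
        print(t, v, detector.on_contact(t, v))
```

The retrigger window matters because a stick can stay in contact with the drum collider for several physics steps, which would otherwise produce several sounds for one stroke.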

3.3. System implementation

The system imports the Oculus Integration package from the Unity Asset Store. The package includes:

• VR

• Audio Manager

• Platform

• Sample framework

• Spatializer

• LipSync

Table 1. VR folder structure.

| Folder | Description |
| --- | --- |
| AudioClips | Collection of audio clips |
| Materials | Materials used for graphical components within the package, such as the main GUI display, among others |
| Meshes | Meshes required by some OVR scripts, such as TrackerBounds |
| Prefabs | Collection of prefabs that provide VR support in a scene, such as OVRCameraRig, OVRHandPrefab, and OVRPlayerController |
| Resources | Collection of shaders used at runtime |
| Scenes | Main scenes illustrating common concepts |
| Scripts | C# files that tie the VR framework and Unity components together |
| Textures | Image assets required by some script components |
| ThirdParty | Collection of third-party APIs |

The XR-drum application ultimately adopted a folder structure similar to that of the Oculus Integration package, because it is well organized and has proven to work well in practice.

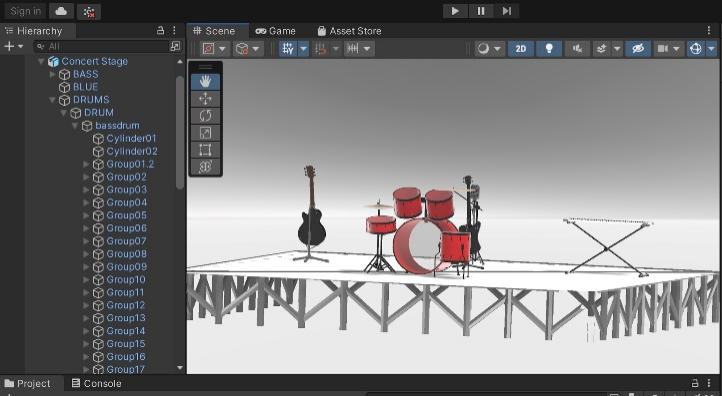

Unity recommends using XR Management (the XR Plugin Management package) to load and manage target-platform SDKs; for Oculus devices this SDK is the Oculus XR Plugin, which provides display and input support. The VR-drum application therefore also integrates this plugin. Fig. 6 shows the application under development in the Unity Editor.

Figure 6. Snapshot of VR-drum in the Unity Editor.

3.4. Experience evaluation

After configuring the Unity Editor project settings, including the Player settings, Package Manager, and Build Settings, connect the Oculus Quest to the laptop with a USB-C cable, click Build And Run in the Unity Editor, and upload the final APK to the Oculus Quest through Oculus Developer Hub. The XR-Drum application then runs on the Quest.

Compared with 2D software, VR-Drum offers three main experiences that 2D applications cannot provide: an ultra-HD visual experience, an immersive interactive experience, and a stereoscopic visual experience. Moreover, with the Oculus display's resolution of 1832 × 1920 pixels per eye, 6-DoF interaction, and spatial audio, the overall user experience proved excellent and much better than expected. However, there are also some obvious shortcomings:

• The accuracy of force sensing and gesture-direction sensing needs to be improved; as a result, practice can currently train only the user's sense of rhythm;

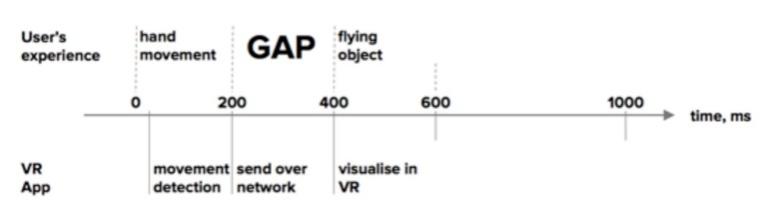

• Tactile force cannot be simulated accurately, and latency can cause dizziness. Fig. 7 shows the latency of the VR app;

• The drum has limited timbre variation, and real drumming requires both hands and feet, which cannot yet be practiced in the application [5];

• The application cannot simulate the slight deformation of the vibrating drumhead or the rebound force of the drum on the hand.

Figure 7. User experience with the VR app.
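The 1832 × 1920 per-eye resolution mentioned in the evaluation implies a substantial rendering load, which helps explain why latency matters for comfort. As a worked example, assuming the eye buffers match the display resolution and the application renders at 90 Hz (an assumption; the paper does not state the refresh rate used, and the Quest 2 also supports 72 Hz):

$$
\begin{aligned}
1832 \times 1920 &= 3\,517\,440 \ \text{pixels per eye} \\
2 \times 3\,517\,440 &= 7\,034\,880 \ \text{pixels per frame} \\
7\,034\,880 \times 90\ \text{Hz} &\approx 6.33 \times 10^{8}\ \text{pixels per second} \\
\frac{1000\ \text{ms}}{90} &\approx 11.1\ \text{ms per frame}
\end{aligned}
$$

Under these assumptions, each frame must be produced in roughly 11 ms; frames that miss this budget add delay, which may contribute to the latency-related dizziness listed among the shortcomings.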

4. Conclusion and future work

4.1. Conclusions

In summary, the VR-Drum app can help drummers with some real-life practice problems, such as the need for a physical instrument, and makes practice more fun. However, it can still be improved in many ways, and there is a long way to go.

4.2. Future work

Given the interactivity of the VR world, multiplayer interaction, such as voice and motion, could be added to the drum game in the future, possibly even supporting band ensembles.

Because music learning can be built around études, future versions could support practicing along with selected songs, which would give learners more freedom and increase their interest [6].

Learning requires feedback. Supporting practice recording, playback, and scoring in future versions would greatly help learners improve.
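Since the current version mainly trains the user's sense of rhythm, a natural first form of scoring is timing accuracy against the metronome. This Python sketch (an illustration only; the function names and grading windows are hypothetical, not from VR-Drum) matches each recorded hit time to the nearest beat with a binary search, reports the signed offset in milliseconds (negative means early), and grades each hit.

```python
from bisect import bisect_left


def nearest_beat_offsets_ms(hits_s: list[float], beats_s: list[float]) -> list[float]:
    """Signed offset in ms from each hit to its nearest beat (negative = early).

    beats_s must be sorted in ascending order and non-empty.
    """
    if not beats_s:
        raise ValueError("need at least one beat")
    offsets = []
    for hit in hits_s:
        i = bisect_left(beats_s, hit)
        # The nearest beat is either the one just before the hit or the one at/after it.
        neighbours = beats_s[max(0, i - 1):i + 1]
        nearest = min(neighbours, key=lambda beat: abs(hit - beat))
        offsets.append((hit - nearest) * 1000.0)
    return offsets


def score_practice(hits_s: list[float], beats_s: list[float],
                   perfect_ms: float = 30.0,
                   good_ms: float = 80.0) -> tuple[dict[str, int], float]:
    """Grade each hit by timing error; return (counts, mean absolute error in ms).

    The perfect/good windows are illustrative assumptions.
    """
    offsets = nearest_beat_offsets_ms(hits_s, beats_s)
    counts = {"perfect": 0, "good": 0, "miss": 0}
    for offset in offsets:
        error = abs(offset)
        if error <= perfect_ms:
            counts["perfect"] += 1
        elif error <= good_ms:
            counts["good"] += 1
        else:
            counts["miss"] += 1
    mean_abs = sum(abs(o) for o in offsets) / len(offsets) if offsets else 0.0
    return counts, mean_abs


if __name__ == "__main__":
    beats = [0.0, 0.5, 1.0, 1.5]     # e.g. 120 BPM metronome clicks
    hits = [0.01, 0.56, 1.20, 1.49]  # made-up recorded hit times
    print(score_practice(hits, beats))
```

Keeping the signed offsets, not just the grades, would also let a playback view show whether a learner tends to rush or drag.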

AI algorithms could be introduced to make the application smarter and help learners progress in music more efficiently.

Drummers could be supported in practicing the whole drum set instead of only the snare drum.

Acknowledgment

This work was guided by the CIS IEMP project. Thanks to all the teachers and colleagues for their useful advice, which made this work better. Special thanks to RA Yisa Lan for the effort put into helping schedule this essay and for encouraging me to choose this topic.

References

[1] S. Chaisanit and N. Sangboonnum Hongthong, "Traditional Musical Virtual Reality on M-Learning," The 7th International Conference for Internet Technology and Secured Transactions (ICITST-2012), 2012.

[2] T. Weissker, P. Bimberg, A. Kodanda and B. Froehlich, "Holding Hands for Short-Term Group Navigation in Social Virtual Reality," 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), 2022, pp. 728-729, doi: 10.1109/VRW55335.2022.00215.

[3] B. Hentschel, M. Wolter and T. Kuhlen, "Virtual Reality-Based Multi-View Visualization of Time-Dependent Simulation Data," 2009 IEEE Virtual Reality Conference, 2009, pp. 253-254, doi: 10.1109/VR.2009.4811041.

[4] C. Oikonomou, A. Lioret, M. Santorineos and S. Zoi, "Experimentation with the human body in virtual reality space: Body, bacteria, life-cycle," 2017 9th International Conference on Virtual Worlds and Games for Serious Applications (VS-Games), 2017, pp. 185-186, doi: 10.1109/VS-GAMES.2017.8056598.

[5] S. W. Lee, J. H. Heo and S. T. Lee, "A Comparative Study on the Drum Sound Recognition Algorithms Based Deep Learning," 2021 21st ACIS International Winter Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing (SNPD-Winter), 2021, pp. 282-283, doi: 10.1109/SNPDWinter52325.2021.00074.

[6] D. L. Page, "Music & Sound-tracks of our everyday lives: Music & Sound-making, Meaning-making, Self-making," in Proceedings of the 14th International Audio Mostly Conference: A Journey in Sound (AM'19), New York, NY, USA: Association for Computing Machinery, 2019, pp. 147–153.

Cite this article

Liao, S. (2023). A VR Music Learning Application VR-DRUM. Theoretical and Natural Science, 2, 37-43.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the International Conference on Computing Innovation and Applied Physics (CONF-CIAP 2022)

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (see the Open Access policy for details).
