1. Introduction

Hypothesis testing as it is known today has its origins in the early 1900s, when statisticians sought to improve the rigor of science and experimentation. One of the earliest pioneers was Ronald A. Fisher, who introduced the ideas underlying hypothesis testing in his 1925 book Statistical Methods for Research Workers [1]. Fisher introduced the concept of the “null hypothesis”, which researchers seek to reject, as well as the idea of using p-values to measure how probable data at least as extreme as those observed would be if the null hypothesis were true. As the 20th century progressed, Fisher's ideas were developed by other statisticians, notably Jerzy Neyman and Egon Pearson, into a formal framework with which researchers could systematically assess whether observed effects were real or simply due to chance. This approach not only advanced scientific research and data analysis but was also a major milestone in statistics. Because it offers a principled way to reason under uncertainty, hypothesis testing now occupies a crucial place in a wide variety of practical applications [2].

Imagine an entrepreneur who has just launched a bold new marketing strategy and then sees sales rise. Is the increase the result of the new strategy, or just a fluke? In a data-rich environment, relying on intuition alone carries significant risk, which is why a structured, evidence-based approach is essential. Hypothesis testing provides a systematic way to judge whether an observed effect is genuine or could plausibly have arisen by chance. The analyst formulates a null hypothesis that assumes no effect and an alternative hypothesis that asserts a real effect. After collecting and analyzing the data, the analyst determines whether the evidence is strong enough to reject the null hypothesis. This process reduces uncertainty in decision-making and ensures that conclusions are grounded in evidence rather than guesswork. Regardless of the field, hypothesis testing can serve as a reliable filter that separates real signals from random noise, leading to more accurate decisions.

Following this introduction, the paper is organized into three main sections. Section 2 reviews the core principles of hypothesis testing, including null and alternative hypotheses, p-values, and the balance between Type I and Type II errors. Section 3 examines practical applications through case studies in automotive engineering and financial auditing. Section 4 concludes and outlines future prospects, including real-time analysis and emerging statistical methods.

2. Introduction to hypothesis testing

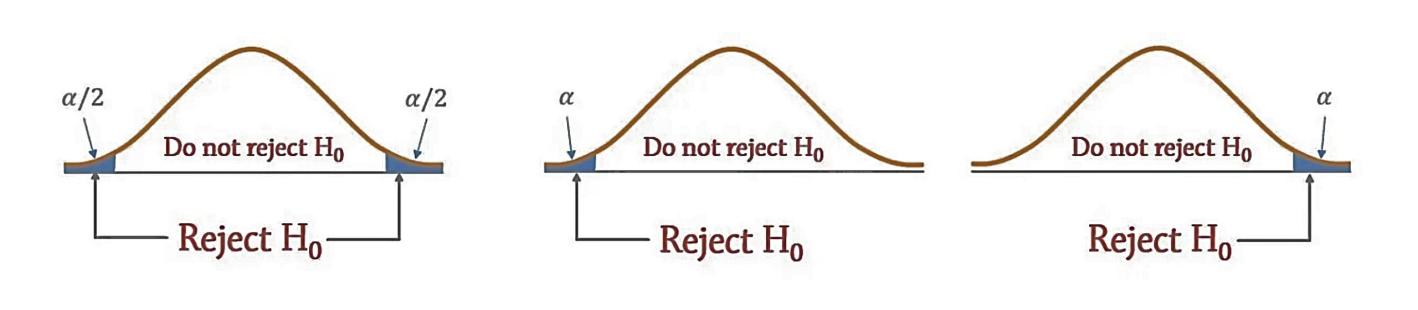

In an era of abundant data, people rely more than ever on quantitative methods to guide their decisions. It is often not enough simply to observe differences or effects in a data set; what matters is whether a difference reflects a real effect or merely random variation. Hypothesis testing has become a central method for resolving this uncertainty: by setting up a null hypothesis and an alternative hypothesis, researchers can systematically assess whether the available evidence is strong enough to reject the null hypothesis [3]. Typically, the null hypothesis ( \( {H_{0}} \) ) is a starting point that assumes no effect or relationship, while the alternative hypothesis ( \( {H_{1}} \) ) posits that a real effect exists. Researchers choose a significance level ( \( α \) ), commonly 0.05, and compute a p-value: the probability, assuming \( {H_{0}} \) is true, of obtaining a result at least as extreme as the one observed. Depending on the research question, the test may be one-tailed, looking for a difference in one specified direction (“above” or “below”), or two-tailed, looking for a difference in either direction. The three cases are illustrated in Figure 1.

Figure 1: Two-tailed and one-tailed (left- and right-tailed) hypothesis tests

If the observed test statistic falls far enough into the tail (or tails) of its distribution under \( {H_{0}} \) that the corresponding p-value is below \( α \) , the evidence is considered sufficient to reject the null hypothesis, suggesting that a meaningful effect or relationship exists.
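The decision rule above can be sketched in a few lines of Python. This is a minimal illustration that assumes the test statistic follows a standard normal distribution under \( {H_{0}} \) (a z-test); the function names and the observed value z = 1.80 are hypothetical. It shows how the same statistic can lead to different conclusions depending on which tail or tails are tested.

```python
import math


def upper_tail(z: float) -> float:
    """P(Z >= z) for a standard normal Z, via the complementary error function."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def p_value(z: float, tail: str = "two-sided") -> float:
    """p-value of an observed z statistic, assuming Z ~ N(0, 1) under H0."""
    if tail == "two-sided":
        return 2.0 * upper_tail(abs(z))  # extreme in either direction
    if tail == "right":
        return upper_tail(z)             # extreme "above"
    if tail == "left":
        return upper_tail(-z)            # extreme "below", i.e. P(Z <= z)
    raise ValueError("tail must be 'two-sided', 'right' or 'left'")


def decide(p: float, alpha: float = 0.05) -> str:
    """Reject H0 when the p-value falls below the significance level alpha."""
    return "reject H0" if p < alpha else "retain H0"


z_obs = 1.80  # hypothetical observed test statistic
for tail in ("two-sided", "right", "left"):
    p = p_value(z_obs, tail)
    print(f"{tail:>9}: p = {p:.4f} -> {decide(p)}")
```

With z = 1.80 and α = 0.05, the right-tailed test rejects \( {H_{0}} \) (p ≈ 0.036) while the two-tailed test does not (p ≈ 0.072), which is why the direction of the alternative should be fixed before the data are examined.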

This approach can be applied in a wide range of areas. For example, marketers can use hypothesis testing to compare two advertising strategies and see which one actually increases the purchase rate; policy analysts can determine whether newly introduced legislation has a real impact on the population; and scientists can determine whether an experimental drug produces a greater effect than a placebo [4]. In most cases, hypothesis testing keeps people from jumping to hasty conclusions and from bearing the costs of acting on them. Moreover, it reflects a central tenet of scientific thinking: claims must be continually tested against concrete evidence. By stating hypotheses clearly and letting the evidence support or refute them, the method limits the influence of personal bias and grounds conclusions in observable facts. Hypothesis testing becomes more important as the amount of available data grows, because it provides a systematic way to turn unstructured information into meaningful insights and to ensure that decisions rest on demonstrable evidence rather than unsupported conjecture.
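The advertising comparison mentioned above is commonly handled with a two-proportion z-test. The sketch below is one possible implementation, assuming independent customers, samples large enough for the normal approximation, and a two-sided alternative; the purchase counts and the function name are hypothetical.

```python
import math


def two_proportion_z_test(buy_a, n_a, buy_b, n_b):
    """Two-sided z-test of H0: purchase rate A == purchase rate B.

    Uses the pooled proportion to estimate the standard error under H0
    and returns (z, p_value).
    """
    p_a, p_b = buy_a / n_a, buy_b / n_b
    pooled = (buy_a + buy_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # 2 * P(Z >= |z|)
    return z, p_value


# Hypothetical campaign results: strategy A vs strategy B, 2000 visitors each
z, p = two_proportion_z_test(buy_a=120, n_a=2000, buy_b=156, n_b=2000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Here the observed rates are 6.0% and 7.8%; the resulting p-value falls below 0.05, so under these assumptions the difference would be judged statistically significant rather than a chance fluctuation.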

Although hypothesis testing provides a clear rule for deciding when to reject or retain the null hypothesis, no statistical method is foolproof. Two kinds of error are possible, known as Type I and Type II errors. A Type I error occurs when a researcher rejects a null hypothesis that is actually true; it can be thought of as crying wolf when there is no wolf. A Type II error occurs when a false null hypothesis is not rejected, so that the wolf at the door goes unnoticed. By setting the significance level (α), researchers fix the probability of a Type I error they are willing to tolerate: if the p-value is below α, they reject the null hypothesis. However, for a given sample size, lowering α too far increases the risk of a Type II error, meaning that a real effect may go undetected. Balancing the two errors usually requires weighing the real-world consequences of each. In medicine, for example, missing a real treatment effect may be worse than raising a false alarm, so researchers may tolerate a somewhat higher probability of false positives. In fields where false positives are costly, a lower α is the better choice.
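The trade-off between α and Type II errors can be made concrete with a short calculation. This sketch assumes a right-tailed one-sample z-test with normally distributed data, a known standard deviation σ = 1, n = 25 observations, and a hypothetical true effect of 0.5; none of these values come from the text. It computes β, the probability of a Type II error, for several choices of α.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution N(0, 1)


def type_ii_error(alpha, effect, sigma, n):
    """Beta for a right-tailed one-sample z-test of H0: mu = mu0 when the
    true mean is mu0 + effect, with known sigma and sample size n."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha)  # reject H0 when Z > z_crit
    shift = effect * n ** 0.5 / sigma       # mean of Z when H1 is true
    return STD_NORMAL.cdf(z_crit - shift)   # P(retain H0 | H1 true)


for alpha in (0.10, 0.05, 0.01, 0.001):
    beta = type_ii_error(alpha, effect=0.5, sigma=1.0, n=25)
    print(f"alpha = {alpha:<6} beta = {beta:.3f}  power = {1 - beta:.3f}")
```

Under these assumptions β rises from about 0.11 at α = 0.10 to about 0.72 at α = 0.001. Holding α fixed, the usual way to reduce β is to collect a larger sample, which increases the power of the test.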

3. Practical applications

3.1. Hypothesis testing in product development and engineering

The most important advantage of hypothesis testing is its ability to answer a very common question: “Is this result real, or is it due to chance?” In product design and manufacturing, people often observe differences in performance or reliability, but they are not always sure whether these differences matter. Hypothesis testing offers a structured way to determine whether what is observed is meaningful or accidental. In his paper on hypothesis testing in automotive engineering, Feng Yu emphasizes its critical role in decision-making [5]. Consider the early stages of new product development: whether the product is an automotive engine, software, or a consumer electronics device, the number of prototypes or user tests available is very limited. With such a small sample, it is easy to conclude that a new feature or tweak is “sure to work” simply because it performed well in a few trials. However, as Feng points out, without a robust testing framework it is impossible to separate real improvements from random variation [6].

Feng argues that hypothesis testing is valuable precisely because it allows conclusions to be drawn from small samples. He emphasizes that in many real-world scenarios, especially in engineering, teams cannot collect large datasets because of cost constraints or limited resources. In such cases, the t-distribution provides an effective way to draw meaningful conclusions from a small number of observations. Tests based on the t-distribution do not assume that the population variance is known (as the z-test does) and do not require large samples, provided the data are approximately normally distributed. Instead, the t-distribution has heavier tails than the normal distribution, which builds in the extra uncertainty that comes from estimating the variance from the sample itself. Mathematically, the test statistic is calculated as

\( t=\frac{\bar{X}-{μ_{0}}}{s/\sqrt{n}} \qquad (1) \)

where \( \bar{X} \) is the sample mean, \( {μ_{0}} \) is the hypothesized (or benchmark) mean, s is the sample standard deviation, and n is the number of data points [7]. The critical insight, according to Feng, is that when n is small, the extra variability in s, which is itself only an estimate from minimal data, must be reflected in how the test statistic is judged. A t-distribution table (or statistical software) then provides critical values based on the degrees of freedom \( (n-1) \) and the chosen significance level. If the value of t computed from the data exceeds the critical value (in absolute value, for a two-tailed test), it can be concluded that there is a statistically significant difference or improvement, even though relatively few measurements were available. This t-based approach is especially valuable when producing new prototypes or experimental units is costly and time-consuming. In automotive research, for example, it is impractical to build dozens of prototype engines just for exhaustive testing [8]. Instead, engineers collect a modest set of performance measurements (such as horsepower, torque, or fuel efficiency) and use the t-distribution to infer whether performance actually exceeds the benchmark. Feng emphasizes that this not only maintains a rigorous statistical framework but also ensures that the decision to adopt or abandon a design direction is not based on mere intuition.
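Equation (1) and the benchmark comparison described above can be sketched as a right-tailed one-sample t-test. This is a minimal illustration, not Feng's own procedure: the six fuel-efficiency readings and the 15.5 km/L benchmark are hypothetical, the data are assumed to be approximately normal, and the critical values are copied from a standard one-tailed t table at α = 0.05 for only a few degrees of freedom.

```python
import math
from statistics import mean, stdev

# One-tailed critical values t_{0.05, df} from a standard t table.
# Only a few degrees of freedom are included in this sketch.
T_CRIT_ONE_TAILED_05 = {4: 2.132, 5: 2.015, 6: 1.943, 7: 1.895, 8: 1.860, 9: 1.833}


def one_sample_t(sample, mu0):
    """Equation (1): t = (x_bar - mu0) / (s / sqrt(n)); returns (t, df)."""
    n = len(sample)
    s = stdev(sample)  # sample standard deviation (divides by n - 1)
    return (mean(sample) - mu0) / (s / math.sqrt(n)), n - 1


def exceeds_benchmark(sample, mu0):
    """Right-tailed test of H0: mu = mu0 against H1: mu > mu0 at alpha = 0.05."""
    t, df = one_sample_t(sample, mu0)
    return t > T_CRIT_ONE_TAILED_05[df]  # KeyError if df is outside the small table


# Hypothetical fuel-efficiency readings (km/L) from six prototype engines
readings = [15.8, 16.4, 16.1, 15.9, 16.6, 16.2]
t, df = one_sample_t(readings, 15.5)
print(f"t = {t:.2f}, df = {df}, exceeds benchmark: {exceeds_benchmark(readings, 15.5)}")
```

With these readings t is about 5.4 on 5 degrees of freedom, well above the critical value of 2.015. In practice, `scipy.stats.ttest_1samp(readings, 15.5, alternative="greater")` computes the same statistic together with an exact p-value, avoiding the hard-coded table.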

3.2. Another practical example: financial statement auditing

Feng Na's analysis of financial statement audits highlights one of the key reasons hypothesis testing is so important in practice: it provides a standardized, data-driven perspective from which complex, high-risk information can be assessed [6]. Audits typically have to work with limited data while screening for a large number of potential irregularities. Using the logic of hypothesis testing, Feng illustrates how professionals can systematically determine whether observed discrepancies in financial statements are serious enough to warrant a correction or an in-depth investigation [9]. Applied this way, hypothesis testing imposes a consistent level of rigor and helps ensure that neither personal judgment nor external pressure obscures the evidence.

In this setting, the null hypothesis is that the financial statements are free of material misstatement. First, the auditor's choice of materiality level ( \( α \) ) helps to limit Type I errors. Here, a Type I error means wrongly concluding that a compliant set of records is materially misstated. This is particularly important in audits of large multinational corporations, where labeling “clean” accounts as suspicious, even briefly, can lead to unnecessary investigation costs and reputational damage. Conversely, the same framework helps to minimize Type II errors, in which genuine red flags are overlooked. Both types of error are summarized in Table 1.

Table 1: Type I and Type II errors in financial statement auditing

| | \( {H_{0}} \) is actually true | \( {H_{0}} \) is actually false |
|---|---|---|
| Auditor rejects \( {H_{0}} \) | Type I error | Correct decision |
| Auditor retains \( {H_{0}} \) | Correct decision | Type II error |
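The two error cells in Table 1 can be interpreted as long-run frequencies. The simulation below is a generic sketch rather than an audit procedure: it assumes normally distributed data with known σ = 1, samples of n = 25, a right-tailed z-test at α = 0.05, and a hypothetical true effect of 0.5 when \( {H_{0}} \) is false. Repeating many simulated studies shows how often each kind of wrong decision occurs.

```python
import random
from statistics import NormalDist, fmean

ALPHA = 0.05
Z_CRIT = NormalDist().inv_cdf(1 - ALPHA)  # right-tailed critical value, about 1.645


def rejects_h0(sample, mu0=0.0, sigma=1.0):
    """Right-tailed z-test of H0: mu = mu0 with known sigma."""
    z = (fmean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    return z > Z_CRIT


def rejection_rate(true_mu, n=25, trials=10_000, seed=42):
    """Fraction of simulated studies (normal data, sigma = 1) that reject H0."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mu, 1.0) for _ in range(n)]
        hits += rejects_h0(sample)  # True counts as 1
    return hits / trials


type_i_rate = rejection_rate(true_mu=0.0)       # H0 true: each rejection is a Type I error
type_ii_rate = 1 - rejection_rate(true_mu=0.5)  # H0 false: each retention is a Type II error
print(f"Type I rate ~ {type_i_rate:.3f} (alpha = {ALPHA}), Type II rate ~ {type_ii_rate:.3f}")
```

The simulated Type I rate should land close to α, and the Type II rate close to the theoretical value of about 0.20 for this setting, illustrating that α controls only the first kind of error.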

According to Feng, this approach is particularly effective because its structure guards against both oversights and overreactions, helping auditors reach sound decisions. Because audit teams set thresholds for “acceptable” deviations in advance, they can determine more confidently when further work is really needed, whether that means more in-depth analysis, reviewing additional documentation, or re-examining an entire transaction [10].
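A pre-defined deviation threshold of this kind can be expressed as a one-sided exact binomial test. The sketch below is a simplified, hypothetical attribute-sampling setup, not Feng's procedure: it assumes the auditor examines 60 randomly selected transactions, that each is misstated independently with the same probability, and that \( {H_{0}} \) states the misstatement rate is at most a tolerable 5%.

```python
from math import comb


def upper_tail_p_value(k, n, p0):
    """Exact P(X >= k) for X ~ Binomial(n, p0): the one-sided p-value for
    H0: misstatement rate <= p0 after finding k misstated items in n."""
    return sum(comb(n, i) * p0**i * (1 - p0) ** (n - i) for i in range(k, n + 1))


def needs_further_work(k, n, tolerable_rate=0.05, alpha=0.05):
    """Reject H0 (flag the account for further audit work) when p < alpha."""
    return upper_tail_p_value(k, n, tolerable_rate) < alpha


n_sampled = 60  # hypothetical number of transactions examined
for k in range(4, 9):
    p = upper_tail_p_value(k, n_sampled, 0.05)
    print(f"{k} misstatements: p = {p:.3f}, further work: {needs_further_work(k, n_sampled)}")
```

Under these assumptions, six misstated items out of 60 (p ≈ 0.079) are not enough to reject \( {H_{0}} \) at α = 0.05, while seven (p ≈ 0.030) are, so the threshold for further work is fixed before any evidence is examined.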

Across a wide range of practical areas, hypothesis testing continues to prove its value by turning raw observations into actionable conclusions. Whether practitioners are constrained by small samples, faced with high-stakes decisions, or confronted with rapidly changing industry conditions, hypothesis testing helps them make sound decisions. Constructing null and alternative hypotheses forces clear thinking about what constitutes evidence and when that evidence is strong enough to support a conclusion. The framework also helps to strike a balance between drawing premature conclusions (Type I error) and overlooking real opportunities or problems (Type II error). These are precisely the reasons why hypothesis testing has become a fundamental pillar of scientific and professional practice.

4. Conclusion

Today, hypothesis testing has become an essential tool for making decisions under uncertainty. It is not a relic of early 20th-century statistics but a methodology that adapts to a wide variety of situations. What makes hypothesis testing distinctive is its balance of rigor and flexibility. Rather than simply comparing raw differences or effects in the data, it asks a deeper question, whether those differences could plausibly have arisen by chance, and answers it through a formal, probability-based procedure. This is why hypothesis testing matters so much in real-world applications, whether in engineering, medicine, finance, or any other field where uncertainty exists.

The future of hypothesis testing is both exciting and promising. As the world becomes more connected and data arrive more continuously, there is a growing trend toward combining hypothesis testing with real-time analytics, which should improve the ability to detect and respond to emerging problems. Another important trend is the push to make research more transparent and reproducible. At a time when the reproducibility of scientific findings is under intense scrutiny, hypothesis testing is likely to be accompanied by stricter standards for data reporting and analysis. To ensure that conclusions can be independently verified, researchers are increasingly encouraged to document every step of the process in detail, from data collection to the final test. This greater transparency will increase confidence in results and help create a more collaborative environment in which research methods and findings are shared openly. In addition, alternative approaches such as Bayesian inference are beginning to complement traditional hypothesis testing, offering new perspectives on uncertainty. By integrating prior knowledge and continually updating conclusions as new evidence emerges, they add an extra layer of insight to the analysis. Together, these developments promise to make statistical inference not a static snapshot of a single moment but a dynamic process that evolves with our understanding of complex phenomena.

Ultimately, the enduring importance of hypothesis testing lies in its ability to transform raw data into meaningful insights and informed decisions. It distinguishes chance events from significant effects and helps ensure that actions are based on evidence rather than assumptions. As these techniques continue to improve and are integrated with modern technology, hypothesis testing is likely to remain a cornerstone of scientific research and practical decision-making, helping people navigate an increasingly complex world with confidence and accuracy.

References

[1]. Fisher, R. A. (1925). Statistical methods for research workers. Oliver and Boyd.

[2]. amanatulla1606. (2023). A comprehensive guide to hypothesis testing: Understanding methods and applications. Medium. Retrieved March 17, 2025, from https://medium.com/@amanatulla1606/a-comprehensive-guide-to-hypothesis-testing-understanding-methods-and-applications-5e20dffce791.

[3]. Wasserstein, R. L., Schirm, A. L., & Lazar, N. A. (2019). Moving to a world beyond “p < 0.05”. The American Statistician, 73(sup1), 1–19.

[4]. McShane, B. B., Gal, D., Gelman, A., Robert, C., & Tackett, J. L. (2019). Abandon statistical significance. The American Statistician, 73(2), 235–245.

[5]. Feng Yu. (2013). Hypothesis testing in automotive engineering. (eds.) Proceedings of the seventh academic conference on “GAC-Toyota Cup” in Guangdong automobile industry (pp. 54-57)...

[6]. Feng Na. (2023). A pedagogical approach to financial statement audit objectives based on statistical hypothesis testing. Business Accounting (13), 118-121.

[7]. Amrhein, V., Greenland, S., & McShane, B. (2019). Scientists rise up against statistical significance. Nature, 567(7748), 305–307.

[8]. Montgomery, D. C., & Runger, G. C. (2014). Applied statistics and probability for engineers (6th ed.). Wiley.

[9]. Freund, J. E., & Perles, B. M. (2017). Modern elementary statistics (13th ed.). Pearson.

[10]. Gelman, A., & Loken, E. (2014). The statistical crisis in science. American Scientist, 102(6), 460–465.

Cite this article

Su, J. (2025). Application and Role of Hypothesis Testing in Practice. Theoretical and Natural Science, 106, 10-14.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 3rd International Conference on Mathematical Physics and Computational Simulation

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
