Volume 178

Published on August 2025

Volume title: Proceedings of CONF-CDS 2025 Symposium: Data Visualization Methods for Evaluation

Image style transfer has emerged as a fundamental technique in computer graphics and computer vision, enabling the transformation of visual content while preserving semantic information. The integration of transfer learning methodologies with style transfer frameworks has demonstrated significant improvements in computational efficiency, generalization capability, and quality enhancement across diverse application domains. This comprehensive review systematically analyzes the application of transfer learning techniques in image style transfer through three critical domains: artistic style transfer, photo-to-anime stylization, and medical image harmonization. Drawing upon a comprehensive review of key publications from 2016 to 2024, this paper establishes a taxonomy of transfer learning approaches in image style transfer. It evaluates their effectiveness across different application contexts and identifies fundamental principles underlying successful implementations. The analysis reveals that pre-trained feature representations reduce training time by 65-80% while maintaining comparable or superior quality metrics across all examined domains. The author proposes a unified evaluation framework for assessing transfer learning effectiveness and identifying critical research gaps requiring immediate attention. The findings provide actionable insights for researchers and practitioners, establishing clear guidelines for optimal transfer learning strategy selection based on domain characteristics, data availability, and computational constraints.

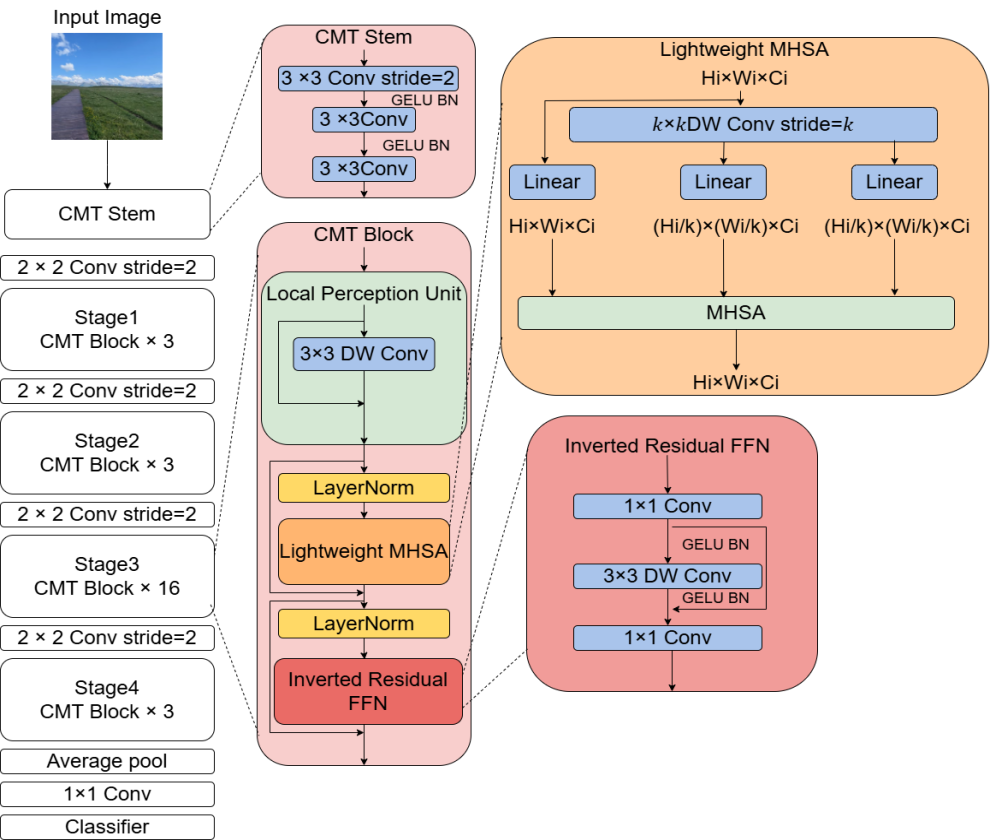

Lung cancer, a high-mortality malignancy, suffers from delayed primary diagnosis. To address limitations in traditional pathological diagnosis—specifically, insufficient local perceptual ability in medical image analysis and the high computational load of classical Transformers—we propose CMT, a hybrid CNN-Transformer model. CMT employs a convolutional encoder to extract multi-scale local features from input images. These features are transformed into global representations via Cross-Scale Feature Aggregation (CSFA) and processed by a Transformer decoder for final classification. The model is optimized using a weighted combination of cross-entropy and Dice loss functions, enhancing both accuracy and localization capability. Evaluated on the TCIA dataset, CMT achieved an accuracy of 92.8%, outperforming comparative methods.
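The weighted combination of cross-entropy and Dice loss mentioned above can be sketched in a few lines. This is a minimal illustration in plain Python, not the authors' implementation: the function names, the `ce_weight` parameter, and its default of 0.5 are assumptions, since the abstract does not state the actual weighting.

```python
import math

def cross_entropy(probs, targets, eps=1e-7):
    """Mean cross-entropy; probs and targets are lists of per-class rows."""
    total = 0.0
    for p_row, t_row in zip(probs, targets):
        # Clamp probabilities so log(0) never occurs.
        total -= sum(t * math.log(max(p, eps)) for p, t in zip(p_row, t_row))
    return total / len(probs)

def dice_loss(probs, targets, eps=1e-7):
    """Soft Dice loss: 1 - 2*overlap / (sum of predictions + sum of targets)."""
    overlap = sum(p * t
                  for p_row, t_row in zip(probs, targets)
                  for p, t in zip(p_row, t_row))
    mass = sum(sum(p_row) + sum(t_row) for p_row, t_row in zip(probs, targets))
    return 1.0 - (2.0 * overlap + eps) / (mass + eps)

def combined_loss(probs, targets, ce_weight=0.5):
    """Weighted sum of the two losses; ce_weight is a hypothetical setting."""
    return (ce_weight * cross_entropy(probs, targets)
            + (1.0 - ce_weight) * dice_loss(probs, targets))
```

Both terms are zero for a perfect one-hot prediction. The Dice term penalizes poor overlap between prediction and target, which is the usual motivation for pairing it with cross-entropy.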

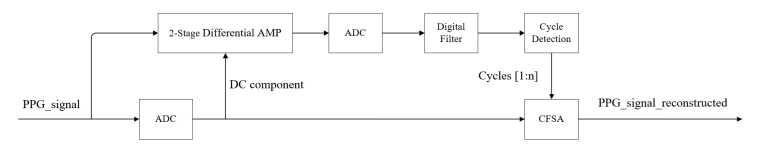

Pulse oximeters collect light signals at red and infrared (IR) wavelengths to measure the oxygen saturation of a patient's blood. Patient motion induces motion artifacts in photoplethysmography (PPG) signals, introducing significant computational errors. Cycle-by-Cycle Fourier Series Analysis (CFSA) has been demonstrated as an effective method for eliminating motion artifact interference in PPG signals. This paper explores methods to obtain sufficiently accurate cycle periods to reduce CFSA result errors and proposes an approach combining high-pass filtering with differential amplifier circuitry for preprocessing raw PPG signals to enhance cycle period detection. The experimental results confirm the effectiveness of the proposed method, demonstrating that the Signal-to-Total Noise Band Ratio (SNBR_dB) of PPG signals increased from 0.53 dB to 5.90 dB, while the linearity improved from 1.13 to 4.39. Furthermore, this study extends the utilization of analog integrated circuits for motion artifact mitigation in biomedical signal processing.

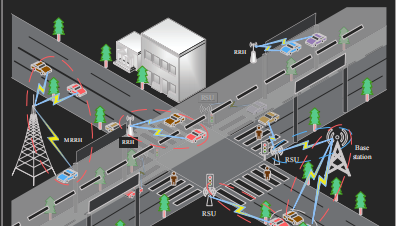

In recent years, intelligent transportation systems (ITS) and vehicle-to-everything (V2X) communication have experienced rapid development. For example, Cisco reported that global mobile network traffic grew by 74% in 2016 compared with the previous year. The sharp increase in the number of users has intensified the demand for efficient spectrum resource allocation in high-density urban traffic scenarios, which is especially evident at urban intersections. Recent studies highlight the challenges currently faced by intelligent driving systems. Traditional static channel allocation methods may be unable to accommodate dynamic vehicle distributions and real-time communication requirements, resulting in suboptimal resource allocation, increased latency, and potential security risks. To mitigate these risks, this study proposes a dynamic channel allocation algorithm based on cooperative game theory and simulates it in urban intersection scenarios. The algorithm reduces latency by dynamically allocating resource blocks (RBs), optimizing system throughput while enforcing fairness constraints and interference control. This study used a joint simulation of Prescan and MATLAB, and the framework was tested under different vehicle densities (5-25 vehicles). The results show that, compared with traditional static methods, the dynamic channel allocation algorithm based on cooperative game theory exhibits significantly reduced latency, and the fairness index remains above 0.8. The algorithm greatly alleviates the "Matthew effect" in resource allocation by integrating real-time vehicle behavior adjustment and resource monopoly punishment mechanisms, ensuring fair access for edge vehicles. This work not only provides a theoretical basis for dynamic spectrum management in vehicle networks, but also offers actionable insights for deploying intelligent transportation systems in urban environments.
Efficient communication is crucial for collision avoidance, traffic coordination, and autonomous driving in urban environments. Future directions of this research include applying reinforcement learning to achieve adaptive optimization and extending the framework to multi-base-station scenarios to support large-scale smart city applications.
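The abstract does not say which fairness index is reported. One common choice for resource allocation studies is Jain's fairness index, and the sketch below assumes that definition purely for illustration. The function name and the sample throughput values are hypothetical.

```python
def jain_fairness(allocations):
    """Jain's index: (sum x)^2 / (n * sum x^2), ranging from 1/n to 1.

    Assumption: the source's "fairness index" may be defined differently.
    """
    n = len(allocations)
    sum_sq = sum(x * x for x in allocations)
    if n == 0 or sum_sq == 0:
        raise ValueError("need at least one non-zero allocation")
    total = sum(allocations)
    return (total * total) / (n * sum_sq)

# Hypothetical per-vehicle throughputs (arbitrary units).
balanced = jain_fairness([4, 3, 3, 2])
monopolized = jain_fairness([1, 0, 0, 0])
```

Under this definition, an index of 1 means every vehicle receives the same share, and a value of 1/n means a single vehicle monopolizes the resource blocks.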

This paper offers an in-depth look at divide-and-conquer algorithms, especially in big data sorting and retrieval, with a particular focus on how these techniques are implemented in Python. As data sizes grow and become more complex, having scalable algorithms that are both efficient and flexible is more important than ever. Divide-and-conquer approaches are naturally suited to this task because they break problems down into smaller parts, making it easier to run tasks in parallel or across distributed systems, which is especially useful in big data projects. In our review, we explore recent Python implementations of popular sorting algorithms such as merge sort and quicksort, and we compare how they perform when using multiprocessing or hybrid methods. While Python makes development straightforward and flexible, it also comes with some challenges: the Global Interpreter Lock (GIL), recursion limits, and the overhead of managing inter-process communication can all impact performance. Our findings indicate that merge sort tends to perform better than quicksort under Python's parallel processing facilities. In addition, tools such as PySpark or Dask can help overcome certain language-specific obstacles, making large-scale data processing more manageable. Overall, this review provides practical guidance for researchers and engineers aiming to strike a good balance between algorithm design and efficient implementation in systems that handle massive amounts of data.
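As a rough illustration of the divide, conquer, and combine pattern described above, the sketch below splits a list into chunks, sorts each chunk independently, and merges the results. It is not taken from the reviewed implementations: the function names and the chunk and worker counts are assumptions, and each chunk is sorted with Python's built-in `sorted` rather than a hand-written merge sort, so only the final combine step is a merge in the merge-sort sense.

```python
import heapq
from multiprocessing import Pool

def split_chunks(data, n_chunks):
    """Divide: cut data into at most n_chunks contiguous slices."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]

def chunked_merge_sort(data, n_chunks=4, map_fn=map):
    """Conquer each chunk with sorted(), then combine with a k-way merge.

    map_fn defaults to the serial built-in map; passing pool.map runs
    the per-chunk sorts in separate processes.
    """
    sorted_chunks = list(map_fn(sorted, split_chunks(data, n_chunks)))
    return list(heapq.merge(*sorted_chunks))

def parallel_merge_sort(data, workers=2):
    """Same algorithm, with chunk sorting distributed over a process pool."""
    with Pool(processes=workers) as pool:
        return chunked_merge_sort(data, n_chunks=workers, map_fn=pool.map)
```

When calling `parallel_merge_sort` from a script, do so under an `if __name__ == "__main__":` guard, since some platforms start worker processes by re-importing the main module. Each chunk is pickled and sent to a worker, which is the inter-process communication overhead the abstract refers to. For small inputs the serial path is usually faster.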

HeLa cells, the first successfully cultured human cancer cell line, are pivotal in cancer research, virology, and drug screening. However, their multi-omics heterogeneity and complex cancer-related cascades challenge traditional bulk sequencing, which fails to capture dynamic cell-cell interactions and resolve pathway crosstalk. This review systematically examines single-cell multi-omics technologies (transcriptomics, proteomics, and data integration) and AI-driven network modeling (graph neural networks, deep learning) for decoding HeLa cells' core pathways and metastasis mechanisms. It reveals single-cell-level lipid metabolic heterogeneity in cell cycle regulation, dynamic coupling of Ras/NF-κB and PI3K/AKT pathways, and HPV protein-accelerated cell cycles. Glycolysis and oxidative phosphorylation synergize to meet energy demands during metabolic reprogramming, while lactate promotes invasion. Apoptosis resistance involves high anti-apoptotic gene expression and endoplasmic reticulum stress proteins. AI models address data sparsity, predicting metabolic pathways, drug responses, and cell communication networks. Future research should develop high-sensitivity spatial multi-omics, HeLa-specific AI models, and organoid platforms to advance precision medicine.

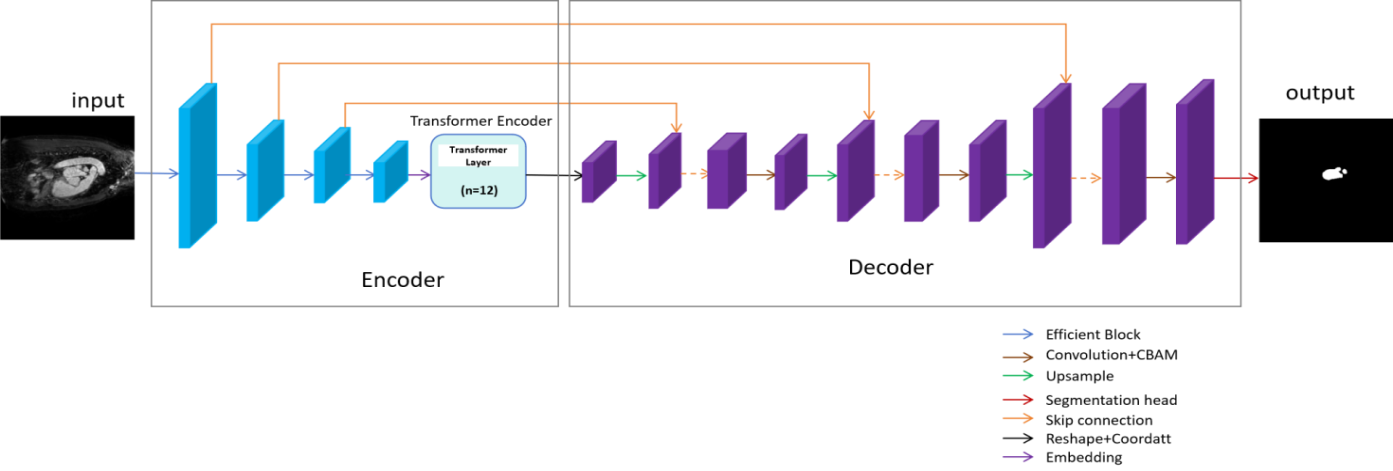

With the rapid advancement of deep learning technologies, their applications have expanded across various fields, particularly in medical image analysis, where segmentation remains a critical task. This study proposes a modified version of the TransUNet model, named EffTransUNet, to enhance segmentation performance on the Synapse multi-organ segmentation dataset and the LeftAtrium dataset. Additionally, the model is applied to the Brain Tumor dataset for image classification. Experimental results show that EffTransUNet achieves an accuracy of 91.33% on the LeftAtrium dataset and a classification accuracy of 99.69% on the Brain Tumor dataset. These findings demonstrate that the proposed model effectively improves segmentation performance and accurately classifies brain tumor MRI images, indicating good generalization ability.

With the continuous growth of the global population and the increasing severity of environmental issues, traditional agriculture is facing unprecedented challenges. Precision agriculture, as an innovative solution to address these challenges, aims to optimize agricultural resource use, improve production efficiency, and achieve sustainable development through the integration of Internet of Things (IoT) and Artificial Intelligence (AI) technologies. This paper reviews the applications of IoT and AI in precision agriculture, exploring technological advancements and practical applications in areas such as crop monitoring, pest prediction, and resource optimization. It also analyzes the research gaps and challenges in this field. The study demonstrates that IoT can provide essential support for precision agriculture through real-time data collection and transmission, while AI optimizes decision-making through intelligent algorithms, assisting farmers in achieving precise management. However, despite significant technological advancements, the deep integration of IoT and AI still faces challenges such as device compatibility, data privacy, and system integration. This paper also suggests that future research should focus on overcoming these technical bottlenecks, especially in reducing costs, improving system integration, and promoting interdisciplinary collaboration. The integration of IoT and AI will play an increasingly vital role in precision agriculture, contributing to global food security and sustainable agricultural development.

With the rapid development of big data and artificial intelligence technologies, data security has become a critical bottleneck restricting the development of data science. This study systematically explores the innovative applications and implementation challenges of modern cryptographic techniques in the field of data science. The paper first reviews the fundamental theories of cryptography, such as symmetric encryption, asymmetric encryption, and hash functions. It then focuses on the cutting-edge applications of homomorphic encryption in privacy-preserving machine learning, differential privacy in user data analysis, and blockchain in data integrity verification. Through an in-depth analysis of typical cases such as medical data sharing and user behavior modeling, the study reveals the effectiveness and limitations of cryptographic techniques in practical deployment. The study further identifies the main challenges currently faced, including algorithmic computational efficiency, the transition to post-quantum cryptography, and the balance between data privacy and usability. Finally, this paper proposes future development directions for the deep integration of cryptography and data science from both technical evolution and policy-making perspectives. This study provides important theoretical references and methodological guidance for secure computing practices in the field of data science.
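To make the link between hash functions and blockchain-style integrity verification concrete, the sketch below builds a minimal hash chain over a list of records and detects tampering. It is a simplified illustration under stated assumptions, not a scheme from the paper: the record layout, the all-zero genesis value, and the function names are hypothetical, and a real blockchain adds consensus and signatures on top of this.

```python
import hashlib
import json

GENESIS = "0" * 64  # assumed placeholder for "no previous block"

def block_hash(index, payload, prev_hash):
    """SHA-256 over a canonical JSON encoding of the block contents."""
    record = json.dumps(
        {"index": index, "payload": payload, "prev": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(record.encode("utf-8")).hexdigest()

def build_chain(payloads):
    """Link each record to the hash of the one before it."""
    chain, prev = [], GENESIS
    for index, payload in enumerate(payloads):
        digest = block_hash(index, payload, prev)
        chain.append({"index": index, "payload": payload,
                      "prev": prev, "hash": digest})
        prev = digest
    return chain

def verify_chain(chain):
    """Recompute every hash; any edited payload or broken link fails."""
    prev = GENESIS
    for index, block in enumerate(chain):
        expected = block_hash(index, block["payload"], prev)
        if (block["index"] != index or block["prev"] != prev
                or block["hash"] != expected):
            return False
        prev = block["hash"]
    return True
```

Because each block's hash covers the previous block's hash, changing any earlier record invalidates every later link. This property is what makes such structures useful for integrity verification.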

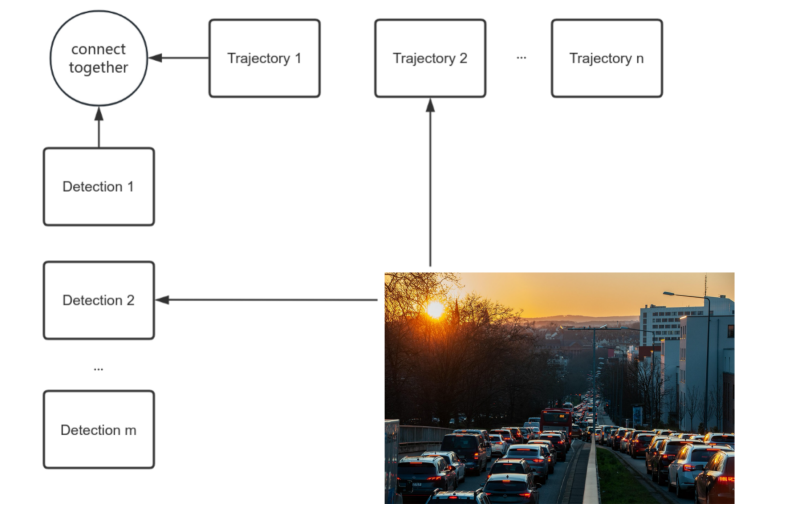

With the popularity of indoor service robots in public and private environments, the demand for accurate and real-time multi-object tracking (MOT) systems has become increasingly urgent. This study explores the development and optimization of an MOT algorithm for indoor service robots based on a modified TransTrack model. To address challenges such as occlusion, low light, and real-time performance, this study makes several improvements to the original TransTrack architecture, including a lightweight backbone network, an occlusion handling module, and an attention mechanism pruning strategy, which improves accuracy and computational efficiency to a certain extent. The system is trained and evaluated on the Indoor-MOT dataset, and some preprocessing techniques are applied to make it adaptable to some complex indoor environments. Based on standard MOT metrics such as MOTA, IDF1, FPS, and HOTA, the improved system outperforms DeepSORT, FairMOT, and the original TransTrack in terms of accuracy and speed. In spite of its limitations in tracking fast-moving targets, the system still has certain application prospects in the field of indoor robotics.
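Two of the metrics named above have simple closed forms once the error counts are available. The sketch below uses the standard definitions of MOTA and IDF1. The function names and the example counts are hypothetical and are not results from the paper. Matching detections to ground truth, which produces these counts, is not shown.

```python
def mota(false_negatives, false_positives, id_switches, num_gt):
    """MOTA = 1 - (FN + FP + IDSW) / GT, summed over all frames.

    Can be negative when the tracker makes more errors than there
    are ground-truth objects.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / num_gt

def idf1(id_true_pos, id_false_pos, id_false_neg):
    """IDF1 = 2*IDTP / (2*IDTP + IDFP + IDFN)."""
    denom = 2 * id_true_pos + id_false_pos + id_false_neg
    if denom == 0:
        raise ValueError("no identity matches or errors to score")
    return 2 * id_true_pos / denom

# Hypothetical counts for illustration only.
example_mota = mota(false_negatives=10, false_positives=5,
                    id_switches=5, num_gt=100)
example_idf1 = idf1(id_true_pos=80, id_false_pos=10, id_false_neg=10)
```

MOTA mainly reflects detection errors, while IDF1 emphasizes whether identities are preserved over time, which is why tracking papers typically report both.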
