1. Introduction

Financial risk management is a critical discipline within the realm of finance, indispensable for the stability and prosperity of institutions operating in today’s complex and interconnected global markets. As financial markets continue to evolve and become increasingly sophisticated, the ability to effectively identify, assess, and mitigate risks has become paramount for financial institutions seeking to safeguard their assets, protect their stakeholders, and sustain long-term growth. At the heart of modern risk management practices lies quantitative analysis, a multidisciplinary approach that harnesses mathematical and statistical techniques to model, analyze, and quantify various forms of financial risk. Quantitative analysis provides financial institutions with the tools and methodologies necessary to navigate the intricate landscape of financial risk. By leveraging concepts from probability theory, statistical inference, and advanced computational algorithms, institutions can gain deeper insights into the probabilistic nature of financial markets, anticipate potential risks, and devise robust risk management strategies. Probability theory, for instance, offers a formal framework for quantifying the likelihood of uncertain events, providing a basis for assessing the probability of adverse outcomes and their potential impact on financial portfolios. Moreover, statistical inference methods such as hypothesis testing and confidence intervals enable analysts to draw meaningful conclusions from empirical data, facilitating informed decision-making processes. These techniques allow risk managers to assess the significance of observed phenomena, validate model assumptions, and quantify uncertainties inherent in financial markets. 
In parallel, advancements in computational techniques, including Monte Carlo simulations and machine learning models, have revolutionized risk management practices by enabling institutions to simulate complex scenarios, analyze vast datasets, and extract actionable insights in real time. However, the effective application of quantitative analysis in financial risk management is not without its challenges. Model risk, data quality, and regulatory compliance pose significant hurdles that must be addressed to ensure the reliability and accuracy of risk management practices. Model risk arises from the inherent limitations and uncertainties associated with mathematical models, while data quality concerns encompass issues related to data accuracy, completeness, and timeliness. Regulatory compliance, on the other hand, requires adherence to a complex and ever-evolving regulatory landscape governing financial markets, which in turn calls for robust governance frameworks and attention to ethical considerations [1]. In light of these challenges, this paper aims to provide a comprehensive exploration of quantitative analysis in financial risk management. By examining the theoretical foundations, practical applications, and emerging trends in quantitative risk management, this paper seeks to equip financial professionals with the knowledge and insights necessary to navigate the complexities of modern financial markets effectively. Through a nuanced understanding of quantitative techniques and their implications for risk management practices, institutions can enhance their resilience to market volatility, optimize investment decisions, and foster greater financial stability and sustainability in an uncertain world.

2. Theoretical Foundations

2.1. Probability Theory in Risk Analysis

Probability theory serves as the cornerstone of risk analysis by providing a mathematical framework for quantifying the likelihood of various financial events and their potential impacts. Key probability distributions such as the Normal, Poisson, and Binomial distributions play crucial roles in this context. The Normal distribution, often referred to as the bell curve, is instrumental in modeling continuous data that cluster around a mean. In financial risk management, it is used to model asset returns, assuming that most outcomes will fall within a certain range of the mean:

\( f(x)=\frac{1}{\sqrt{2\pi\sigma^{2}}}\,e^{-\frac{(x-\mu)^{2}}{2\sigma^{2}}} \) (1)

Where x is the random variable, μ is the mean of the distribution, σ² is the variance of the distribution, e is the base of the natural logarithm, and π is the mathematical constant pi (approximately 3.14159).

This formula describes the shape of the bell curve, where the highest point of the curve occurs at the mean (μ), and the spread of the curve is determined by the standard deviation (σ).

For example, the parametric form of the value-at-risk (VaR) metric, a standard measure of market risk, relies on the Normal distribution to estimate the loss that will not be exceeded over a given time frame at a specified confidence level [2]. The Poisson distribution is useful for modeling the number of events within a fixed interval of time or space when these events occur at a known constant mean rate and independently of the time since the last event. In finance, it can model the arrival rate of orders on a stock exchange or the occurrence of default events in a credit portfolio, helping analysts quantify operational and credit risks respectively. The Binomial distribution applies to scenarios with two possible outcomes: success or failure, win or loss. It is commonly used in risk management to evaluate the probability of a given number of successes over a series of independent trials. For example, it can quantify the risk of default in a loan portfolio by calculating the probability of a certain number of defaults out of a total number of loans, provided the defaults are treated as independent and equally likely.
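As a minimal sketch of how the three distributions enter a risk calculation, the following Python example (standard library only) computes a Normal-based VaR, a Poisson probability for a count of default events, and a Binomial probability for defaults in a loan portfolio. The function names and every numerical input (mean return, volatility, default rate, portfolio value) are hypothetical and chosen only for illustration; they are not taken from this paper's data.

```python
from math import comb, exp, factorial
from statistics import NormalDist


def parametric_var(mu, sigma, confidence, value):
    """Loss not exceeded with probability `confidence`, assuming Normal returns."""
    z = NormalDist().inv_cdf(1.0 - confidence)  # negative quantile for confidence > 0.5
    return -(mu + z * sigma) * value


def poisson_pmf(k, rate):
    """P(N = k) for a Poisson count with mean `rate`."""
    return exp(-rate) * rate**k / factorial(k)


def binomial_pmf(k, n, p):
    """P(X = k) for k successes in n independent trials with success probability p."""
    return comb(n, k) * p**k * (1.0 - p) ** (n - k)


# Hypothetical inputs: daily mean return 0.05%, daily volatility 1%, portfolio of 1,000,000.
var_95 = parametric_var(mu=0.0005, sigma=0.01, confidence=0.95, value=1_000_000)

# Hypothetical credit portfolio averaging 1.5 defaults per year: chance of exactly two.
p_two_defaults = poisson_pmf(2, rate=1.5)

# Hypothetical book of 100 independent loans, each with a 2% default probability:
# chance of at most two defaults.
p_at_most_two = sum(binomial_pmf(k, n=100, p=0.02) for k in range(3))

print(f"1-day 95% VaR: {var_95:,.0f}")
print(f"P(exactly 2 defaults, Poisson): {p_two_defaults:.4f}")
print(f"P(at most 2 defaults, Binomial): {p_at_most_two:.4f}")
```

The Normal assumption is the weakest link in this sketch: if returns have heavier tails than the bell curve, the VaR figure will understate the loss threshold.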

2.2. Statistical Inference for Risk Decision Making

Statistical inference methods, such as hypothesis testing and confidence intervals, enable analysts to make informed decisions based on data analysis, offering insights into market behaviors and risk factors. Hypothesis Testing allows risk managers to test assumptions about the financial market’s behavior. For instance, by using hypothesis testing, one could assess whether the introduction of a new financial regulation significantly affects the volatility of the stock market. It involves setting up a null hypothesis (no effect) against an alternative hypothesis (significant effect) and using statistical tests to determine the likelihood of observing the sample data if the null hypothesis were true [3]. Confidence Intervals provide a range of values within which the true value of a parameter (e.g., mean, variance) is expected to fall with a certain level of confidence. In the context of financial risk management, confidence intervals are used to estimate parameters such as the expected return on an investment or the potential loss in a portfolio, taking into account the uncertainty inherent in these estimates. This aids in making more robust risk assessments and investment decisions.
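The two inference tools described above can be sketched in a few lines of Python. The example below builds a confidence interval for a mean return and runs a two-sided test of the null hypothesis that the mean is zero. It is a simplified illustration under stated assumptions: the return series is made up, the function names are hypothetical, and a Normal approximation is used for the test statistic, whereas a Student t distribution would be more appropriate for a sample this small.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for the population mean."""
    standard_error = stdev(sample) / sqrt(len(sample))
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    centre = mean(sample)
    return centre - z * standard_error, centre + z * standard_error


def z_test_mean(sample, mu0=0.0):
    """Two-sided z-test of H0: population mean equals mu0."""
    standard_error = stdev(sample) / sqrt(len(sample))
    z = (mean(sample) - mu0) / standard_error
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical periodic returns, for illustration only.
returns = [0.012, -0.004, 0.007, 0.003, -0.010, 0.015, 0.001, -0.002, 0.009, 0.005]

low, high = mean_confidence_interval(returns)
z_stat, p_value = z_test_mean(returns)

print(f"95% interval for the mean return: [{low:.4f}, {high:.4f}]")
print(f"z = {z_stat:.2f}, p-value = {p_value:.3f}")
```

A small p-value would count as evidence against the null hypothesis of zero mean return; the same pattern applies to other parameters, such as a change in volatility, with a test statistic suited to that parameter.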

2.3. Algorithms and Computational Techniques

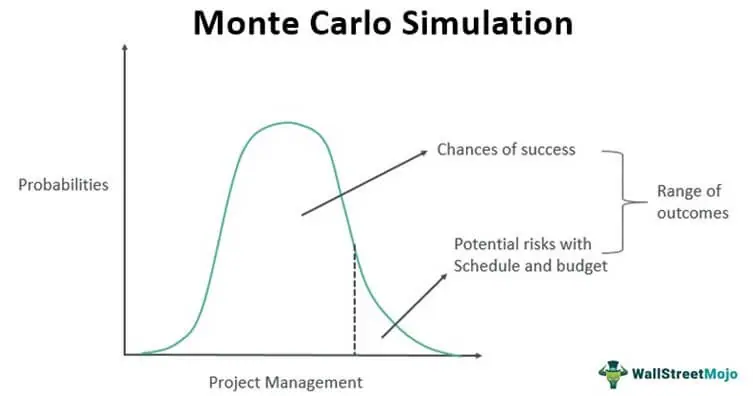

Advanced algorithms and computational techniques, including Monte Carlo simulations and machine learning models, enhance the precision of risk assessment models and support more informed decision-making processes. Monte Carlo Simulations use randomness to solve problems that might be deterministic in principle. They are particularly useful in financial risk management for modeling the probability of different outcomes in processes that are not easily predictable due to the intervention of random variables. For example, Monte Carlo simulations can estimate the risk of complex derivatives or portfolios by simulating thousands of possible price paths for the underlying assets and calculating the distribution of returns, as shown in Figure 1. Machine Learning Models, a subset of artificial intelligence, involve training algorithms to identify patterns and make decisions with minimal human intervention. In risk management, machine learning models are employed to predict credit defaults by analyzing vast datasets containing borrowers’ historical data. These models can capture complex nonlinear relationships between various risk factors and outcomes, offering a nuanced understanding of risk that traditional models might miss [4]. Furthermore, machine learning algorithms can enhance market risk management by analyzing market conditions and predicting asset price movements, thereby facilitating more dynamic hedging strategies. By integrating these sophisticated mathematical and computational techniques, financial institutions can better understand and manage the myriad risks they face in an increasingly complex and interconnected global market.

Figure 1. Monte Carlo Simulation (Source: WallStreetMojo.com)
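The path-simulation idea illustrated in Figure 1 can be sketched as follows. The example assumes that the underlying asset follows geometric Brownian motion, which is a common modeling choice but an assumption of this sketch rather than something specified in the paper; the function name and all parameter values (initial price, drift, volatility, number of paths) are likewise hypothetical.

```python
import math
import random


def simulate_terminal_prices(s0, mu, sigma, horizon, steps, n_paths, seed=0):
    """Simulate end-of-horizon prices under geometric Brownian motion."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    dt = horizon / steps
    drift = (mu - 0.5 * sigma**2) * dt
    vol = sigma * math.sqrt(dt)
    finals = []
    for _ in range(n_paths):
        log_price = math.log(s0)
        for _ in range(steps):
            log_price += drift + vol * rng.gauss(0.0, 1.0)
        finals.append(math.exp(log_price))
    return finals


# Hypothetical asset: price 100, 5% annual drift, 20% annual volatility, weekly steps.
prices = simulate_terminal_prices(
    s0=100.0, mu=0.05, sigma=0.2, horizon=1.0, steps=52, n_paths=5000
)

# Distribution of simulated one-year returns, sorted from worst to best.
sim_returns = sorted(p / 100.0 - 1.0 for p in prices)
mean_return = sum(sim_returns) / len(sim_returns)
loss_at_5pct = -sim_returns[int(0.05 * len(sim_returns))]

print(f"Mean simulated return: {mean_return:.3f}")
print(f"Loss at the 5% quantile: {loss_at_5pct:.3f}")
```

For a portfolio or a derivative, the same loop would be run on each underlying and the payoff evaluated path by path; correlated assets would additionally require correlated random draws.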

3. Application in Financial Risk Management

3.1. Portfolio Optimization Models

Portfolio optimization models play a crucial role in financial risk management by providing frameworks to construct investment portfolios that maximize returns while minimizing risks. Quantitative models such as the Markowitz portfolio theory and the Capital Asset Pricing Model (CAPM) are widely utilized in this domain. The Markowitz model, introduced by Harry Markowitz in 1952, revolutionized portfolio management by emphasizing the importance of diversification. Its starting point is the expected return of a portfolio, which is the weighted average of the expected returns of its assets:

\( E(R_{p})=\sum_{i=1}^{n} w_{i}\cdot E(R_{i}) \) (2)

In this formula, \( E({R_{p}}) \) represents the expected return of the portfolio, \( {w_{i}} \) the weight of asset i in the portfolio, \( E({R_{i}}) \) the expected return of asset i, and n the total number of assets in the portfolio.

The Markowitz model proposes that investors should construct portfolios that offer the highest expected return for a given level of risk, or conversely, the lowest risk for a given level of return. By mathematically formalizing the concept of diversification, the model enables investors to allocate assets optimally based on their risk preferences. Similarly, the Capital Asset Pricing Model (CAPM) provides insights into the relationship between risk and expected returns [5]. According to CAPM, the expected return on an asset is determined by its beta, which measures its sensitivity to market risk (systematic risk). Assets with higher betas are expected to yield higher returns to compensate investors for bearing additional risk. CAPM aids in estimating the expected return on individual assets and assessing their contributions to the overall risk and return profile of a portfolio. In practice, financial analysts and portfolio managers apply these models to construct diversified portfolios that balance risk and return objectives. By optimizing asset allocation based on quantitative principles, portfolio optimization models facilitate more efficient risk management strategies in investment decision-making processes.
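The portfolio quantities discussed in this section can be computed directly. The sketch below evaluates the expected return of equation (2), the portfolio variance that captures the diversification effect, and the standard CAPM relation, in which expected return equals the risk-free rate plus beta times the market risk premium. The variance and CAPM formulas are the textbook forms rather than expressions stated in this paper, and the weights, returns, covariances, and function names are hypothetical.

```python
from math import sqrt


def portfolio_expected_return(weights, expected_returns):
    """Equation (2): weighted average of the assets' expected returns."""
    return sum(w * r for w, r in zip(weights, expected_returns))


def portfolio_variance(weights, cov):
    """Portfolio variance: sum over i, j of w_i * w_j * cov[i][j]."""
    n = len(weights)
    return sum(weights[i] * weights[j] * cov[i][j] for i in range(n) for j in range(n))


def capm_expected_return(risk_free, beta, market_return):
    """Textbook CAPM: risk-free rate plus beta times the market risk premium."""
    return risk_free + beta * (market_return - risk_free)


# Hypothetical two-asset portfolio: 60% equity fund, 40% bond fund.
weights = [0.6, 0.4]
expected_returns = [0.08, 0.03]
cov = [[0.04, 0.002],   # variances on the diagonal (volatilities of 20% and 10%),
       [0.002, 0.01]]   # covariance off the diagonal

exp_ret = portfolio_expected_return(weights, expected_returns)
port_vol = sqrt(portfolio_variance(weights, cov))
weighted_avg_vol = 0.6 * 0.2 + 0.4 * 0.1
capm_ret = capm_expected_return(risk_free=0.02, beta=1.2, market_return=0.07)

print(f"Expected portfolio return: {exp_ret:.3f}")
print(f"Portfolio volatility: {port_vol:.3f} vs weighted average {weighted_avg_vol:.3f}")
print(f"CAPM expected return for beta 1.2: {capm_ret:.3f}")
```

Because the two assets are imperfectly correlated, the portfolio volatility comes out below the weighted average of the individual volatilities, which is the diversification benefit the Markowitz model formalizes.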

3.2. Credit Risk Evaluation

Credit risk evaluation is paramount in financial risk management, especially for institutions involved in lending activities. Statistical models such as the Altman Z-score and logistic regression are instrumental in assessing credit risk by quantifying default probabilities and evaluating the impact of credit risk on financial stability. The Altman Z-score, developed by Edward Altman in the 1960s, is a predictive model that measures the financial health of companies and predicts the likelihood of bankruptcy within a specified time frame. The model incorporates financial ratios such as liquidity, profitability, leverage, solvency, and activity to calculate a composite score. A lower Z-score indicates a higher probability of bankruptcy, signaling heightened credit risk. Financial institutions use the Altman Z-score to assess the creditworthiness of borrowers and monitor the health of their loan portfolios. Logistic regression is another statistical technique commonly employed in credit risk evaluation. It is a predictive modeling method used to model the probability of a binary outcome, such as default or non-default. By analyzing historical data on borrower characteristics, financial metrics, and economic indicators, logistic regression models can estimate the likelihood of default for individual borrowers or segments of a loan portfolio [6]. This enables lenders to quantify credit risk exposure and implement risk mitigation strategies such as setting appropriate interest rates, imposing collateral requirements, or diversifying loan portfolios. Overall, credit risk evaluation models provide valuable insights into the probability of default and the potential impact on financial institutions’ profitability and stability. By leveraging statistical techniques, financial institutions can make informed lending decisions, manage credit risk effectively, and safeguard against financial losses arising from defaults.
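Both credit models can be illustrated with a short Python sketch. The Z-score function uses the commonly cited coefficients and cut-offs of Altman's original 1968 model for publicly traded manufacturing firms; these values are not given in this paper and are included here as an assumption. The logistic function shows only the scoring step of a logistic regression, with made-up coefficients standing in for a fitted model. All function names and inputs are hypothetical.

```python
from math import exp


def altman_z(wc_ta, re_ta, ebit_ta, mve_tl, sales_ta):
    """Original Altman Z-score (commonly cited 1968 coefficients).

    wc_ta: working capital / total assets (liquidity)
    re_ta: retained earnings / total assets (profitability over time)
    ebit_ta: EBIT / total assets (operating profitability)
    mve_tl: market value of equity / total liabilities (leverage, solvency)
    sales_ta: sales / total assets (activity)
    """
    return 1.2 * wc_ta + 1.4 * re_ta + 3.3 * ebit_ta + 0.6 * mve_tl + 1.0 * sales_ta


def altman_zone(z):
    """Commonly cited cut-offs: below 1.81 distress, above 2.99 safe."""
    if z < 1.81:
        return "distress"
    if z <= 2.99:
        return "grey"
    return "safe"


def default_probability(intercept, coefficients, features):
    """Logistic model: P(default) = 1 / (1 + exp(-(b0 + sum of b_i * x_i)))."""
    score = intercept + sum(b * x for b, x in zip(coefficients, features))
    return 1.0 / (1.0 + exp(-score))


# Hypothetical firm ratios, for illustration only.
z = altman_z(wc_ta=0.2, re_ta=0.3, ebit_ta=0.1, mve_tl=1.0, sales_ta=1.5)
zone = altman_zone(z)

# Hypothetical fitted coefficients for (debt-to-income ratio, years of credit history).
pd_borrower = default_probability(-1.0, [3.0, -0.2], [0.45, 6.0])

print(f"Z-score: {z:.2f} ({zone})")
print(f"Estimated default probability: {pd_borrower:.3f}")
```

In practice the logistic coefficients would be estimated from historical borrower data rather than set by hand, and the resulting probabilities would feed into pricing and collateral decisions.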

3.3. Market Risk Assessment

Market risk assessment is essential for financial institutions and investors to quantify and manage exposure to fluctuations in asset prices and market conditions. Techniques such as Value at Risk (VaR) and Conditional Value at Risk (CVaR) are employed for this purpose. Value at Risk (VaR) is a widely used metric that quantifies the loss a portfolio is not expected to exceed at a specified confidence level over a given time horizon. It provides a single summary statistic that captures the downside risk of a portfolio based on historical or simulated market data. VaR enables investors to understand the potential downside exposure of their portfolios under normal market conditions and set risk limits accordingly. Conditional Value at Risk (CVaR), also known as expected shortfall, complements VaR by providing insights into the tail risk beyond the VaR threshold. CVaR represents the expected loss conditional on the portfolio experiencing a loss greater than VaR. It offers a more comprehensive measure of downside risk and provides additional information about the severity of potential losses in extreme market scenarios. By incorporating VaR and CVaR into risk management frameworks, financial institutions can identify potential vulnerabilities in their portfolios, implement appropriate risk mitigation strategies, and enhance overall risk-adjusted returns.
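The relationship between the two measures can be made concrete with a historical-simulation sketch. The function below takes a sample of returns, isolates the worst losses in the tail, and reports the tail boundary as VaR and the tail average as CVaR. Quantile conventions differ between implementations, so the tail-size rule used here is one reasonable choice rather than a standard; the return series and function name are hypothetical.

```python
def historical_var_cvar(returns, confidence=0.95):
    """Empirical VaR and CVaR, both expressed as positive loss fractions."""
    losses = sorted((-r for r in returns), reverse=True)  # largest loss first
    n_tail = max(1, int(round((1.0 - confidence) * len(losses))))
    tail = losses[:n_tail]
    var = tail[-1]                 # smallest loss inside the tail
    cvar = sum(tail) / len(tail)   # average loss across the tail
    return var, cvar


# Hypothetical sample of 20 periodic returns, for illustration only.
sample_returns = [
    0.010, -0.020, 0.005, 0.012, -0.050, 0.007, 0.003, -0.010, 0.020, 0.015,
    -0.030, 0.004, 0.009, -0.006, 0.011, 0.002, -0.001, 0.006, 0.013, 0.008,
]

var_90, cvar_90 = historical_var_cvar(sample_returns, confidence=0.90)
print(f"90% VaR: {var_90:.3f}, 90% CVaR: {cvar_90:.3f}")
```

With this sample the tail holds the two worst losses, 5% and 3%, so VaR is 3% and CVaR is their average of 4%. CVaR is never smaller than VaR at the same confidence level, which is why it is the more conservative of the two.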

4. Quantitative Analysis and Modeling Challenges

4.1. Model Risk and Assumptions

Model risk and assumptions pose significant challenges in financial risk management, as they introduce uncertainty and potential errors into decision-making processes. This section delves into the inherent risks associated with model assumptions and emphasizes the critical need for rigorous validation and backtesting procedures to ensure the reliability and accuracy of models. Model assumptions serve as the foundation upon which risk assessment models are built. However, these assumptions may not always align perfectly with the real-world dynamics of financial markets, leading to discrepancies between predicted and actual outcomes. For example, assumptions regarding asset price movements, correlations between different financial instruments, or the behavior of market participants may not accurately reflect the complexities of market behavior. Furthermore, model risk arises from the possibility of model error, which can result from various factors such as oversimplification of underlying processes, data limitations, or unforeseen market events. For instance, a model calibrated based on historical data may fail to capture the impact of unprecedented events such as financial crises or geopolitical shocks, as shown in Table 1 [7]. To mitigate model risk and ensure the robustness of risk assessment frameworks, rigorous validation and backtesting procedures are essential. Validation involves assessing the accuracy and reliability of models against historical data or alternative methodologies. Backtesting, on the other hand, involves testing the performance of models using out-of-sample data to evaluate their predictive power and consistency over time. Additionally, sensitivity analysis techniques can be employed to assess the impact of variations in model assumptions on risk metrics and outcomes. 
By identifying and quantifying sources of model risk, financial institutions can enhance their understanding of potential vulnerabilities and make more informed decisions in managing and mitigating risks.

Table 1. Effects of Unprecedented Events on Financial Model Calibration

| Year | Asset Price Movement (%) | Correlation Between Assets | Market Participant Behavior |
|------|--------------------------|----------------------------|-----------------------------|
| 2015 | 10 | 0.8 | Rational |
| 2016 | 8 | 0.7 | Rational |
| 2017 | 12 | 0.6 | Rational |
| 2018 | -20 | 0.5 | Panic Selling |
| 2019 | 15 | 0.4 | Rational |
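The backtesting procedure described in Section 4.1 can be sketched for a VaR model as follows. The idea is to count the days on which the realized loss exceeded the forecast VaR and to ask how likely such a count would be if the model were correctly calibrated, treating exceptions as independent Binomial trials. The loss series, the constant VaR forecast, and the function names are all hypothetical; the independence assumption is a simplification.

```python
from math import comb


def count_exceptions(realized_losses, var_forecasts):
    """Number of periods in which the realized loss exceeded the VaR forecast."""
    return sum(1 for loss, var in zip(realized_losses, var_forecasts) if loss > var)


def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, j) * p**j * (1.0 - p) ** (n - j) for j in range(k, n + 1))


# Hypothetical out-of-sample year: 250 trading days, constant 99% VaR forecast of 2%.
n_days = 250
var_forecasts = [0.02] * n_days
realized_losses = [0.01] * n_days
for day in (12, 57, 101, 160, 203, 244):  # six made-up days with a 3% loss
    realized_losses[day] = 0.03

exceptions = count_exceptions(realized_losses, var_forecasts)
expected = n_days * 0.01
p_value = prob_at_least(exceptions, n_days, 0.01)

print(f"Exceptions: {exceptions} (expected about {expected:.1f})")
print(f"P(at least {exceptions} exceptions if the model is correct): {p_value:.3f}")
```

A low probability suggests that the model understates risk, as would happen if it had been calibrated on calm years such as 2015 to 2017 in Table 1 and then confronted with a year like 2018.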

4.2. Regulatory and Ethical Considerations

The regulatory landscape governing financial risk management is characterized by a complex web of regulations and standards aimed at safeguarding financial stability and protecting investors. This section examines the implications of regulatory requirements on risk management practices, with a focus on the ethical considerations surrounding the use of algorithms and data analytics. Financial institutions are subject to stringent regulatory requirements governing the use of risk management techniques and the disclosure of risk-related information. Compliance with regulatory standards such as Basel III, Solvency II, or the Dodd-Frank Act requires institutions to adopt robust risk management frameworks and adhere to specified capital adequacy and reporting requirements. Failure to comply with these regulations can result in severe penalties and reputational damage. In addition to regulatory requirements, ethical considerations surrounding the use of algorithms and data analytics in risk management are of paramount importance. The use of algorithmic trading strategies and machine learning models raises concerns regarding transparency, fairness, and accountability. For example, trading algorithms may exacerbate market volatility or lead to unintended consequences such as flash crashes if not properly calibrated or monitored. Moreover, the use of customer data in risk assessment processes raises privacy and data protection concerns. Financial institutions must ensure compliance with data privacy regulations such as the General Data Protection Regulation (GDPR) and safeguard sensitive customer information from unauthorized access or misuse. Addressing regulatory and ethical considerations requires a proactive approach that emphasizes transparency, accountability, and responsible use of data and algorithms.
This may involve implementing robust governance frameworks to oversee risk management practices, conducting regular audits and reviews to ensure compliance with regulatory requirements, and engaging with stakeholders to address ethical concerns and build trust in risk management processes. By adopting a principled approach to risk management, financial institutions can navigate the regulatory landscape effectively while upholding the highest standards of integrity and ethics.

5. Conclusion

In conclusion, this paper underscores the critical importance of quantitative analysis in financial risk management and its role in enabling financial institutions to make more informed and effective decisions in the face of uncertainty. By leveraging probabilistic models, statistical inference methods, and advanced algorithms, institutions can gain deeper insights into market dynamics, assess risk exposures more accurately, and implement robust risk management strategies. However, the challenges posed by model risk, data quality, and regulatory compliance underscore the need for continuous refinement and improvement in risk management practices. By addressing these challenges and embracing the principles of transparency, accountability, and ethical conduct, financial institutions can enhance their resilience to market volatility, safeguard against potential losses, and sustain long-term growth and stability in an ever-changing financial landscape.

References

[1]. Curti, Filippo, et al. “Cyber risk definition and classification for financial risk management.” Journal of Operational Risk 18.2 (2023).

[2]. Ihyak, Muhammad, Segaf Segaf, and Eko Suprayitno. “Risk management in Islamic financial institutions (literature review).” Enrichment: Journal of Management 13.2 (2023): 1560-1567.

[3]. Wahyuni, Sandiani Sri, et al. “Mapping Research Topics on Risk Management in Sharia and Conventional Financial Institutions: VOSviewer Bibliometric Study and Literature Review.” (2023).

[4]. Nugrahanti, Trinandari Prasetyo. “Analyzing the evolution of auditing and financial insurance: tracking developments, identifying research frontiers, and charting the future of accountability and risk management.” West Science Accounting and Finance 1.02 (2023): 59-68.

[5]. El Khatib, Mounir, Humaid Al Shehhi, and Mohammed Al Nuaimi. “How Big Data and Big Data Analytics Mediate Organizational Risk Management.” Journal of Financial Risk Management 12.1 (2023): 1-14.

[6]. Arslon o’g’li, Yuldashev Sanjarbek. “The Solution of Economic Tasks with the Help of Probability Theory.” Texas Journal of Engineering and Technology 26 (2023): 26-29.

[7]. Ross, Sheldon M., and Erol A. Peköz. A second course in probability. Cambridge University Press, 2023.

Cite this article

Liu, W. (2024). Leveraging probability and statistical algorithms for enhanced financial risk management. Theoretical and Natural Science, 38, 32-38.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.


About volume

Volume title: Proceedings of the 2nd International Conference on Mathematical Physics and Computational Simulation

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
