Volume 16 Issue 10

Published in November 2025

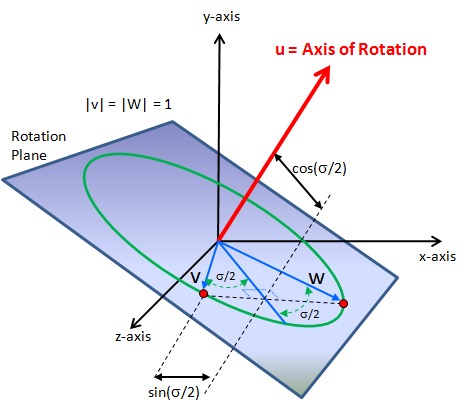

Satellite attitude dynamics are inherently nonlinear, and modelling becomes particularly challenging during large-angle manoeuvres or actuator saturation. Traditional first-principles modelling methods often require precise system parameters, and even then they may fail to capture many complex behaviours. To address this, this paper proposes a data-driven modelling method based on Koopman operator theory, which learns a global linear representation of satellite attitude dynamics from simulated trajectory data. Specifically, the model constructs a high-dimensional augmented state space from a set of observables, enabling the Koopman model to approximate the nonlinear dynamics with a linear system. This approach achieves relatively accurate prediction of angular velocity and attitude trajectories. Simulation results show that the Koopman-based model is effective and can be applied to real-time attitude prediction and satellite control.
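The lifting idea can be illustrated with a minimal sketch of extended dynamic mode decomposition on torque-free rigid-body angular velocity. The inertia matrix, the quadratic observable dictionary, the time step, and all function names below are illustrative assumptions, not the paper's actual setup, and attitude (quaternion) states are left out for brevity.

```python
import numpy as np

# Assumed principal inertia matrix (illustrative values, not from the paper).
J = np.diag([1.0, 2.0, 3.0])
J_INV = np.linalg.inv(J)

def omega_dot(w):
    """Euler's torque-free rigid-body equation: J w' = -(w x J w)."""
    return J_INV @ (-np.cross(w, J @ w))

def rk4_step(w, dt):
    """One classical Runge-Kutta step, used to simulate trajectory data."""
    k1 = omega_dot(w)
    k2 = omega_dot(w + 0.5 * dt * k1)
    k3 = omega_dot(w + 0.5 * dt * k2)
    k4 = omega_dot(w + dt * k3)
    return w + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)

def lift(w):
    """Assumed observable dictionary: the state plus all quadratic monomials."""
    x, y, z = w
    return np.array([x, y, z, x * x, y * y, z * z, x * y, y * z, x * z])

def fit_koopman(n_traj=50, n_steps=100, dt=0.01, seed=0):
    """Least-squares fit of K such that lift(w_next) ~= K @ lift(w)."""
    rng = np.random.default_rng(seed)
    X, Y = [], []
    for _ in range(n_traj):
        w = rng.uniform(-0.5, 0.5, size=3)
        for _ in range(n_steps):
            w_next = rk4_step(w, dt)
            X.append(lift(w))
            Y.append(lift(w_next))
            w = w_next
    X = np.array(X).T  # shape (9, N)
    Y = np.array(Y).T
    return Y @ np.linalg.pinv(X)

K = fit_koopman()
w0 = np.array([0.3, -0.2, 0.4])
predicted = (K @ lift(w0))[:3]   # first three lifted coordinates are w itself
reference = rk4_step(w0, 0.01)
one_step_error = np.linalg.norm(predicted - reference)
```

Because the first three observables are the angular velocity itself, a prediction is read off directly from the lifted state; multi-step prediction would repeatedly apply `K` in the lifted space.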

View pdf

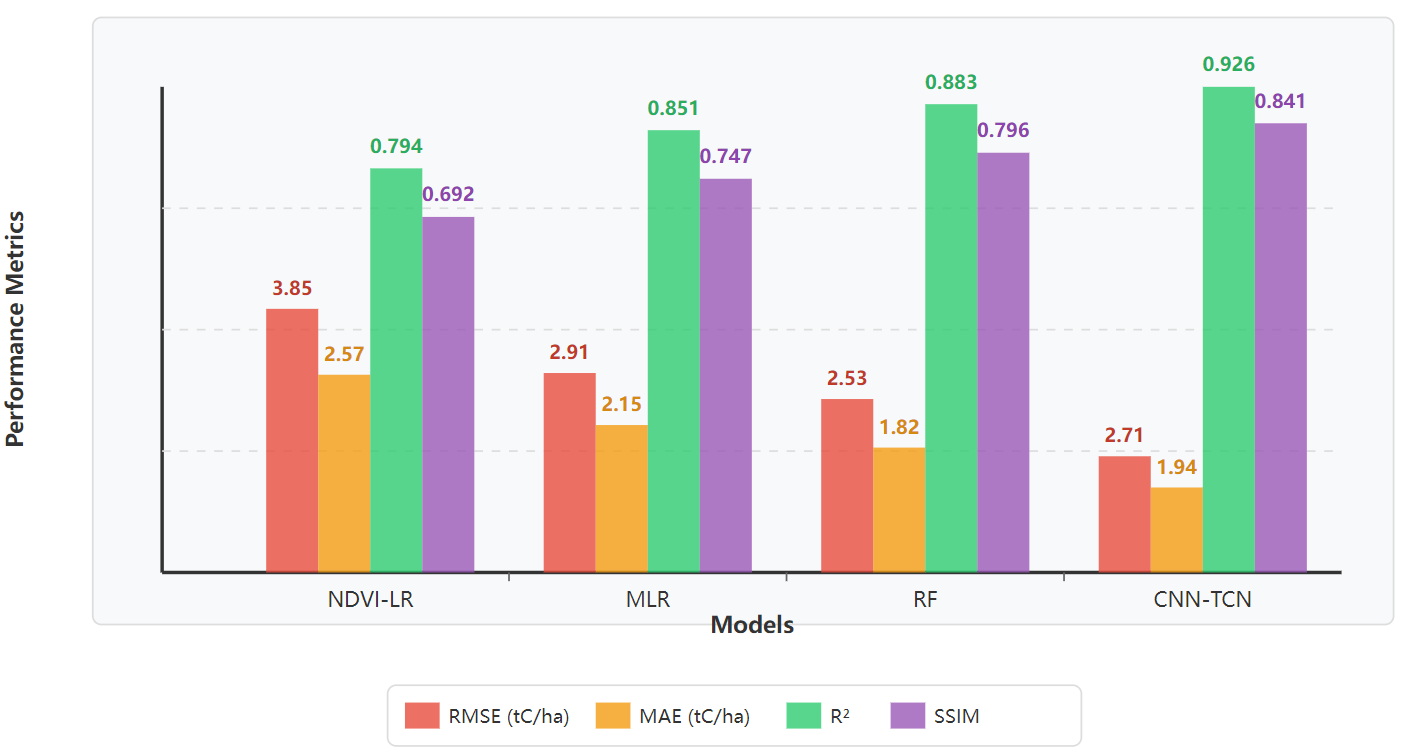

As urban carbon neutrality initiatives accelerate, green spaces in cities are playing an increasingly critical role as natural carbon sinks in mitigating greenhouse gas emissions. However, conventional carbon estimation approaches struggle with spatial fragmentation and temporal variability in urban green areas, resulting in limited accuracy and poor adaptability. To address this challenge, this study proposes a deep spatiotemporal modeling framework combining Convolutional Neural Networks (CNN) and Temporal Convolutional Networks (TCN), integrating multi-source remote sensing data from Landsat-8, Sentinel-2, and MODIS to estimate carbon storage in Guangzhou’s green spaces from 2018 to 2023. Experimental results demonstrate that the model achieves robust performance across diverse land types and seasonal conditions, with an overall RMSE of 2.71 tC/ha, R² of 0.926, and SSIM of 0.841, significantly outperforming traditional statistical and machine learning methods. The study confirms the effectiveness of deep fusion modeling in urban carbon sink estimation and offers a scalable technical pathway to support carbon asset management, green space planning, and low-carbon policy development in complex urban contexts.

View pdf

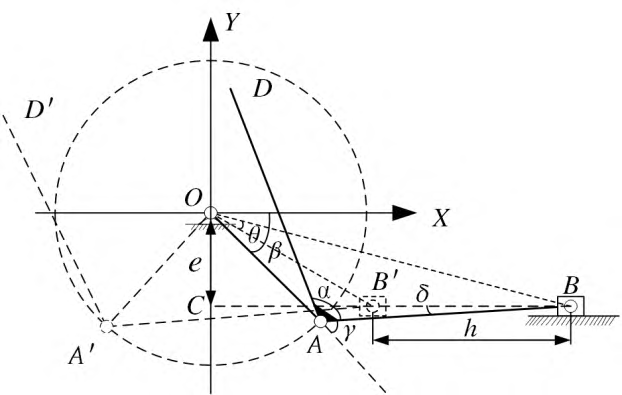

The global trend of population aging continues to intensify, leading to a growing demand for rehabilitation among the elderly. The need to address motor dysfunction resulting from disease or injury has become particularly pressing. Traditional manual rehabilitation methods are often inefficient and costly, while advanced rehabilitation robots, though offering high precision and quantifiable training, remain too expensive and structurally complex for widespread use in community and primary care settings. In response, this research focuses on the development of a rehabilitation robot based on the crank-slider mechanism. Its feasibility and kinematic properties have been verified through theoretical modeling and simulation. The design emphasizes structural simplicity, low cost, and high adaptability, making it well-suited for upper limb rehabilitation training and offering a practical reference for improving the accessibility of rehabilitation resources at the grassroots level. Future work will prioritize the optimization of control algorithms and ergonomic design to facilitate real-world application and broader dissemination of the device.
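For readers unfamiliar with the mechanism, the kinematics of an in-line (zero-offset) crank-slider can be sketched as follows. The crank radius, connecting-rod length, and function names are hypothetical values chosen for illustration; the paper's actual dimensions and any offset are not given here.

```python
import math

def slider_position(theta, r, l):
    """Slider distance from the crank pivot for crank angle theta (rad),
    crank radius r and connecting-rod length l (requires l > r)."""
    return r * math.cos(theta) + math.sqrt(l * l - (r * math.sin(theta)) ** 2)

def slider_velocity(theta, omega, r, l):
    """Slider velocity for crank angular speed omega (rad/s),
    obtained by differentiating slider_position with respect to time."""
    s, c = math.sin(theta), math.cos(theta)
    return -omega * r * s * (1.0 + r * c / math.sqrt(l * l - (r * s) ** 2))

# Hypothetical dimensions in metres.
r, l = 0.10, 0.30
stroke = slider_position(0.0, r, l) - slider_position(math.pi, r, l)
```

The stroke equals twice the crank radius, so in a design of this kind the crank length alone sets the range of linear travel available for a training motion.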

View pdf

Large Language Models (LLMs) show immense potential in Chinese digital psychological counseling services. However, their training alignment techniques, such as Reinforcement Learning from Human Feedback (RLHF), face challenges including implementation complexity, high computational cost, and training instability. These issues are particularly critical in the high-safety-requirement context of psychological counseling, where model hallucination and ethical risks urgently need to be addressed. Guided by the safety-first principle, this paper proposes the Safety-Gated Variational Alignment (VAR-Safe) method, built upon the foundation of the Variational Alignment (VAR) technique. VAR-Safe introduces a safety-gated reward transformation mechanism that converts the professional ethics and harmlessness constraints encoded in the reward model into hard penalty terms, thereby more effectively suppressing harmful or unprofessional hallucinated responses. From the perspective of variational inference, VAR-Safe transforms the complex objective of RLHF into an offline, safety-driven, re-weighted Supervised Fine-Tuning (SFT) format. This ensures that all weights during the optimization process remain positive, fundamentally enhancing the robustness and convergence stability of the alignment training. We trained a Chinese digital psychological counselor based on the Chinese SoulChat corpus. Experimental results show that while significantly improving the model's empathy and professionalism, VAR-Safe reduces the critical safety metric, the rate of professional knowledge hallucination, to a level much lower than that of the baseline models, demonstrating its superior applicability in high-safety applications.

View pdf

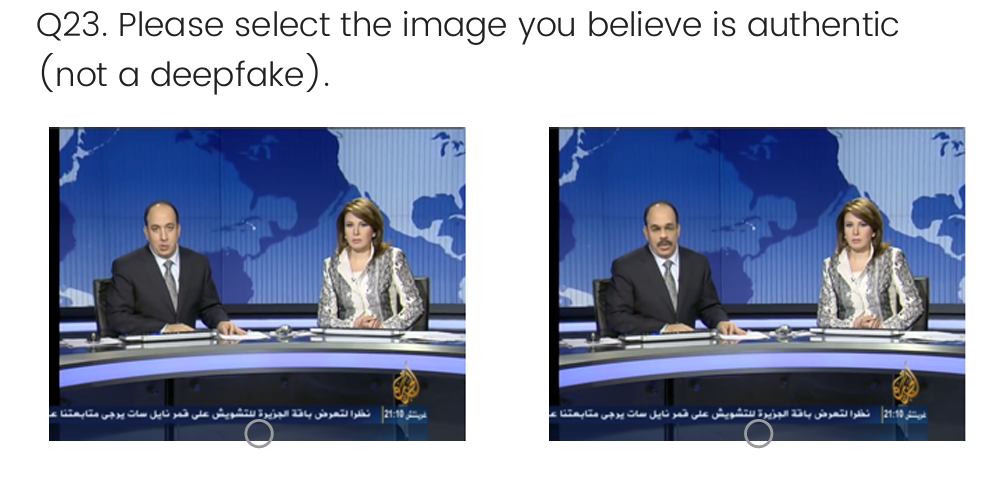

This research explores university students’ ability to identify deepfake images generated by artificial intelligence and the criminological implications of their exposure to manipulated content. Through an online questionnaire with 129 participants, the study designed deepfake identification tasks and attitude scales combining demographic, cultural, and experiential factors. The results indicate an overall deepfake identification accuracy of 55.8%, consistent with previous meta-analyses. Regression analysis showed that education level and experience with AI tools positively predicted performance, while age and gender had no significant effect. Students who had received prior deepfake training identified deepfakes significantly more accurately; the effect of cultural background did not reach statistical significance. More importantly, exposure to deepfakes reduced trust in digital images and confidence in one's own judgement. These findings indicate that despite university students’ high reliance on digital platforms, they are not naturally more resilient to deepfakes and remain susceptible to crimes such as fraud, identity theft, and forged evidence. Overall, this paper demonstrates that deepfakes present novel victimisation risks within criminology while undermining the credibility of digital evidence, highlighting the importance of advancing relevant education and prevention strategies within higher education and criminal sciences.

View pdf

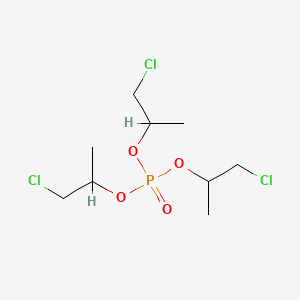

To investigate the endocrine-disrupting effects and toxicity mechanisms of organophosphate flame retardants (OPFRs), tris(1-chloro-2-propyl) phosphate (TCIPP), one of the most frequently detected OPFRs in the environment, was selected as the ligand in this study. AutoDock, a molecular docking program, was used to dock it to eight endocrine nuclear receptors and simulate the binding: estrogen receptor (ER), thyroid hormone receptor (TR), androgen receptor (AR), mineralocorticoid receptor (MR), glucocorticoid receptor (GR), retinoic acid receptor (RAR), progesterone receptor (PR), and peroxisome proliferator-activated receptor (PPAR). The results showed that TCIPP interacts with TR, ER, AR, MR, GR, RAR, PR, and PPAR mainly through hydrophobic forces and hydrogen or halogen bonds, but the optimal binding sites and binding energies differ. The lowest binding energies of TCIPP with ER, TR, AR, MR, GR, RAR, PR, and PPAR are -4.36, -4.76, -4.48, -3.06, -4.14, -3.76, -4.40, and -4.12 kcal/mol, respectively, giving the binding affinity order TR > AR > PR > ER > GR > PPAR > RAR > MR. The results indicate that the binding affinity of TCIPP to the nuclear receptors is mainly provided by hydrophobic interactions with non-polar residues of the receptor and by the formation of hydrogen or halogen bonds with key residues. Among them, TR, AR, ER, and PR have higher affinity, which may lead to more significant endocrine-disrupting effects.
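The affinity ranking follows directly from the reported energies, since a more negative binding energy means stronger predicted binding. A short sketch that reproduces the ordering from the values quoted in the abstract (the variable names are illustrative):

```python
# Lowest binding energies of TCIPP in kcal/mol, as reported in the abstract.
binding_energy = {
    "ER": -4.36,
    "TR": -4.76,
    "AR": -4.48,
    "MR": -3.06,
    "GR": -4.14,
    "RAR": -3.76,
    "PR": -4.40,
    "PPAR": -4.12,
}

# Sort from most negative (strongest predicted binding) to least negative.
ranked = sorted(binding_energy, key=binding_energy.get)
affinity_order = ">".join(ranked)
print(affinity_order)
```

The spread between the strongest and weakest receptor is 1.70 kcal/mol, and the four receptors singled out as higher affinity (TR, AR, PR, ER) all fall within 0.40 kcal/mol of each other.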

View pdf

Ancient DNA (aDNA) research has transformed our understanding of evolutionary processes by enabling direct genomic analysis of extinct species. This study explores the potential of aDNA to decipher genetic adaptations through two key approaches: the genomic evolution of woolly mammoths and the underutilized resource of plant macrofossils. Through high-throughput sequencing and comparative genomics, we identified 3,097 genes with unique derived mutations in woolly mammoths, highlighting adaptations in hair development (e.g., AHNAK2), lipid metabolism (e.g., ACADM), immunity, and thermosensation [1]. Temporal genomic comparisons revealed that while most adaptive changes originated early, recent evolution refined traits such as fur quality and body size. Additionally, we demonstrated that plant macrofossils from calcareous lake sediments preserve endogenous DNA, enabling detailed studies of past flora and their responses to climate shifts [2]. These findings underscore the value of aDNA in revealing polygenic adaptation mechanisms and provide insights for modern conservation and crop improvement strategies. This research emphasizes the interdisciplinary nature of aDNA studies and their critical role in linking past evolutionary processes to present-day biodiversity challenges.

View pdf

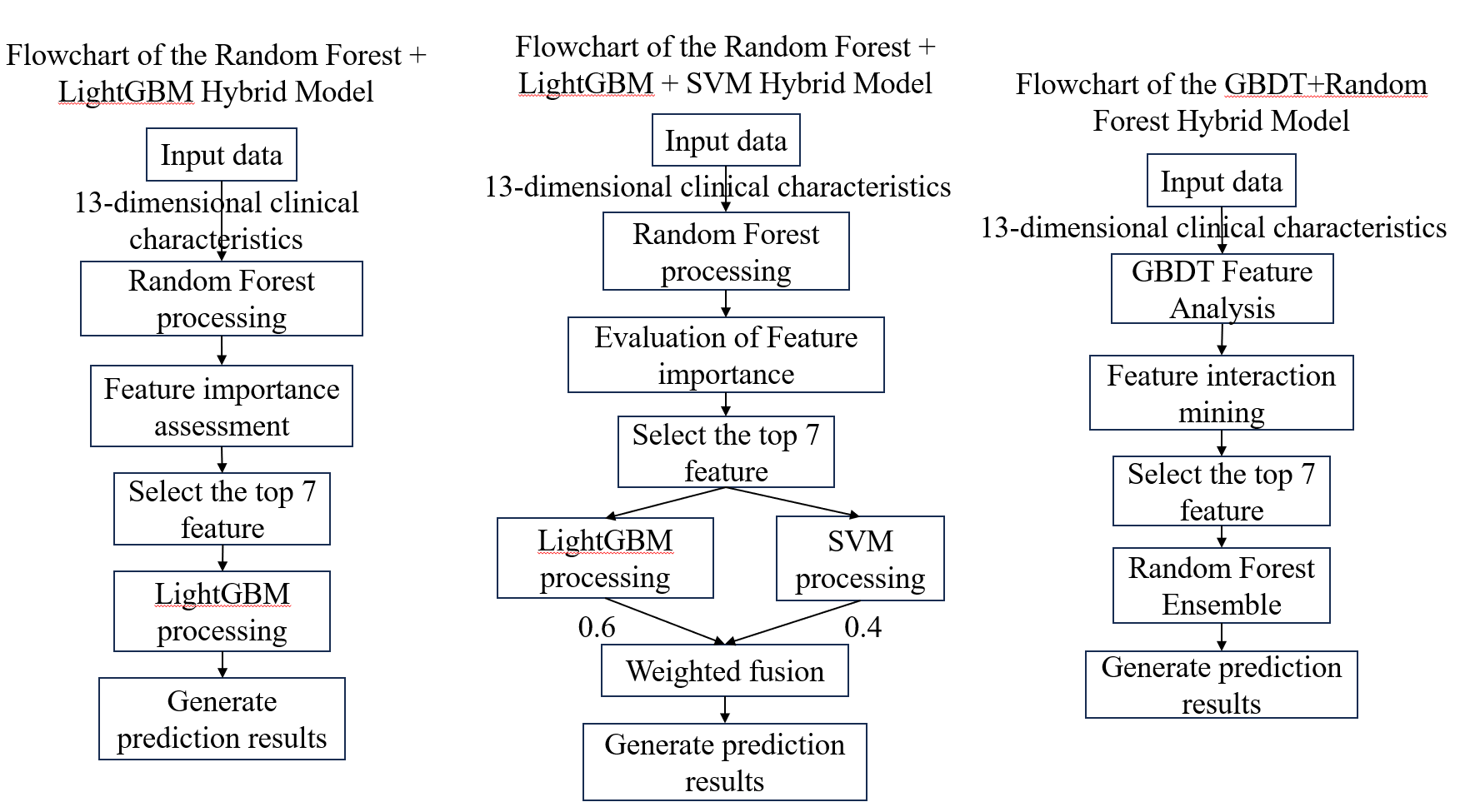

Using a clinical heart disease dataset, this study systematically assesses the generalization ability of several machine learning models. The results show that gradient boosting tree models generalize exceptionally well on this task. The LightGBM model performed best, with an accuracy of 98.54%, owing to its strong capacity to extract features from continuous physiological indicators. The XGBoost model, with an accuracy of 97.56%, showed distinct generalization advantages in recognizing particular disease characteristics. The study also found that model fusion improved decision stability by integrating complementary characteristics of the models; one example is the hybrid model that combines Random Forest and LightGBM (accuracy 98.05%). Conversely, traditional linear models such as logistic regression (accuracy 79.51%) generalized noticeably worse because of the constraints of their model assumptions. The comparison emphasizes how important it is, for complex clinical prediction tasks, to choose models with strong feature representation and nonlinear fitting capabilities. The results offer useful guidance on hybrid methods and model selection for improving the accuracy and reliability of machine learning-based heart disease diagnosis.

View pdf

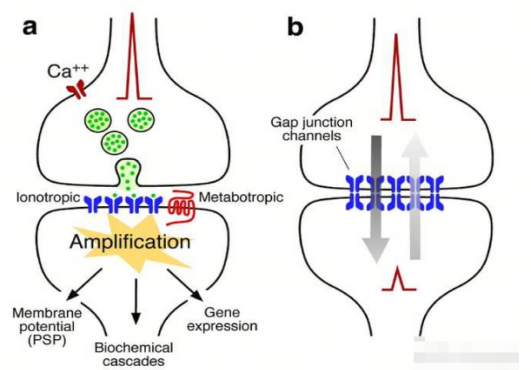

The traditional von Neumann computing architecture is facing bottlenecks such as high power consumption and weak parallel processing capabilities, making it difficult to meet the demands of efficient computing for artificial intelligence and complex information processing. Neuromorphic devices that imitate the structure and function of biological neural systems have become a breakthrough direction. Semiconductor nanomaterials, with their quantum size effect, high specific surface area, and excellent electrical tunability, provide core support for building more efficient and smaller brain-like devices. This paper systematically expounds the characteristics of typical semiconductor nanomaterials such as quantum dots, nanowires, and two-dimensional semiconductors, and deeply analyzes their mechanism of enhancing device performance by simulating neuronal signal transmission and synaptic plasticity (such as conductance regulation based on surface effects). It reveals the key value of the small size effect of materials in improving device integration and reducing energy consumption. Combined with application cases such as neuromorphic vision sensors, neuromorphic computing chips, and biomedical neural interfaces, it verifies the significant advantages of semiconductor nanomaterial-based neuromorphic devices in response speed (microsecond level), energy consumption (nanowatt level), and integration density. Research shows that semiconductor nanomaterials, through structural design and functional regulation, can break through the performance limitations of traditional devices, providing an important technical path for the development of the next generation of low-power, high-integration brain-like computing systems, and are of great significance for promoting innovation in fields such as artificial intelligence and brain-computer interfaces.

View pdf

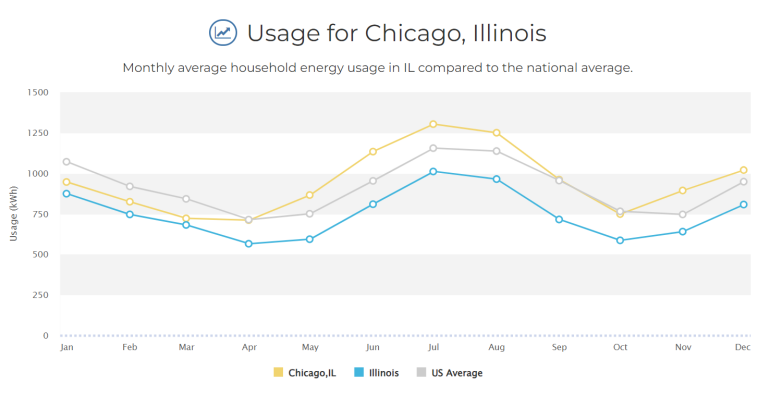

The building sector, especially residential buildings, accounts for a significant proportion of global energy consumption, so improving the energy efficiency of buildings is crucial. This research used EnergyPlus to perform simulation analysis on standard residential prototype models and adopted a multi-dimensional comparative study to evaluate the optimization effect under different cases, using Chicago as the simulation location. The research covered both active and passive design dimensions and analysed the energy consumption of heating, cooling, and the whole building. The active design involved a temperature setpoint schedule that jointly considers occupant activities, comfort, and energy-saving performance. For the passive design, the thickness and thermal conductivity of different wall layers were grouped and varied by ±30% in both directions to test the effectiveness of the optimization plan. The results indicate that, among the design factors considered, optimizing the temperature setpoint yields larger energy savings than optimizing thermal conductivity and thickness. Compared with the baseline, changing the temperature setpoint in the active design based on occupant habits reduces annual energy consumption by about 16%. In the passive design, optimizing the wall console layer has the most significant effect when thermal conductivity and thickness are varied. This conclusion can help architects develop the most appropriate and effective solutions when designing and optimizing buildings to achieve energy sustainability goals.

View pdf
