Volume 203

Published on November 2025

Volume title: Proceedings of CONF-SPML 2026 Symposium: The 2nd Neural Computing and Applications Workshop 2025

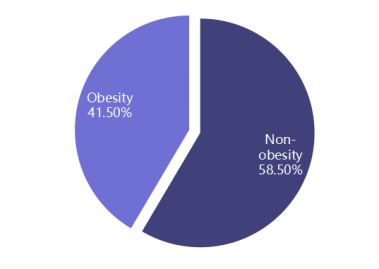

Although the relationship between socioeconomic status (SES) and obesity is well established, its multidimensional nature and racial heterogeneity remain underexplored and inadequately quantified. This study investigates the race-specific gradients of obesity across three SES dimensions (income, education, and occupation) using data from 62,350 adults in the National Health and Nutrition Examination Survey (NHANES) 2021-2023. Survey-weighted logistic regression with race-SES interaction terms was employed to model obesity risk. Results indicate that education is the most robust SES predictor, with higher attainment significantly reducing the odds of obesity. Although income showed no significant overall association, analysis of predicted marginal means revealed profound racial disparities: Non-Hispanic Black adults exhibited the highest obesity prevalence at low income levels, and these disparities persisted even at high income, indicating diminishing returns. Employment status exerted race-specific effects, with unemployment drastically elevating obesity probability among Non-Hispanic Black adults. These findings underscore the need for multidimensional, race-conscious public health interventions to effectively address obesity disparities.
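
For readers unfamiliar with interaction terms, the model class named in this abstract can be written out in generic form. The notation below is an assumption introduced for illustration and is not taken from the paper: $R_{ir}$ and $S_{is}$ are indicator variables for the race group and SES level of respondent $i$, $\mathbf{x}_i$ collects any other covariates, and $w_i$ is the survey weight.

```latex
% Generic logistic model with race-by-SES interaction (illustrative notation)
\operatorname{logit}\,\Pr(Y_i = 1)
  = \beta_0
  + \sum_{r} \beta_r R_{ir}
  + \sum_{s} \gamma_s S_{is}
  + \sum_{r,s} \delta_{rs} R_{ir} S_{is}
  + \mathbf{x}_i^{\top} \boldsymbol{\theta}

% Survey-weighted estimation maximizes a weighted log-likelihood
\hat{\boldsymbol{\beta}}
  = \arg\max_{\boldsymbol{\beta}} \sum_{i} w_i
    \left[ Y_i \log p_i + (1 - Y_i) \log (1 - p_i) \right],
  \qquad p_i = \Pr(Y_i = 1)
```

In this form, a nonzero $\delta_{rs}$ means the association between SES level $s$ and obesity differs for race group $r$ relative to the reference group, which is what a race-specific gradient refers to.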

View pdf

The emergence of Large Language Model (LLM)-based agents marks a significant step towards more capable Artificial Intelligence. However, the effectiveness of these agents is fundamentally constrained by the static nature of their internal knowledge. Tool use has become a critical paradigm to overcome these limitations, enabling agents to interact with dynamic data, execute complex computations, and act upon the world. This paper provides a comprehensive survey of the methods, challenges, and future directions in empowering LLM-based agents with tool-use capabilities. Through a systematic literature review, we synthesized the current state of the art, charting the evolution from foundational agent architectures and core invocation mechanisms like function calling to advanced strategies such as dynamic tool retrieval and autonomous tool creation. Our analysis revealed several critical challenges that impede the deployment of robust agents, including knowledge conflicts between internal priors and external evidence, significant performance degradation in long-context scenarios, non-monotonic scaling behaviors in compound systems, and novel security vulnerabilities. By mapping the current research landscape and identifying these key obstacles, this survey proposes a research agenda to guide future efforts in building more capable, secure, and reliable AI agents.
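
As a rough illustration of the function-calling mechanism mentioned in this abstract, the sketch below shows how an agent runtime might route a model-emitted call to a registered tool. Everything here is an assumption made for illustration: the `{"name": ..., "arguments": {...}}` call format, the `TOOLS` registry, and the toy tools `add` and `word_count` are hypothetical and do not come from the surveyed paper or from any specific LLM API.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool registry; names and functions are illustrative only.
TOOLS: Dict[str, Callable[..., Any]] = {}


def register_tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function under its own name so the agent can call it."""
    TOOLS[func.__name__] = func
    return func


@register_tool
def add(a: float, b: float) -> float:
    """Toy tool: return the sum of two numbers."""
    return a + b


@register_tool
def word_count(text: str) -> int:
    """Toy tool: count whitespace-separated words."""
    return len(text.split())


def dispatch(call_json: str) -> Dict[str, Any]:
    """Parse a call of the assumed form {"name": ..., "arguments": {...}} and run it.

    Errors are returned as data rather than raised, so they could be fed
    back to the model as an observation.
    """
    try:
        call = json.loads(call_json)
        name = call["name"]
        args = call.get("arguments", {})
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return {"ok": False, "error": f"malformed call: {exc}"}

    if not isinstance(name, str) or name not in TOOLS:
        return {"ok": False, "error": f"unknown tool: {name}"}

    try:
        return {"ok": True, "result": TOOLS[name](**args)}
    except Exception as exc:  # wrong argument names, tool-side failures, etc.
        return {"ok": False, "error": f"tool failed: {exc}"}
```

A call such as `dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}')` would route to the `add` tool, while an unregistered name or malformed JSON yields an error record instead of an exception.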

View pdf

Among the most significant issues facing societies are air pollution and environmental change. They impact natural ecosystems and agriculture in addition to human health. Previous studies frequently relied on data from a small number of nearby stations, which constrained the scope of the analysis. Recent investigations integrate expansive datasets derived from satellite observations, meteorological sources, and monitoring infrastructures. Furthermore, the application of big data analytics has been instrumental in diverse environmental domains, spanning ecosystem conservation, climatological research, and water quality assessment, with a specific emphasis on air quality forecasting and allied ecological inquiries documented between 2015 and 2025. The primary categories of methods are linear models, tree-based models, deep learning techniques, and more sophisticated statistical tools. Applications include monitoring biodiversity, predicting air pollution, and assessing climate risk. Deep learning is helpful when spatial and temporal patterns are complex, statistical methods help quantify uncertainty, and tree models are frequently dependable baselines. A lack of interpretability and inadequate testing techniques remain obstacles. Future research should aim to strengthen validation, provide an explanation of uncertainty, and link data-driven methodologies to accepted scientific principles.

View pdf

Virtual Reality (VR) motion sickness is a physiological discomfort that users experience when using virtual reality devices, and it is one of the main factors limiting the development and application scenarios of virtual reality technology. To enhance user experience and broaden the application prospects of VR technology, it is crucial to study the causes and preventive measures of motion sickness. This article primarily examines the causes and manifestations of VR motion sickness in light of the principles of visual generation in VR. It reviews subjective assessment indicators such as the Simulator Sickness Questionnaire (SSQ) and Vestibular Function Measurement (FMS), as well as objective indicators, and discusses methods for relieving related symptoms from multiple aspects, including technical optimization, content design, and user adaptation. Future research can further integrate physiological signal monitoring and behavioral data analysis to build a more comprehensive assessment and intervention system for motion sickness, thereby promoting the wider application of virtual reality technology in fields such as education, healthcare, and entertainment.

View pdf

As one of the core tasks in the field of computer vision, object detection has made significant progress over the last few years, driven by deep learning technology. This paper systematically reviews the development of object detection algorithms, from traditional methods to modern deep learning methods, focusing on the technical characteristics and performance of representative algorithms such as the You Only Look Once (YOLO) series, the Region-Based Convolutional Neural Networks (R-CNN) series, and the Single-Shot MultiBox Detector (SSD). Lightweight optimization strategies are discussed in depth as a way to address computing resource limitations in practical applications of object detection, including network structure simplification, computing efficiency optimization, and hardware adaptation. In addition, this paper draws on typical cases, such as a two-stage efficient parking space detection method, to describe the application status and challenges of object detection technology in cross-domain applications such as autonomous driving, intelligent transportation, and industrial testing. Finally, this paper looks ahead to the future development of object detection technology and puts forward potential research hotspots such as multimodal fusion and edge computing adaptation.

View pdf

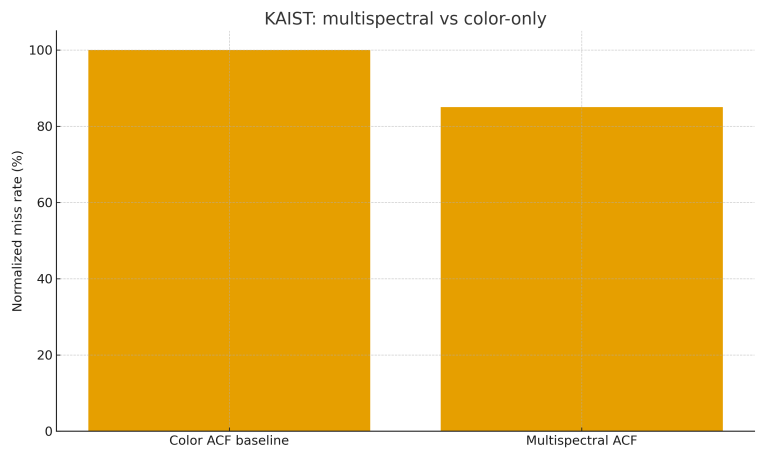

Multimodal perception enhances robustness in industrial inspection and mobile robotics by fusing complementary signals when individual modalities falter due to insufficient lighting, specular reflections, motion-induced blurring, or limited textural information. This article synthesizes evidence from peer-reviewed studies and normalizes metrics across representative datasets to characterize what RGB, depth, thermal, LiDAR, radar, and IMU achieve alone and what they achieve in combination. Using MVTec AD, KAIST, TUM VI, and nuScenes as anchors, the synthesis compares miss rate, trajectory error, 3D detection quality, and bird’s-eye-view map fidelity while considering latency, power integrity, electromagnetic compatibility, bandwidth, and maintainability. The concordant findings reveal that color-thermal integration significantly diminishes failures in pedestrian detection under low-illumination conditions, while tightly integrated visual-inertial systems curtail drift compared to purely visual odometry. Furthermore, bird’s-eye-view integration enhances 3D detection and mapping performance relative to camera-only or LiDAR-only benchmarks. The analysis also identifies system prerequisites that enable reproducible gains—precise timing, disciplined calibration, robust power and electromagnetic practice, and sufficient bandwidth—and concludes with implementation guidelines to help transfer benchmark-reported benefits to factory floors and field robots.

View pdf

Large Language Models (LLMs) produce fluent but sometimes unfounded outputs, a phenomenon commonly called hallucination. On the XiaoHongShu (RED) platform, when users input kaomoji (ASCII or Unicode emoticons), the translation tool often returns a stable, seemingly meaningful Chinese phrase even though the input lacks explicit semantic content. This paper examines why LLMs generate such fixed translations. Building on the concept of hallucination snowballing, classifications of hallucination types, and methods for reducing knowledge hallucinations, and drawing on case analysis, literature synthesis, and mechanistic review, this paper addresses three questions: (1) Why do LLMs produce consistent translations for semantically null inputs? (2) Which hallucination category best fits this case? (3) How do prompt framing, pretraining co-occurrence patterns, and autoregressive decoding contribute? This study argues that the kaomoji fixed translation is primarily a form of knowledge hallucination reinforced by prefix consistency and statistical co-occurrence. This paper concludes by recommending uncertainty-aware behaviors, prompt-level checks, and data interventions to reduce such errors.

View pdf

With the rapid development of multimodal human-computer interaction, generating high-fidelity, emotionally rich, and naturally coordinated human head animation based on multi-source inputs such as language, images, and text has become a core issue in virtual human research. This article systematically reviews representative methods in this field from the past five years and classifies them into Transformer-like sequence modeling, the gradual generation mechanism of diffusion models, the NeRF implicit three-dimensional modeling path, and other specialized architectures. By tracing the evolution of each technique and comparing performance across methods, it reveals the advantages and bottlenecks of the various approaches in semantic understanding, emotion driving, perspective consistency, and style expression. It further points out that fine-grained control of the emotion-driven mechanism, the balance between 3D modeling efficiency and authenticity, and a high degree of customization of personalized appearance and behavioral style will determine the expressive boundaries of the virtual human image. Future research needs to continue to make breakthroughs in cross-modal feature fusion, semantic consistency modeling, and long-sequence generation stability, so as to build virtual human systems with human-like interaction capabilities and provide theoretical support and technical reference for application scenarios such as digital human social interaction, education, and entertainment.

View pdf

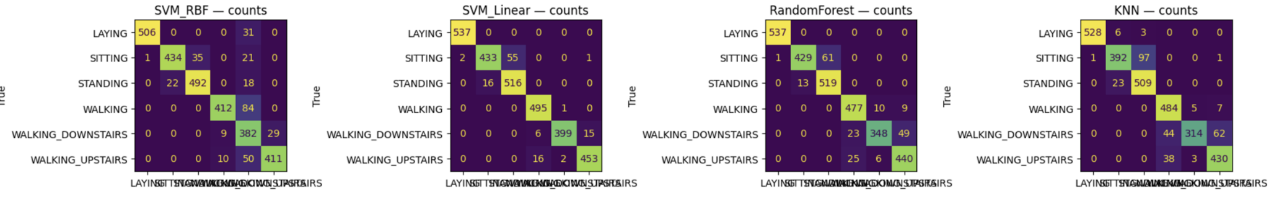

Human Activity Recognition (HAR) is vital in pattern recognition and AI, with applications in smart health and security. It faces challenges such as diverse activities and sensor noise, which make model selection critical. In this work, we compare classical and deep learning models for HAR using the UCI HAR dataset, which includes inertial sensor data from 30 volunteers performing six activities. Classical models (linear/RBF SVM, Random Forest, KNN, AdaBoost, Stacking) and deep learning models (CNN, RNN-LSTM) are evaluated on accuracy, macro-F1, and resource metrics. A leakage-free workflow is adopted: GridSearchCV (3-fold cross-validation) tunes hyperparameters, models are retrained on the full training set, and tested independently. Results show linear SVM achieves the best single-model accuracy (96.13%), while Stacking (combining linear SVM, KNN, RF) performs best overall (96.61%). CNN (92.60% accuracy) slightly outperforms RNN-LSTM (90.91%), and KNN uses the least memory. This work provides key insights for HAR model selection (linear SVM as baseline, Stacking for accuracy) and guides future work to reduce false positives, advancing HAR technology.
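
The abstract reports both accuracy and macro-F1. As a minimal sketch of how the two metrics differ, the pure-Python functions below compute them from label lists. This is not the authors' evaluation code: the function names and the toy labels in the usage note are illustrative assumptions, and the paper's pipeline, which uses GridSearchCV, presumably relies on scikit-learn's own metric functions.

```python
from typing import Hashable, Sequence


def accuracy(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Fraction of samples whose predicted label matches the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Unweighted mean of per-class F1 scores.

    Each class counts equally regardless of how many samples it has,
    which is why macro-F1 can diverge from accuracy on imbalanced data.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")

    labels = set(y_true) | set(y_pred)
    f1_scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never appears.
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)
```

For example, with made-up labels `y_true = ["walk", "walk", "sit", "sit", "stand", "stand"]` and `y_pred = ["walk", "sit", "sit", "sit", "stand", "walk"]`, the per-class F1 scores are 0.5, 0.8, and 2/3, so macro-F1 is about 0.656 while accuracy is 4/6.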

View pdf

In recent years, Brain-Computer Interface (BCI) technology has advanced rapidly, emerging as a critical bridge between the human brain and external devices with promising applications in medical rehabilitation, intelligent control, and other fields. However, the accurate parsing of neural signals and efficient prediction of user intent remain key challenges that hinder the widespread practical implementation of BCI systems. This study focuses on the deep analysis of BCI neural signals based on AI intent prediction, aiming to address two core research questions: how to enhance the accuracy of AI-based intent prediction through in-depth neural signal analysis, and which AI algorithms are most suitable for processing BCI neural signal data. First, relevant research progress was systematically summarized through a literature review. Then, the characteristics of BCI neural signals were analyzed using data analysis techniques. Finally, the effectiveness of different AI algorithms was verified via algorithm simulation. The research results demonstrate that integrating advanced AI algorithms with deep neural signal analysis technology can significantly improve the accuracy of intent prediction. This finding not only provides a new approach to overcoming the existing bottlenecks in BCI technology but also lays a theoretical and practical foundation for the further development and application of BCI systems.

View pdf
