Volume 90

Published in October 2024

Volume title: Proceedings of the 6th International Conference on Computing and Data Science

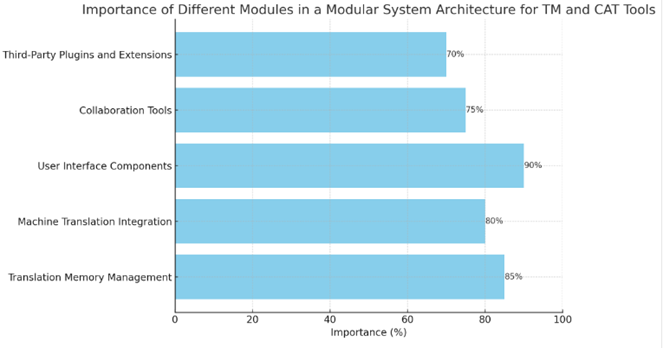

This paper explores the innovative integration of Translation Memory (TM) and Computer-Assisted Translation (CAT) tools to enhance translation efficiency, consistency, and quality in multinational organizations. By adopting a user-centric interface design, modular system architecture, and cloud-based deployment, the integrated system addresses diverse translation needs while ensuring data security and privacy. Key features include real-time translation suggestions powered by machine learning algorithms, seamless access to TM databases, and real-time collaboration tools. The paper discusses implementation strategies, challenges, and solutions, highlighting the importance of user training and continuous improvement. A case study demonstrates significant improvements in translation speed, accuracy, and user satisfaction, underscoring the potential of advanced translation technologies to transform translation workflows. The findings provide valuable insights into best practices for successful implementation and optimization of TM and CAT tools in complex, large-scale environments.
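
To make the TM lookup concrete: a translation memory stores previously translated source/target segment pairs and, for each new segment, offers the closest stored translations as "fuzzy matches". The sketch below is a minimal illustration, not the paper's system. It assumes a small in-memory list of hypothetical English-French pairs and uses Python's standard `difflib.SequenceMatcher` ratio as the similarity score, with an arbitrary 0.7 cut-off. Commercial CAT tools use their own match-scoring schemes and an indexed database rather than a linear scan.

```python
from difflib import SequenceMatcher

# Hypothetical in-memory translation memory of (source, target) segment pairs.
TM = [
    ("Click Save to store your changes.", "Cliquez sur Enregistrer pour conserver vos modifications."),
    ("Click Cancel to discard your changes.", "Cliquez sur Annuler pour abandonner vos modifications."),
    ("The file could not be opened.", "Impossible d'ouvrir le fichier."),
]

def fuzzy_matches(segment, memory, threshold=0.7):
    """Return (score, source, target) tuples for TM entries whose source text is at least
    `threshold` similar to `segment`, best match first. Scores are difflib ratios in [0, 1]."""
    results = []
    for source, target in memory:
        # Case-insensitive character-level similarity; 1.0 means an exact match.
        score = SequenceMatcher(None, segment.lower(), source.lower()).ratio()
        if score >= threshold:
            results.append((round(score, 2), source, target))
    return sorted(results, reverse=True)

# Usage: a new segment that differs from a stored one by a single word.
for score, source, target in fuzzy_matches("Click Save to keep your changes.", TM):
    print(f"{score:.2f}  {source}  ->  {target}")
```

Scanning every entry costs O(n) per query, which is fine for a demonstration but is why large TMs index their segments before scoring candidates.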

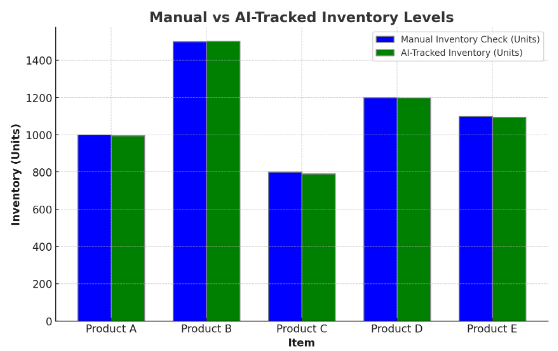

Artificial intelligence (AI) has become a transformative force in supply chain and operations management, offering significant enhancements in efficiency and resilience. This paper examines the integration of AI technologies such as machine learning, predictive analytics, and real-time data processing in demand forecasting, inventory management, logistics, and risk mitigation. By analyzing diverse data sources, AI improves demand forecasting accuracy, reduces inventory costs, optimizes logistics routes, and enhances supply chain visibility. Case studies and data-driven insights demonstrate how AI-driven systems enable companies to adapt to market dynamics, prevent disruptions, and achieve substantial cost savings. The findings suggest that embracing AI is essential for businesses aiming to optimize their supply chain operations and build robust, resilient frameworks capable of withstanding future challenges.

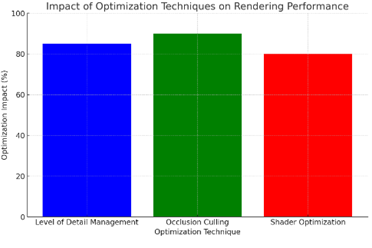

Real-time rendering is a cornerstone of modern interactive media, enabling the creation of immersive and dynamic visual experiences. This paper explores advanced techniques and high-performance computing (HPC) optimization in real-time rendering, focusing on the use of game engines like Unity and Unreal Engine. It delves into mathematical models and algorithms that enhance rendering performance and visual quality, including Level of Detail (LOD) management, occlusion culling, and shader optimization. The study also examines the impact of GPU acceleration, parallel processing, and compute shaders on rendering efficiency. Furthermore, the paper discusses the integration of ray tracing, global illumination, and temporal rendering techniques, and addresses the challenges of balancing quality and performance, particularly in virtual and augmented reality applications. The future role of artificial intelligence and machine learning in optimizing real-time rendering pipelines is also considered. By providing a comprehensive overview of current methodologies and identifying key areas for future research, this paper aims to contribute to the ongoing advancement of real-time rendering technologies.
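
Distance-based Level of Detail selection, one of the techniques named above, can be sketched as follows. This is an illustrative assumption rather than the paper's model: the thresholds are hypothetical world-unit distances, and each LOD index stands for a progressively simpler mesh. Engines such as Unity and Unreal Engine usually switch LODs on an object's projected screen size rather than raw distance, but the selection logic has the same shape.

```python
import math
from bisect import bisect_right

# Hypothetical thresholds in world units: nearer than 10 -> LOD 0 (full detail),
# 10 to 30 -> LOD 1, 30 to 80 -> LOD 2, 80 or farther -> LOD 3 (coarsest mesh).
LOD_DISTANCES = [10.0, 30.0, 80.0]

def select_lod(camera_pos, object_pos, thresholds=LOD_DISTANCES):
    """Return the LOD index for an object given camera and object positions."""
    distance = math.dist(camera_pos, object_pos)  # Euclidean distance (Python 3.8+)
    # bisect_right counts the thresholds that are <= distance, which is the LOD index.
    return bisect_right(thresholds, distance)

# Usage: an object 25 units away is drawn with the LOD 1 mesh.
print(select_lod((0.0, 0.0, 0.0), (0.0, 0.0, 25.0)))
```

A common refinement is hysteresis, using slightly different thresholds for switching to a coarser and to a finer level, so that objects sitting near a boundary do not flicker between meshes.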

As human-computer interaction becomes increasingly frequent, the ability of intelligent systems to recognize and respond accurately to human emotions has become key to improving the user experience. This paper explores the application of synchronized analysis of physiological signals and facial expressions to emotion recognition. Using a literature-review methodology, it summarizes techniques for acquiring physiological signals and facial expressions, methods for analyzing them synchronously, and cases in which they have been applied to emotion recognition, and it discusses the limitations and challenges of existing technology. The review shows that synchronized analysis of physiological signals and facial expressions has significant application value for emotion recognition, especially in mental health monitoring, educational feedback, and customer service automation. However, practical deployment still faces many challenges, including real-time data collection, adaptation to individual differences, and other recognition problems. Future research therefore needs to further optimize data acquisition technology and develop more accurate, personalized analysis algorithms.
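
Synchronous analysis presupposes that the two streams are aligned in time. Physiological sensors and video cameras usually sample at different rates, so one common first step is to resample the physiological signal at each video frame's timestamp. The sketch below assumes both streams already share a clock (real setups may also need offset correction) and uses plain linear interpolation; the 4 Hz signal and the frame times in the usage lines are hypothetical.

```python
from bisect import bisect_left

def interpolate_at(times, values, t):
    """Linearly interpolate a sampled signal at time t (seconds).
    `times` must be sorted ascending; t outside the sampled range is clamped to the ends."""
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    i = bisect_left(times, t)  # guarantees times[i - 1] < t <= times[i]
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

def align_to_frames(signal_times, signal_values, frame_times):
    """Resample a physiological signal onto video-frame timestamps so that each
    facial-expression frame can be paired with a synchronous signal value."""
    return [interpolate_at(signal_times, signal_values, t) for t in frame_times]

# Hypothetical example: a signal sampled at 4 Hz and five video-frame timestamps.
signal_times = [0.0, 0.25, 0.5, 0.75, 1.0]
signal_values = [1.0, 2.0, 3.0, 4.0, 5.0]
frame_times = [0.0, 0.1, 0.5, 0.9, 1.2]
print(align_to_frames(signal_times, signal_values, frame_times))
```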

This paper introduces the applications of artificial intelligence in architecture and construction cost management, along with their future prospects and development trends. The study uses a quantitative, survey-based research design, with data collected through literature analysis. The discussion can be extended to innovative applications of artificial intelligence in architectural design, such as spatial planning optimization based on big data analysis and experiential simulation through virtual reality, and to the role of artificial intelligence in automated construction process management and material selection optimization, illustrated in detail with specific cases. When considering future prospects and trends, the paper can also address how the continued evolution of artificial intelligence may affect the construction industry, for example through the wider use of machine learning algorithms in project management and the maturing of intelligent sensor systems for building equipment monitoring. Finally, it can analyze the far-reaching impact of artificial intelligence on the construction industry from economic, social, and environmental perspectives and propose corresponding solutions.

As science and technology advance, robots are increasingly used in all walks of life, creating a growing need for robots to measure distances accurately in many aspects of life and work. Through a review of existing literature, this paper explores the principles, applications, and methods of camera-based robot ranging. The review shows that each ranging principle and method has its own advantages and disadvantages, and that their applicable scenarios differ considerably. This article aims to support the optimization of robot ranging technology and to provide insights for its application in a wider range of scenarios.
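
Two common camera-based ranging principles can be written in a few lines. The sketch below is illustrative and not drawn from the paper: stereo ranging triangulates depth from the disparity between a rectified image pair, Z = f·B/d, while monocular ranging with an object of known size uses similar triangles, Z = f·H/h. Function names, parameter names, and the numbers in the usage lines are hypothetical; real systems also need calibrated camera intrinsics and, for stereo, image rectification and a disparity-matching step not shown here.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth (m) of a point seen by a rectified stereo pair: Z = f * B / d.
    focal_px: focal length in pixels; baseline_m: distance between the two camera
    centres in metres; disparity_px: horizontal shift of the point between the images."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero corresponds to infinite depth")
    return focal_px * baseline_m / disparity_px

def monocular_depth(focal_px, real_height_m, image_height_px):
    """Depth (m) from a single pinhole camera and an object of known size: Z = f * H / h."""
    if image_height_px <= 0:
        raise ValueError("image height must be positive")
    return focal_px * real_height_m / image_height_px

print(stereo_depth(700, 0.12, 42))     # about 2.0 m
print(monocular_depth(800, 1.7, 340))  # about 4.0 m
```

Because Z is inversely proportional to d, a fixed one-pixel disparity error produces a depth error of roughly Z²/(f·B), which grows quickly with distance; this is one reason stereo ranging suits short to medium ranges, while the monocular method depends entirely on knowing the object's true size.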

As the gap between rich and poor in China has become increasingly prominent, a variety of charity platforms have emerged. However, because of information asymmetry, many recipients do not receive effective donations, and fraudulent donation schemes prevent donors from fulfilling their intentions. We designed several improvements to the deferred acceptance (DA) algorithm and applied them flexibly to a donation platform to improve the efficiency of matching donors with recipients. The DA variant runs multiple rounds of matching, allows donors and recipients to set their matching priority lists based on various factors, and uses a matching mechanism intended to achieve Pareto-efficient, strategy-proof, and stable matching. Applying this DA variant to the donation problem can therefore better match needs, enabling the platform to complete donation matching more reasonably and efficiently.
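
For reference, the standard DA algorithm (deferred acceptance, due to Gale and Shapley) that this work builds on can be sketched as follows. This is the textbook donor-proposing version, extended only with optional recipient capacities; it does not reproduce the paper's multi-round variant, whose details are not given here. Function names, donors, recipients, and preferences are hypothetical.

```python
from collections import deque

def deferred_acceptance(donor_prefs, recipient_prefs, capacity=None):
    """Donor-proposing deferred acceptance (Gale-Shapley).

    donor_prefs:     donor -> list of recipients, most preferred first
    recipient_prefs: recipient -> list of acceptable donors, most preferred first
    capacity:        recipient -> max donors it can hold (default 1)
    Returns recipient -> list of matched donors (best first); unmatched donors are omitted.
    """
    capacity = capacity or {}
    # rank[r][d] = position of donor d in recipient r's list (lower is better).
    rank = {r: {d: i for i, d in enumerate(prefs)} for r, prefs in recipient_prefs.items()}
    next_choice = {d: 0 for d in donor_prefs}  # next list position each donor proposes to
    held = {r: [] for r in recipient_prefs}     # tentative ("deferred") acceptances
    free = deque(donor_prefs)                   # donors not currently held by anyone

    while free:
        donor = free.popleft()
        prefs = donor_prefs[donor]
        if next_choice[donor] >= len(prefs):
            continue                            # list exhausted: donor stays unmatched
        recipient = prefs[next_choice[donor]]
        next_choice[donor] += 1
        if donor not in rank.get(recipient, {}):
            free.append(donor)                  # recipient finds this donor unacceptable
            continue
        held[recipient].append(donor)
        held[recipient].sort(key=rank[recipient].get)
        if len(held[recipient]) > capacity.get(recipient, 1):
            free.append(held[recipient].pop())  # reject the least preferred held donor
    return held

# Hypothetical preferences for three donors and two recipients.
donors = {"d1": ["r1", "r2"], "d2": ["r1", "r2"], "d3": ["r2", "r1"]}
recipients = {"r1": ["d2", "d1", "d3"], "r2": ["d1", "d3", "d2"]}
print(deferred_acceptance(donors, recipients))
```

Each donor proposes down its list; a recipient holds its best proposals so far and rejects the rest, and no acceptance becomes final until no donor is left to propose. The outcome is stable for the stated preferences (no donor and recipient both prefer each other to their assignment), and truthful reporting is a dominant strategy for the proposing side.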

Air travel is now a popular choice for people who travel, and it greatly promotes communication and economic development. However, flight delays have serious negative effects. Passengers face long waits, additional expenses, and greater psychological stress, while airlines suffer economic losses, reduced customer satisfaction, and disruptions to flight schedules that further reduce operational efficiency. This paper therefore explores methods based on the Internet of Things (IoT) for improving the punctuality of air transportation, with a focus on alleviating flight delays caused by bad weather. Drawing on previous related work, the author proposes that an IoT-based meteorological monitoring and early warning system can be built to improve real-time weather monitoring, flight scheduling, and ground service optimization. By optimizing resource utilization and cost management, such a system could improve the punctuality of air transportation, bringing significant economic benefits to airlines and better travel experiences to passengers.

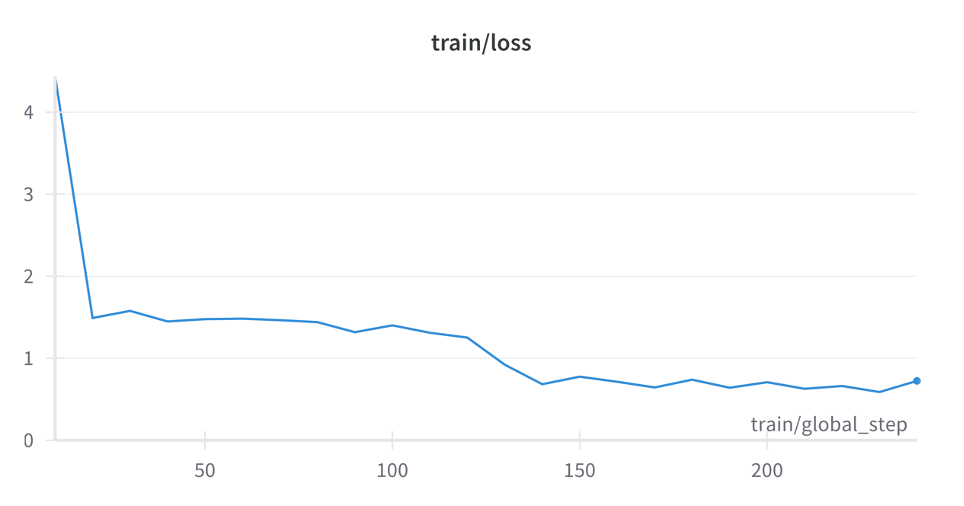

This paper presents the development and evaluation of “Cura-LLaMA,” an open-source large language model (LLM) tailored for the medical domain. Based on the TinyLlama model, Cura-LLaMA was fine-tuned using the PubMedQA dataset to enhance its ability to address complex medical queries. The model’s performance was compared with the original TinyLlama, focusing on its accuracy and relevance in medical question-answering tasks. Despite improvements, the study highlights challenges in using keyword detection methods for evaluation and the limitations of omitting non-essential columns during fine-tuning. The findings underscore the potential of fine-tuning open-source models for specialized applications, particularly in resource-limited settings, while pointing to the need for more sophisticated evaluation metrics and comprehensive datasets to further enhance accuracy and
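
The keyword-detection evaluation mentioned above, and its weakness, can be illustrated with a small sketch. It assumes PubMedQA-style gold labels of yes, no, or maybe and a hypothetical rule that takes the first of those words appearing in the model's free-text answer; the paper's exact detection rule is not given here. The second usage line shows a typical failure: an earlier negation is picked up as the answer.

```python
import re

# Whole-word search for the three PubMedQA answer labels.
LABEL_PATTERN = re.compile(r"\b(yes|no|maybe)\b")

def detect_label(generated):
    """Return the first of 'yes', 'no', 'maybe' found as a whole word in the model's
    answer (case-insensitive), or None if none appears. Hypothetical heuristic."""
    match = LABEL_PATTERN.search(generated.lower())
    return match.group(1) if match else None

def keyword_accuracy(outputs, gold_labels):
    """Fraction of model outputs whose detected label equals the gold label."""
    hits = sum(detect_label(out) == gold for out, gold in zip(outputs, gold_labels))
    return hits / len(gold_labels)

print(detect_label("Yes, the trial supports this."))             # yes
print(detect_label("There is no evidence against it, so yes."))  # no (misread)
```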

High-frequency trading (HFT) has transformed financial markets by enabling rapid trade execution and the exploitation of minute market inefficiencies. This study explores the application of machine learning (ML) techniques to predictive modeling in HFT. Four ensemble boosting methods were evaluated on order book data from Euronext Paris: Adaptive Boosting, Logit Boosting, Robust Boosting, and Random Under-Sampling (RUS) Boosting. The models were trained and validated on data from a single trading day, and their performance was assessed using precision, recall, ROC curves, and feature importance analysis. Robust Boosting achieved the highest precision (90%), while Adaptive Boosting and RUS Boosting achieved higher recall (94% and 93%, respectively). This research highlights the potential of ML to enhance HFT strategies, with implications for the development of future trading systems.
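
Precision and recall, the two metrics behind the trade-off reported above, can be computed directly from true and predicted labels. The sketch is generic and assumes a binary task in which 1 marks the positive class (for example a hypothetical "price moves up" label); it is not the study's evaluation code, and the labels are invented for illustration.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN). Returns 0.0 when undefined."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical labels: 1 = "price moves up" within the prediction horizon, 0 = otherwise.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 1, 1, 1, 0]
print(precision_recall(y_true, y_pred))  # (0.6, 0.75)
```

A high-precision model raises fewer false signals, whereas a high-recall model misses fewer genuine ones; which matters more depends on the cost of each kind of error in the trading strategy.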
