Volume 145

Published in April 2025

Volume title: Proceedings of the 3rd International Conference on Software Engineering and Machine Learning

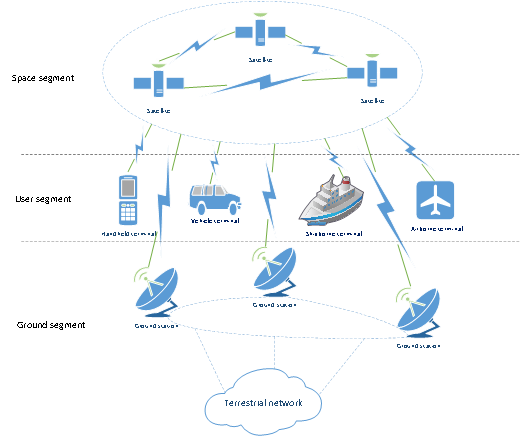

Low Earth Orbit (LEO) satellite communication systems have emerged as a critical solution to the growing demand for global, seamless, and low-latency connectivity. Compared with Geosynchronous Earth Orbit (GEO) satellites, LEO satellites offer reduced signal delay and broad coverage, which makes them particularly suitable for remote and underserved regions. This paper provides a comprehensive review of LEO satellite communication systems, focusing on three major aspects: frequency band division, system architecture, and case analysis. The study explores the use of the L, S, C, X, Ku, Ka, Q, and V bands, highlighting their characteristics and applications. The system architecture, comprising the space, user, and ground segments, is analyzed in detail. The paper also examines the Starlink constellation as a representative example and discusses its architecture, performance, and coverage capabilities. However, LEO satellite communication systems face significant challenges, such as limited resources, high costs, and problems caused by the high orbital speed of the satellites. At the same time, advances in artificial intelligence, ultra-narrow beamforming, and 5G and 6G networks present promising opportunities for future development.
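
The latency advantage of LEO over GEO follows directly from orbital altitude. The short Python sketch below computes lower-bound propagation delays. It assumes the satellite is directly overhead, the signal travels in free space at the speed of light, and there is no processing or queuing delay. The 550 km altitude for a Starlink-like shell is an illustrative assumption, not a value taken from the paper.

```python
# Speed of light in vacuum (km/s)
C_KM_PER_S = 299_792.458


def one_way_delay_ms(altitude_km: float) -> float:
    """Lower-bound one-way propagation delay (ms) to a satellite directly overhead."""
    return altitude_km / C_KM_PER_S * 1000.0


def bent_pipe_rtt_ms(altitude_km: float) -> float:
    """Lower-bound round trip user -> satellite -> ground station and back (4 space-link hops)."""
    return 4 * one_way_delay_ms(altitude_km)


# Assumed altitudes: ~550 km for a Starlink-like LEO shell, 35,786 km for GEO
for label, altitude in [("LEO, 550 km", 550.0), ("GEO, 35,786 km", 35_786.0)]:
    print(f"{label}: one-way {one_way_delay_ms(altitude):.2f} ms, "
          f"bent-pipe RTT {bent_pipe_rtt_ms(altitude):.1f} ms")
```

Under these assumptions the one-way delay is roughly 1.8 ms for LEO versus about 119 ms for GEO. Real paths are longer because of slant range at low elevation angles, inter-satellite routing, and terrestrial backhaul.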


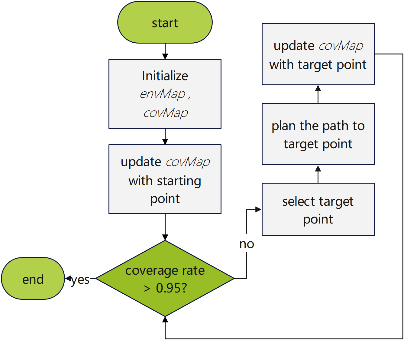

This paper proposes a novel coverage path planning algorithm for autonomous robots in grid-based environments, focusing on search and rescue missions. Efficient exploration and mapping of disaster areas are crucial for locating survivors and guiding rescue operations. The algorithm handles complex, dynamic environments by selecting target points with a weighted scoring function that balances exploration and exploitation while minimizing travel distance and energy consumption. It incorporates visibility constraints to simulate real-world sensor limitations, ensuring accurate coverage updates and thorough exploration. Integrating the A* algorithm enables optimal navigation around obstacles, improving efficiency in cluttered spaces. Such efficiency is vital for mission success and survival rates in time-sensitive rescue scenarios.
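
A weighted target score combined with A* navigation can be sketched as follows. This is a minimal illustration on a 4-connected occupancy grid, not the paper's implementation. The score form (information gain minus weighted path length), the weights `w_gain` and `w_dist`, and the per-candidate gains are all hypothetical. The visibility model that would compute those gains is omitted.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]
Grid = List[List[int]]  # 0 = free, 1 = obstacle


def astar(grid: Grid, start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """4-connected A* with a Manhattan-distance heuristic; returns the cell path or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    g_cost: Dict[Cell, int] = {start: 0}
    parent: Dict[Cell, Cell] = {}
    while open_heap:
        _, g, cur = heapq.heappop(open_heap)
        if cur == goal:  # rebuild the path by following parent links back to start
            path = [cur]
            while cur in parent:
                cur = parent[cur]
                path.append(cur)
            return path[::-1]
        if g > g_cost[cur]:
            continue  # stale entry superseded by a cheaper route
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                if g + 1 < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = g + 1
                    parent[nxt] = cur
                    heapq.heappush(open_heap, (g + 1 + h(nxt), g + 1, nxt))
    return None


def score_target(info_gain: int, path_len: int, w_gain: float = 1.0, w_dist: float = 0.5) -> float:
    """Hypothetical weighted score: reward newly visible cells, penalize travel distance."""
    return w_gain * info_gain - w_dist * path_len


def pick_next_target(grid: Grid, robot: Cell, candidates: Dict[Cell, int]) -> Optional[Cell]:
    """Choose the reachable candidate with the highest score; candidates map cell -> info gain."""
    best, best_score = None, float("-inf")
    for target, gain in candidates.items():
        path = astar(grid, robot, target)
        if path is None:
            continue  # unreachable target
        score = score_target(gain, len(path) - 1)
        if score > best_score:
            best, best_score = target, score
    return best


grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
print(astar(grid, (0, 0), (2, 0)))
print(pick_next_target(grid, (0, 0), {(0, 3): 3, (2, 0): 4}))
```

Running A* once per candidate is simple but costly on large maps. A single Dijkstra expansion from the robot's cell would give the distances to all candidates at once.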


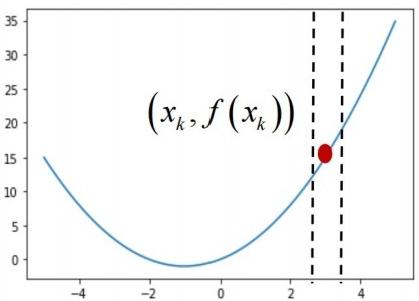

Descent algorithms, particularly gradient-based methods, are central to the optimization of deep learning models. However, their application is often accompanied by significant mathematical challenges, including convergence guarantees, the avoidance of local minima, and the trade-off between computational efficiency and accuracy. This article first establishes the theoretical underpinnings of descent algorithms, linking them to dynamical systems and extending their applicability to broader scenarios. It then examines the limitations of first-order methods, highlighting the need for advanced techniques to ensure robust optimization. The discussion centers on the convergence analysis of descent algorithms, emphasizing both asymptotic and finite-time convergence properties. Strategies to avoid convergence to local minima and saddle points, such as leveraging the strict saddle property and perturbation methods, are examined in depth. The article also evaluates the performance of descent algorithms through the lens of structural optimization, offering insights into their practical effectiveness. The conclusion reflects on the theoretical advances and practical implications of these algorithms and addresses ethical considerations in their deployment. By bridging theory and practice, this article aims to provide a deeper understanding of descent algorithms and their role in advancing artificial intelligence.
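
A toy function shows the saddle-escape idea behind perturbation methods. The sketch below uses f(x, y) = x^2 + y^4/4 - y^2/2, which has a strict saddle at (0, 0) (Hessian eigenvalues 2 and -1) and minima at (0, ±1). Started at (1, 0), plain gradient descent never leaves the line y = 0 and converges to the saddle. A variant that adds a small random kick whenever the gradient norm is tiny escapes to a minimum instead. The function, step size, tolerance, and perturbation radius are illustrative assumptions. The simplified rule is only in the spirit of perturbed gradient descent, not the article's exact method.

```python
import math
import random


def f(x: float, y: float) -> float:
    """Toy objective with a strict saddle at (0, 0) and minima at (0, +1) and (0, -1)."""
    return x ** 2 + y ** 4 / 4 - y ** 2 / 2


def grad(x: float, y: float) -> tuple:
    """Gradient of f."""
    return 2.0 * x, y ** 3 - y


def gradient_descent(x: float, y: float, lr: float = 0.1, steps: int = 500) -> tuple:
    """Plain gradient descent with a fixed step size."""
    for _ in range(steps):
        gx, gy = grad(x, y)
        x, y = x - lr * gx, y - lr * gy
    return x, y


def perturbed_gradient_descent(x: float, y: float, lr: float = 0.1, steps: int = 500,
                               grad_tol: float = 1e-3, radius: float = 1e-2,
                               seed: int = 0) -> tuple:
    """Gradient descent that adds a small uniform random kick whenever the gradient is nearly zero."""
    rng = random.Random(seed)
    for _ in range(steps):
        gx, gy = grad(x, y)
        if math.hypot(gx, gy) < grad_tol:
            # Approximate first-order stationary point: perturb to escape a possible saddle
            x += rng.uniform(-radius, radius)
            y += rng.uniform(-radius, radius)
            gx, gy = grad(x, y)
        x, y = x - lr * gx, y - lr * gy
    return x, y


print(gradient_descent(1.0, 0.0))            # stalls at the saddle, f = 0
print(perturbed_gradient_descent(1.0, 0.0))  # ends near a minimum, f close to -0.25
```

Near a true minimum the kick is harmless, because descent pulls the iterate straight back. That harmlessness is why the strict saddle property (every saddle has a direction of negative curvature) is what makes such perturbations effective.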


Recommendation algorithms are a crucial research direction in artificial intelligence and data science, with widespread applications in e-commerce, streaming media, education, healthcare, and social networks. The demand for accurate and personalized information has driven the development of recommendation systems. However, different application scenarios place different emphases on recommendation algorithms: e-commerce focuses on conversion rates, social platforms emphasize expanding user relationships, and healthcare prioritizes accuracy and privacy protection. Consequently, optimizing recommendation algorithms for industry-specific characteristics has become a key research focus. This paper summarizes the core technologies of recommendation algorithms and their applications across domains. It also analyzes current challenges such as data sparsity, the cold start problem, and privacy protection, along with corresponding countermeasures. To address these issues, researchers have proposed optimization methods that integrate deep learning and reinforcement learning, as well as improvements such as cross-domain data fusion and user intent modeling. Future trends in recommendation systems include cross-domain recommendation, stronger privacy protection techniques, improved interpretability, and the adoption of federated learning to keep user data secure while improving recommendation quality. With the continued advancement of artificial intelligence, recommendation systems are expected to become more intelligent, personalized, and secure, providing users with more accurate and efficient recommendation services.
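
As a generic illustration of one widely used core technique, and of why data sparsity and cold start matter, the sketch below implements item-based collaborative filtering with cosine similarity over a sparse rating dictionary. The ratings, the user and item names, and the popularity fallback are hypothetical. This is not presented as a method from the paper.

```python
import math
from typing import Dict, Optional

Ratings = Dict[str, Dict[str, float]]  # user -> {item: rating}; missing entries are unrated


def item_cosine(ratings: Ratings, a: str, b: str) -> float:
    """Cosine similarity between items a and b over users who rated both."""
    num = sa = sb = 0.0
    for items in ratings.values():
        if a in items and b in items:
            num += items[a] * items[b]
            sa += items[a] ** 2
            sb += items[b] ** 2
    return num / math.sqrt(sa * sb) if sa and sb else 0.0


def predict(ratings: Ratings, user: str, item: str) -> Optional[float]:
    """Item-based CF: similarity-weighted average of the user's own ratings."""
    num = den = 0.0
    for other, r in ratings.get(user, {}).items():
        if other == item:
            continue
        s = item_cosine(ratings, item, other)
        if s > 0:
            num += s * r
            den += s
    return num / den if den else None  # None: no usable signal (sparsity or cold start)


def popularity_fallback(ratings: Ratings, item: str) -> Optional[float]:
    """Mean rating of the item across all users, usable when predict() returns None."""
    vals = [items[item] for items in ratings.values() if item in items]
    return sum(vals) / len(vals) if vals else None


ratings = {
    "u1": {"A": 5, "B": 4},
    "u2": {"A": 4, "B": 5, "C": 2},
    "u3": {"B": 3, "C": 5},
}
print(predict(ratings, "u1", "C"))
print(predict(ratings, "new_user", "C"), popularity_fallback(ratings, "C"))
```

With so few co-ratings, items A and C get a similarity of exactly 1.0 from a single shared user, a typical sparsity artifact, and the prediction for u1 on C comes out at about 4.56. A user with no history gets `None` from `predict`. At that point a fallback such as item popularity, or richer signals such as the cross-domain data the abstract mentions, has to take over.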


Lightweight pruning facilitates the deployment of machine learning models on resource-constrained devices. This review systematically examines pruning techniques along different technical paths, as well as lightweighting strategies that incorporate pruning. For pruning techniques, the review covers in turn the principles, implementation methods, and applicable scenarios of structured, unstructured, and automated pruning. Structured pruning holds significant advantages for hardware implementation. Unstructured pruning shows distinctive potential for fine-grained optimization, while automated pruning methods achieve more precise model compression through intelligent search strategies. The review then focuses on the synergy between pruning and other lightweighting approaches. It presents the integration of pruning with quantization, the combination of pruning and distillation, and the pruning concepts built into lightweight neural network architectures. These integration strategies not only improve a model's operational efficiency in resource-constrained environments but also offer new ideas for further compression while maintaining high accuracy. The review concludes by highlighting current challenges facing pruning technologies and offering insights into future research directions.
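
The contrast between unstructured and structured pruning can be made concrete on a single weight matrix. The following sketch assumes simple magnitude criteria: smallest |w| for unstructured pruning and smallest row L1 norm for structured pruning. These are common baseline criteria rather than methods attributed to the review, and the example weights are made up.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def unstructured_prune(w: Matrix, sparsity: float) -> Matrix:
    """Magnitude pruning: zero the `sparsity` fraction of individual weights with the smallest |w|.

    The matrix keeps its shape, so speedups require sparse storage or sparse kernels.
    """
    coords = [(i, j) for i in range(len(w)) for j in range(len(w[i]))]
    coords.sort(key=lambda ij: abs(w[ij[0]][ij[1]]))
    k = int(sparsity * len(coords))  # number of weights to remove
    pruned = [row[:] for row in w]
    for i, j in coords[:k]:
        pruned[i][j] = 0.0
    return pruned


def structured_prune_rows(w: Matrix, keep: int) -> Tuple[Matrix, List[int]]:
    """Structured pruning: keep the `keep` output neurons (rows) with the largest L1 norm.

    The result is a smaller dense matrix that ordinary hardware runs faster; in a real
    network the matching input columns of the next layer must be removed as well.
    """
    order = sorted(range(len(w)), key=lambda i: sum(abs(x) for x in w[i]), reverse=True)
    kept = sorted(order[:keep])  # restore the original row order
    return [w[i][:] for i in kept], kept


w = [[0.1, -2.0, 0.3], [0.05, 0.02, -0.01], [1.5, -0.2, 0.4]]
print(unstructured_prune(w, 0.5))
print(structured_prune_rows(w, 2))
```

The unstructured result keeps the 3×3 shape with four zeros. The structured result is a genuinely smaller 2×3 matrix, which is why structured pruning maps more directly onto standard hardware.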


There is an emerging shift from conventional artificial intelligence systems toward embodied intelligence, in which intelligent functions are integrated with a physical carrier. Unlike earlier, purely abstract AI, embodied intelligence draws on theories and technologies from artificial intelligence, robotics, mechanical manufacturing, and design. It accomplishes specific tasks through interaction with the real world, thereby exerting a direct or indirect influence on the physical environment. New technologies such as OpenAI's Sora mark a step closer to AGI (Artificial General Intelligence). With the continuous improvement of large models and deep learning, embodied intelligence now has unprecedented opportunities for development. This paper therefore focuses on the prospects of embodied intelligence and the opportunities brought by large models. It is based on a literature review that summarizes and discusses a large body of previous work. By taking advantage of large models, embodied AI is poised to make progress in human interaction, achieve greater accuracy in execution, and expand into more fields.


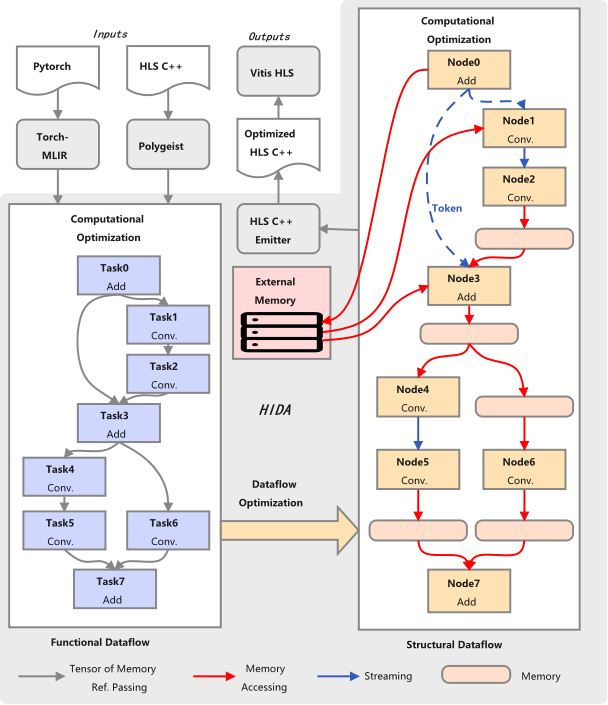

With the widespread application of Deep Neural Networks (DNNs) in edge computing and embedded systems, edge devices face challenges such as limited computational resources and strict power constraints. Heterogeneous platforms that pair Microcontroller Units (MCUs) with dedicated Neural Processing Units (NPUs) are low-cost and suited to mass production, and they provide a more practical solution for edge AI. This paper proposes a neural network optimization framework for NPU-MCU heterogeneous computing platforms. Using techniques such as algorithm partitioning, pipeline design, data flow optimization, and task scheduling, the framework exploits the computational strengths of NPUs and the control capabilities of MCUs, significantly improving the system's computational efficiency and energy efficiency. Specifically, the framework assigns compute-intensive tasks (e.g., convolution, matrix multiplication) to NPUs and control-intensive tasks (e.g., task scheduling, data preprocessing) to MCUs. Combined with pipeline design and data flow optimization, this assignment maximizes hardware resource utilization, reduces power consumption, and alleviates memory bandwidth pressure. Experimental results show that the framework performs exceptionally well on edge computing and IoT devices, effectively addressing the challenges of deploying neural networks in resource-constrained scenarios. This research provides a systematic optimization method for the industrial application of edge intelligence, with significant theoretical and practical value.
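
The compute/control assignment rule and the benefit of pipelining can be sketched with a toy latency model. Everything below is hypothetical: the operator list, the per-device latency numbers, and the rule that routes only `conv2d`, `depthwise_conv2d`, and `matmul` to the NPU. Data-transfer and synchronization costs between the two processors are ignored.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical rule: these operator kinds are treated as compute-intensive and go to the NPU
COMPUTE_INTENSIVE = {"conv2d", "depthwise_conv2d", "matmul"}


@dataclass
class Op:
    name: str
    kind: str
    cost_ms: Dict[str, float]  # hypothetical latency estimates per device ("npu", "mcu")


def partition(ops: List[Op]) -> Dict[str, List[Op]]:
    """Send compute-intensive ops to the NPU and everything else (control, pre/post-processing) to the MCU."""
    plan: Dict[str, List[Op]] = {"npu": [], "mcu": []}
    for op in ops:
        plan["npu" if op.kind in COMPUTE_INTENSIVE else "mcu"].append(op)
    return plan


def stage_ms(plan: Dict[str, List[Op]], device: str) -> float:
    """Total latency of the ops assigned to one device."""
    return sum(op.cost_ms[device] for op in plan[device])


def sequential_frame_ms(plan: Dict[str, List[Op]]) -> float:
    """Per-frame time when a frame's MCU and NPU work run back to back with no overlap."""
    return stage_ms(plan, "mcu") + stage_ms(plan, "npu")


def pipelined_frame_ms(plan: Dict[str, List[Op]]) -> float:
    """Steady-state time per frame for a two-stage pipeline: the MCU works on neighbouring
    frames while the NPU runs frame n, so throughput is bounded by the slower stage."""
    return max(stage_ms(plan, "mcu"), stage_ms(plan, "npu"))


ops = [
    Op("preprocess", "resize", {"mcu": 2.0, "npu": 9.0}),
    Op("conv1", "conv2d", {"mcu": 40.0, "npu": 3.0}),
    Op("fc", "matmul", {"mcu": 10.0, "npu": 1.0}),
    Op("argmax", "postprocess", {"mcu": 0.5, "npu": 2.0}),
]
plan = partition(ops)
print([op.name for op in plan["npu"]], sequential_frame_ms(plan), pipelined_frame_ms(plan))
```

With these made-up numbers the script prints `['conv1', 'fc'] 6.5 4.0`. Overlapping MCU pre- and post-processing with NPU inference bounds the per-frame time by the slower stage instead of the sum of both.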


In an era of rapid technological evolution, cloud computing has become indispensable across industries due to its cost-efficiency, scalability, and accessibility. Yet privacy and security concerns persist, as sensitive data can be vulnerable to breaches and unauthorized access. At present, the two mainstream cloud computing architectures are centralized and decentralized, and each has advantages and disadvantages in terms of data confidentiality, integrity, and availability. This paper presents a comparative analysis of the two architectures, synthesizing insights from recent studies and emphasizing the potential of emerging technologies such as blockchain to strengthen privacy protection. Examining both architectures at a high level, the study explores how decentralization, supported by distributed consensus mechanisms, can address vulnerabilities and enhance trust among stakeholders. It concludes that a decentralized approach, when underpinned by robust cryptographic methods, offers stronger safeguards against evolving threats.
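
A hash-chained log illustrates the integrity property that blockchain-style designs contribute. This single-node Python sketch uses SHA-256 from the standard library `hashlib`. The block layout and payload strings are hypothetical. The sketch deliberately omits replication and the distributed consensus mechanism the abstract refers to, so it shows tamper evidence only, not decentralization.

```python
import hashlib
import json
from typing import List

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, prev_hash: str, payload: str) -> str:
    """SHA-256 over a canonical JSON encoding of the block's contents."""
    data = json.dumps({"index": index, "prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


def append_block(chain: List[dict], payload: str) -> None:
    """Link a new block to the hash of the current tail."""
    prev = chain[-1]["hash"] if chain else GENESIS_PREV
    index = len(chain)
    chain.append({"index": index, "prev": prev, "payload": payload,
                  "hash": block_hash(index, prev, payload)})


def verify_chain(chain: List[dict]) -> bool:
    """Recompute every hash and link; editing any earlier block breaks verification."""
    prev = GENESIS_PREV
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != prev:
            return False
        if block["hash"] != block_hash(i, prev, block["payload"]):
            return False
        prev = block["hash"]
    return True


chain: List[dict] = []
append_block(chain, "digest-of-encrypted-file-1")      # hypothetical payloads
append_block(chain, "access-grant:user42:file-1")
print(verify_chain(chain))  # True
chain[0]["payload"] = "tampered"
print(verify_chain(chain))  # False
```

In a privacy-oriented design one would typically record digests of encrypted files on the chain rather than the files themselves. That choice keeps integrity checking separate from confidentiality.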


Automatic speech recognition (ASR) is becoming increasingly important in everyday life as a vital means of human-computer interaction. Maintaining high recognition accuracy in noisy environments is key to the wide adoption of an ASR model. This article investigates robustness to different kinds of background noise in Mandarin Chinese speech recognition, using a Bidirectional Long Short-Term Memory (BiLSTM) network as the baseline model. To compare the effects of different data augmentation methods and model structures in controlled experiments, four commonly used data augmentation methods are applied during training, both individually and in combination. Separately, two structural additions, CNNs and an attention mechanism, are combined with the baseline model. After training, each model is tested under three kinds of background noise (car, café, and white noise) to evaluate the noise robustness of each method. Among the four data augmentation methods, random volume increase/decrease improved recognition accuracy the most, while time-frequency masking unexpectedly increased the Character Error Rate (CER). Of the two structural additions, CNNs performed better than the attention mechanism.
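
Both the evaluation metric and the best-performing augmentation can be written in a few lines. The sketch below computes CER as character-level edit distance divided by reference length. It implements random volume augmentation as a single random gain in decibels followed by clipping. The ±6 dB range and the [-1, 1] sample convention are assumptions, not the settings used in the article.

```python
import random
from typing import List, Optional


def cer(reference: str, hypothesis: str) -> float:
    """Character Error Rate: (substitutions + deletions + insertions) / len(reference),
    computed with a Levenshtein dynamic program over characters."""
    prev = list(range(len(hypothesis) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(reference, start=1):
        cur = [i] + [0] * len(hypothesis)
        for j, h in enumerate(hypothesis, start=1):
            cur[j] = min(prev[j] + 1,             # delete reference char r
                         cur[j - 1] + 1,          # insert hypothesis char h
                         prev[j - 1] + (r != h))  # substitute, free when chars match
        prev = cur
    return prev[-1] / max(len(reference), 1)


def random_volume(samples: List[float], low_db: float = -6.0, high_db: float = 6.0,
                  rng: Optional[random.Random] = None) -> List[float]:
    """Random volume augmentation: apply one gain drawn uniformly in dB, then clip to [-1, 1]."""
    rng = rng or random.Random()
    gain = 10 ** (rng.uniform(low_db, high_db) / 20)
    return [max(-1.0, min(1.0, s * gain)) for s in samples]


print(cer("今天天气很好", "今天天汽很好"))  # one substitution out of six characters
```

Because CER counts insertions, it can exceed 1.0 when a hypothesis is much longer than its reference.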


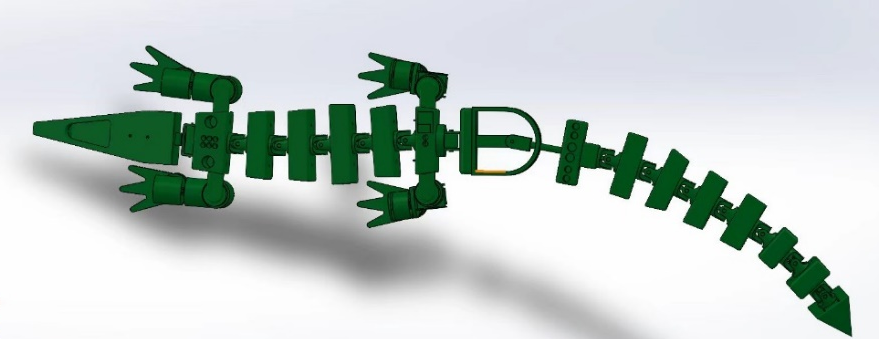

The crocodile simulation robot is a biomimetic robot designed and manufactured by integrating mechanical technology, electronics, and bionic principles. Modeled on the appearance of a crocodile, it can mimic the animal's movements and behaviors. This study designs such a robot with a highly realistic appearance, flexible and agile movement, sensory capabilities, and strong environmental perception and adaptability. The design uses spherical gears to control head movement, universal joints to connect the torso, and a cable-driven system to steer and coordinate the torso and tail. The study outlines the robot's overall design concept, key technologies, and expected application scenarios. Leveraging its lifelike motion and sensory capabilities, the robot is expected to be applied in fields such as environmental monitoring and scientific research.
