Volume 22

Published in October 2023

Volume title: Proceedings of the 5th International Conference on Computing and Data Science

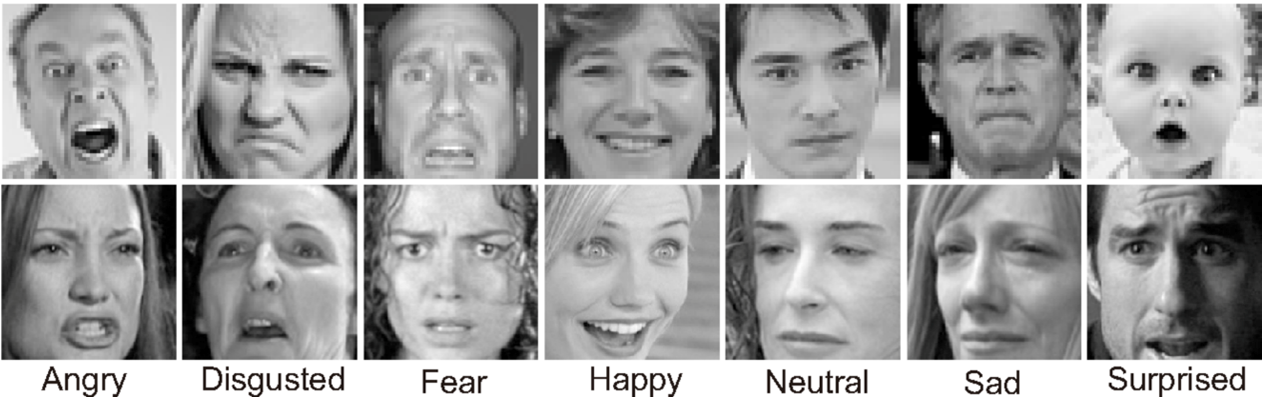

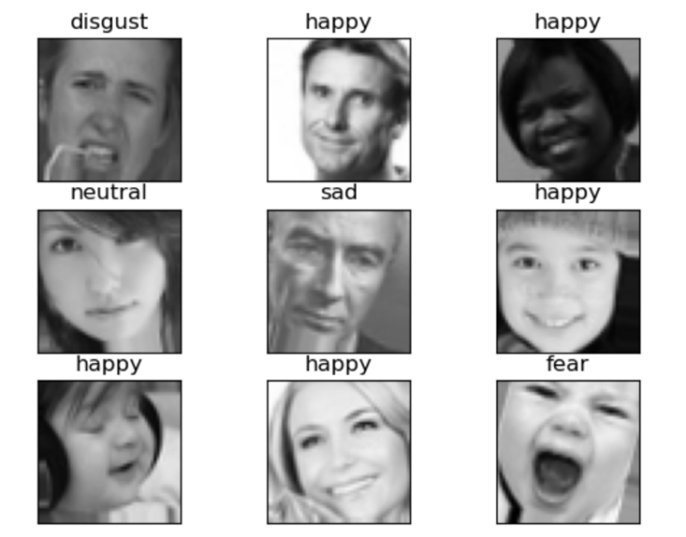

Facial Emotion Recognition (FER) is an important task in computer vision and machine learning. This study aims to improve facial expression recognition accuracy by incorporating attention mechanisms into a Convolutional Neural Network (CNN) trained on the FER2013 dataset, which consists of grayscale images labeled with seven expressions. The proposed combination of the CNN architecture and attention mechanisms is described in detail, with emphasis on the operations and interactions of their components. The new model is evaluated experimentally, and its accuracy is compared with that of existing approaches. The results show that the CNN with attention mechanisms outperforms the original CNN, reaching an accuracy of 69.07% versus 68.04%. The study also analyzes the confusion matrix, revealing that some emotions are hard to recognize because of limited training data and ambiguous facial features. For future work, the study suggests addressing these limitations through data augmentation and narrowing the gap between training and testing accuracy. Overall, this research highlights the potential of attention mechanisms to enhance facial expression recognition systems and to support advanced applications in various domains.
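The abstract does not specify which attention mechanism is used. Purely as an illustration, the sketch below assumes squeeze-and-excitation style channel attention: each channel of a CNN feature map is averaged to one value, a small two-layer network turns those values into weights in (0, 1), and each channel is rescaled by its weight. The function names and the hand-picked toy weights are hypothetical, not taken from the paper; in a real model the weights are learned.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def channel_attention(feature_map, w1, w2):
    """Squeeze-and-excitation style channel attention (assumed, illustrative).

    feature_map: list of C channels, each an H x W list of lists.
    w1: hidden x C weights, w2: C x hidden weights.
    Returns (rescaled feature map, per-channel attention weights).
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    desc = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]
    # Excitation: ReLU hidden layer, then sigmoid, yields weights in (0, 1).
    hidden = [max(0.0, sum(w * d for w, d in zip(row, desc))) for row in w1]
    scales = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # Scale: multiply every activation in a channel by that channel's weight.
    out = [[[v * s for v in row] for row in ch] for ch, s in zip(feature_map, scales)]
    return out, scales

# Toy usage with hypothetical values: two 2x2 channels, hidden size 1.
fmap = [[[1.0, 1.0], [1.0, 1.0]],   # channel 0: active
        [[0.0, 0.0], [0.0, 0.0]]]   # channel 1: inactive
w1 = [[1.0, 1.0]]                   # C=2 -> hidden=1
w2 = [[2.0], [-2.0]]                # hidden=1 -> C=2
out, scales = channel_attention(fmap, w1, w2)
```

Here channel 0 is kept with weight sigmoid(2) and channel 1 is suppressed with weight sigmoid(-2), which is the "emphasize informative channels" behavior attention is meant to add on top of plain convolutions.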

The potential of electric vehicles (EVs) to reduce greenhouse gas emissions in the transportation sector has given EV adoption a considerable boost in recent years. At the same time, big data analytics has grown exponentially, creating unprecedented opportunities to extract valuable insights and optimize many industrial sectors. This paper presents a comprehensive overview of the intersection between electric vehicles and big data analysis. It first surveys EV-related data sources and discusses data computing platforms. It then analyzes use cases of big data analysis in EVs, covering key areas such as energy management, charging infrastructure optimization, and vehicle condition monitoring, and shows how big data can be crucial for integrating EVs into green smart cities. Finally, the author outlines future research directions and opportunities for applying big data techniques to EV adoption. In this way, the paper serves as a roadmap for future research on data analytics for EV integration.

While digital meters can automatically communicate with a database to store readings, many industries still use analog meters that are not economically or physically viable to replace. Instead, computer vision modules can be attached to cameras to read and record values automatically. This paper tests three implementations of gauge reading: two simple methods based on the Hough line and circle transforms, and one lightweight naive line rotation method. A test dataset of 46 images was created from various sources, including variations such as pointer rotations, binarizations, and text or logo removal. Results showed that the line rotation technique was substantially more robust and accurate than both Hough line implementations. Two major obstructions were identified: pointer tails and dense text/logos. Removing them with Photoshop tools improved the average accuracy to roughly 1 degree from ground truth. This is accurate enough to replace human readers in most situations that do not demand high precision, and the method is lightweight enough to run under nearly all circumstances. Future research will seek to validate these findings by testing line rotation on a wider variety of gauges.
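The abstract does not detail the line rotation method. One plausible reading, sketched here as an assumption only, is to sweep a ray around the dial centre in fixed angular steps and keep the angle whose ray covers the most dark pixels of a binarized image. The image format (`image[y][x] == 1` for dark pixels), the known centre, and the helper names are hypothetical, not the paper's implementation.

```python
import math

def ray_pixels(cx, cy, angle_deg, length):
    """Set of integer pixel coordinates along a ray from (cx, cy).

    Angles are counterclockwise from the +x axis; image rows grow downward,
    hence the minus sign on the y component.
    """
    t = math.radians(angle_deg)
    return {(round(cx + d * math.cos(t)), round(cy - d * math.sin(t)))
            for d in range(1, length + 1)}

def read_pointer_angle(image, cx, cy, length, step=1):
    """Naive line rotation: return the angle (degrees) whose ray from the
    dial centre covers the most dark pixels (image[y][x] == 1)."""
    h, w = len(image), len(image[0])

    def score(angle):
        return sum(1 for x, y in ray_pixels(cx, cy, angle, length)
                   if 0 <= x < w and 0 <= y < h and image[y][x] == 1)

    return max(range(0, 360, step), key=score)

# Synthetic check: draw a pointer at 60 degrees on a blank 101x101 image.
size, c, n = 101, 50, 45
img = [[0] * size for _ in range(size)]
for x, y in ray_pixels(c, c, 60, n):
    img[y][x] = 1
angle = read_pointer_angle(img, c, c, n)
```

Converting the detected angle into a gauge reading would additionally require the dial's calibration (the angles of its minimum and maximum marks), which this sketch leaves out. The abstract's observation that pointer tails and dense text hurt accuracy fits this kind of method, since any extra dark pixels along a wrong ray raise its score.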

Facial emotion recognition systems often show low accuracy in specific scenarios because they are deployed across a wide range of environments and conditions. In this study, the Facial Expression Recognition 2013 (FER-2013) dataset from Kaggle is used to train the models, and the experimental outcomes are then analyzed. The dataset comprises a training set and a testing set, each labeled with seven distinct emotions ranging from "angry" to "surprise". Two models are developed to classify facial emotions automatically from images: a MobileNet-based model and a self-built convolutional neural network. Both models reach an accuracy of approximately 60% but perform poorly on the "neutral" label. Confusion matrices and saliency maps are used to compare model performance across emotion labels and to analyze the dominant facial regions for each label. Based on a comparison of representative cases, two potential factors contributing to these limitations are identified: a paucity of training data and the presence of ambiguous features. The findings are expected to guide future improvements to facial emotion recognition models and enhance their applicability in diverse scenarios.
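A confusion matrix makes per-label weaknesses such as the poor "neutral" predictions visible. The minimal sketch below builds one from integer labels and derives per-class recall (correct predictions divided by the number of true samples of that class). The three-class toy labels are hypothetical; for FER-2013 one would use seven classes, for example in the alphabetical order angry, disgust, fear, happy, neutral, sad, surprise (an assumed ordering).

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true labels, columns are predicted labels."""
    m = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        m[t][p] += 1
    return m

def per_class_recall(m):
    """Diagonal entry divided by row sum: the fraction of each true class
    that was predicted correctly (0.0 for classes with no samples)."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(m)]

# Hypothetical predictions for three classes.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2]
cm = confusion_matrix(y_true, y_pred, 3)
recall = per_class_recall(cm)
```

Off-diagonal entries show which classes are confused with each other; here half of class 1 is predicted as class 2, which the per-class recall of 0.5 summarizes.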

View pdf

View pdf

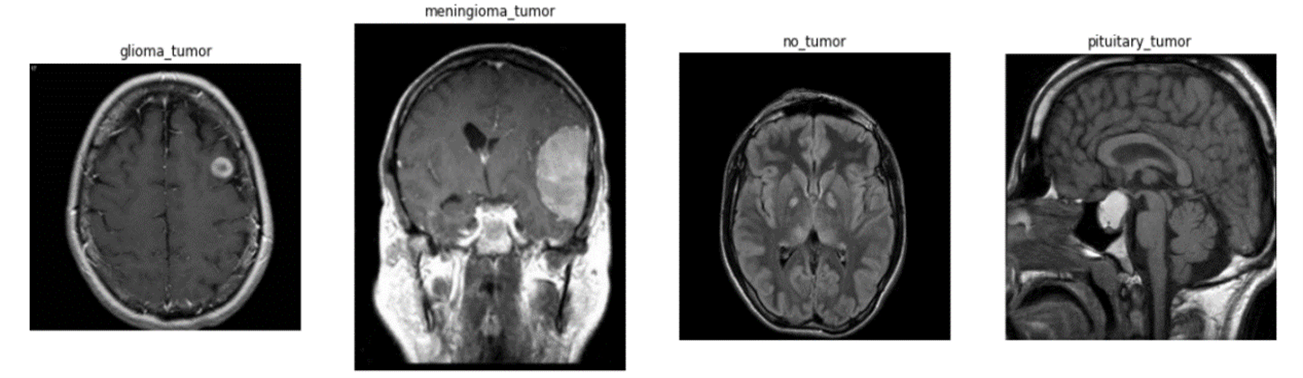

Brain tumors, recognized as among the most formidable and aggressive diseases worldwide, continue to pose significant challenges for clinicians in diagnosis and treatment. Reducing the burden on doctors and coping with resource limitations in some hospitals require efficient and dependable alternative approaches. Convolutional Neural Networks (CNNs), known for their strength in image recognition, have great potential to address this issue. With transfer learning, established models such as VGG-16 and MobileNet can be reused to build strong models in a comparatively short time. This paper constructs and evaluates VGG-16- and MobileNet-based models trained with transfer learning to explore the applicability of these two classical models to brain tumor diagnosis. The ultimate goal is to help doctors and hospitals ease the challenges of brain tumor diagnosis. The results demonstrate the effectiveness of CNN-based brain tumor recognition.

View pdf

View pdf

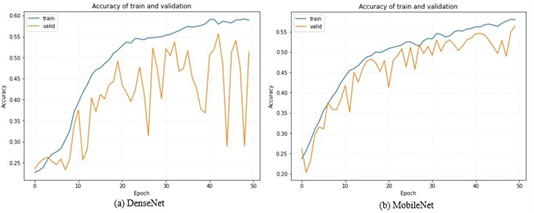

Accurate emotion detection is important in psychology, which makes choosing an appropriate model for facial expression classification essential. In this study, emotion detection serves as the classification task for comparing MobileNet, ResNet, and DenseNet; specifically, MobileNet, ResNet50, and DenseNet169 are selected for comparative analysis. The FER-2013 dataset from Kaggle contains a training set of 29,709 samples and a test set of 3,589 samples across seven facial expression categories. For preprocessing, normalization and data augmentation are applied: the whole dataset is normalized by dividing pixel values by 255 and augmented with a Keras image generator. In the model-building step, all test models share the same structure: the pre-trained model from Keras Applications is followed by one global average pooling layer and one dense output layer with a softmax activation. All hyperparameters were also kept the same during training. After training, confusion matrices are used to show relationships between classes, and the loss and accuracy curves of each model are plotted for analysis. Experimental results show that MobileNet achieves 56.08% accuracy on the test set, outperforming DenseNet169 and ResNet50, with a relatively stable loss.
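The preprocessing and the shared classification head described above reduce to three small computations: dividing pixels by 255, global average pooling over each backbone feature channel, and a dense layer followed by softmax. The pure-Python sketch below spells these out on toy inputs; in Keras the head would typically be written with `GlobalAveragePooling2D` and `Dense(7, activation="softmax")` on top of the backbone, but the function names and values here are illustrative assumptions, not the study's code.

```python
import math

def normalize(image):
    """Scale 8-bit pixel values into [0, 1] by dividing by 255."""
    return [[p / 255.0 for p in row] for row in image]

def global_average_pool(feature_maps):
    """Collapse each H x W channel to its mean, giving a length-C vector."""
    return [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_maps]

def dense_softmax(x, weights, biases):
    """Dense output layer plus softmax; weights is n_classes x len(x)."""
    logits = [sum(w * v for w, v in zip(row, x)) + b
              for row, b in zip(weights, biases)]
    m = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Toy usage: two feature channels from a hypothetical backbone, 7 classes.
features = global_average_pool([[[1, 3], [5, 7]], [[0, 0], [0, 2]]])
probs = dense_softmax(features, [[0.0, 0.0]] * 7, [0.0] * 7)
```

With all-zero weights and biases every class receives probability 1/7, which is a quick sanity check that the softmax outputs form a valid distribution.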

Galaxy morphology classification is essential for studying the formation and evolution of galaxies. However, previous studies based on Convolutional Neural Networks (CNNs) have mainly focused on the structure of the convolutional layers without exploring the design of the fully connected layers. This paper therefore trains and compares CNNs with four types of fully connected layers on the Galaxy10 DECaLS dataset. Each type of fully connected layer contains one dropout layer, and dropout rates of 0%, 10%, 20%, 30%, 40%, 50%, 60%, 70%, 80%, and 90% are tested to investigate how the dropout rate in the fully connected layers affects overall CNN performance. The models use EfficientNetB0 and DenseNet121 as feature extraction networks. Feature-wise standardization, morphological operations, and data augmentation are used for preprocessing, and techniques including class weights, exponential learning rate decay, and early stopping are applied to improve model performance. Saliency maps and Grad-CAM are used to interpret model behavior. Results show that the architecture of the fully connected layers has a significant effect on overall performance. With the same dropout rate and convolutional layers, models using global average pooling followed by an additional dense layer outperform the others in most cases. The best model obtained an accuracy of 85.23% on the test set. The dropout experiments further indicate that a dropout layer can reduce the influence of the fully connected layer architecture on the overall performance of some CNNs, leading to better performance with fewer parameters.
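To make the tested dropout rates concrete, the sketch below implements inverted dropout on a plain list of activations: during training each unit is zeroed with probability `rate` and the survivors are scaled by 1 / (1 - rate), so the expected activation stays the same, and at inference the input passes through unchanged. Keras's `Dropout` layer applies the same inverted scaling; the function itself is a hypothetical illustration, not the paper's code.

```python
import random

def dropout(x, rate, training=True, rng=random):
    """Inverted dropout over a list of activations.

    Zeroes each unit with probability `rate` during training and scales the
    kept units by 1 / (1 - rate); returns a copy of x at inference time.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(x)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in x]

# With rate 0.9 only about 10% of units survive, each scaled by 10.
out = dropout([1.0] * 10, 0.9, rng=random.Random(0))
```

The scaling is why a 90% rate is still usable at all: the surviving units carry ten times their value, keeping the layer's expected output unchanged, although the training signal becomes much noisier.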

View pdf

View pdf

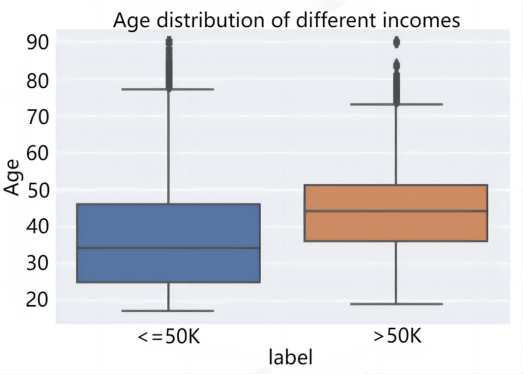

National income level has long been a topic of concern, and many factors influence income. This paper examines dimensions such as work, age, education, marital status, gender, and weekly working hours to characterize the people whose annual income exceeds $50,000. Data collected from the U.S. Census are used as the dataset and split into a training set and a test set, and logistic regression and decision tree models are then built to predict income. The experimental results show that the logistic regression model achieves an accuracy (ACC) of 0.773 and an AUC of 0.515, while the decision tree model achieves an ACC of 0.860 and an AUC of 0.900. This confirms that the decision tree performs better at predicting income.
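The two reported metrics measure different things. The minimal sketch below computes ACC from hard predictions and AUC as the probability that a randomly chosen positive sample is scored above a randomly chosen negative one (ties count one half), which equals the area under the ROC curve. The toy labels and scores are hypothetical, and the pairwise loop is quadratic, so it is meant for illustration rather than for a full census dataset.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def roc_auc(y_true, scores):
    """AUC via pairwise ranking: P(score of a positive > score of a negative),
    counting ties as one half. O(n_pos * n_neg)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical labels (1 = income above $50,000) and model scores.
y = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
preds = [1 if s >= 0.5 else 0 for s in scores]  # threshold at 0.5
acc, auc = accuracy(y, preds), roc_auc(y, scores)
```

Because ACC depends on a single decision threshold and on class balance, while AUC measures how well the scores rank positives above negatives, the two can diverge: on an imbalanced dataset a classifier can reach a fairly high ACC while its scores rank the classes little better than chance (AUC near 0.5).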

With recent advances in technology, machine learning has become an effective auxiliary tool in the medical field. However, the effectiveness of transfer learning architectures pre-trained on non-medical image data remains unclear. In this paper, two VGG-16 models, a pre-trained Convolutional Neural Network architecture, were built to classify kidney CT images into four categories: normal, stone, cyst, and tumor. The two VGG-16 models have identical parameters except for their pre-trained weights: one uses weights trained on ImageNet, and the other uses weights trained on a random large-scale dataset. To gain more detailed insight into the models' performance, saliency maps and Grad-CAM are employed to assess how well each model extracts relevant features from the CT images. The VGG-16 model pre-trained on ImageNet achieved 98.96% accuracy, about 30% higher than the other VGG-16 model. The saliency maps and Grad-CAM results are consistent with this difference in test accuracy: for the model with random pre-training, the saliency map highlights the whole image and the Grad-CAM image highlights no part of the CT image, showing that the model cannot correctly locate the key features. In contrast, the model pre-trained on ImageNet correctly highlights the principal features in both maps. This study shows that ImageNet pre-training is effective for transfer learning on medical images. Future research should focus on further enhancing the application of transfer learning in the medical field.
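A vanilla saliency map assigns each pixel the magnitude of the gradient of the class score with respect to that pixel. Deep learning frameworks obtain this gradient by backpropagation; the dependency-free sketch below instead approximates it with central finite differences, which is slow but shows exactly what the map measures. The toy linear `score_fn` is a hypothetical stand-in for a trained network, chosen because its saliency is simply the absolute weight of each pixel. Grad-CAM, which works on intermediate feature maps, is not covered here.

```python
def saliency_map(score_fn, image, eps=1e-4):
    """Vanilla saliency |d score / d pixel|, approximated with central
    finite differences instead of backpropagation."""
    img = [row[:] for row in image]  # work on a copy; the caller's image is untouched
    h, w = len(img), len(img[0])
    sal = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            orig = img[i][j]
            img[i][j] = orig + eps
            up = score_fn(img)
            img[i][j] = orig - eps
            down = score_fn(img)
            img[i][j] = orig  # restore the pixel before moving on
            sal[i][j] = abs(up - down) / (2 * eps)
    return sal

# Hypothetical linear "model": score = sum of weight * pixel.
W = [[1.0, -2.0], [0.0, 3.0]]

def linear_score(img):
    return sum(w * p for wr, pr in zip(W, img) for w, p in zip(wr, pr))

sal = saliency_map(linear_score, [[0.5, 0.2], [0.9, 0.1]])
```

A map that is large everywhere, as reported for the randomly pre-trained model, means the score reacts to all pixels roughly equally rather than to localized anatomical features.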

Facial expression recognition is a rapidly expanding field with the potential to transform a wide range of applications, including lie detection, social robotics, and driver fatigue detection. However, traditional machine learning methods have struggled with facial expression recognition because of limitations such as manual feature selection and limited representational capability. In addition, these methods require large amounts of annotated data, which can be time-consuming and expensive to obtain. To overcome these difficulties, this paper proposes a novel method that builds recognition models using a multi-layer perceptron (MLP) and ResNet. This hybrid model improves on conventional CNN models, achieving an accuracy of 85.71% on the FER_2013 dataset. Transfer learning is also used to improve the model's precision and avoid overfitting. The FER_2013 dataset is used to train and test the model. The experimental results show that the proposed model can recognize facial expressions while minimizing the overfitting typically associated with deep learning. Future work will incorporate a self-attention mechanism to improve model resolution, and the team also hopes to improve the model's generalization by applying it to color images.
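The abstract does not say how the MLP and ResNet components are combined. As a hedged illustration of the residual idea that ResNet is built on, the sketch below writes a residual block in MLP form: the block learns a correction F(x) that is added back to its input through an identity shortcut, y = ReLU(x + F(x)). The layer sizes and weights are hypothetical toy values.

```python
def relu(v):
    return [max(0.0, a) for a in v]

def linear(v, weights, bias):
    """Fully connected layer: weights is out_dim x in_dim."""
    return [sum(w * a for w, a in zip(row, v)) + b for row, b in zip(weights, bias)]

def residual_block(x, w1, b1, w2, b2):
    """y = ReLU(x + F(x)) with F = Linear -> ReLU -> Linear.

    F must preserve the input size so the identity shortcut can be added.
    """
    f = linear(relu(linear(x, w1, b1)), w2, b2)
    return relu([a + b for a, b in zip(x, f)])

# Toy usage with identity weights and zero biases.
I = [[1.0, 0.0], [0.0, 1.0]]
zeros = [0.0, 0.0]
y = residual_block([1.0, -2.0], I, zeros, I, zeros)
```

If F learns nothing useful (all-zero weights), the block reduces to ReLU(x), so stacking such blocks does not degrade the signal; this is the property that lets residual networks be made deep without hurting training.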
