Volume 56

Published in December 2024

Volume title: Proceedings of the 2nd International Conference on Applied Physics and Mathematical Modeling

This paper optimizes the traditional genetic algorithm with an improved version that adds a Gaussian perturbation based on the standard deviation of the population fitness and adds a mutation-probability operation. The algorithm iterates until the optimal scheduling strategy is found. We combine it with a mathematical model and adopt a variety of variation operators to improve its efficiency. The model takes into account customer flow, store area, employee work preferences and other related factors to maximize its adaptability. We validate the approach with simulation experiments on real store employee data, and a comparison with other algorithms shows that the proposed scheduling optimization ideas and algorithms are practical and feasible.
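The abstract does not give the exact update rules, so the following is only a minimal sketch of the general idea under stated assumptions: a real-valued genetic algorithm whose mutation probability and Gaussian perturbation scale both depend on the standard deviation of the population fitness. The toy objective `fitness`, the rule that a smaller fitness spread triggers stronger mutation, the tournament selection, the blend crossover and the fixed generation count are all hypothetical choices, not the authors' scheduling model.

```python
import random
import statistics


def fitness(x):
    # Hypothetical toy objective standing in for a scheduling score;
    # it peaks at 0 when every gene equals 3.0.
    return -sum((xi - 3.0) ** 2 for xi in x)


def tournament(pop, scores, rng, k=3):
    # Return the fittest of k randomly chosen individuals
    idx = rng.sample(range(len(pop)), k)
    return pop[max(idx, key=lambda i: scores[i])]


def improved_ga(pop_size=30, dims=4, generations=100, base_pm=0.1, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(-10.0, 10.0) for _ in range(dims)] for _ in range(pop_size)]
    for _ in range(generations):
        scores = [fitness(ind) for ind in pop]
        sigma = statistics.pstdev(scores)  # spread of the population fitness
        # Assumed rule: a small fitness spread signals lost diversity, so both the
        # mutation probability and the Gaussian perturbation scale grow as sigma shrinks.
        pm = min(0.5, base_pm * (1.0 + 1.0 / (1.0 + sigma)))
        scale = 0.5 / (1.0 + sigma)

        elite = pop[max(range(pop_size), key=lambda i: scores[i])]
        new_pop = [elite[:]]  # elitism: carry the best individual over unchanged
        while len(new_pop) < pop_size:
            p1 = tournament(pop, scores, rng)
            p2 = tournament(pop, scores, rng)
            a = rng.random()
            # Blend (arithmetic) crossover of the two parents
            child = [a * g1 + (1.0 - a) * g2 for g1, g2 in zip(p1, p2)]
            # Gaussian perturbation applied gene by gene with probability pm
            child = [g + rng.gauss(0.0, scale) if rng.random() < pm else g for g in child]
            new_pop.append(child)
        pop = new_pop
    best = max(pop, key=fitness)
    return best, fitness(best)


best, best_fit = improved_ga()
print([round(g, 2) for g in best], round(best_fit, 4))
```

A fixed generation budget is used here for simplicity; a stopping rule such as "no improvement for N generations" would be closer to iterating until the best schedule stops changing.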

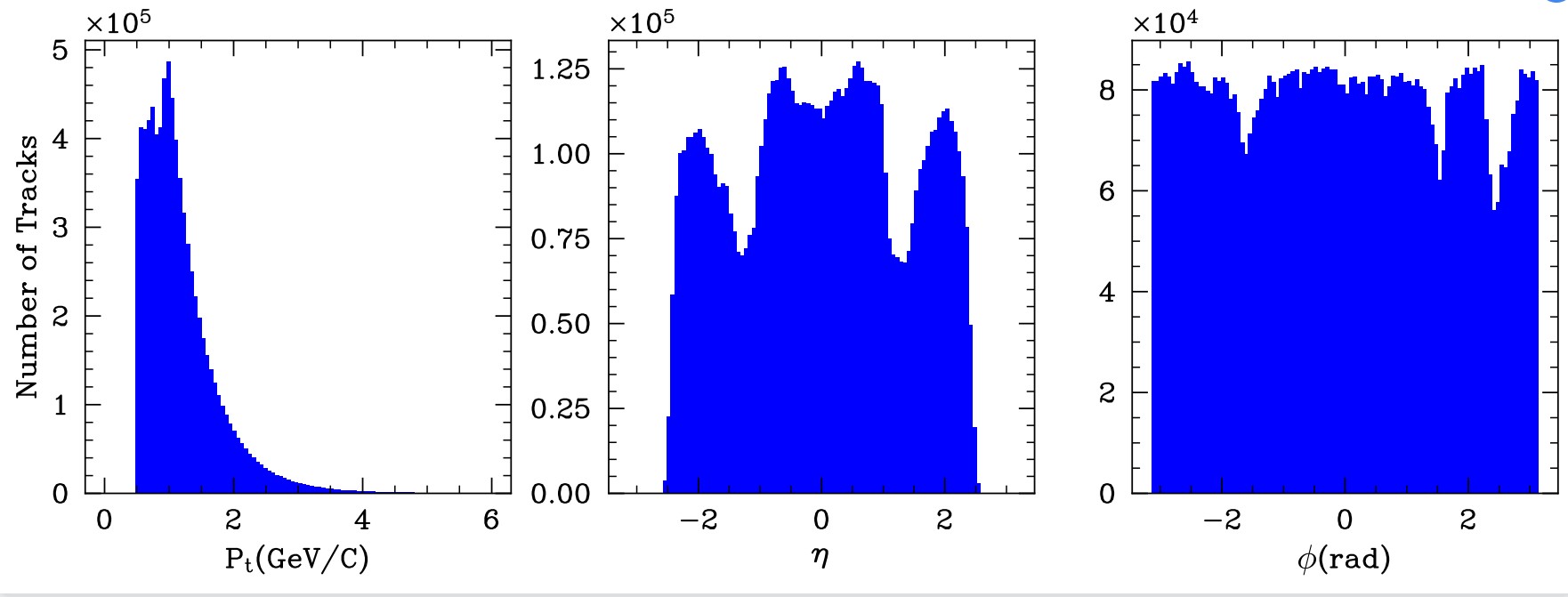

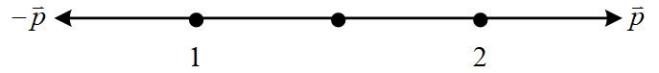

By recreating the quark-gluon plasma (QGP), the state of matter thought to have filled the universe shortly after the Big Bang, in PbPb collisions, scientists can study the state of the early universe. In this paper, PbPb collision data collected by the ALICE experiment at the Large Hadron Collider are analyzed to investigate the properties of the QGP. The analysis focuses on the Fourier decomposition of azimuthal distributions and on angular correlation analysis, using data at a center-of-mass energy of 5.02 TeV per nucleon pair. The distributions of the transverse momentum p_T, the pseudorapidity η and the azimuthal angle ϕ were plotted and analyzed. The shapes of these distributions did not perfectly match the predictions, owing to statistical fluctuations in the data and detector limitations. However, a positive correlation between the Fourier coefficients and the transverse momentum p_T was found. The results confirm previous findings on the collective motion and anisotropic flow of the QGP, provide insight into its behavior, and support theoretical predictions about its properties.
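As an illustration of what a Fourier coefficient of the azimuthal distribution measures, the sketch below assumes a single toy event drawn from dN/dϕ ∝ 1 + 2 v₂ cos(2(ϕ − Ψ)) and recovers v₂ with the two-particle cumulant computed from the flow vector Q_n = Σ e^{inϕ}, using ⟨cos n(ϕ_i − ϕ_j)⟩ = (|Q_n|² − M)/(M(M − 1)). The values of `v2`, `psi` and the multiplicity are hypothetical, and real analyses average over many events and correct for non-flow and detector effects; the paper's exact method is not specified beyond "Fourier decomposition".

```python
import math
import random


def sample_event(m, v2, psi, rng):
    # Accept-reject sampling from dN/dphi proportional to 1 + 2*v2*cos(2*(phi - psi))
    fmax = 1.0 + 2.0 * v2
    phis = []
    while len(phis) < m:
        phi = rng.uniform(0.0, 2.0 * math.pi)
        if rng.uniform(0.0, fmax) < 1.0 + 2.0 * v2 * math.cos(2.0 * (phi - psi)):
            phis.append(phi)
    return phis


def c_n2(phis, n):
    # Two-particle cumulant <cos n(phi_i - phi_j)> over all pairs i != j,
    # obtained from the flow vector Q_n without an explicit double loop
    m = len(phis)
    qx = sum(math.cos(n * p) for p in phis)
    qy = sum(math.sin(n * p) for p in phis)
    return (qx * qx + qy * qy - m) / (m * (m - 1))


rng = random.Random(1)
phis = sample_event(50_000, v2=0.1, psi=0.3, rng=rng)
for n in (2, 3):
    c = c_n2(phis, n)
    # v_n{2} = sqrt(c_n{2}) is only defined when the cumulant is positive
    print(n, c, math.sqrt(c) if c > 0 else float("nan"))
```

With the injected v₂ = 0.1, the n = 2 estimate should come out close to 0.1, while c₃{2} stays consistent with zero because no triangular flow was simulated.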

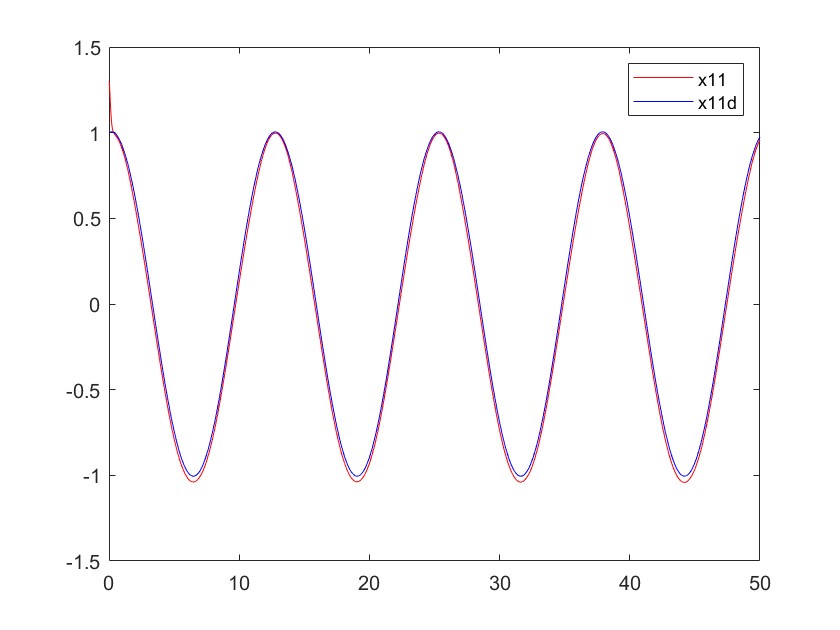

This paper introduces a reinforcement-learning-based method for controlling the trajectory of a manipulator robot with uncertainty. The controller is designed to work even when the system inputs are constrained. Reinforcement learning and neural networks are combined with standard robust control techniques to enhance the fixed-time convergence of the system state. The proposed algorithm uses radial basis function (RBF) neural networks and nonsingular fast terminal sliding mode control to ensure that the tracking error converges within a predetermined time. The task considered is tracking the desired trajectory of a robotic arm in the presence of uncertain and unknown disturbances. Experimental results show that the proposed approach substantially improves both the stability and the accuracy of trajectory tracking, making it more feasible for real-world robotic systems.
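The two building blocks named above can be sketched in isolation. The sliding variable below uses one commonly cited nonsingular fast terminal form, s = e + k₁ sig(e)^γ₁ + k₂ sig(ė)^γ₂ with sig(x)^p = |x|^p sign(x), 1 < γ₂ < 2 and γ₁ > γ₂; the paper's exact surface, gains and fixed-time modifications are not given, so `k1`, `k2`, `g1`, `g2`, the RBF centers and the width are hypothetical. The RBF network output Ŵᵀh(x) is the kind of term typically used to approximate unknown manipulator dynamics.

```python
import math


def sig(x, p):
    # Signed power |x|^p * sign(x), used in terminal sliding surfaces
    return math.copysign(abs(x) ** p, x)


def nftsm_surface(e, de, k1=1.0, k2=0.5, g1=2.0, g2=1.5):
    # Assumed nonsingular fast terminal sliding surface:
    # s = e + k1*sig(e)^g1 + k2*sig(de)^g2, with 1 < g2 < 2 and g1 > g2
    return e + k1 * sig(e, g1) + k2 * sig(de, g2)


def rbf_features(x, centers, width):
    # Gaussian radial basis functions h_j(x) = exp(-||x - c_j||^2 / (2 * width^2))
    return [
        math.exp(-sum((xi - ci) ** 2 for xi, ci in zip(x, c)) / (2.0 * width ** 2))
        for c in centers
    ]


def rbf_estimate(weights, x, centers, width):
    # Network output f_hat(x) = W^T h(x), e.g. an estimate of unknown joint dynamics
    return sum(w * h for w, h in zip(weights, rbf_features(x, centers, width)))


# Hypothetical example: tracking error 0.1 rad, error rate -0.2 rad/s
print(nftsm_surface(0.1, -0.2))
centers = [[-1.0, -1.0], [0.0, 0.0], [1.0, 1.0]]  # hypothetical grid in (e, de) space
print(rbf_estimate([0.5, -0.3, 0.8], [0.1, -0.2], centers, width=1.0))
```

In a full controller, s would drive the reaching law and an adaptive rule would update the weights online; those parts depend on details the abstract does not state.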

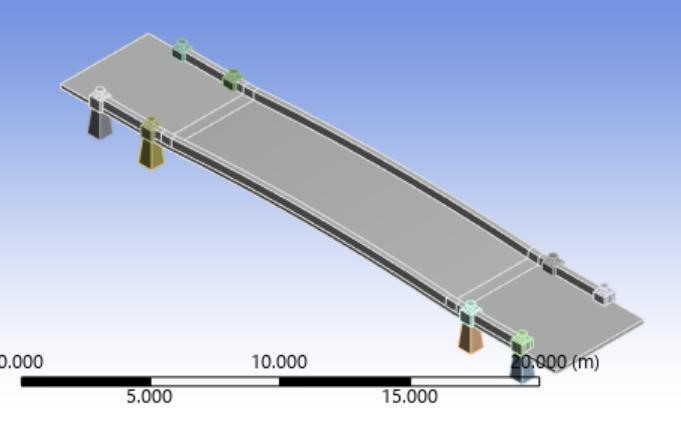

Earthquakes can cause resonance: when the frequencies of seismic waves align with a bridge's natural frequency, vibrations are amplified and can lead to structural failure. To predict the vulnerabilities of a bridge subjected to earthquakes more accurately, this research presents a vibration analysis of the Bow Bridge. Two models, simulating the Bow Bridge with and without a soil foundation, are built to investigate the bridge's natural frequencies and mode shapes under soil-free and soil-supported conditions. The results show that the average natural frequency with soil is much lower than without soil, indicating that the soil foundation lowers the bridge's vibration frequencies. Given the consistency of the results, this research demonstrates the feasibility of using such models for prediction in practical construction.
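Why a soil foundation lowers natural frequencies can be seen from a single-degree-of-freedom idealization: the soil acts as an additional spring in series with the structure, so the effective stiffness drops below the fixed-base value and f = √(k/m)/(2π) falls with it. The sketch below uses hypothetical mass and stiffness values; it is a textbook simplification, not the paper's finite element model of the Bow Bridge.

```python
import math


def natural_frequency_hz(k, m):
    # Undamped single-degree-of-freedom oscillator: f = sqrt(k/m) / (2*pi)
    return math.sqrt(k / m) / (2.0 * math.pi)


def series_stiffness(k_structure, k_soil):
    # Structure and soil springs in series: 1/k_eff = 1/k_structure + 1/k_soil
    return k_structure * k_soil / (k_structure + k_soil)


m = 2.0e5        # hypothetical deck mass, kg
k_pier = 8.0e7   # hypothetical lateral stiffness of the structure, N/m
k_soil = 4.0e7   # hypothetical foundation (soil) stiffness, N/m

f_fixed = natural_frequency_hz(k_pier, m)
f_soil = natural_frequency_hz(series_stiffness(k_pier, k_soil), m)
print(f"fixed base: {f_fixed:.2f} Hz, on soil: {f_soil:.2f} Hz")  # fixed base: 3.18 Hz, on soil: 1.84 Hz
```

Because k_eff is always smaller than either spring alone, any finite soil stiffness lowers the frequency; a stiffer soil moves the result back toward the fixed-base case.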

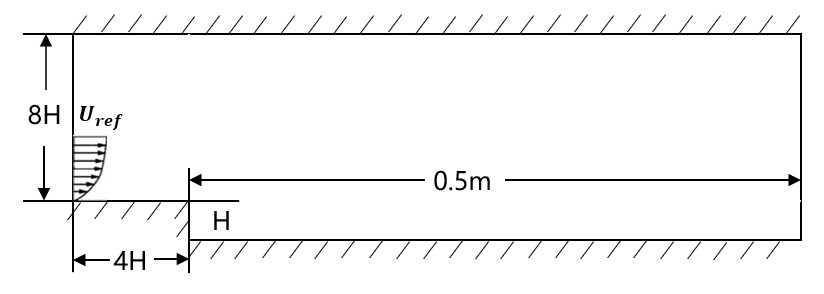

Computational fluid dynamics (CFD) is attracting growing attention for its ability to simulate a wide range of flow conditions. The Reynolds-Averaged Navier-Stokes (RANS) approach, owing to its low computational cost, is one of the most popular methods in engineering. However, RANS models have limited ability to simulate separated flow, which remains a major challenge. One reason is that the model parameters introduce uncertainty into the simulation. This research investigates the effect of three parameters of the Generalized k-ω (GEKO) two-equation turbulence model on calculations of backward-facing step flow. C_sep is found to have the greatest influence on the pressure coefficient and the friction coefficient, while C_nw has the greatest influence on the reattachment calculation through the eddy viscosity. The results for these three quantities are correlated with one another. In addition, an improved parameter set is obtained that increases the calculation accuracy for all three quantities.

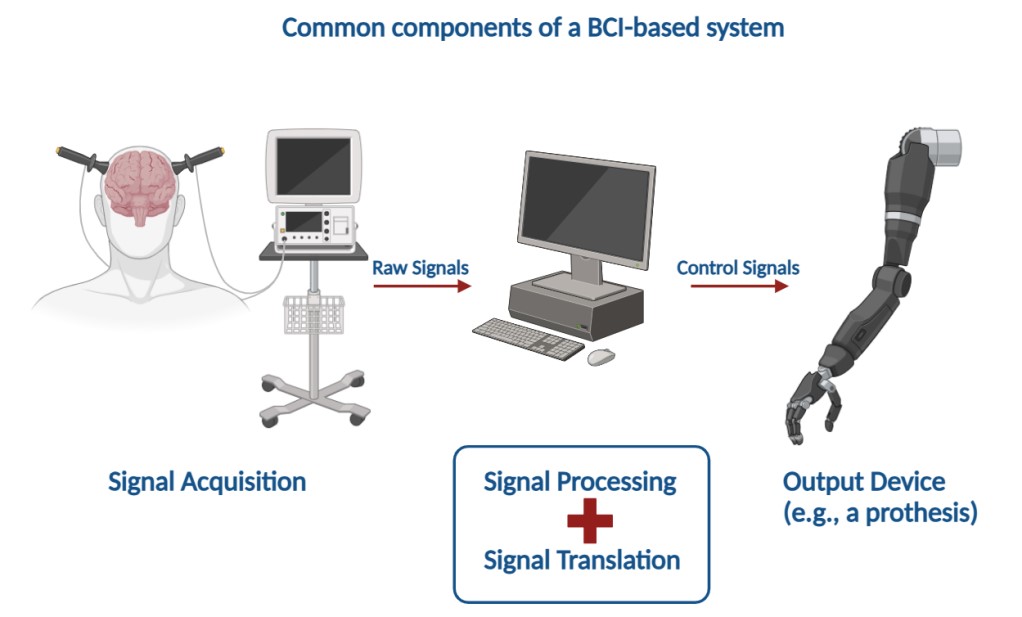

Neurotechnology has advanced rapidly in recent years. Brain-computer interfaces (BCIs), a well-known neurotechnology, have received significant attention in recent decades. The most mature BCI applications are in motor control, allowing users to control prostheses via cortical implants. However, BCI applications can be explored well beyond this, and BCI is gaining importance in oncological settings. This paper therefore explores oncology-based BCI systems in detail. It first introduces the basic components of a BCI and summarises how BCIs could be applied beyond motor control to improve the clinical outcomes of patients with cancer, for example by assisting diagnosis, reducing the side effects of cancer therapies and enabling neurorehabilitation. It also compares the advantages of BCIs with commonly used approaches and medications, and discusses physical and psychological, technical and ethical issues related to BCI-based systems, which future studies should carefully monitor and minimise. Finally, the article summarises its overall content and suggests future directions for oncology-based BCI development.

Cybersecurity is essential to the modern world, and cryptography is among the most effective ways to protect data online. There are two main types of encryption, symmetric and asymmetric, both of which use important mathematical concepts to encode vital information. Nevertheless, the field of encryption remains largely confined to the real numbers. This paper analyzes an encryption method presented by George Stergiopoulos et al. that uses complex numbers and investigates its possible applications. The analysis covers the necessary applications of complex variables and a comparative scoring system that gauges the promise of this new methodology. An investigation of its time complexity, security and encryption speed then indicates its practical uses. The results indicate that the new algorithm is feasible and encourage further investigation into complex-variable operations, which can encrypt over a larger range than any operation on the real numbers. Overall, the comparison of symmetric and asymmetric encryption systems with the proposed complex-number encryption shows promise and motivates further research, as it opens up an infinite array of novel complex operations that were previously inaccessible under the restriction to real numbers.

In this paper, we compare and analyse the effectiveness of the deep learning models AlexNet, VGG, GoogLeNet and MobileNet for pneumonia image classification. During training, GoogLeNet converged fastest, reaching convergence at the 3rd epoch; the other three models then gradually stabilised. The final losses were 0.426 for AlexNet, 0.566 for VGG, 0.936 for GoogLeNet and 0.626 for MobileNet. Using the weights from each model's best-performing training epoch, the classification accuracies were 88.9% for AlexNet, 92.6% for VGG, 85.2% for GoogLeNet and 96.3% for MobileNet. MobileNet showed the best prediction performance on the test set. These results provide a useful reference for applying deep learning models to medical imaging.

When cosmic-ray muons pass through different materials, they interact with those materials, which changes the muon event rate, i.e. the number of muons detected per unit time. This study investigates muon event rates under two shielding conditions. In the experiment, six CosmicWatch detectors are arranged in three vertically stacked pairs to identify muons passing through the shielding materials. The muon event rate under each condition is determined by coincidence analysis of the data collected by each detector pair, which separates muon events from background radiation. To calibrate the event rates, environmental factors and differences between detectors are taken into account: specifically, atmospheric pressure variations and timing differences caused by the detectors' built-in quartz oscillators are corrected. The analysis shows that the detected muon event rate is higher under a thin absorber (1 cm of iron) and lower under a thick one (5 cm of lead). These findings agree with previous experiments and provide insight into how particles interact with matter.
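The coincidence and calibration steps can be sketched as follows, assuming each detector produces a sorted list of hit timestamps in seconds. The coincidence window, the linear clock-drift model for the quartz oscillator and the exponential barometric correction R₀ = R·exp(β(P − P_ref)) with coefficient β are illustrative assumptions; the abstract does not state the exact corrections or constants the authors used.

```python
import math


def count_coincidences(t_a, t_b, window):
    # Two-pointer match of sorted timestamps (seconds); each hit is used at most once
    i = j = n = 0
    while i < len(t_a) and j < len(t_b):
        dt = t_a[i] - t_b[j]
        if abs(dt) <= window:
            n += 1
            i += 1
            j += 1
        elif dt < 0:
            i += 1  # hit in A is too early to match anything left in B
        else:
            j += 1  # hit in B is too early to match anything left in A
    return n


def correct_clock(times, drift_ppm):
    # Hypothetical linear correction for a quartz clock running fast by drift_ppm
    return [t / (1.0 + drift_ppm * 1e-6) for t in times]


def pressure_corrected_rate(rate, pressure_hpa, ref_hpa, beta_per_hpa):
    # Barometric correction R0 = R * exp(beta * (P - P_ref)); beta is an assumed input
    return rate * math.exp(beta_per_hpa * (pressure_hpa - ref_hpa))


# Hypothetical timestamps from the upper (a) and lower (b) detector of one pair
t_a = [1.0, 2.0, 3.0, 5.0]
t_b = correct_clock([1.00001, 2.5, 3.00002, 7.0], drift_ppm=0.0)
n = count_coincidences(t_a, t_b, window=1e-4)
rate = n / 10.0  # coincidences per second over a hypothetical 10 s run
print(n, pressure_corrected_rate(rate, 1020.0, 1013.25, beta_per_hpa=0.002))
```

Matching hits in time order keeps the cost linear in the number of hits, and requiring both detectors in a stacked pair to fire within the window is what suppresses uncorrelated background counts.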

This paper examines the significance and impact of quantum entanglement across physics, information science and philosophy. Using a literature review approach, the paper first introduces the concept and historical background of quantum entanglement, emphasizing how it challenges the limits of classical physics and its potential applications in quantum communication and quantum computing. It then explains the theoretical foundations of the Einstein-Podolsky-Rosen (EPR) thought experiment and Bell's inequality, along with the related experimental tests and their key results. In particular, it explains how quantum entanglement reveals nonlocality in quantum mechanics and the characteristics of nonlocal information transfer. The discussion then turns to the challenge that entanglement poses to physical ontology, exploring its philosophical implications and the new perspectives it offers in information theory. Finally, the study summarizes the profound impact of quantum entanglement, one of the most challenging and inspiring research areas in contemporary physics, on scientific theory and philosophical thought, and anticipates future research directions and potential applications.
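Bell's inequality can be made concrete with its CHSH form, S = E(a,b) − E(a,b′) + E(a′,b) + E(a′,b′), where any local hidden-variable theory satisfies |S| ≤ 2. The sketch below compares the singlet-state prediction E(a,b) = −cos(a − b) for spin measurements along in-plane angles with a simple local model in which both particles share a random angle λ. The local model and the chosen angles are illustrative choices, not taken from the paper.

```python
import math


def e_quantum(a, b):
    # Singlet-state correlation for spin measurements along in-plane angles a and b
    return -math.cos(a - b)


def e_local(a, b):
    # Simple local hidden-variable model with a shared angle lambda, uniform on [0, 2*pi):
    # A = sign(cos(a - lambda)), B = -sign(cos(b - lambda)), giving E = -(1 - 2*d/pi)
    d = abs((a - b + math.pi) % (2.0 * math.pi) - math.pi)  # angle difference in [0, pi]
    return -(1.0 - 2.0 * d / math.pi)


def chsh(e, a, a2, b, b2):
    # CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')
    return e(a, b) - e(a, b2) + e(a2, b) + e(a2, b2)


angles = (0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)  # a, a', b, b'
print(abs(chsh(e_quantum, *angles)))  # approx. 2.828 (2*sqrt(2)), violating |S| <= 2
print(abs(chsh(e_local, *angles)))    # approx. 2.0, exactly at the local bound
```

The gap between 2 and 2√2 at these angles is what the experimental tests of Bell's inequality measure.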
